mirror of https://github.com/alibaba/EasyCV.git

Feature/paddleocr inference (#148)

* add ocr model and convert weights from paddleocrv3

parent bb68fcbf5c

commit 397ecf2658

@ -0,0 +1,85 @@

# OCR algorithm

## PP-OCRv3

We convert [PaddleOCRv3](https://github.com/PaddlePaddle/PaddleOCR) models to PyTorch format and provide an end-to-end interface that recognizes text in images by simply loading the exported models.

### detection

We test on the ICDAR 2015 dataset.

|Algorithm|backbone|configs|precision|recall|Hmean|Download|
|:---:|:---:|:---:|:---:|:---:|:---:|:---:|
|DB|MobileNetv3|[det_model_en.py](configs/ocr/detection/det_model_en.py)|0.7803|0.7250|0.7516|[log](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/fintune_icdar2015_mobilev3/20220902_140307.log.json)-[model](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/fintune_icdar2015_mobilev3/epoch_70.pth)|
|DB|R50|[det_model_en_r50.py](configs/ocr/detection/det_model_en_r50.py)|0.8622|0.8218|0.8415|[log](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/fintune_icdar2015_r50/20220906_110252.log.json)-[model](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/fintune_icdar2015_r50/epoch_1150.pth)|
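
The Hmean column is consistent with the harmonic mean of precision and recall (the F1 score). A minimal sketch, assuming that definition; `hmean` is an illustrative helper and not an EasyCV function:

```python
def hmean(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (F1 score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall values taken from the detection table above.
print(round(hmean(0.7803, 0.7250), 4))  # MobileNetv3 row -> 0.7516
print(round(hmean(0.8622, 0.8218), 4))  # R50 row -> 0.8415
```

Both results match the Hmean values reported in the table.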

### recognition

We test on the [DTRB](https://arxiv.org/abs/1904.01906) dataset.

|Algorithm|backbone|configs|acc|Download|
|:---:|:---:|:---:|:---:|:---:|
|SVTR|MobileNetv1|[rec_model_en.py](configs/ocr/recognition/rec_model_en.py)|0.7536|[log](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/rec/fintune_dtrb/20220914_125616.log.json)-[model](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/rec/fintune_dtrb/epoch_60.pth)|

### predict

We provide exported models, converted from PaddleOCRv3, that bundle the weights and the processing config for easy prediction.

#### detection model

|language|Download|
|---|---|
|chinese|[chinese_det.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/det/chinese_det.pth)|
|english|[english_det.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/det/english_det.pth)|
|multilingual|[multilingual_det.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/det/multilingual_det.pth)|

#### recognition model

|language|Download|
|---|---|
|chinese|[chinese_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/chinese_rec.pth)|
|english|[english_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/english_rec.pth)|
|korean|[korean_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/korean_rec.pth)|
|japan|[japan_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/japan_rec.pth)|
|chinese_cht|[chinese_cht_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/chinese_cht_rec.pth)|
|Telugu|[te_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/te_rec.pth)|
|Kannada|[ka_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/ka_rec.pth)|
|Tamil|[ta_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/ta_rec.pth)|
|latin|[latin_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/latin_rec.pth)|
|cyrillic|[cyrillic_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/cyrillic_rec.pth)|
|devanagari|[devanagari_rec.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/rec/devanagari_rec.pth)|

#### direction model

|language|Download|
|---|---|
||[direction.pth](http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/export_model/cls/direction.pth)|
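
The download links in the three tables above share one layout: a common base URL, a task sub-directory (`det`, `rec` or `cls`) and a file stem. A small sketch that builds such a link, assuming the pattern holds for every exported model; `exported_model_url` is a hypothetical helper and not part of EasyCV:

```python
BASE_URL = ('http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/'
            'EasyCV/modelzoo/ocr/export_model')


def exported_model_url(task: str, name: str) -> str:
    """Build the download URL of an exported model.

    task: 'det', 'rec' or 'cls' (the sub-directory seen in the tables).
    name: file stem, e.g. 'english_det', 'chinese_rec' or 'direction'.
    """
    if task not in ('det', 'rec', 'cls'):
        raise ValueError(f'unknown task: {task}')
    return f'{BASE_URL}/{task}/{name}.pth'


print(exported_model_url('det', 'english_det'))
print(exported_model_url('cls', 'direction'))
```

The resulting file can then be passed as the model path to the predictors shown in the usage section.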

#### usage

##### detection

```
import cv2
from easycv.predictors.ocr import OCRDetPredictor

# model_path: an exported detection model from the table above
# img_path / out_img_path: the input image and where to save the visualization
predictor = OCRDetPredictor(model_path)
out = predictor([img_path])  # alternatively: out = predictor([img])
img = cv2.imread(img_path)
out_img = predictor.show_result(out[0], img)
cv2.imwrite(out_img_path, out_img)
```

##### recognition

```
import cv2
from easycv.predictors.ocr import OCRRecPredictor

# model_path: an exported recognition model from the table above
predictor = OCRRecPredictor(model_path)
out = predictor([img_path])
print(out)
```
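
The recognition configs in this commit post-process the network output with `CTCLabelDecode`. The general idea of greedy CTC decoding is to collapse repeated indices and then drop the blank symbol. A minimal sketch of that idea, assuming index 0 is the blank and using a toy character list; it is not EasyCV's implementation:

```python
def ctc_greedy_decode(indices, charset, blank=0):
    """Collapse consecutive repeats, drop blanks, map indices to characters."""
    chars = []
    prev = None
    for idx in indices:
        # Emit a character only when the index changes and is not the blank.
        if idx != prev and idx != blank:
            chars.append(charset[idx])
        prev = idx
    return ''.join(chars)


# Toy alphabet: position 0 is reserved for the CTC blank.
charset = ['<blank>', 'a', 'b', 'c']
print(ctc_greedy_decode([1, 1, 0, 1, 2, 2, 0, 3], charset))  # aabc
```

The blank between the two runs of `1` is what allows the doubled letter to survive the collapse step.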

<br/>

##### end2end

```
import cv2
from easycv.predictors.ocr import OCRPredictor

# Download the demo font and image first, e.g. from a shell:
#   wget http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/simfang.ttf
#   wget http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/ocr_det.jpg
img_path = 'ocr_det.jpg'

# path_to_detmodel / path_to_recmodel / path_to_clsmodel: exported models from the tables above
predictor = OCRPredictor(
    det_model_path=path_to_detmodel,
    rec_model_path=path_to_recmodel,
    cls_model_path=path_to_clsmodel,
    use_angle_cls=True)
filter_boxes, filter_rec_res = predictor(img_path)
img = cv2.imread(img_path)
out_img = predictor.show(
    filter_boxes[0],
    filter_rec_res[0],
    img,
    font_path='simfang.ttf')
cv2.imwrite('out_img.jpg', out_img)
```

|

||||

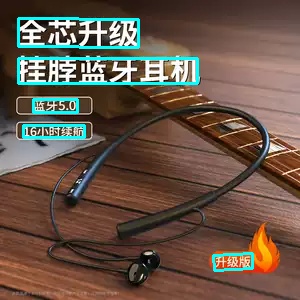

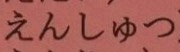

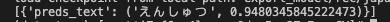

There are some ocr results.<br/>

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,148 @@

_base_ = ['configs/base.py']

model = dict(
    type='DBNet',
    backbone=dict(
        type='OCRDetMobileNetV3',
        scale=0.5,
        model_name='large',
        disable_se=True),
    neck=dict(
        type='RSEFPN',
        in_channels=[16, 24, 56, 480],
        out_channels=96,
        shortcut=True),
    head=dict(type='DBHead', in_channels=96, k=50),
    postprocess=dict(
        type='DBPostProcess',
        thresh=0.3,
        box_thresh=0.6,
        max_candidates=1000,
        unclip_ratio=1.5,
        use_dilation=False,
        score_mode='fast'),
    loss=dict(
        type='DBLoss',
        balance_loss=True,
        main_loss_type='DiceLoss',
        alpha=5,
        beta=10,
        ohem_ratio=3),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/ch_PP-OCRv3_det/student.pth'
)

img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=False)

train_pipeline = [
    dict(
        type='IaaAugment',
        augmenter_args=[{
            'type': 'Fliplr',
            'args': {
                'p': 0.5
            }
        }, {
            'type': 'Affine',
            'args': {
                'rotate': [-10, 10]
            }
        }, {
            'type': 'Resize',
            'args': {
                'size': [0.5, 3]
            }
        }]),
    dict(
        type='EastRandomCropData',
        size=[640, 640],
        max_tries=50,
        keep_ratio=True),
    dict(
        type='MakeBorderMap', shrink_ratio=0.4, thresh_min=0.3,
        thresh_max=0.7),
    dict(type='MakeShrinkMap', shrink_ratio=0.4, min_text_size=8),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(
        type='ImageToTensor',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
    dict(
        type='Collect',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
]

test_pipeline = [
    dict(type='OCRDetResize', limit_side_len=640, limit_type='min'),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

val_pipeline = [
    dict(type='OCRDetResize', limit_side_len=640, limit_type='min'),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

train_dataset = dict(
    type='OCRDetDataset',
    data_source=dict(
        type='OCRPaiDetSource',
        label_file=[
            'ocr/det/pai/label_file/train/20191218131226_npx_e2e_train.csv',
            'ocr/det/pai/label_file/train/20191218131302_social_e2e_train.csv',
            'ocr/det/pai/label_file/train/20191218122330_book_e2e_train.csv',
        ],
        data_dir='ocr/det/pai/img/train'),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRDetDataset',
    imgs_per_gpu=1,
    data_source=dict(
        type='OCRPaiDetSource',
        label_file=[
            'ocr/det/pai/label_file/test/20191218131744_npx_e2e_test.csv',
            'ocr/det/pai/label_file/test/20191218131817_social_e2e_test.csv'
        ],
        data_dir='ocr/det/pai/img/test'),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=16, workers_per_gpu=2, train=train_dataset, val=val_dataset)

total_epochs = 100
optimizer = dict(type='Adam', lr=0.001, betas=(0.9, 0.999))

# learning policy
lr_config = dict(policy='fixed')

checkpoint_config = dict(interval=1)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=True,
        evaluators=[dict(type='OCRDetEvaluator')],
    )
]
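
The `shrink_ratio` and `unclip_ratio` values in the config above correspond to the polygon offsets described in the DB paper: text polygons are shrunk by D = A(1 - r²)/L when building training targets, and detected regions are expanded by D' = A'·r'/L' at inference. A minimal sketch of the two distances, assuming a simple polygon given as a vertex list; the helper names are illustrative, and the actual offsetting step (normally done with a polygon clipping library) is omitted:

```python
import math


def polygon_area(points):
    """Absolute polygon area via the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_perimeter(points):
    """Sum of the edge lengths of the closed polygon."""
    return sum(
        math.dist(p, q) for p, q in zip(points, points[1:] + points[:1]))


def shrink_distance(points, shrink_ratio=0.4):
    """Inward offset for the shrink map: A * (1 - r^2) / L."""
    area, length = polygon_area(points), polygon_perimeter(points)
    return area * (1 - shrink_ratio**2) / length


def unclip_distance(points, unclip_ratio=1.5):
    """Outward offset for a detected region: A * r / L."""
    area, length = polygon_area(points), polygon_perimeter(points)
    return area * unclip_ratio / length


# A 100 x 20 text box: area 2000, perimeter 240.
box = [(0, 0), (100, 0), (100, 20), (0, 20)]
print(round(shrink_distance(box), 3))
print(round(unclip_distance(box), 3))
```

Because both distances scale with area over perimeter, thin text lines get small offsets while large blocks get larger ones.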


@ -0,0 +1,145 @@

_base_ = ['configs/base.py']

model = dict(
    type='DBNet',
    backbone=dict(type='OCRDetResNet', in_channels=3, layers=50),
    neck=dict(
        type='LKPAN',
        in_channels=[256, 512, 1024, 2048],
        out_channels=256,
        shortcut=True),
    head=dict(type='DBHead', in_channels=256, kernel_list=[7, 2, 2], k=50),
    postprocess=dict(
        type='DBPostProcess',
        thresh=0.3,
        box_thresh=0.6,
        max_candidates=1000,
        unclip_ratio=1.5,
        use_dilation=False,
        score_mode='fast'),
    loss=dict(
        type='DBLoss',
        balance_loss=True,
        main_loss_type='DiceLoss',
        alpha=5,
        beta=10,
        ohem_ratio=3),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/ch_PP-OCRv3_det/teacher.pth'
)

img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=False)

train_pipeline = [
    dict(
        type='IaaAugment',
        augmenter_args=[{
            'type': 'Fliplr',
            'args': {
                'p': 0.5
            }
        }, {
            'type': 'Affine',
            'args': {
                'rotate': [-10, 10]
            }
        }, {
            'type': 'Resize',
            'args': {
                'size': [0.5, 3]
            }
        }]),
    dict(
        type='EastRandomCropData',
        size=[640, 640],
        max_tries=50,
        keep_ratio=True),
    dict(
        type='MakeBorderMap', shrink_ratio=0.4, thresh_min=0.3,
        thresh_max=0.7),
    dict(type='MakeShrinkMap', shrink_ratio=0.4, min_text_size=8),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(
        type='ImageToTensor',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
    dict(
        type='Collect',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
]

test_pipeline = [
    dict(type='OCRDetResize', limit_side_len=960),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

val_pipeline = [
    dict(type='OCRDetResize', limit_side_len=960),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

train_dataset = dict(
    type='OCRDetDataset',
    data_source=dict(
        type='OCRPaiDetSource',
        label_file=[
            'ocr/det/pai/label_file/train/20191218131226_npx_e2e_train.csv',
            'ocr/det/pai/label_file/train/20191218131302_social_e2e_train.csv',
            'ocr/det/pai/label_file/train/20191218122330_book_e2e_train.csv',
        ],
        data_dir='ocr/det/pai/img/train'),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRDetDataset',
    imgs_per_gpu=1,
    data_source=dict(
        type='OCRPaiDetSource',
        label_file=[
            'ocr/det/pai/label_file/test/20191218131744_npx_e2e_test.csv',
            'ocr/det/pai/label_file/test/20191218131817_social_e2e_test.csv'
        ],
        data_dir='ocr/det/pai/img/test'),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=8, workers_per_gpu=2, train=train_dataset, val=val_dataset)

total_epochs = 100

optimizer = dict(type='Adam', lr=0.001, betas=(0.9, 0.999))

# learning policy
lr_config = dict(policy='fixed')

checkpoint_config = dict(interval=1)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=True,
        evaluators=[dict(type='OCRDetEvaluator')],
    )
]
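
`img_norm_cfg` in the config above holds per-channel mean and standard deviation values on the 0-255 scale. Normalization of this kind is usually `(value - mean) / std` per channel. A sketch under that assumption, in pure Python; `normalize_pixel` is illustrative only, and the channel order is left exactly as given, which is what `to_rgb=False` suggests:

```python
MEAN = [123.675, 116.28, 103.53]
STD = [58.395, 57.12, 57.375]


def normalize_pixel(pixel, mean=MEAN, std=STD):
    """Apply (value - mean) / std to each channel of a single pixel."""
    return [(v - m) / s for v, m, s in zip(pixel, mean, std)]


# A pixel equal to the mean maps to zeros; one std above the mean maps to ~1.
print(normalize_pixel([123.675, 116.28, 103.53]))
print(normalize_pixel([123.675 + 58.395, 116.28 + 57.12, 103.53 + 57.375]))
```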


@ -0,0 +1,154 @@

_base_ = ['configs/base.py']

model = dict(
    type='DBNet',
    backbone=dict(
        type='OCRDetMobileNetV3',
        scale=0.5,
        model_name='large',
        disable_se=True),
    neck=dict(
        type='RSEFPN',
        in_channels=[16, 24, 56, 480],
        out_channels=96,
        shortcut=True),
    head=dict(type='DBHead', in_channels=96, k=50),
    postprocess=dict(
        type='DBPostProcess',
        thresh=0.3,
        box_thresh=0.6,
        max_candidates=1000,
        unclip_ratio=1.5,
        use_dilation=False,
        score_mode='fast'),
    loss=dict(
        type='DBLoss',
        balance_loss=True,
        main_loss_type='DiceLoss',
        alpha=5,
        beta=10,
        ohem_ratio=3),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/en_PP-OCRv3_det/student.pth'
)

img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=False)

train_pipeline = [
    dict(
        type='IaaAugment',
        augmenter_args=[{
            'type': 'Fliplr',
            'args': {
                'p': 0.5
            }
        }, {
            'type': 'Affine',
            'args': {
                'rotate': [-10, 10]
            }
        }, {
            'type': 'Resize',
            'args': {
                'size': [0.5, 3]
            }
        }]),
    dict(
        type='EastRandomCropData',
        size=[640, 640],
        max_tries=50,
        keep_ratio=True),
    dict(
        type='MakeBorderMap', shrink_ratio=0.4, thresh_min=0.3,
        thresh_max=0.7),
    dict(type='MakeShrinkMap', shrink_ratio=0.4, min_text_size=8),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(
        type='ImageToTensor',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
    dict(
        type='Collect',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
]

# test_pipeline = [
#     dict(type='MMResize', img_scale=(960, 960)),
#     dict(type='ResizeDivisor', size_divisor=32),
#     dict(type='MMNormalize', **img_norm_cfg),
#     dict(type='ImageToTensor', keys=['img']),
#     dict(
#         type='Collect',
#         keys=['img'],
#         meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
# ]
test_pipeline = [
    dict(type='OCRDetResize', limit_side_len=640, limit_type='min'),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

val_pipeline = [
    dict(type='OCRDetResize', image_shape=(736, 1280)),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

train_dataset = dict(
    type='OCRDetDataset',
    data_source=dict(
        type='OCRDetSource',
        label_file=
        'ocr/det/icdar2015/text_localization/train_icdar2015_label.txt',
        data_dir='ocr/det/icdar2015/text_localization'),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRDetDataset',
    imgs_per_gpu=2,
    data_source=dict(
        type='OCRDetSource',
        label_file=
        'ocr/det/icdar2015/text_localization/test_icdar2015_label.txt',
        data_dir='ocr/det/icdar2015/text_localization',
        test_mode=True),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=16, workers_per_gpu=2, train=train_dataset, val=val_dataset)

total_epochs = 100
optimizer = dict(type='Adam', lr=0.001, betas=(0.9, 0.999))

# learning policy
lr_config = dict(policy='fixed')

checkpoint_config = dict(interval=1)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=False, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=True,
        evaluators=[dict(type='OCRDetEvaluator')],
    )
]
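
The test pipeline above resizes with `limit_side_len=640, limit_type='min'`. A sketch of what such a rule typically computes, assuming `OCRDetResize` behaves like PaddleOCR's test-time detection resize (scale so the limited side meets the bound, then snap both sides to multiples of 32); `limit_side_resize` is a hypothetical helper and only returns the target size:

```python
def limit_side_resize(height, width, limit_side_len=640, limit_type='min'):
    """Return (new_height, new_width) for a test-time resize.

    With limit_type='min' the image is only upscaled when its shorter side
    is below limit_side_len; with 'max' it is only downscaled when its
    longer side exceeds it. Both sides are then snapped to multiples of 32.
    """
    if limit_type == 'min':
        short = min(height, width)
        ratio = limit_side_len / short if short < limit_side_len else 1.0
    elif limit_type == 'max':
        long_side = max(height, width)
        ratio = limit_side_len / long_side if long_side > limit_side_len else 1.0
    else:
        raise ValueError(f'unsupported limit_type: {limit_type}')

    def snap(value):
        # Scale, round to the nearest multiple of 32, never below 32.
        return max(int(round(int(value * ratio) / 32) * 32), 32)

    return snap(height), snap(width)


print(limit_side_resize(320, 960))   # shorter side 320 is scaled up to 640
print(limit_side_resize(700, 1000))  # large enough already, only snapped to 32
```

Snapping to multiples of 32 keeps the feature maps of a backbone with a total stride of 32 aligned with the input.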


@ -0,0 +1,148 @@

_base_ = ['configs/base.py']

model = dict(
    type='DBNet',
    backbone=dict(type='OCRDetResNet', in_channels=3, layers=50),
    neck=dict(
        type='LKPAN',
        in_channels=[256, 512, 1024, 2048],
        out_channels=256,
        shortcut=True),
    head=dict(type='DBHead', in_channels=256, kernel_list=[7, 2, 2], k=50),
    postprocess=dict(
        type='DBPostProcess',
        thresh=0.3,
        box_thresh=0.6,
        max_candidates=1000,
        unclip_ratio=1.5,
        use_dilation=False,
        score_mode='fast'),
    loss=dict(
        type='DBLoss',
        balance_loss=True,
        main_loss_type='DiceLoss',
        alpha=5,
        beta=10,
        ohem_ratio=3),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/det/en_PP-OCRv3_det/teacher.pth'
)

img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=False)

train_pipeline = [
    dict(
        type='IaaAugment',
        augmenter_args=[{
            'type': 'Fliplr',
            'args': {
                'p': 0.5
            }
        }, {
            'type': 'Affine',
            'args': {
                'rotate': [-10, 10]
            }
        }, {
            'type': 'Resize',
            'args': {
                'size': [0.5, 3]
            }
        }]),
    dict(
        type='EastRandomCropData',
        size=[640, 640],
        max_tries=50,
        keep_ratio=True),
    dict(
        type='MakeBorderMap', shrink_ratio=0.4, thresh_min=0.3,
        thresh_max=0.7),
    dict(type='MakeShrinkMap', shrink_ratio=0.4, min_text_size=8),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(
        type='ImageToTensor',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
    dict(
        type='Collect',
        keys=[
            'img', 'threshold_map', 'threshold_mask', 'shrink_map',
            'shrink_mask'
        ]),
]

test_pipeline = [
    dict(type='MMResize', img_scale=(960, 960)),
    dict(type='ResizeDivisor', size_divisor=32),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

val_pipeline = [
    dict(type='OCRDetResize', image_shape=(736, 1280)),
    dict(type='MMNormalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(
        type='Collect',
        keys=['img'],
        meta_keys=['ori_img_shape', 'polys', 'ignore_tags']),
]

train_dataset = dict(
    type='OCRDetDataset',
    data_source=dict(
        type='OCRDetSource',
        label_file=
        'ocr/det/icdar2015/text_localization/train_icdar2015_label.txt',
        data_dir='ocr/det/icdar2015/text_localization'),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRDetDataset',
    imgs_per_gpu=2,
    data_source=dict(
        type='OCRDetSource',
        label_file=
        'ocr/det/icdar2015/text_localization/test_icdar2015_label.txt',
        data_dir='ocr/det/icdar2015/text_localization',
        test_mode=True),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=16, workers_per_gpu=2, train=train_dataset, val=val_dataset)

total_epochs = 1200
optimizer = dict(type='Adam', lr=0.001, weight_decay=1e-4, betas=(0.9, 0.999))

# learning policy
lr_config = dict(
    policy='CosineAnnealing',
    min_lr=1e-5,
    warmup='linear',
    warmup_iters=5,
    warmup_ratio=1e-4,
    warmup_by_epoch=True,
    by_epoch=False)

checkpoint_config = dict(interval=10)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=True,
        evaluators=[dict(type='OCRDetEvaluator')],
    )
]
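
The `lr_config` above combines cosine annealing with a linear warmup. A rough sketch of the resulting curve, assuming the mmcv-style formulas for both parts and simplifying the per-iteration update (`by_epoch=False`) to one value per epoch; `cosine_lr` and `lr_at_epoch` are illustrative names, not EasyCV functions:

```python
import math


def cosine_lr(progress, base_lr=1e-3, min_lr=1e-5):
    """Cosine annealing from base_lr (progress=0) down to min_lr (progress=1)."""
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def lr_at_epoch(epoch, total_epochs=1200, warmup_epochs=5, warmup_ratio=1e-4):
    """Learning rate at a given epoch, with linear warmup on top of the cosine curve."""
    lr = cosine_lr(epoch / total_epochs)
    if epoch < warmup_epochs:
        # Linear warmup: start at warmup_ratio * lr and reach lr after warmup_epochs.
        k = (1 - epoch / warmup_epochs) * (1 - warmup_ratio)
        lr *= 1 - k
    return lr


for epoch in (0, 2, 5, 600, 1200):
    print(epoch, f'{lr_at_epoch(epoch):.3e}')
```

With the values from the config, the rate starts near 1e-7, reaches about 1e-3 once warmup ends, and decays to 1e-5 at the final epoch.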


@ -0,0 +1,86 @@

_base_ = ['configs/base.py']

model = dict(
    type='TextClassifier',
    backbone=dict(type='OCRRecMobileNetV3', scale=0.35, model_name='small'),
    head=dict(
        type='ClsHead',
        with_avg_pool=True,
        in_channels=200,
        num_classes=2,
    ),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/cls/ch_ppocr_mobile_v2.0_cls/best_accuracy.pth'
)

train_pipeline = [
    dict(type='RecAug', use_tia=False),
    dict(type='ClsResizeImg', img_shape=(3, 48, 192)),
    dict(type='MMToTensor'),
    dict(type='Collect', keys=['img', 'label'], meta_keys=['img_path'])
]

val_pipeline = [
    dict(type='ClsResizeImg', img_shape=(3, 48, 192)),
    dict(type='MMToTensor'),
    dict(type='Collect', keys=['img', 'label'], meta_keys=['img_path'])
]

test_pipeline = [
    dict(type='ClsResizeImg', img_shape=(3, 48, 192)),
    dict(type='MMToTensor'),
    dict(type='Collect', keys=['img'], meta_keys=['img_path'])
]

train_dataset = dict(
    type='OCRClsDataset',
    data_source=dict(
        type='OCRClsSource',
        label_file='ocr/direction/pai/label_file/test_direction.txt',
        data_dir='ocr/direction/pai/img/test',
        label_list=['0', '180'],
    ),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRClsDataset',
    data_source=dict(
        type='OCRClsSource',
        label_file='ocr/direction/pai/label_file/test_direction.txt',
        data_dir='ocr/direction/pai/img/test',
        label_list=['0', '180'],
        test_mode=True),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=512, workers_per_gpu=8, train=train_dataset, val=val_dataset)

total_epochs = 100
optimizer = dict(type='Adam', lr=0.001, betas=(0.9, 0.999))

# learning policy
lr_config = dict(
    policy='CosineAnnealing',
    min_lr=1e-5,
    warmup='linear',
    warmup_iters=5,
    warmup_ratio=1e-4,
    warmup_by_epoch=True,
    by_epoch=False)

checkpoint_config = dict(interval=10)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        data=data['val'],
        dist_eval=False,
        evaluators=[dict(type='ClsEvaluator', topk=(1, ))],
    )
]
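
The classifier above predicts one of two labels, `'0'` or `'180'`. A sketch of how such a prediction could be used before recognition, assuming the crop is flipped only when `'180'` is predicted with high confidence; the `threshold` value and both helper names are assumptions for illustration, not taken from this commit:

```python
def rotate_180(image):
    """Rotate a 2-D image (a list of rows) by 180 degrees."""
    return [row[::-1] for row in image[::-1]]


def apply_direction(image, label, score, threshold=0.9):
    """Rotate the crop only when '180' is predicted with enough confidence."""
    if label == '180' and score > threshold:
        return rotate_180(image)
    return image


crop = [[1, 2, 3],
        [4, 5, 6]]
print(apply_direction(crop, '180', 0.97))  # upside-down crop gets flipped
print(apply_direction(crop, '180', 0.55))  # low confidence: left unchanged
```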


@ -0,0 +1,144 @@

|

|||

_base_ = ['configs/base.py']

|

||||

|

||||

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/arabic_dict.txt'

|

||||

|

||||

model = dict(

|

||||

type='OCRRecNet',

|

||||

backbone=dict(

|

||||

type='OCRRecMobileNetV1Enhance',

|

||||

scale=0.5,

|

||||

last_conv_stride=[1, 2],

|

||||

last_pool_type='avg'),

|

||||

# inference

|

||||

# neck=dict(

|

||||

# type='SequenceEncoder',

|

||||

# in_channels=512,

|

||||

# encoder_type='svtr',

|

||||

# dims=64,

|

||||

# depth=2,

|

||||

# hidden_dims=120,

|

||||

# use_guide=True),

|

||||

# head=dict(

|

||||

# type='CTCHead',

|

||||

# in_channels=64,

|

||||

# fc_decay=0.00001),

|

||||

head=dict(

|

||||

type='MultiHead',

|

||||

in_channels=512,

|

||||

out_channels_list=dict(

|

||||

CTCLabelDecode=163,

|

||||

SARLabelDecode=165,

|

||||

),

|

||||

head_list=[

|

||||

dict(

|

||||

type='CTCHead',

|

||||

Neck=dict(

|

||||

type='svtr',

|

||||

dims=64,

|

||||

depth=2,

|

||||

hidden_dims=120,

|

||||

use_guide=True),

|

||||

Head=dict(fc_decay=0.00001, )),

|

||||

dict(type='SARHead', enc_dim=512, max_text_length=25)

|

||||

]),

|

||||

postprocess=dict(

|

||||

type='CTCLabelDecode',

|

||||

character_dict_path=character_dict_path,

|

||||

use_space_char=True),

|

||||

loss=dict(

|

||||

type='MultiLoss',

|

||||

ignore_index=164,

|

||||

loss_config_list=[

|

||||

dict(CTCLoss=None),

|

||||

dict(SARLoss=None),

|

||||

]),

|

||||

pretrained=None)

|

||||

|

||||

train_pipeline = [

|

||||

dict(type='RecConAug', prob=0.5, image_shape=(48, 320, 3)),

|

||||

dict(type='RecAug'),

|

||||

dict(

|

||||

type='MultiLabelEncode',

|

||||

max_text_length=25,

|

||||

use_space_char=True,

|

||||

character_dict_path=character_dict_path,

|

||||

),

|

||||

dict(type='RecResizeImg', image_shape=(3, 48, 320)),

|

||||

dict(type='MMToTensor'),

|

||||

dict(

|

||||

type='Collect',

|

||||

keys=['img', 'label_ctc', 'label_sar', 'length', 'valid_ratio'],

|

||||

meta_keys=['img_path'])

|

||||

]

|

||||

|

||||

val_pipeline = [

|

||||

dict(

|

||||

type='MultiLabelEncode',

|

||||

max_text_length=25,

|

||||

use_space_char=True,

|

||||

        character_dict_path=character_dict_path,
    ),
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(
        type='Collect',
        keys=['img', 'label_ctc', 'label_sar', 'length', 'valid_ratio'],
        meta_keys=['img_path'])
]

test_pipeline = [
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(type='Collect', keys=['img'], meta_keys=['img_path'])
]

train_dataset = dict(
    type='OCRRecDataset',
    data_source=dict(
        type='OCRRecSource',
        label_file='ocr/rec/pai/label_file/train.txt',
        data_dir='ocr/rec/pai/img/train',
        ext_data_num=2,
    ),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRRecDataset',
    data_source=dict(
        type='OCRRecSource',
        label_file='ocr/rec/pai/label_file/test.txt',
        data_dir='ocr/rec/pai/img/test',
        ext_data_num=0,
    ),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=128, workers_per_gpu=4, train=train_dataset, val=val_dataset)

total_epochs = 10
optimizer = dict(type='Adam', lr=0.001, betas=(0.9, 0.999))

lr_config = dict(
    policy='CosineAnnealing',
    min_lr=1e-5,
    warmup='linear',
    warmup_iters=5,
    warmup_ratio=1e-4,
    warmup_by_epoch=True,
    by_epoch=False)

checkpoint_config = dict(interval=1)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=False,
        evaluators=[dict(type='OCRRecEvaluator', ignore_space=False)],
    )
]
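
The `lr_config` above combines cosine annealing with a linear warm-up. The sketch below shows what such a schedule typically computes, assuming mmcv-style semantics (per-step updates because `by_epoch=False`, and a warm-up length given in epochs because `warmup_by_epoch=True`). The function names and the step counts in the usage lines are illustrative and are not part of EasyCV.

```python
import math


def cosine_annealing(base_lr, min_lr, progress):
    """Cosine decay from base_lr (progress=0) to min_lr (progress=1)."""
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def linear_warmup(regular_lr, step, warmup_steps, warmup_ratio):
    """Scale regular_lr linearly from warmup_ratio * regular_lr up to regular_lr."""
    k = (1 - step / warmup_steps) * (1 - warmup_ratio)
    return regular_lr * (1 - k)


def lr_at(step, total_steps, warmup_steps,
          base_lr=0.001, min_lr=1e-5, warmup_ratio=1e-4):
    """Learning rate at a given step (assumed semantics, not EasyCV code)."""
    regular = cosine_annealing(base_lr, min_lr, step / total_steps)
    if step < warmup_steps:
        return linear_warmup(regular, step, warmup_steps, warmup_ratio)
    return regular


# Hypothetical run: 10 epochs of 100 steps each, 5 warm-up epochs.
schedule = [lr_at(s, total_steps=1000, warmup_steps=500) for s in range(1001)]
```

With these assumed numbers the rate starts at `base_lr * warmup_ratio` and ends at `min_lr`; the actual step counts depend on the dataset size and batch size.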

@ -0,0 +1,145 @@

_base_ = ['configs/base.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/ppocr_keys_v1.txt'

model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=6625,
            SARLabelDecode=6627,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=6626,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/rec/ch_PP-OCRv3_rec/best_accuracy_student.pth'
)

train_pipeline = [
    dict(type='RecConAug', prob=0.5, image_shape=(48, 320, 3)),
    dict(type='RecAug'),
    dict(
        type='MultiLabelEncode',
        max_text_length=25,
        use_space_char=True,
        character_dict_path=character_dict_path,
    ),
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(
        type='Collect',
        keys=['img', 'label_ctc', 'label_sar', 'length', 'valid_ratio'],
        meta_keys=['img_path'])
]

val_pipeline = [
    dict(
        type='MultiLabelEncode',
        max_text_length=25,
        use_space_char=True,
        character_dict_path=character_dict_path,
    ),
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(
        type='Collect',
        keys=['img', 'label_ctc', 'label_sar', 'length', 'valid_ratio'],
        meta_keys=['img_path'])
]

test_pipeline = [
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(type='Collect', keys=['img'], meta_keys=['img_path'])
]

train_dataset = dict(
    type='OCRRecDataset',
    data_source=dict(
        type='OCRRecSource',
        label_file='ocr/rec/pai/label_file/train.txt',
        data_dir='ocr/rec/pai/img/train',
        ext_data_num=2,
    ),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRRecDataset',
    data_source=dict(
        type='OCRRecSource',
        label_file='ocr/rec/pai/label_file/test.txt',
        data_dir='ocr/rec/pai/img/test',
        ext_data_num=0,
    ),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=128, workers_per_gpu=4, train=train_dataset, val=val_dataset)

total_epochs = 10
optimizer = dict(type='Adam', lr=0.001, betas=(0.9, 0.999))

lr_config = dict(
    policy='CosineAnnealing',
    min_lr=1e-5,
    warmup='linear',
    warmup_iters=5,
    warmup_ratio=1e-4,
    warmup_by_epoch=True,
    by_epoch=False)

checkpoint_config = dict(interval=1)

log_config = dict(
    interval=10, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=False,
        evaluators=[dict(type='OCRRecEvaluator', ignore_space=False)],
    )
]

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/chinese_cht_dict.txt'
label_length = 8421
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=label_length + 2,
            SARLabelDecode=label_length + 4,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=label_length + 3,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=None)
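
The config above derives three sizes from `label_length`: the CTC output width (`+ 2`), the SAR output width (`+ 4`), and the loss `ignore_index` (`+ 3`). The sketch below only restates that arithmetic. The helper name `head_sizes` is hypothetical, and which extra symbols the offsets account for is not stated in this diff.

```python
def head_sizes(label_length):
    """Reproduce the offsets used in the per-language recognition configs."""
    return {
        'CTCLabelDecode': label_length + 2,  # CTC head output channels
        'SARLabelDecode': label_length + 4,  # SAR head output channels
        'ignore_index': label_length + 3,    # index ignored by MultiLoss
    }


# label_length = 8421 is the value set in the config above.
sizes = head_sizes(8421)
print(sizes)
```

The hard-coded values elsewhere in this diff follow the same pattern: 6625/6627/6626 correspond to a `label_length` of 6623, and 3690/3692/3691 to 3688.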

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/cyrillic_dict.txt'
label_length = 163
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=label_length + 2,
            SARLabelDecode=label_length + 4,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=label_length + 3,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=None)
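
The per-language configs set `_base_` to `rec_model_ch.py` and redefine only `character_dict_path` and `model`, so the pipelines, datasets and schedule come from the base file. The sketch below illustrates a recursive dictionary merge of the kind mmcv-style config loaders perform. It is a simplified assumption, not EasyCV's loader, and `merge_config` is a hypothetical name.

```python
import copy


def merge_config(base, override):
    """Return base updated with override, merging nested dicts key by key."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Trimmed-down stand-ins for the base and the Cyrillic config.
base_cfg = {
    'total_epochs': 10,
    'model': {
        'type': 'OCRRecNet',
        'loss': {'type': 'MultiLoss', 'ignore_index': 6626},
        'pretrained': 'best_accuracy_student.pth',
    },
}
child_cfg = {
    'model': {
        'loss': {'ignore_index': 163 + 3},
        'pretrained': None,
    },
}
merged_cfg = merge_config(base_cfg, child_cfg)
```

Under this assumption, keys the child omits (such as `total_epochs`) keep the base value, while keys it sets replace the base value.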

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/devanagari_dict.txt'
label_length = 167
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=label_length + 2,
            SARLabelDecode=label_length + 4,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=label_length + 3,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=None)

@ -0,0 +1,152 @@

_base_ = ['configs/base.py']

# character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/ic15_dict.txt'
character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/en_dict.txt'
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        # out_channels_list=dict(
        #     CTCLabelDecode=37,
        #     SARLabelDecode=39,
        # ),
        out_channels_list=dict(
            CTCLabelDecode=97,
            SARLabelDecode=99,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=False),
    loss=dict(
        type='MultiLoss',
        # ignore_index=38,
        ignore_index=98,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=
    'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/rec/en_PP-OCRv3_rec/best_accuracy.pth'
)

train_pipeline = [
    dict(type='RecConAug', prob=0.5, image_shape=(48, 320, 3)),
    dict(type='RecAug'),
    dict(
        type='MultiLabelEncode',
        max_text_length=25,
        use_space_char=False,
        character_dict_path=character_dict_path),
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(
        type='Collect',
        keys=['img', 'label_ctc', 'label_sar', 'length', 'valid_ratio'],
        meta_keys=['img_path'])
]

val_pipeline = [
    dict(
        type='MultiLabelEncode',
        max_text_length=25,
        use_space_char=False,
        character_dict_path=character_dict_path),
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(
        type='Collect',
        keys=['img', 'label_ctc', 'label_sar', 'length', 'valid_ratio'],
        meta_keys=['img_path'])
]
# test_pipeline = [
#     dict(type='OCRResizeNorm', img_shape=(48, 320)),
#     dict(type='ImageToTensor', keys=['img']),
#     dict(type='Collect', keys=['img']),
# ]
test_pipeline = [
    dict(type='RecResizeImg', image_shape=(3, 48, 320)),
    dict(type='MMToTensor'),
    dict(type='Collect', keys=['img'], meta_keys=['img_path'])
]

train_dataset = dict(
    type='OCRRecDataset',
    data_source=dict(
        type='OCRReclmdbSource',
        data_dir='ocr/rec/DTRB/debug/data_lmdb_release/validation',
        ext_data_num=2,
    ),
    pipeline=train_pipeline)

val_dataset = dict(
    type='OCRRecDataset',
    data_source=dict(
        type='OCRReclmdbSource',
        data_dir='ocr/rec/DTRB/debug/data_lmdb_release/validation',
        ext_data_num=0,
        test_mode=True,
    ),
    pipeline=val_pipeline)

data = dict(
    imgs_per_gpu=256, workers_per_gpu=4, train=train_dataset, val=val_dataset)

total_epochs = 72

optimizer = dict(type='Adam', lr=0.0005, betas=(0.9, 0.999), weight_decay=0.0)

lr_config = dict(
    policy='CosineAnnealing',
    min_lr=1e-5,
    warmup='linear',
    warmup_iters=5,
    warmup_ratio=1e-4,
    warmup_by_epoch=True,
    by_epoch=False)

checkpoint_config = dict(interval=5)

log_config = dict(
    interval=100, hooks=[
        dict(type='TextLoggerHook'),
    ])

eval_config = dict(initial=True, interval=1, gpu_collect=False)
eval_pipelines = [
    dict(
        mode='test',
        dist_eval=False,
        evaluators=[dict(type='OCRRecEvaluator')],
    )
]
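
Every pipeline above resizes inputs with `RecResizeImg` and `image_shape=(3, 48, 320)`. The sketch below shows the width computation such a transform commonly performs, assuming PaddleOCR-style behaviour: scale the image to the target height, keep the aspect ratio, cap the width at the maximum, and pad the remainder. The function name `resized_width` is hypothetical, and EasyCV's `RecResizeImg` may differ in detail.

```python
def resized_width(height, width, image_shape=(3, 48, 320)):
    """Return (new_width, right_padding) for an aspect-preserving resize.

    Assumed behaviour, not taken from the EasyCV source.
    """
    _, target_h, max_w = image_shape
    # Integer ceiling of target_h * width / height, avoiding float rounding.
    scaled_w = -(-target_h * width // height)
    new_w = min(scaled_w, max_w)
    return new_w, max_w - new_w


# A 32x100 crop scales to width 150 and is padded; a very wide crop is capped.
short_crop = resized_width(32, 100)
wide_crop = resized_width(48, 1000)
```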

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/japan_dict.txt'
label_length = 4399
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=label_length + 2,
            SARLabelDecode=label_length + 4,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=label_length + 3,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=None)
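
Each recognition config sets `postprocess=dict(type='CTCLabelDecode', ...)`. As a rough illustration of what a CTC label decoder does, the sketch below implements greedy CTC decoding: take the best class per time step, collapse consecutive repeats, and drop the blank. It assumes the blank is class 0 and the dictionary characters start at class 1. The function name is hypothetical and the code is not taken from EasyCV.

```python
def ctc_greedy_decode(indices, charset, blank=0):
    """Collapse repeated indices and remove blanks (greedy CTC decoding)."""
    chars = []
    previous = None
    for index in indices:
        # Emit a character only when the class changes and is not the blank.
        if index != blank and index != previous:
            chars.append(charset[index - 1])  # class 1 maps to charset[0]
        previous = index
    return ''.join(chars)


# Per-step argmax indices for a toy lowercase alphabet.
alphabet = 'abcdefghijklmnopqrstuvwxyz'
decoded = ctc_greedy_decode([0, 8, 8, 0, 5, 12, 12, 0, 12, 15], alphabet)
print(decoded)  # hello
```

The blank between the two runs of class 12 is what lets the decoder keep both letters of the double "l".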

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/ka_dict.txt'
label_length = 153
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=label_length + 2,
            SARLabelDecode=label_length + 4,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=label_length + 3,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=None)

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/korean_dict.txt'

model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=3690,
            SARLabelDecode=3692,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,
                    depth=2,
                    hidden_dims=120,
                    use_guide=True),
                Head=dict(fc_decay=0.00001, )),
            dict(type='SARHead', enc_dim=512, max_text_length=25)
        ]),
    postprocess=dict(
        type='CTCLabelDecode',
        character_dict_path=character_dict_path,
        use_space_char=True),
    loss=dict(
        type='MultiLoss',
        ignore_index=3691,
        loss_config_list=[
            dict(CTCLoss=None),
            dict(SARLoss=None),
        ]),
    pretrained=None)

@ -0,0 +1,55 @@

_base_ = ['configs/ocr/recognition/rec_model_ch.py']

character_dict_path = 'http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/ocr/dict/latin_dict.txt'
label_length = 185
model = dict(
    type='OCRRecNet',
    backbone=dict(
        type='OCRRecMobileNetV1Enhance',
        scale=0.5,
        last_conv_stride=[1, 2],
        last_pool_type='avg'),
    # inference
    # neck=dict(
    #     type='SequenceEncoder',
    #     in_channels=512,
    #     encoder_type='svtr',
    #     dims=64,
    #     depth=2,
    #     hidden_dims=120,
    #     use_guide=True),
    # head=dict(
    #     type='CTCHead',
    #     in_channels=64,
    #     fc_decay=0.00001),
    head=dict(
        type='MultiHead',
        in_channels=512,
        out_channels_list=dict(
            CTCLabelDecode=label_length + 2,
            SARLabelDecode=label_length + 4,
        ),
        head_list=[
            dict(
                type='CTCHead',
                Neck=dict(
                    type='svtr',
                    dims=64,

|