diff --git a/.gitignore b/.gitignore

index 3a05fb746..3300be325 100644

--- a/.gitignore

+++ b/.gitignore

@@ -31,4 +31,4 @@ paddleocr.egg-info/

/deploy/android_demo/app/.cxx/

/deploy/android_demo/app/cache/

test_tipc/web/models/

-test_tipc/web/node_modules/

\ No newline at end of file

+test_tipc/web/node_modules/
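In the `.gitignore` hunk, the removed and added lines read the same. The `\ No newline at end of file` marker shows that the only change is a final newline appended to the file. That is the rule the `end-of-file-fixer` hook enforces, and the configuration hunk that follows appears to drop that hook's `files: \.md$` restriction, so it now covers every file.

The sketch below approximates that rule for illustration. `fix_end_of_file` is a hypothetical name, not the hook's source, and the sketch assumes LF line endings, whereas the real hook operates on bytes.

```python
def fix_end_of_file(text: str) -> str:
    """Simplified stand-in for end-of-file-fixer (hypothetical helper).

    Assumes LF line endings; the real hook operates on raw bytes.
    """
    # Drop every trailing newline first.
    body = text.rstrip("\n")
    # A file that held nothing but newlines ends up empty.
    if not body:
        return ""
    # Otherwise terminate the content with exactly one newline.
    return body + "\n"


# The old .gitignore ended without a newline; the fixed version gains one.
print(repr(fix_end_of_file("test_tipc/web/node_modules/")))
```

The same rule explains the `MANIFEST.in` and `gen_data/corpus/text.txt` hunks further down.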

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 3c26460ba..4121e4a65 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -1,26 +1,22 @@

repos:

- repo: https://github.com/pre-commit/pre-commit-hooks

- rev: a11d9314b22d8f8c7556443875b731ef05965464

+ rev: v4.6.0

hooks:

+ - id: check-added-large-files

+ args: ['--maxkb=512']

+ - id: check-case-conflict

- id: check-merge-conflict

- id: check-symlinks

- id: detect-private-key

- files: (?!.*paddle)^.*$

- id: end-of-file-fixer

- files: \.md$

- id: trailing-whitespace

- files: \.md$

+ files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$

- repo: https://github.com/Lucas-C/pre-commit-hooks

- rev: v1.0.1

+ rev: v1.5.1

hooks:

- - id: forbid-crlf

- files: \.md$

- id: remove-crlf

- files: \.md$

- - id: forbid-tabs

- files: \.md$

- id: remove-tabs

- files: \.md$

+ files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$

- repo: local

hooks:

- id: clang-format

@@ -31,7 +27,7 @@ repos:

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|cuh|proto)$

# For Python files

- repo: https://github.com/psf/black.git

- rev: 23.3.0

+ rev: 24.4.2

hooks:

- id: black

files: (.*\.(py|pyi|bzl)|BUILD|.*\.BUILD|WORKSPACE)$

@@ -47,4 +43,3 @@ repos:

- --show-source

- --statistics

exclude: ^benchmark/|^test_tipc/

-
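pre-commit decides which files a hook runs on by applying the hook's `files` regex to each candidate path with Python `re.search` semantics. The sketch below compares two patterns:

- the old `detect-private-key` pattern, whose negative lookahead skipped any path containing `paddle`;
- the new extension pattern now attached to `trailing-whitespace` and `remove-tabs`.

The constant and helper names are hypothetical, and the sample paths are taken from elsewhere in this diff. Note that the new pattern omits `cuh` and `proto`, which the `clang-format` hook's pattern still lists.

```python
import re

# Old `files` value of detect-private-key: the negative lookahead rejects any
# path that contains "paddle" anywhere, because `^` can only match at index 0.
OLD_PRIVATE_KEY_FILES = re.compile(r"(?!.*paddle)^.*$")

# New `files` value used by trailing-whitespace and remove-tabs.
NEW_SOURCE_FILES = re.compile(r"\.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$")


def selected(pattern: re.Pattern, path: str) -> bool:
    """Return True if a hook with this `files` pattern would run on `path`."""
    return pattern.search(path) is not None


if __name__ == "__main__":
    sample_paths = [
        "tools/infer/predict_det.py",
        "README.md",
        "configs/det/det_mv3_db.yml",
        "ppocr/data/imaug/label_ops.py",
    ]
    for path in sample_paths:
        print(f"{path}: {selected(NEW_SOURCE_FILES, path)}")
```

Running it shows that `configs/det/det_mv3_db.yml` is skipped by the new pattern, because `.yml` is not in the extension list.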

diff --git a/MANIFEST.in b/MANIFEST.in

index f821618ab..a72b3728a 100644

--- a/MANIFEST.in

+++ b/MANIFEST.in

@@ -7,4 +7,4 @@ recursive-include ppocr/postprocess *.py

recursive-include tools/infer *.py

recursive-include tools __init__.py

recursive-include ppocr/utils/e2e_utils *.py

-recursive-include ppstructure *.py

\ No newline at end of file

+recursive-include ppstructure *.py

diff --git a/README.md b/README.md

index 28708236c..e0b88b069 100644

--- a/README.md

+++ b/README.md

@@ -207,12 +207,12 @@ PaddleOCR is being oversight by a [PMC](https://github.com/PaddlePaddle/PaddleOC

<details open>

<summary>PP-Structure 文档分析</summary>

-- 版面分析+表格识别

+- 版面分析+表格识别

<div align="center">

<img src="./ppstructure/docs/table/ppstructure.GIF" width="800">

</div>

-- SER(语义实体识别)

+- SER(语义实体识别)

<div align="center">

<img src="https://user-images.githubusercontent.com/14270174/185310636-6ce02f7c-790d-479f-b163-ea97a5a04808.jpg" width="600">

</div>
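In the README hunks, the removed and added lines (for example `- 版面分析+表格识别`) look identical. The difference is most likely whitespace at the end of the removed line, which this rendering does not show. The `trailing-whitespace` hook, which the configuration above now runs on `\.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$`, removes such whitespace.

Below is a simplified sketch of that per-line rule. The function name is hypothetical, and the sketch assumes LF line endings; the real hook also preserves each line's original ending.

```python
def strip_trailing_whitespace(text: str) -> str:
    """Simplified stand-in for trailing-whitespace (hypothetical helper)."""
    # Split on LF, drop spaces and tabs at the end of each line, then rejoin.
    return "\n".join(line.rstrip(" \t") for line in text.split("\n"))


print(repr(strip_trailing_whitespace("- 版面分析+表格识别  \n</div>\t\n")))
```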

diff --git a/README_en.md b/README_en.md

index be6551293..93b8e0fe6 100644

--- a/README_en.md

+++ b/README_en.md

@@ -119,11 +119,11 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

- [Mobile](./deploy/lite/readme.md)

- [Paddle2ONNX](./deploy/paddle2onnx/readme.md)

- [PaddleCloud](./deploy/paddlecloud/README.md)

- - [Benchmark](./doc/doc_en/benchmark_en.md)

+ - [Benchmark](./doc/doc_en/benchmark_en.md)

- [PP-Structure 🔥](./ppstructure/README.md)

- [Quick Start](./ppstructure/docs/quickstart_en.md)

- [Model Zoo](./ppstructure/docs/models_list_en.md)

- - [Model training](./doc/doc_en/training_en.md)

+ - [Model training](./doc/doc_en/training_en.md)

- [Layout Analysis](./ppstructure/layout/README.md)

- [Table Recognition](./ppstructure/table/README.md)

- [Key Information Extraction](./ppstructure/kie/README.md)

@@ -136,7 +136,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

- [Text recognition](./doc/doc_en/algorithm_overview_en.md)

- [End-to-end OCR](./doc/doc_en/algorithm_overview_en.md)

- [Table Recognition](./doc/doc_en/algorithm_overview_en.md)

- - [Key Information Extraction](./doc/doc_en/algorithm_overview_en.md)

+ - [Key Information Extraction](./doc/doc_en/algorithm_overview_en.md)

- [Add New Algorithms to PaddleOCR](./doc/doc_en/add_new_algorithm_en.md)

- Data Annotation and Synthesis

- [Semi-automatic Annotation Tool: PPOCRLabel](https://github.com/PFCCLab/PPOCRLabel/blob/main/README.md)

@@ -188,7 +188,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

<details open>

<summary>PP-StructureV2</summary>

-- layout analysis + table recognition

+- layout analysis + table recognition

<div align="center">

<img src="./ppstructure/docs/table/ppstructure.GIF" width="800">

</div>

@@ -209,7 +209,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

- RE (Relation Extraction)

<div align="center">

<img src="https://user-images.githubusercontent.com/25809855/186094813-3a8e16cc-42e5-4982-b9f4-0134dfb5688d.png" width="600">

-</div>

+</div>

<div align="center">

<img src="https://user-images.githubusercontent.com/14270174/185393805-c67ff571-cf7e-4217-a4b0-8b396c4f22bb.jpg" width="600">

diff --git a/applications/PCB字符识别/PCB字符识别.md b/applications/PCB字符识别/PCB字符识别.md

index 4b4efe473..b16d54e53 100644

--- a/applications/PCB字符识别/PCB字符识别.md

+++ b/applications/PCB字符识别/PCB字符识别.md

@@ -546,7 +546,7 @@ python3 tools/infer/predict_system.py \

--use_gpu=True

```

-得到保存结果,文本检测识别可视化图保存在`det_rec_infer/`目录下,预测结果保存在`det_rec_infer/system_results.txt`中,格式如下:`0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`

+得到保存结果,文本检测识别可视化图保存在`det_rec_infer/`目录下,预测结果保存在`det_rec_infer/system_results.txt`中,格式如下:`0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`

2)然后将步骤一保存的数据转换为端对端评测需要的数据格式: 修改 `tools/end2end/convert_ppocr_label.py`中的代码,convert_label函数中设置输入标签路径,Mode,保存标签路径等,对预测数据的GTlabel和预测结果的label格式进行转换。

```

diff --git a/applications/PCB字符识别/gen_data/corpus/text.txt b/applications/PCB字符识别/gen_data/corpus/text.txt

index 8b8cb793e..ef40e9cdc 100644

--- a/applications/PCB字符识别/gen_data/corpus/text.txt

+++ b/applications/PCB字符识别/gen_data/corpus/text.txt

@@ -27,4 +27,4 @@ K06

KIEY

NZQJ

UN1B

-6X4

\ No newline at end of file

+6X4

diff --git a/applications/中文表格识别.md b/applications/中文表格识别.md

index d61514ff2..3ed72d20e 100644

--- a/applications/中文表格识别.md

+++ b/applications/中文表格识别.md

@@ -456,7 +456,7 @@ display(HTML('<html><body><table><tr><td colspan="5">alleadersh</td><td rowspan=

预测结果如下:

```

-val_9.jpg: {'attributes': ['Scanned', 'Little', 'Black-and-White', 'Clear', 'Without-Obstacles', 'Horizontal'], 'output': [1, 1, 1, 1, 1, 1]}

+val_9.jpg: {'attributes': ['Scanned', 'Little', 'Black-and-White', 'Clear', 'Without-Obstacles', 'Horizontal'], 'output': [1, 1, 1, 1, 1, 1]}

```

@@ -466,7 +466,7 @@ val_9.jpg: {'attributes': ['Scanned', 'Little', 'Black-and-White', 'Clear', 'Wi

预测结果如下:

```

-val_3253.jpg: {'attributes': ['Photo', 'Little', 'Black-and-White', 'Blurry', 'Without-Obstacles', 'Tilted'], 'output': [0, 1, 1, 0, 1, 0]}

+val_3253.jpg: {'attributes': ['Photo', 'Little', 'Black-and-White', 'Blurry', 'Without-Obstacles', 'Tilted'], 'output': [0, 1, 1, 0, 1, 0]}

```

对比两张图片可以发现,第一张图片比较清晰,表格属性的结果也偏向于比较容易识别,我们可以更相信表格识别的结果,第二张图片比较模糊,且存在倾斜现象,表格识别可能存在错误,需要我们人工进一步校验。通过表格的属性识别能力,可以进一步将“人工”和“智能”很好的结合起来,为表格识别能力的落地的精度提供保障。

diff --git a/applications/光功率计数码管字符识别/光功率计数码管字符识别.md b/applications/光功率计数码管字符识别/光功率计数码管字符识别.md

index 215b308d3..4e6e7acd5 100644

--- a/applications/光功率计数码管字符识别/光功率计数码管字符识别.md

+++ b/applications/光功率计数码管字符识别/光功率计数码管字符识别.md

@@ -434,16 +434,16 @@ python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/PP-

```

output/rec/

-├── best_accuracy.pdopt

-├── best_accuracy.pdparams

-├── best_accuracy.states

-├── config.yml

-├── iter_epoch_3.pdopt

-├── iter_epoch_3.pdparams

-├── iter_epoch_3.states

-├── latest.pdopt

-├── latest.pdparams

-├── latest.states

+├── best_accuracy.pdopt

+├── best_accuracy.pdparams

+├── best_accuracy.states

+├── config.yml

+├── iter_epoch_3.pdopt

+├── iter_epoch_3.pdparams

+├── iter_epoch_3.states

+├── latest.pdopt

+├── latest.pdparams

+├── latest.states

└── train.log

```

diff --git a/applications/包装生产日期识别.md b/applications/包装生产日期识别.md

index 670ec9cda..bc833687e 100644

--- a/applications/包装生产日期识别.md

+++ b/applications/包装生产日期识别.md

@@ -243,7 +243,7 @@ def get_cropus(f):

elif 0.7 < rad < 0.8:

f.write('20{:02d}-{:02d}-{:02d}'.format(year, month, day))

elif 0.8 < rad < 0.9:

- f.write('20{:02d}.{:02d}.{:02d}'.format(year, month, day))

+ f.write('20{:02d}.{:02d}.{:02d}'.format(year, month, day))

else:

f.write('{:02d}:{:02d}:{:02d} {:02d}'.format(hours, minute, second, file_id2))

diff --git a/applications/印章弯曲文字识别.md b/applications/印章弯曲文字识别.md

index bc0eaa35d..5e230cf02 100644

--- a/applications/印章弯曲文字识别.md

+++ b/applications/印章弯曲文字识别.md

@@ -409,7 +409,7 @@ def crop_seal_from_img(label_file, data_dir, save_dir, save_gt_path):

-if __name__ == "__main__":

+if __name__ == "__main__":

# 数据处理

gen_extract_label("./seal_labeled_datas", "./seal_labeled_datas/Label.txt", "./seal_ppocr_gt/seal_det_img.txt", "./seal_ppocr_gt/seal_ppocr_img.txt")

@@ -523,7 +523,7 @@ def gen_xml_label(mode='train'):

xml_file = open(("./seal_VOC/Annotations" + '/' + i_name + '.xml'), 'w')

xml_file.write('<annotation>\n')

xml_file.write(' <folder>seal_VOC</folder>\n')

- xml_file.write(' <filename>' + str(img_name) + '</filename>\n')

+ xml_file.write(' <filename>' + str(img_name) + '</filename>\n')

xml_file.write(' <path>' + 'Annotations/' + str(img_name) + '</path>\n')

xml_file.write(' <size>\n')

xml_file.write(' <width>' + str(width) + '</width>\n')

@@ -553,7 +553,7 @@ def gen_xml_label(mode='train'):

xml_file.write(' <ymax>'+str(ymax)+'</ymax>\n')

xml_file.write(' </bndbox>\n')

xml_file.write(' </object>\n')

- xml_file.write('</annotation>')

+ xml_file.write('</annotation>')

xml_file.close()

print(f'{mode} xml save done!')

diff --git a/applications/多模态表单识别.md b/applications/多模态表单识别.md

index 59aaf72b7..fd403bb84 100644

--- a/applications/多模态表单识别.md

+++ b/applications/多模态表单识别.md

@@ -110,12 +110,12 @@ tar -xf XFUND.tar

```bash

/home/aistudio/PaddleOCR/ppstructure/vqa/XFUND

- └─ zh_train/ 训练集

- ├── image/ 图片存放文件夹

- ├── xfun_normalize_train.json 标注信息

- └─ zh_val/ 验证集

- ├── image/ 图片存放文件夹

- ├── xfun_normalize_val.json 标注信息

+ └─ zh_train/ 训练集

+ ├── image/ 图片存放文件夹

+ ├── xfun_normalize_train.json 标注信息

+ └─ zh_val/ 验证集

+ ├── image/ 图片存放文件夹

+ ├── xfun_normalize_val.json 标注信息

```

@@ -805,7 +805,7 @@ CUDA_VISIBLE_DEVICES=0 python3 tools/infer_vqa_token_ser_re.py \

最终会在config.Global.save_res_path字段所配置的目录下保存预测结果可视化图像以及预测结果文本文件,预测结果文本文件名为infer_results.txt, 每一行表示一张图片的结果,每张图片的结果如下所示,前面表示测试图片路径,后面为测试结果:key字段及对应的value字段。

```

-test_imgs/t131.jpg {"政治面税": "群众", "性别": "男", "籍贯": "河北省邯郸市", "婚姻状况": "亏末婚口已婚口已娇", "通讯地址": "邯郸市阳光苑7号楼003", "民族": "汉族", "毕业院校": "河南工业大学", "户口性质": "口农村城镇", "户口地址": "河北省邯郸市", "联系电话": "13288888888", "健康状况": "健康", "姓名": "小六", "好高cm": "180", "出生年月": "1996年8月9日", "文化程度": "本科", "身份证号码": "458933777777777777"}

+test_imgs/t131.jpg {"政治面税": "群众", "性别": "男", "籍贯": "河北省邯郸市", "婚姻状况": "亏末婚口已婚口已娇", "通讯地址": "邯郸市阳光苑7号楼003", "民族": "汉族", "毕业院校": "河南工业大学", "户口性质": "口农村城镇", "户口地址": "河北省邯郸市", "联系电话": "13288888888", "健康状况": "健康", "姓名": "小六", "好高cm": "180", "出生年月": "1996年8月9日", "文化程度": "本科", "身份证号码": "458933777777777777"}

````

展示预测结果
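The next hunk deletes and re-adds all 775 lines of `快速构建卡证类OCR.md` with text that looks unchanged. That pattern usually means only the line endings changed. It is consistent with the `remove-crlf` hook from `Lucas-C/pre-commit-hooks` rewriting CRLF endings as LF.

A minimal sketch of that conversion on raw bytes follows. Both function names are hypothetical, and this is not the hook's implementation.

```python
def remove_crlf(data: bytes) -> bytes:
    """Rewrite Windows CRLF line endings as LF (simplified sketch)."""
    return data.replace(b"\r\n", b"\n")


def has_crlf(data: bytes) -> bool:
    """Report whether any CRLF sequence remains in the data."""
    return b"\r\n" in data


original = b"# \xe5\xbf\xab\xe9\x80\x9f\r\n\r\nbody\r\n"
converted = remove_crlf(original)
print(has_crlf(original), has_crlf(converted))
```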

diff --git a/applications/快速构建卡证类OCR.md b/applications/快速构建卡证类OCR.md

index 79266c6c2..50b70ff3a 100644

--- a/applications/快速构建卡证类OCR.md

+++ b/applications/快速构建卡证类OCR.md

@@ -1,775 +1,775 @@

-# 快速构建卡证类OCR

-

-

-- [快速构建卡证类OCR](#快速构建卡证类ocr)

- - [1. 金融行业卡证识别应用](#1-金融行业卡证识别应用)

- - [1.1 金融行业中的OCR相关技术](#11-金融行业中的ocr相关技术)

- - [1.2 金融行业中的卡证识别场景介绍](#12-金融行业中的卡证识别场景介绍)

- - [1.3 OCR落地挑战](#13-ocr落地挑战)

- - [2. 卡证识别技术解析](#2-卡证识别技术解析)

- - [2.1 卡证分类模型](#21-卡证分类模型)

- - [2.2 卡证识别模型](#22-卡证识别模型)

- - [3. OCR技术拆解](#3-ocr技术拆解)

- - [3.1技术流程](#31技术流程)

- - [3.2 OCR技术拆解---卡证分类](#32-ocr技术拆解---卡证分类)

- - [卡证分类:数据、模型准备](#卡证分类数据模型准备)

- - [卡证分类---修改配置文件](#卡证分类---修改配置文件)

- - [卡证分类---训练](#卡证分类---训练)

- - [3.2 OCR技术拆解---卡证识别](#32-ocr技术拆解---卡证识别)

- - [身份证识别:检测+分类](#身份证识别检测分类)

- - [数据标注](#数据标注)

- - [4 . 项目实践](#4--项目实践)

- - [4.1 环境准备](#41-环境准备)

- - [4.2 配置文件修改](#42-配置文件修改)

- - [4.3 代码修改](#43-代码修改)

- - [4.3.1 数据读取](#431-数据读取)

- - [4.3.2 head修改](#432--head修改)

- - [4.3.3 修改loss](#433-修改loss)

- - [4.3.4 后处理](#434-后处理)

- - [4.4. 模型启动](#44-模型启动)

- - [5 总结](#5-总结)

- - [References](#references)

-

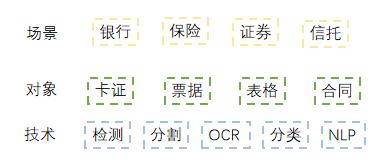

-## 1. 金融行业卡证识别应用

-

-### 1.1 金融行业中的OCR相关技术

-

-* 《“十四五”数字经济发展规划》指出,2020年我国数字经济核心产业增加值占GDP比重达7.8%,随着数字经济迈向全面扩展,到2025年该比例将提升至10%。

-

-* 在过去数年的跨越发展与积累沉淀中,数字金融、金融科技已在对金融业的重塑与再造中充分印证了其自身价值。

-

-* 以智能为目标,提升金融数字化水平,实现业务流程自动化,降低人力成本。

-

-

-

-

-

-

-### 1.2 金融行业中的卡证识别场景介绍

-

-应用场景:身份证、银行卡、营业执照、驾驶证等。

-

-应用难点:由于数据的采集来源多样,以及实际采集数据各种噪声:反光、褶皱、模糊、倾斜等各种问题干扰。

-

-

-

-

-

-### 1.3 OCR落地挑战

-

-

-

-

-

-

-

-

-## 2. 卡证识别技术解析

-

-

-

-

-

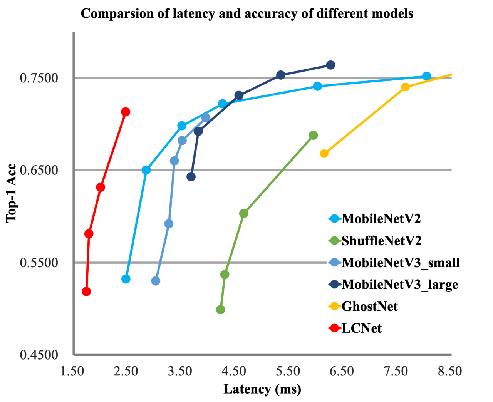

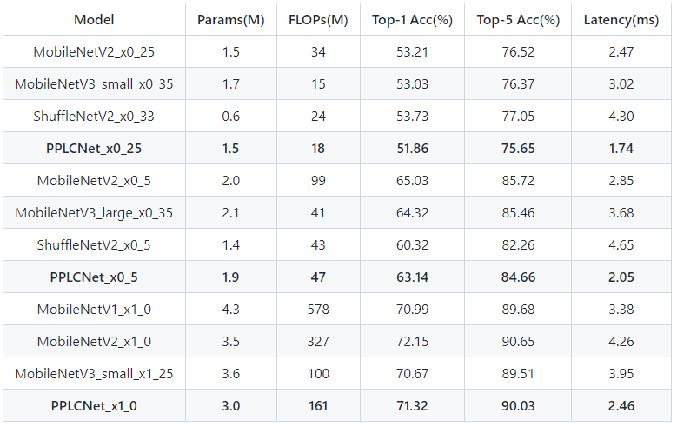

-### 2.1 卡证分类模型

-

-卡证分类:基于PPLCNet

-

-与其他轻量级模型相比在CPU环境下ImageNet数据集上的表现

-

-

-

-

-

-

-

-* 模型来自模型库PaddleClas,它是一个图像识别和图像分类任务的工具集,助力使用者训练出更好的视觉模型和应用落地。

-

-### 2.2 卡证识别模型

-

-* 检测:DBNet 识别:SVRT

-

-

-

-

-* PPOCRv3在文本检测、识别进行了一系列改进优化,在保证精度的同时提升预测效率

-

-

-

-

-

-

-

-

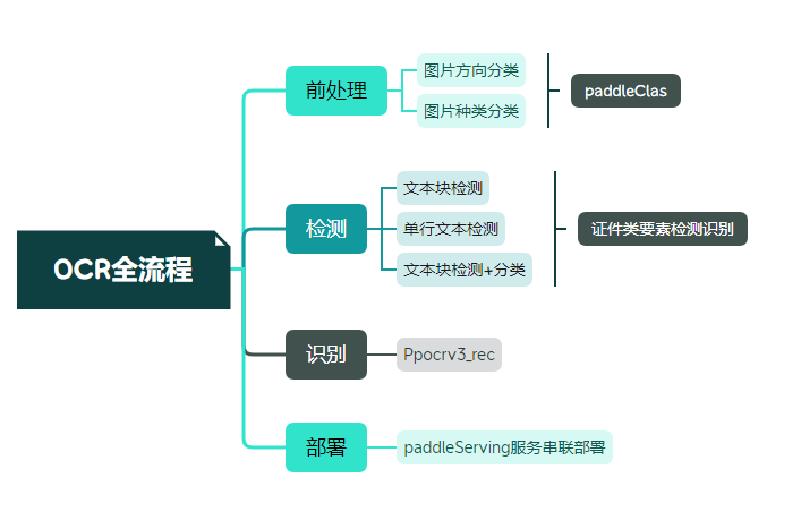

-## 3. OCR技术拆解

-

-### 3.1技术流程

-

-

-

-

-### 3.2 OCR技术拆解---卡证分类

-

-#### 卡证分类:数据、模型准备

-

-

-A 使用爬虫获取无标注数据,将相同类别的放在同一文件夹下,文件名从0开始命名。具体格式如下图所示。

-

- 注:卡证类数据,建议每个类别数据量在500张以上

-

-

-

-B 一行命令生成标签文件

-

-```

-tree -r -i -f | grep -E "jpg|JPG|jpeg|JPEG|png|PNG|webp" | awk -F "/" '{print $0" "$2}' > train_list.txt

-```

-

-C [下载预训练模型 ](https://github.com/PaddlePaddle/PaddleClas/blob/release/2.4/docs/zh_CN/models/PP-LCNet.md)

-

-

-

-#### 卡证分类---修改配置文件

-

-

-配置文件主要修改三个部分:

-

- 全局参数:预训练模型路径/训练轮次/图像尺寸

-

- 模型结构:分类数

-

- 数据处理:训练/评估数据路径

-

-

-

-

-#### 卡证分类---训练

-

-

-指定配置文件启动训练:

-

-```

-!python /home/aistudio/work/PaddleClas/tools/train.py -c /home/aistudio/work/PaddleClas/ppcls/configs/PULC/text_image_orientation/PPLCNet_x1_0.yaml

-```

-

-

- 注:日志中显示了训练结果和评估结果(训练时可以设置固定轮数评估一次)

-

-

-### 3.2 OCR技术拆解---卡证识别

-

-卡证识别(以身份证检测为例)

-存在的困难及问题:

-

- * 在自然场景下,由于各种拍摄设备以及光线、角度不同等影响导致实际得到的证件影像千差万别。

-

- * 如何快速提取需要的关键信息

-

- * 多行的文本信息,检测结果如何正确拼接

-

-

-

-

-

-* OCR技术拆解---OCR工具库

-

- PaddleOCR是一个丰富、领先且实用的OCR工具库,助力开发者训练出更好的模型并应用落地

-

-

-身份证识别:用现有的方法识别

-

-

-

-

-

-

-#### 身份证识别:检测+分类

-

-> 方法:基于现有的dbnet检测模型,加入分类方法。检测同时进行分类,从一定程度上优化识别流程

-

-

-

-

-

-

-#### 数据标注

-

-使用PaddleOCRLable进行快速标注

-

-

-

-

-* 修改PPOCRLabel.py,将下图中的kie参数设置为True

-

-

-

-

-

-* 数据标注踩坑分享

-

-

-

- 注:两者只有标注有差别,训练参数数据集都相同

-

-## 4 . 项目实践

-

-AIStudio项目链接:[快速构建卡证类OCR](https://aistudio.baidu.com/aistudio/projectdetail/4459116)

-

-### 4.1 环境准备

-

-1)拉取[paddleocr](https://github.com/PaddlePaddle/PaddleOCR)项目,如果从github上拉取速度慢可以选择从gitee上获取。

-```

-!git clone https://github.com/PaddlePaddle/PaddleOCR.git -b release/2.6 /home/aistudio/work/

-```

-

-2)获取并解压预训练模型,如果要使用其他模型可以从模型库里自主选择合适模型。

-```

-!wget -P work/pre_trained/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar

-!tar -vxf /home/aistudio/work/pre_trained/ch_PP-OCRv3_det_distill_train.tar -C /home/aistudio/work/pre_trained

-```

-3) 安装必要依赖

-```

-!pip install -r /home/aistudio/work/requirements.txt

-```

-

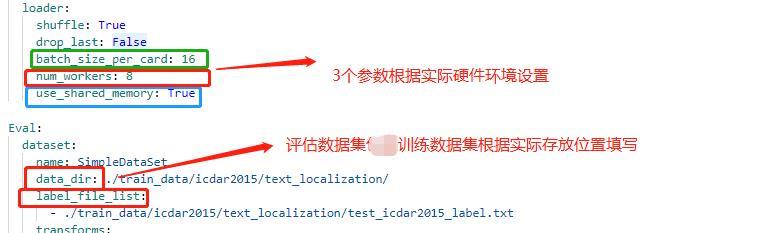

-### 4.2 配置文件修改

-

-修改配置文件 *work/configs/det/detmv3db.yml*

-

-具体修改说明如下:

-

-

-

- 注:在上述的配置文件的Global变量中需要添加以下两个参数:

-

- label_list 为标签表

- num_classes 为分类数

- 上述两个参数根据实际的情况配置即可

-

-

-

-

-其中lable_list内容如下例所示,***建议第一个参数设置为 background,不要设置为实际要提取的关键信息种类***:

-

-

-

-配置文件中的其他设置说明

-

-

-

-

-

-

-

-

-

-

-### 4.3 代码修改

-

-

-#### 4.3.1 数据读取

-

-

-

-* 修改 PaddleOCR/ppocr/data/imaug/label_ops.py中的DetLabelEncode

-

-

-```python

-class DetLabelEncode(object):

-

- # 修改检测标签的编码处,新增了参数分类数:num_classes,重写初始化方法,以及分类标签的读取

-

- def __init__(self, label_list, num_classes=8, **kwargs):

- self.num_classes = num_classes

- self.label_list = []

- if label_list:

- if isinstance(label_list, str):

- with open(label_list, 'r+', encoding='utf-8') as f:

- for line in f.readlines():

- self.label_list.append(line.replace("\n", ""))

- else:

- self.label_list = label_list

- else:

- assert ' please check label_list whether it is none or config is right'

-

- if num_classes != len(self.label_list): # 校验分类数和标签的一致性

- assert 'label_list length is not equal to the num_classes'

-

- def __call__(self, data):

- label = data['label']

- label = json.loads(label)

- nBox = len(label)

- boxes, txts, txt_tags, classes = [], [], [], []

- for bno in range(0, nBox):

- box = label[bno]['points']

- txt = label[bno]['key_cls'] # 此处将kie中的参数作为分类读取

- boxes.append(box)

- txts.append(txt)

-

- if txt in ['*', '###']:

- txt_tags.append(True)

- if self.num_classes > 1:

- classes.append(-2)

- else:

- txt_tags.append(False)

- if self.num_classes > 1: # 将KIE内容的key标签作为分类标签使用

- classes.append(int(self.label_list.index(txt)))

-

- if len(boxes) == 0:

-

- return None

- boxes = self.expand_points_num(boxes)

- boxes = np.array(boxes, dtype=np.float32)

- txt_tags = np.array(txt_tags, dtype=np.bool_)

- classes = classes

- data['polys'] = boxes

- data['texts'] = txts

- data['ignore_tags'] = txt_tags

- if self.num_classes > 1:

- data['classes'] = classes

- return data

-```

-

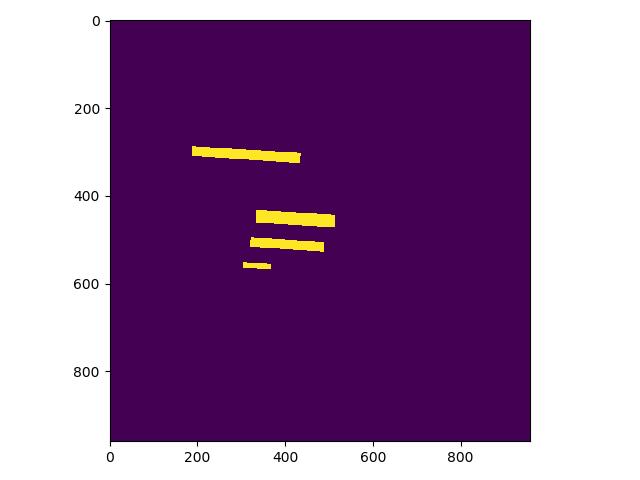

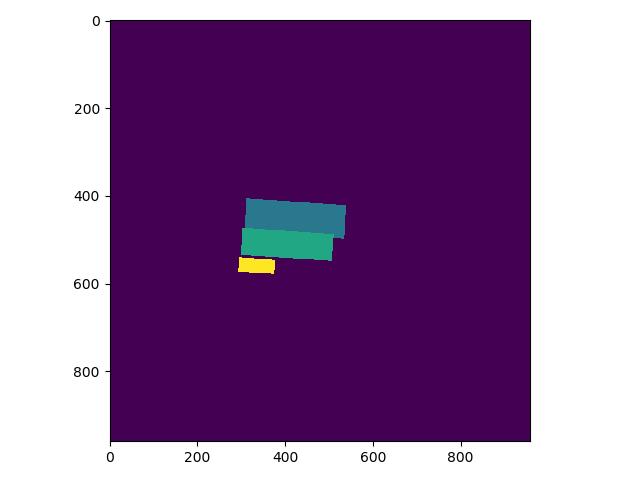

-* 修改 PaddleOCR/ppocr/data/imaug/make_shrink_map.py中的MakeShrinkMap类。这里需要注意的是,如果我们设置的label_list中的第一个参数为要检测的信息那么会得到如下的mask,

-

-举例说明:

-这是检测的mask图,图中有四个mask那么实际对应的分类应该是4类

-

-

-

-

-

-label_list中第一个为关键分类,则得到的分类Mask实际如下,与上图相比,少了一个box:

-

-

-

-

-

-```python

-class MakeShrinkMap(object):

- r'''

- Making binary mask from detection data with ICDAR format.

- Typically following the process of class `MakeICDARData`.

- '''

-

- def __init__(self, min_text_size=8, shrink_ratio=0.4, num_classes=8, **kwargs):

- self.min_text_size = min_text_size

- self.shrink_ratio = shrink_ratio

- self.num_classes = num_classes # 添加了分类

-

- def __call__(self, data):

- image = data['image']

- text_polys = data['polys']

- ignore_tags = data['ignore_tags']

- if self.num_classes > 1:

- classes = data['classes']

-

- h, w = image.shape[:2]

- text_polys, ignore_tags = self.validate_polygons(text_polys,

- ignore_tags, h, w)

- gt = np.zeros((h, w), dtype=np.float32)

- mask = np.ones((h, w), dtype=np.float32)

- gt_class = np.zeros((h, w), dtype=np.float32) # 新增分类

- for i in range(len(text_polys)):

- polygon = text_polys[i]

- height = max(polygon[:, 1]) - min(polygon[:, 1])

- width = max(polygon[:, 0]) - min(polygon[:, 0])

- if ignore_tags[i] or min(height, width) < self.min_text_size:

- cv2.fillPoly(mask,

- polygon.astype(np.int32)[np.newaxis, :, :], 0)

- ignore_tags[i] = True

- else:

- polygon_shape = Polygon(polygon)

- subject = [tuple(l) for l in polygon]

- padding = pyclipper.PyclipperOffset()

- padding.AddPath(subject, pyclipper.JT_ROUND,

- pyclipper.ET_CLOSEDPOLYGON)

- shrinked = []

-

- # Increase the shrink ratio every time we get multiple polygon returned back

- possible_ratios = np.arange(self.shrink_ratio, 1,

- self.shrink_ratio)

- np.append(possible_ratios, 1)

- for ratio in possible_ratios:

- distance = polygon_shape.area * (

- 1 - np.power(ratio, 2)) / polygon_shape.length

- shrinked = padding.Execute(-distance)

- if len(shrinked) == 1:

- break

-

- if shrinked == []:

- cv2.fillPoly(mask,

- polygon.astype(np.int32)[np.newaxis, :, :], 0)

- ignore_tags[i] = True

- continue

-

- for each_shirnk in shrinked:

- shirnk = np.array(each_shirnk).reshape(-1, 2)

- cv2.fillPoly(gt, [shirnk.astype(np.int32)], 1)

- if self.num_classes > 1: # 绘制分类的mask

- cv2.fillPoly(gt_class, polygon.astype(np.int32)[np.newaxis, :, :], classes[i])

-

-

- data['shrink_map'] = gt

-

- if self.num_classes > 1:

- data['class_mask'] = gt_class

-

- data['shrink_mask'] = mask

- return data

-```

-

-由于在训练数据中会对数据进行resize设置,yml中的操作为:EastRandomCropData,所以需要修改PaddleOCR/ppocr/data/imaug/random_crop_data.py中的EastRandomCropData

-

-

-```python

-class EastRandomCropData(object):

- def __init__(self,

- size=(640, 640),

- max_tries=10,

- min_crop_side_ratio=0.1,

- keep_ratio=True,

- num_classes=8,

- **kwargs):

- self.size = size

- self.max_tries = max_tries

- self.min_crop_side_ratio = min_crop_side_ratio

- self.keep_ratio = keep_ratio

- self.num_classes = num_classes

-

- def __call__(self, data):

- img = data['image']

- text_polys = data['polys']

- ignore_tags = data['ignore_tags']

- texts = data['texts']

- if self.num_classes > 1:

- classes = data['classes']

- all_care_polys = [

- text_polys[i] for i, tag in enumerate(ignore_tags) if not tag

- ]

- # 计算crop区域

- crop_x, crop_y, crop_w, crop_h = crop_area(

- img, all_care_polys, self.min_crop_side_ratio, self.max_tries)

- # crop 图片 保持比例填充

- scale_w = self.size[0] / crop_w

- scale_h = self.size[1] / crop_h

- scale = min(scale_w, scale_h)

- h = int(crop_h * scale)

- w = int(crop_w * scale)

- if self.keep_ratio:

- padimg = np.zeros((self.size[1], self.size[0], img.shape[2]),

- img.dtype)

- padimg[:h, :w] = cv2.resize(

- img[crop_y:crop_y + crop_h, crop_x:crop_x + crop_w], (w, h))

- img = padimg

- else:

- img = cv2.resize(

- img[crop_y:crop_y + crop_h, crop_x:crop_x + crop_w],

- tuple(self.size))

- # crop 文本框

- text_polys_crop = []

- ignore_tags_crop = []

- texts_crop = []

- classes_crop = []

- for poly, text, tag,class_index in zip(text_polys, texts, ignore_tags,classes):

- poly = ((poly - (crop_x, crop_y)) * scale).tolist()

- if not is_poly_outside_rect(poly, 0, 0, w, h):

- text_polys_crop.append(poly)

- ignore_tags_crop.append(tag)

- texts_crop.append(text)

- if self.num_classes > 1:

- classes_crop.append(class_index)

- data['image'] = img

- data['polys'] = np.array(text_polys_crop)

- data['ignore_tags'] = ignore_tags_crop

- data['texts'] = texts_crop

- if self.num_classes > 1:

- data['classes'] = classes_crop

- return data

-```

-

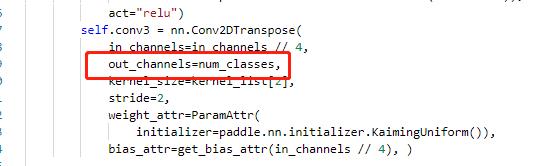

-#### 4.3.2 head修改

-

-

-

-主要修改 ppocr/modeling/heads/det_db_head.py,将Head类中的最后一层的输出修改为实际的分类数,同时在DBHead中新增分类的head。

-

-

-

-

-

-#### 4.3.3 修改loss

-

-

-修改PaddleOCR/ppocr/losses/det_db_loss.py中的DBLoss类,分类采用交叉熵损失函数进行计算。

-

-

-

-

-#### 4.3.4 后处理

-

-

-

-由于涉及到eval以及后续推理能否正常使用,我们需要修改后处理的相关代码,修改位置 PaddleOCR/ppocr/postprocess/db_postprocess.py中的DBPostProcess类

-

-

-```python

-class DBPostProcess(object):

- """

- The post process for Differentiable Binarization (DB).

- """

-

- def __init__(self,

- thresh=0.3,

- box_thresh=0.7,

- max_candidates=1000,

- unclip_ratio=2.0,

- use_dilation=False,

- score_mode="fast",

- **kwargs):

- self.thresh = thresh

- self.box_thresh = box_thresh

- self.max_candidates = max_candidates

- self.unclip_ratio = unclip_ratio

- self.min_size = 3

- self.score_mode = score_mode

- assert score_mode in [

- "slow", "fast"

- ], "Score mode must be in [slow, fast] but got: {}".format(score_mode)

-

- self.dilation_kernel = None if not use_dilation else np.array(

- [[1, 1], [1, 1]])

-

- def boxes_from_bitmap(self, pred, _bitmap, classes, dest_width, dest_height):

- """

- _bitmap: single map with shape (1, H, W),

- whose values are binarized as {0, 1}

- """

-

- bitmap = _bitmap

- height, width = bitmap.shape

-

- outs = cv2.findContours((bitmap * 255).astype(np.uint8), cv2.RETR_LIST,

- cv2.CHAIN_APPROX_SIMPLE)

- if len(outs) == 3:

- img, contours, _ = outs[0], outs[1], outs[2]

- elif len(outs) == 2:

- contours, _ = outs[0], outs[1]

-

- num_contours = min(len(contours), self.max_candidates)

-

- boxes = []

- scores = []

- class_indexes = []

- class_scores = []

- for index in range(num_contours):

- contour = contours[index]

- points, sside = self.get_mini_boxes(contour)

- if sside < self.min_size:

- continue

- points = np.array(points)

- if self.score_mode == "fast":

- score, class_index, class_score = self.box_score_fast(pred, points.reshape(-1, 2), classes)

- else:

- score, class_index, class_score = self.box_score_slow(pred, contour, classes)

- if self.box_thresh > score:

- continue

-

- box = self.unclip(points).reshape(-1, 1, 2)

- box, sside = self.get_mini_boxes(box)

- if sside < self.min_size + 2:

- continue

- box = np.array(box)

-

- box[:, 0] = np.clip(

- np.round(box[:, 0] / width * dest_width), 0, dest_width)

- box[:, 1] = np.clip(

- np.round(box[:, 1] / height * dest_height), 0, dest_height)

-

- boxes.append(box.astype(np.int16))

- scores.append(score)

-

- class_indexes.append(class_index)

- class_scores.append(class_score)

-

- if classes is None:

- return np.array(boxes, dtype=np.int16), scores

- else:

- return np.array(boxes, dtype=np.int16), scores, class_indexes, class_scores

-

- def unclip(self, box):

- unclip_ratio = self.unclip_ratio

- poly = Polygon(box)

- distance = poly.area * unclip_ratio / poly.length

- offset = pyclipper.PyclipperOffset()

- offset.AddPath(box, pyclipper.JT_ROUND, pyclipper.ET_CLOSEDPOLYGON)

- expanded = np.array(offset.Execute(distance))

- return expanded

-

- def get_mini_boxes(self, contour):

- bounding_box = cv2.minAreaRect(contour)

- points = sorted(list(cv2.boxPoints(bounding_box)), key=lambda x: x[0])

-

- index_1, index_2, index_3, index_4 = 0, 1, 2, 3

- if points[1][1] > points[0][1]:

- index_1 = 0

- index_4 = 1

- else:

- index_1 = 1

- index_4 = 0

- if points[3][1] > points[2][1]:

- index_2 = 2

- index_3 = 3

- else:

- index_2 = 3

- index_3 = 2

-

- box = [

- points[index_1], points[index_2], points[index_3], points[index_4]

- ]

- return box, min(bounding_box[1])

-

- def box_score_fast(self, bitmap, _box, classes):

- '''

- box_score_fast: use bbox mean score as the mean score

- '''

- h, w = bitmap.shape[:2]

- box = _box.copy()

- xmin = np.clip(np.floor(box[:, 0].min()).astype(np.int32), 0, w - 1)

- xmax = np.clip(np.ceil(box[:, 0].max()).astype(np.int32), 0, w - 1)

- ymin = np.clip(np.floor(box[:, 1].min()).astype(np.int32), 0, h - 1)

- ymax = np.clip(np.ceil(box[:, 1].max()).astype(np.int32), 0, h - 1)

-

- mask = np.zeros((ymax - ymin + 1, xmax - xmin + 1), dtype=np.uint8)

- box[:, 0] = box[:, 0] - xmin

- box[:, 1] = box[:, 1] - ymin

- cv2.fillPoly(mask, box.reshape(1, -1, 2).astype(np.int32), 1)

-

- if classes is None:

- return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], None, None

- else:

- k = 999

- class_mask = np.full((ymax - ymin + 1, xmax - xmin + 1), k, dtype=np.int32)

-

- cv2.fillPoly(class_mask, box.reshape(1, -1, 2).astype(np.int32), 0)

- classes = classes[ymin:ymax + 1, xmin:xmax + 1]

-

- new_classes = classes + class_mask

- a = new_classes.reshape(-1)

- b = np.where(a >= k)

- classes = np.delete(a, b[0].tolist())

-

- class_index = np.argmax(np.bincount(classes))

- class_score = np.sum(classes == class_index) / len(classes)

-

- return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], class_index, class_score

-

- def box_score_slow(self, bitmap, contour, classes):

- """

- box_score_slow: use polyon mean score as the mean score

- """

- h, w = bitmap.shape[:2]

- contour = contour.copy()

- contour = np.reshape(contour, (-1, 2))

-

- xmin = np.clip(np.min(contour[:, 0]), 0, w - 1)

- xmax = np.clip(np.max(contour[:, 0]), 0, w - 1)

- ymin = np.clip(np.min(contour[:, 1]), 0, h - 1)

- ymax = np.clip(np.max(contour[:, 1]), 0, h - 1)

-

- mask = np.zeros((ymax - ymin + 1, xmax - xmin + 1), dtype=np.uint8)

-

- contour[:, 0] = contour[:, 0] - xmin

- contour[:, 1] = contour[:, 1] - ymin

-

- cv2.fillPoly(mask, contour.reshape(1, -1, 2).astype(np.int32), 1)

-

- if classes is None:

- return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], None, None

- else:

- k = 999

- class_mask = np.full((ymax - ymin + 1, xmax - xmin + 1), k, dtype=np.int32)

-

- cv2.fillPoly(class_mask, contour.reshape(1, -1, 2).astype(np.int32), 0)

- classes = classes[ymin:ymax + 1, xmin:xmax + 1]

-

- new_classes = classes + class_mask

- a = new_classes.reshape(-1)

- b = np.where(a >= k)

- classes = np.delete(a, b[0].tolist())

-

- class_index = np.argmax(np.bincount(classes))

- class_score = np.sum(classes == class_index) / len(classes)

-

- return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], class_index, class_score

-

- def __call__(self, outs_dict, shape_list):

- pred = outs_dict['maps']

- if isinstance(pred, paddle.Tensor):

- pred = pred.numpy()

- pred = pred[:, 0, :, :]

- segmentation = pred > self.thresh

-

- if "classes" in outs_dict:

- classes = outs_dict['classes']

- if isinstance(classes, paddle.Tensor):

- classes = classes.numpy()

- classes = classes[:, 0, :, :]

-

- else:

- classes = None

-

- boxes_batch = []

- for batch_index in range(pred.shape[0]):

- src_h, src_w, ratio_h, ratio_w = shape_list[batch_index]

- if self.dilation_kernel is not None:

- mask = cv2.dilate(

- np.array(segmentation[batch_index]).astype(np.uint8),

- self.dilation_kernel)

- else:

- mask = segmentation[batch_index]

-

- if classes is None:

- boxes, scores = self.boxes_from_bitmap(pred[batch_index], mask, None,

- src_w, src_h)

- boxes_batch.append({'points': boxes})

- else:

- boxes, scores, class_indexes, class_scores = self.boxes_from_bitmap(pred[batch_index], mask,

- classes[batch_index],

- src_w, src_h)

- boxes_batch.append({'points': boxes, "classes": class_indexes, "class_scores": class_scores})

-

- return boxes_batch

-```

-

-### 4.4. 模型启动

-

-在完成上述步骤后我们就可以正常启动训练

-

-```

-!python /home/aistudio/work/PaddleOCR/tools/train.py -c /home/aistudio/work/PaddleOCR/configs/det/det_mv3_db.yml

-```

-

-其他命令:

-```

-!python /home/aistudio/work/PaddleOCR/tools/eval.py -c /home/aistudio/work/PaddleOCR/configs/det/det_mv3_db.yml

-!python /home/aistudio/work/PaddleOCR/tools/infer_det.py -c /home/aistudio/work/PaddleOCR/configs/det/det_mv3_db.yml

-```

-模型推理

-```

-!python /home/aistudio/work/PaddleOCR/tools/infer/predict_det.py --image_dir="/home/aistudio/work/test_img/" --det_model_dir="/home/aistudio/work/PaddleOCR/output/infer"

-```

-

-## 5 总结

-

-1. 分类+检测在一定程度上能够缩短用时,具体的模型选取要根据业务场景恰当选择。

-2. 数据标注需要多次进行测试调整标注方法,一般进行检测模型微调,需要标注至少上百张。

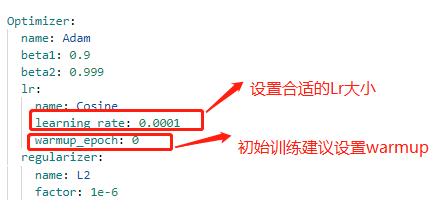

-3. 设置合理的batch_size以及resize大小,同时注意lr设置。

-

-

-## References

-

-1 https://github.com/PaddlePaddle/PaddleOCR

-

-2 https://github.com/PaddlePaddle/PaddleClas

-

-3 https://blog.csdn.net/YY007H/article/details/124491217

+# 快速构建卡证类OCR

+

+

+- [快速构建卡证类OCR](#快速构建卡证类ocr)

+ - [1. 金融行业卡证识别应用](#1-金融行业卡证识别应用)

+ - [1.1 金融行业中的OCR相关技术](#11-金融行业中的ocr相关技术)

+ - [1.2 金融行业中的卡证识别场景介绍](#12-金融行业中的卡证识别场景介绍)

+ - [1.3 OCR落地挑战](#13-ocr落地挑战)

+ - [2. 卡证识别技术解析](#2-卡证识别技术解析)

+ - [2.1 卡证分类模型](#21-卡证分类模型)

+ - [2.2 卡证识别模型](#22-卡证识别模型)

+ - [3. OCR技术拆解](#3-ocr技术拆解)

+ - [3.1技术流程](#31技术流程)

+ - [3.2 OCR技术拆解---卡证分类](#32-ocr技术拆解---卡证分类)

+ - [卡证分类:数据、模型准备](#卡证分类数据模型准备)

+ - [卡证分类---修改配置文件](#卡证分类---修改配置文件)

+ - [卡证分类---训练](#卡证分类---训练)

+ - [3.2 OCR技术拆解---卡证识别](#32-ocr技术拆解---卡证识别)

+ - [身份证识别:检测+分类](#身份证识别检测分类)

+ - [数据标注](#数据标注)

+ - [4 . 项目实践](#4--项目实践)

+ - [4.1 环境准备](#41-环境准备)

+ - [4.2 配置文件修改](#42-配置文件修改)

+ - [4.3 代码修改](#43-代码修改)

+ - [4.3.1 数据读取](#431-数据读取)

+ - [4.3.2 head修改](#432--head修改)

+ - [4.3.3 修改loss](#433-修改loss)

+ - [4.3.4 后处理](#434-后处理)

+ - [4.4. 模型启动](#44-模型启动)

+ - [5 总结](#5-总结)

+ - [References](#references)

+

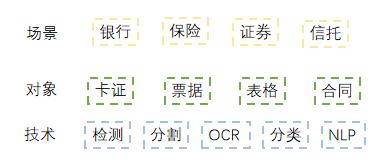

+## 1. 金融行业卡证识别应用

+

+### 1.1 金融行业中的OCR相关技术

+

+* 《“十四五”数字经济发展规划》指出,2020年我国数字经济核心产业增加值占GDP比重达7.8%,随着数字经济迈向全面扩展,到2025年该比例将提升至10%。

+

+* 在过去数年的跨越发展与积累沉淀中,数字金融、金融科技已在对金融业的重塑与再造中充分印证了其自身价值。

+

+* 以智能为目标,提升金融数字化水平,实现业务流程自动化,降低人力成本。

+

+

+

+

+

+

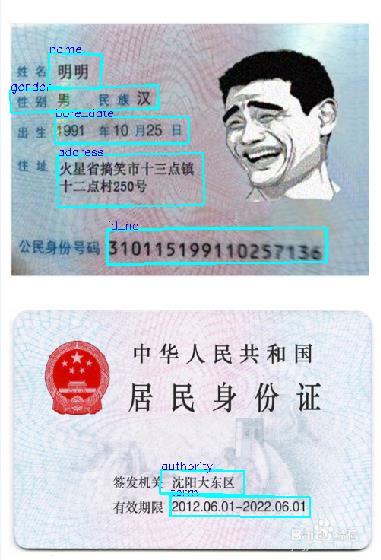

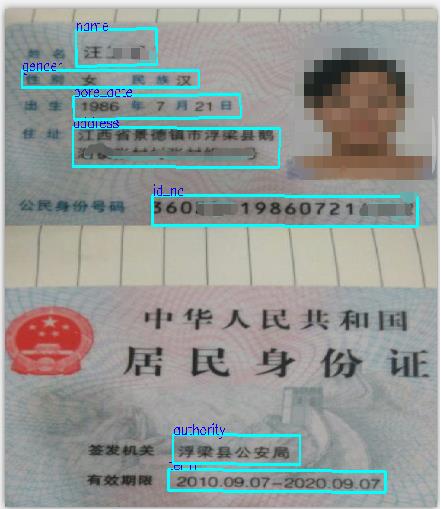

+### 1.2 金融行业中的卡证识别场景介绍

+

+应用场景:身份证、银行卡、营业执照、驾驶证等。

+

+应用难点:由于数据的采集来源多样,以及实际采集数据各种噪声:反光、褶皱、模糊、倾斜等各种问题干扰。

+

+

+

+

+

+### 1.3 OCR落地挑战

+

+

+

+

+

+

+

+

+## 2. 卡证识别技术解析

+

+

+

+

+

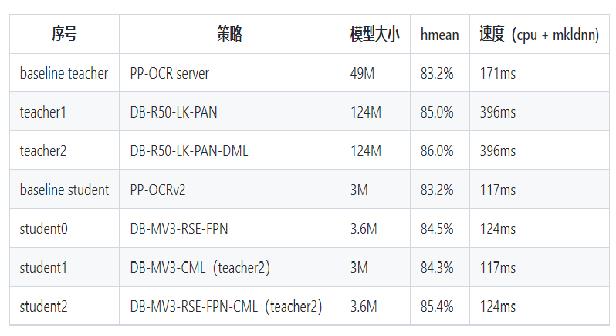

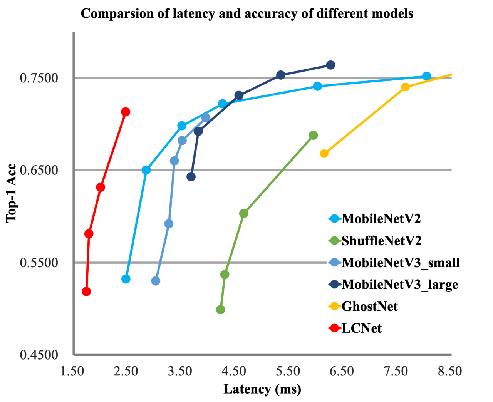

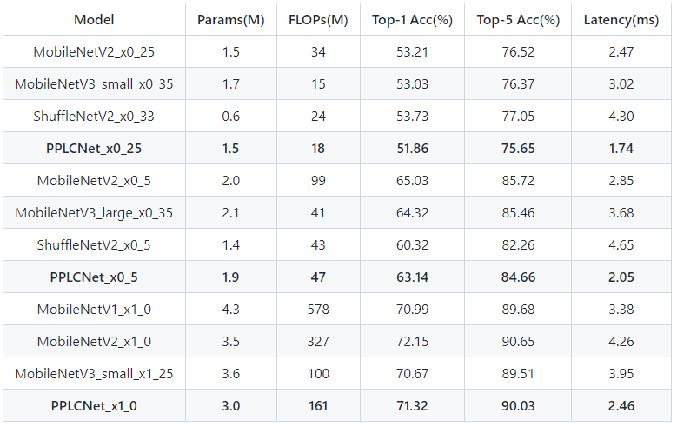

+### 2.1 卡证分类模型

+

+卡证分类:基于PPLCNet

+

+与其他轻量级模型相比在CPU环境下ImageNet数据集上的表现

+

+

+

+

+

+

+

+* 模型来自模型库PaddleClas,它是一个图像识别和图像分类任务的工具集,助力使用者训练出更好的视觉模型和应用落地。

+

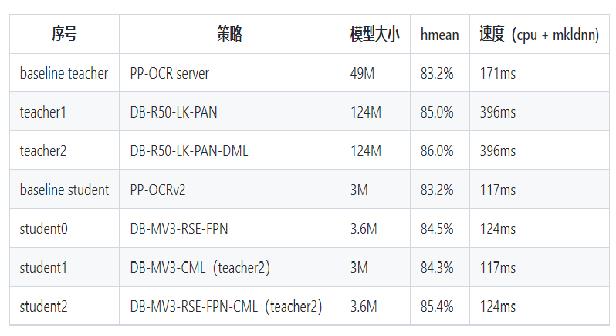

+<a name="22-卡证识别模型"></a>
+### 2.2 Card Recognition Model
+
+* Detection: DBNet; recognition: SVTR

+

+

+

+

+* PP-OCRv3 introduces a series of improvements to text detection and recognition, improving inference efficiency while maintaining accuracy.

+

+

+

+

+

+

+

+

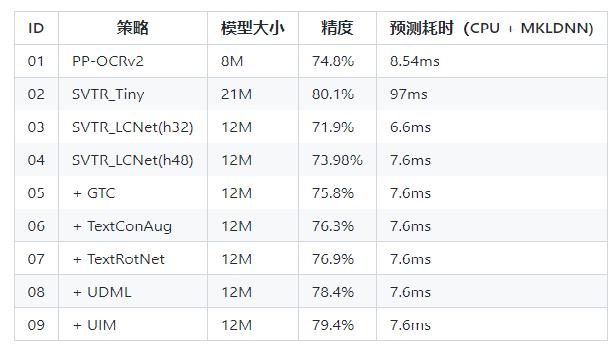

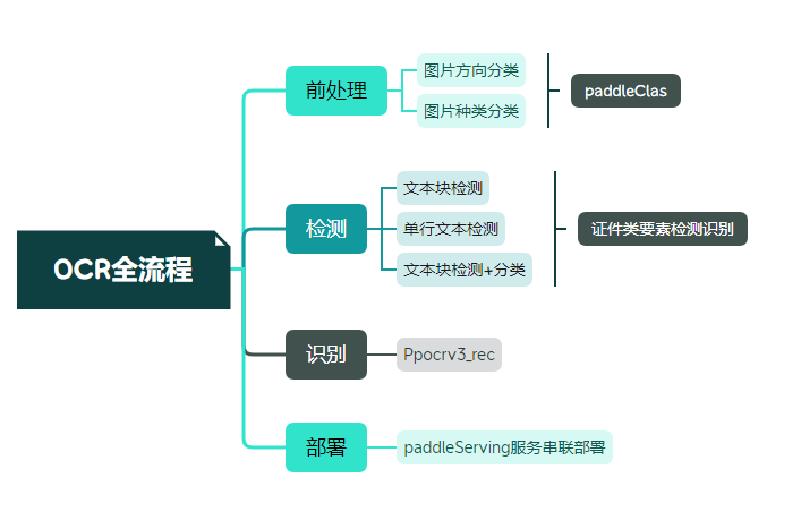

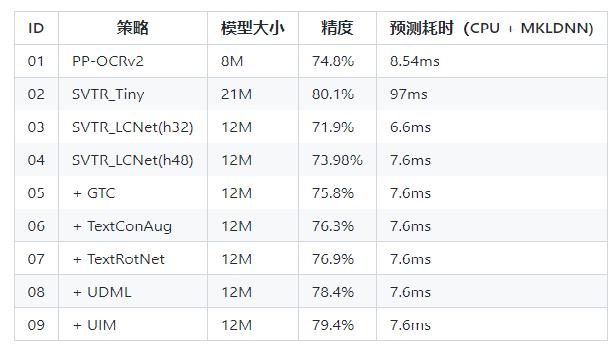

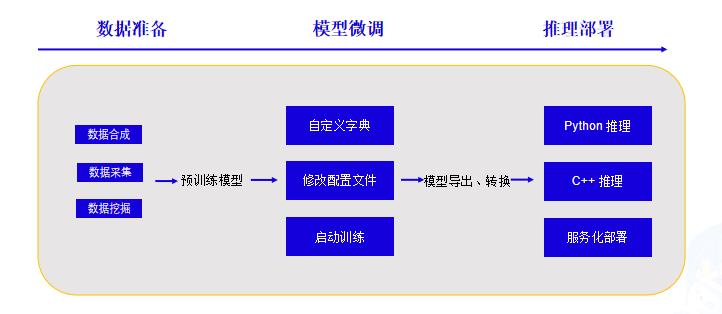

+<a name="3-ocr技术拆解"></a>
+## 3. Breaking Down the OCR Pipeline
+
+<a name="31技术流程"></a>
+### 3.1 Technical Workflow

+

+

+

+

+<a name="32-ocr技术拆解---卡证分类"></a>
+### 3.2 OCR Breakdown: Card Classification

+

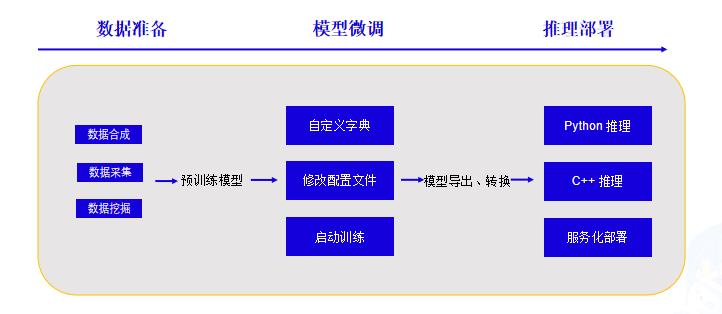

+<a name="卡证分类数据模型准备"></a>
+#### Card Classification: Data and Model Preparation

+

+

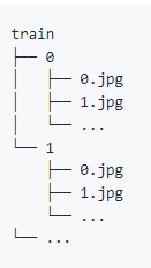

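+A. Use a web crawler to collect unlabeled data. Put images of the same class in the same folder, and name the folders with class indices starting from 0 (the folder name is used as the label in step B). The layout is shown in the figure below.
+
+ Note: for card and certificate data, we recommend more than 500 images per class.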

+

+

+

+B. Generate the label file with a single command, run from the dataset root:

+

+```

+tree -r -i -f | grep -E "jpg|JPG|jpeg|JPEG|png|PNG|webp" | awk -F "/" '{print $0" "$2}' > train_list.txt

+```
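Where `tree` is not available (for example on Windows), the same kind of `train_list.txt` can be produced in Python. The sketch below is an assumed equivalent of the shell pipeline above, not part of the original project: it walks a dataset root whose sub-folders are named by class index (`0`, `1`, ...) and writes one `relative/path label` line per image. The function name `build_train_list` is hypothetical, and the extension check is a case-insensitive version of the grep pattern.

```python
import os

# Image extensions taken from the grep pattern in the shell command (case-insensitive here).
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def build_train_list(root, out_file="train_list.txt"):
    """Write one "<relative path> <label>" line per image under `root`.

    Assumes the layout root/<class_index>/<image>, where the sub-folder
    name (0, 1, 2, ...) is used as the class label. Returns the lines.
    """
    lines = []
    for class_dir in sorted(os.listdir(root)):
        class_path = os.path.join(root, class_dir)
        if not os.path.isdir(class_path):
            continue  # skip stray files such as an existing train_list.txt
        for name in sorted(os.listdir(class_path)):
            if os.path.splitext(name)[1].lower() in IMAGE_EXTS:
                # "/" keeps the list file portable across platforms
                lines.append(f"{class_dir}/{name} {class_dir}")
    with open(os.path.join(root, out_file), "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")
    return lines
```

Unlike the shell version, paths are written without the leading `./`; both forms should resolve the same way when joined to the dataset root.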

+

+C. [Download the pretrained model](https://github.com/PaddlePaddle/PaddleClas/blob/release/2.4/docs/zh_CN/models/PP-LCNet.md)

+

+

+

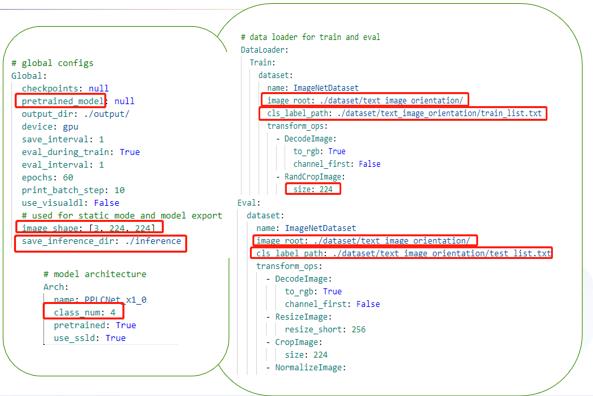

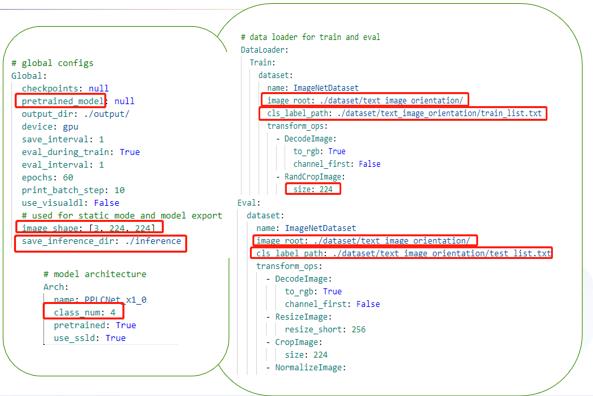

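+<a name="卡证分类---修改配置文件"></a>
+#### Card Classification: Modifying the Configuration File
+
+The configuration file needs changes in three main parts:
+
+* Global parameters: pretrained model path / number of training epochs / image size
+* Model structure: number of classes
+* Data processing: training/evaluation data paths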
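To make the three groups concrete, they can be written as dotted keys, similar to the `-o Key.SubKey=value` overrides that PaddleClas's training script accepts. The key names below (`Global.pretrained_model`, `Global.epochs`, `Global.image_shape`, `Arch.class_num`, `DataLoader.Train/Eval.dataset.image_root` and `cls_label_path`) follow typical PaddleClas configs but are assumptions; check them against your own yaml. The helper `apply_overrides` and every value shown are hypothetical.

```python
import copy


def apply_overrides(config, overrides):
    """Return a copy of a nested config dict with dotted-key overrides applied.

    Each key such as "Arch.class_num" is split on "." and walked down the
    nested dicts; missing intermediate levels are created as empty dicts.
    """
    cfg = copy.deepcopy(config)
    for dotted_key, value in overrides.items():
        *parents, leaf = dotted_key.split(".")
        node = cfg
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return cfg


# Hypothetical values for a 4-class card classifier; adjust to your data.
CARD_CLS_OVERRIDES = {
    # 1) Global parameters: pretrained model path / epochs / image size
    "Global.pretrained_model": "./pretrained/PPLCNet_x1_0_pretrained",
    "Global.epochs": 30,
    "Global.image_shape": [3, 224, 224],
    # 2) Model structure: number of classes
    "Arch.class_num": 4,
    # 3) Data processing: training and evaluation data paths
    "DataLoader.Train.dataset.image_root": "./dataset/",
    "DataLoader.Train.dataset.cls_label_path": "./dataset/train_list.txt",
    "DataLoader.Eval.dataset.image_root": "./dataset/",
    "DataLoader.Eval.dataset.cls_label_path": "./dataset/val_list.txt",
}
```

Applied to a config loaded from yaml, this changes only the listed fields and leaves everything else (optimizer, transforms, and so on) as it was.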

+

+

+

+

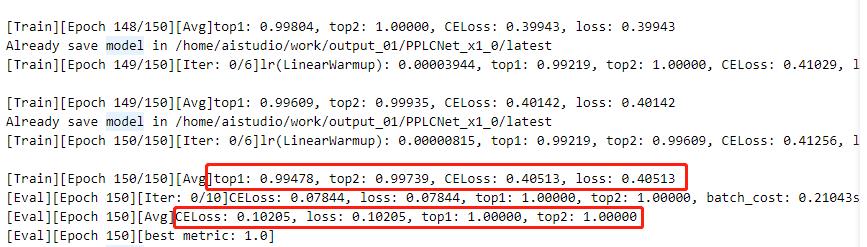

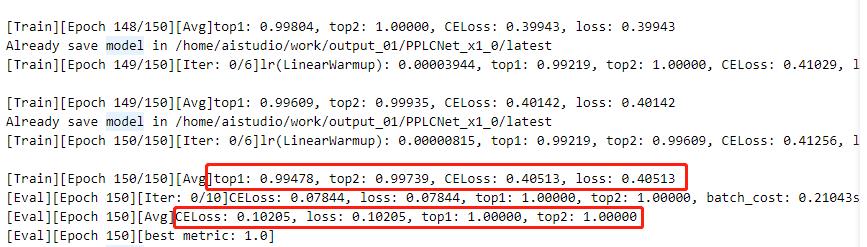

+<a name="卡证分类---训练"></a>
+#### Card Classification: Training
+
+Launch training with the specified configuration file:

+

+```

+!python /home/aistudio/work/PaddleClas/tools/train.py -c /home/aistudio/work/PaddleClas/ppcls/configs/PULC/text_image_orientation/PPLCNet_x1_0.yaml

+```

+

+

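+ Note: the log shows both training and evaluation results (during training you can configure evaluation to run once every fixed number of epochs).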

+

+

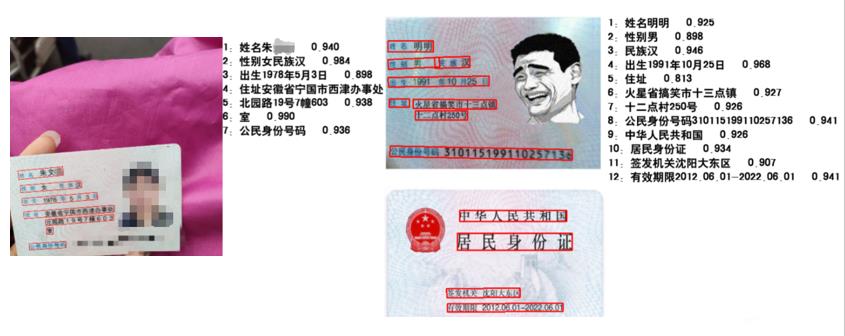

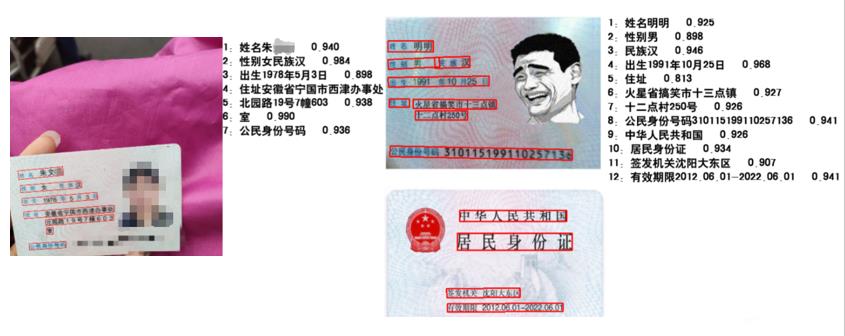

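+<a name="32-ocr技术拆解---卡证识别"></a>
+### 3.3 OCR Breakdown: Card Recognition
+
+Card recognition (using ID card detection as an example) faces the following difficulties:
+
+* In natural scenes, differences in capture devices, lighting, shooting angle and so on make the resulting document images vary enormously.
+
+* How to extract the required key information quickly.
+
+* How to correctly join the detection results of multi-line text.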

+

+

+

+

+

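+* OCR breakdown: the OCR toolkit
+
+  PaddleOCR is a rich, leading and practical OCR toolkit that helps developers train better models and put them into production.
+
+
+ID card recognition with the existing approach: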

+

+

+

+

+

+

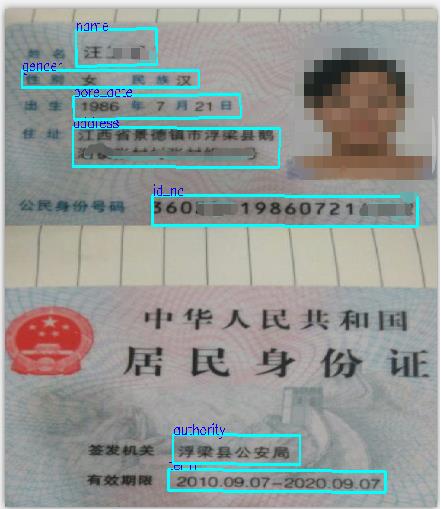

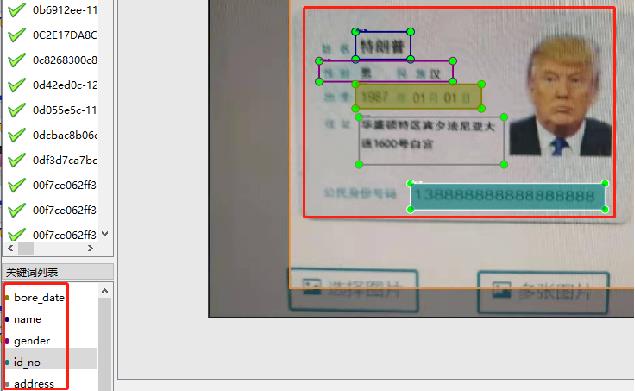

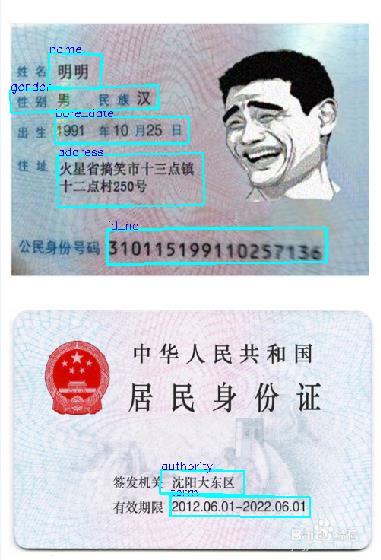

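+<a name="身份证识别检测分类"></a>
+#### ID Card Recognition: Detection + Classification
+
+> Method: starting from the existing DBNet detection model, add a classification branch so that each text region is classified while it is being detected. This streamlines the recognition pipeline to some extent.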
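Downstream, each detected box then carries a class. The modified `DBPostProcess` in section 4.3.4 returns, for each image, a dict with the keys `points`, `classes` and `class_scores`. As a hypothetical illustration of how that output could feed key-information extraction, the sketch below maps class indices to names from `label_list`. It skips index 0 (assumed to be `background`, as recommended in section 4.2) and skips boxes whose class score is below an arbitrary 0.5 threshold. The function name and the threshold are our own choices.

```python
def group_boxes_by_field(result, label_list, min_class_score=0.5):
    """Group detected boxes by key-field name.

    `result` is one element of the list returned by the modified
    DBPostProcess: {"points": boxes, "classes": [...], "class_scores": [...]}.
    Boxes of class 0 (assumed "background") or with a class score below
    `min_class_score` are dropped.
    """
    fields = {}
    for box, cls_idx, cls_score in zip(result["points"], result["classes"],
                                       result["class_scores"]):
        if cls_idx == 0 or cls_score < min_class_score:
            continue
        fields.setdefault(label_list[int(cls_idx)], []).append(box)
    return fields
```

Each group can then be cropped and passed to the recognition model, so the recognized text is already tied to a field such as "name" without any rule-based layout matching.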

+

+

+

+

+

+

+<a name="数据标注"></a>
+#### Data Annotation
+
+Use PPOCRLabel for fast annotation.

+

+

+

+

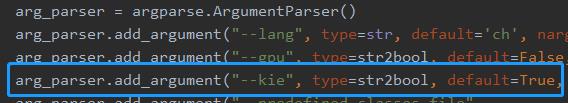

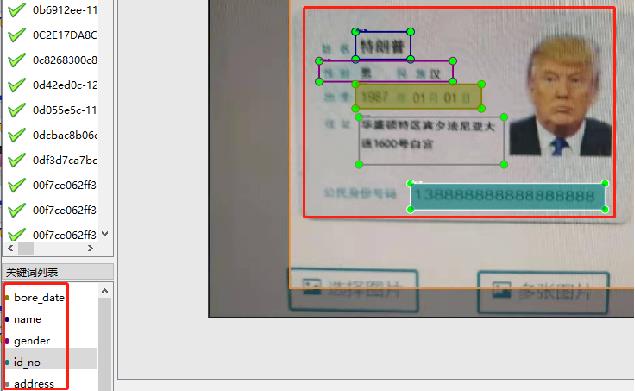

+* In PPOCRLabel.py, set the `kie` parameter shown in the figure below to True.

+

+

+

+

+

+* Lessons learned from data annotation

+

+

+

+ Note: the two results differ only in how the data was annotated; the training parameters and dataset are identical.
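With KIE mode enabled, each line of the exported detection label file should be an image path, a tab, and a JSON list of boxes, where each box has `transcription`, `points` and `key_cls`. The modified `DetLabelEncode` in section 4.3.1 reads `points` and `key_cls`. The hypothetical helper below parses one such line and assigns class indices the same way: `'*'`/`'###'` means "ignored" and gets class -2. The sample line used with it is made up for illustration.

```python
import json

IGNORE_TAGS = ("*", "###")


def parse_kie_label_line(line, label_list):
    """Parse "<image path>\\t<JSON list of boxes>" into (path, boxes, class ids).

    Mirrors the class assignment in the modified DetLabelEncode: boxes whose
    key_cls is '*' or '###' are ignored and get class -2; all others get
    label_list.index(key_cls).
    """
    path, json_part = line.rstrip("\n").split("\t", 1)
    boxes, class_ids = [], []
    for item in json.loads(json_part):
        boxes.append(item["points"])
        key = item["key_cls"]
        class_ids.append(-2 if key in IGNORE_TAGS else label_list.index(key))
    return path, boxes, class_ids
```

Running this over the whole label file before training is a quick way to catch a `key_cls` that is missing from `label_list`, which would otherwise surface as a `ValueError` from `list.index` inside the data loader.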

+

+<a name="4--项目实践"></a>
+## 4. Hands-On Project
+
+AI Studio project link: [Quickly Building OCR for Cards and Certificates](https://aistudio.baidu.com/aistudio/projectdetail/4459116)

+

+<a name="41-环境准备"></a>
+### 4.1 Environment Setup
+
+1) Clone the [PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR) project. If cloning from GitHub is slow, you can get it from Gitee instead.

+```

+!git clone https://github.com/PaddlePaddle/PaddleOCR.git -b release/2.6 /home/aistudio/work/

+```

+

+2) Download and extract the pretrained model. To use a different model, choose a suitable one from the model zoo.

+```

+!wget -P work/pre_trained/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar

+!tar -vxf /home/aistudio/work/pre_trained/ch_PP-OCRv3_det_distill_train.tar -C /home/aistudio/work/pre_trained

+```

+3) Install the required dependencies:

+```

+!pip install -r /home/aistudio/work/requirements.txt

+```

+

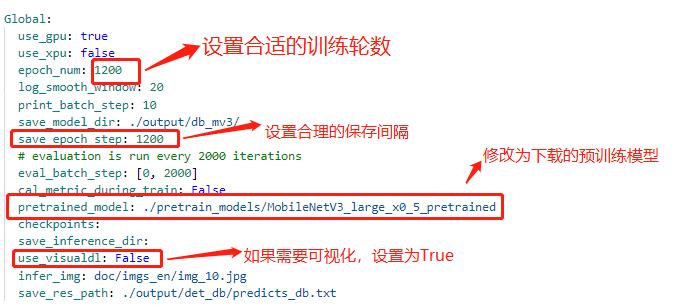

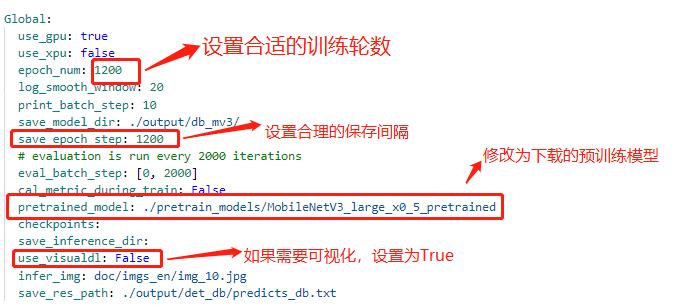

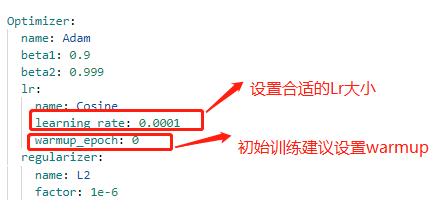

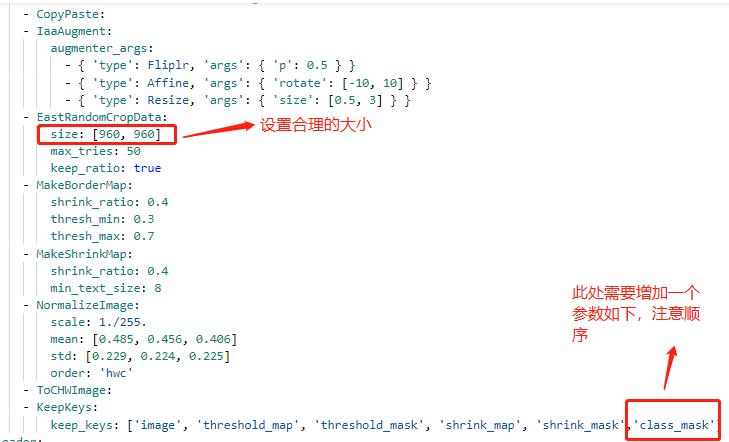

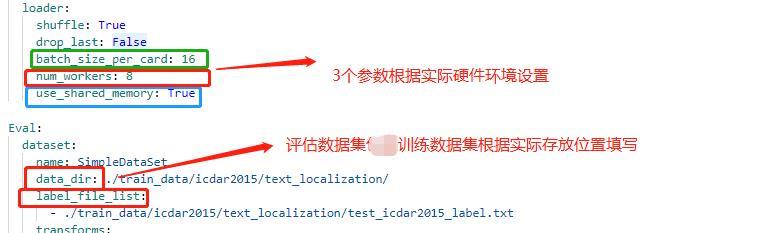

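+<a name="42-配置文件修改"></a>
+### 4.2 Modifying the Configuration File
+
+Modify the configuration file *work/configs/det/det_mv3_db.yml*.
+
+The specific changes are as follows: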

+

+

+

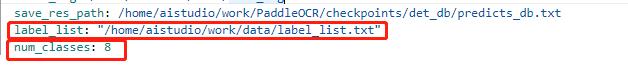

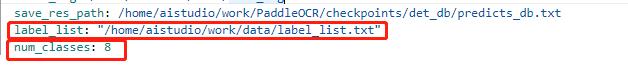

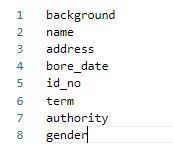

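+Note: add the following two parameters to the `Global` section of the configuration file above:
+
+* label_list: the label table
+* num_classes: the number of classes
+
+Set both according to your actual data.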

+

+

+

+

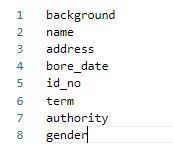

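+An example of the label_list content is shown below. ***We recommend setting the first entry to background rather than to one of the key-information classes you actually want to extract***: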
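The reason for putting `background` first can be seen in the code in section 4.3.1. The class mask drawn by `MakeShrinkMap` starts as all zeros, so class index 0 is indistinguishable from "no text here". A small check like the hypothetical one below can catch a misordered or mismatched label list before training starts. It assumes the one-label-per-line file format that the modified `DetLabelEncode` reads (blank lines are ignored here). The function name and messages are our own.

```python
def load_and_check_label_list(path, num_classes):
    """Read a one-label-per-line file and sanity-check it against num_classes.

    Raises ValueError if the number of labels differs from num_classes, or if
    the first entry is not "background" (index 0 coincides with the all-zero
    background of the class mask).
    """
    with open(path, "r", encoding="utf-8") as f:
        labels = [line.strip() for line in f if line.strip()]
    if len(labels) != num_classes:
        raise ValueError(
            f"label_list has {len(labels)} entries but num_classes={num_classes}")
    if labels[0] != "background":
        raise ValueError(f"first label should be 'background', got {labels[0]!r}")
    return labels
```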

+

+

+

+Notes on the other settings in the configuration file:

+

+

+

+

+

+

+

+

+

+

+<a name="43-代码修改"></a>
+### 4.3 Code Changes
+
+<a name="431-数据读取"></a>
+#### 4.3.1 Data Loading
+
+* Modify `DetLabelEncode` in PaddleOCR/ppocr/data/imaug/label_ops.py:

+

+

+```python

+import json
+
+import numpy as np
+
+
+class DetLabelEncode(object):
+
+    # Modified detection label encoder: adds the num_classes parameter,
+    # rewrites __init__, and reads a class label for every box.
+
+    def __init__(self, label_list, num_classes=8, **kwargs):
+        self.num_classes = num_classes
+        self.label_list = []
+        if label_list:
+            if isinstance(label_list, str):
+                # label_list is a file path: one label per line
+                with open(label_list, 'r', encoding='utf-8') as f:
+                    for line in f.readlines():
+                        self.label_list.append(line.replace("\n", ""))
+            else:
+                self.label_list = label_list
+        else:
+            raise ValueError(
+                'please check label_list whether it is none or config is right')
+
+        # the number of classes must match the label list
+        if num_classes != len(self.label_list):
+            raise ValueError('label_list length is not equal to the num_classes')
+
+    def __call__(self, data):
+        label = data['label']
+        label = json.loads(label)
+        nBox = len(label)
+        boxes, txts, txt_tags, classes = [], [], [], []
+        for bno in range(0, nBox):
+            box = label[bno]['points']
+            txt = label[bno]['key_cls']  # read the KIE key as the class label
+            boxes.append(box)
+            txts.append(txt)
+
+            if txt in ['*', '###']:
+                txt_tags.append(True)
+                if self.num_classes > 1:
+                    classes.append(-2)
+            else:
+                txt_tags.append(False)
+                if self.num_classes > 1:  # use the KIE key label as the class label
+                    classes.append(int(self.label_list.index(txt)))
+
+        if len(boxes) == 0:
+            return None
+        # expand_points_num() is kept unchanged from the original DetLabelEncode
+        boxes = self.expand_points_num(boxes)
+        boxes = np.array(boxes, dtype=np.float32)
+        txt_tags = np.array(txt_tags, dtype=np.bool_)
+        data['polys'] = boxes
+        data['texts'] = txts
+        data['ignore_tags'] = txt_tags
+        if self.num_classes > 1:
+            data['classes'] = classes
+        return data

+```

+

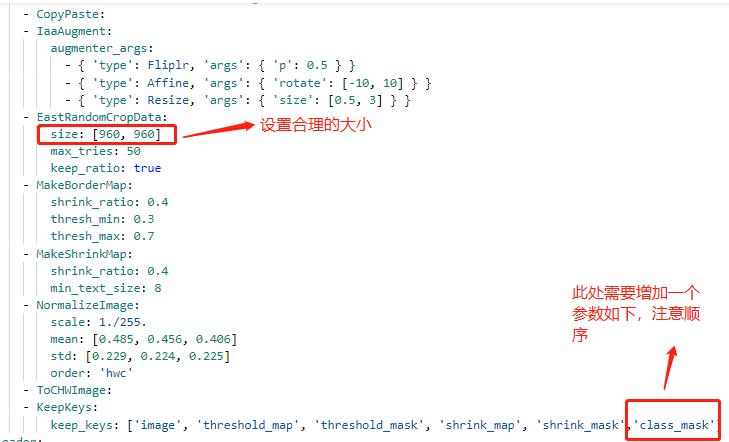

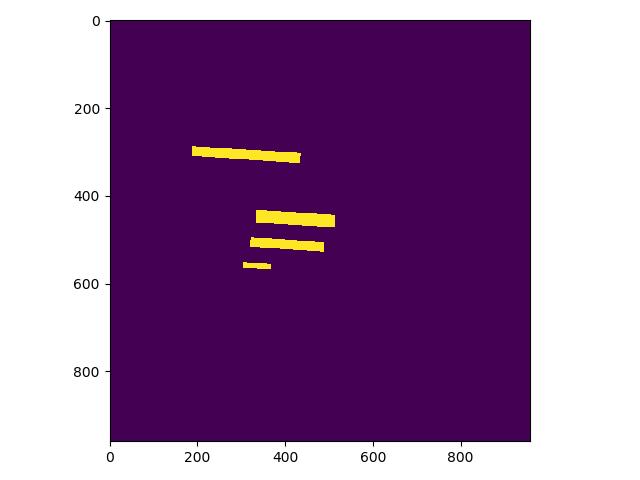

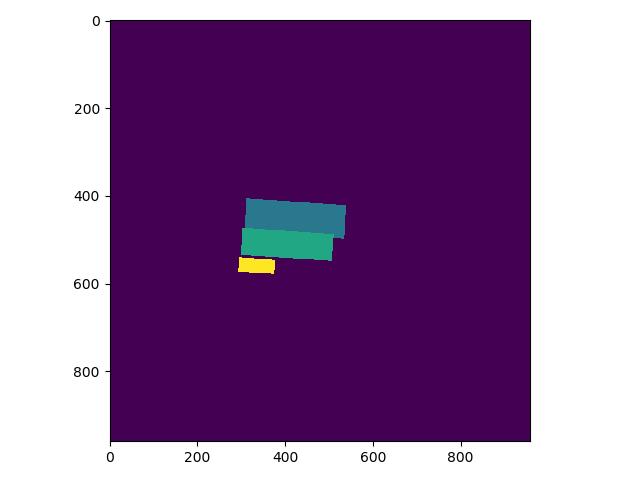

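+* Modify the `MakeShrinkMap` class in PaddleOCR/ppocr/data/imaug/make_shrink_map.py. Note that if the first entry of label_list is one of the key fields to be detected, you get the mask shown below.
+
+For example, this is the detection mask. It contains four masks, so there should be four corresponding classes: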

+

+

+

+

+

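+If the first entry of label_list is a key class, the resulting class mask actually looks like the following; compared with the figure above, one box is missing. This happens because the class mask starts as all zeros (background), so a box whose class index is 0 cannot be told apart from the background: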

+

+

+

+

+

+```python

+import cv2
+import numpy as np
+import pyclipper
+from shapely.geometry import Polygon
+
+
+class MakeShrinkMap(object):
+    r'''
+    Making binary mask from detection data with ICDAR format.
+    Typically following the process of class `MakeICDARData`.
+    '''
+
+    def __init__(self, min_text_size=8, shrink_ratio=0.4, num_classes=8, **kwargs):
+        self.min_text_size = min_text_size
+        self.shrink_ratio = shrink_ratio
+        self.num_classes = num_classes  # number of classes (new)
+
+    def __call__(self, data):
+        image = data['image']
+        text_polys = data['polys']
+        ignore_tags = data['ignore_tags']
+        if self.num_classes > 1:
+            classes = data['classes']
+
+        h, w = image.shape[:2]
+        # validate_polygons() is kept unchanged from the original MakeShrinkMap
+        text_polys, ignore_tags = self.validate_polygons(text_polys,
+                                                         ignore_tags, h, w)
+        gt = np.zeros((h, w), dtype=np.float32)
+        mask = np.ones((h, w), dtype=np.float32)
+        gt_class = np.zeros((h, w), dtype=np.float32)  # class mask (new), 0 = background
+        for i in range(len(text_polys)):
+            polygon = text_polys[i]
+            height = max(polygon[:, 1]) - min(polygon[:, 1])
+            width = max(polygon[:, 0]) - min(polygon[:, 0])
+            if ignore_tags[i] or min(height, width) < self.min_text_size:
+                cv2.fillPoly(mask,
+                             polygon.astype(np.int32)[np.newaxis, :, :], 0)
+                ignore_tags[i] = True
+            else:
+                polygon_shape = Polygon(polygon)
+                subject = [tuple(l) for l in polygon]
+                padding = pyclipper.PyclipperOffset()
+                padding.AddPath(subject, pyclipper.JT_ROUND,
+                                pyclipper.ET_CLOSEDPOLYGON)
+                shrinked = []
+
+                # Increase the shrink ratio every time we get multiple polygon returned back
+                possible_ratios = np.arange(self.shrink_ratio, 1,
+                                            self.shrink_ratio)
+                # np.append returns a new array, so assign the result
+                possible_ratios = np.append(possible_ratios, 1)
+                for ratio in possible_ratios:
+                    distance = polygon_shape.area * (
+                        1 - np.power(ratio, 2)) / polygon_shape.length
+                    shrinked = padding.Execute(-distance)
+                    if len(shrinked) == 1:
+                        break
+
+                if shrinked == []:
+                    cv2.fillPoly(mask,
+                                 polygon.astype(np.int32)[np.newaxis, :, :], 0)
+                    ignore_tags[i] = True
+                    continue
+
+                for each_shrink in shrinked:
+                    shrink = np.array(each_shrink).reshape(-1, 2)
+                    cv2.fillPoly(gt, [shrink.astype(np.int32)], 1)
+                if self.num_classes > 1:  # draw this box's class into the class mask
+                    cv2.fillPoly(gt_class,
+                                 polygon.astype(np.int32)[np.newaxis, :, :],
+                                 classes[i])
+
+        data['shrink_map'] = gt
+
+        if self.num_classes > 1:
+            data['class_mask'] = gt_class
+
+        data['shrink_mask'] = mask
+        return data

+```
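To see why a box with class index 0 "disappears" from the class mask, here is a NumPy-only sketch that mimics the class-mask part of `MakeShrinkMap`. It uses axis-aligned rectangles instead of `cv2.fillPoly`, a simplification; the real code fills arbitrary polygons. The helper names, rectangle coordinates and field names are hypothetical.

```python
import numpy as np


def draw_class_mask(shape, rects, class_ids):
    """Fill each (x0, y0, x1, y1) rectangle with its class id on a zero mask.

    Like gt_class in MakeShrinkMap, the mask starts at 0, so 0 also means
    "background".
    """
    mask = np.zeros(shape, dtype=np.float32)
    for (x0, y0, x1, y1), cls in zip(rects, class_ids):
        mask[y0:y1, x0:x1] = cls
    return mask


def visible_boxes(mask, rects):
    """Count rectangles whose pixels are distinguishable from background."""
    return sum(int(mask[y0:y1, x0:x1].max() > 0) for x0, y0, x1, y1 in rects)


rects = [(0, 0, 4, 2), (0, 3, 4, 5), (5, 0, 9, 2), (5, 3, 9, 5)]
# label_list = ["name", "sex", "birth", "id_number"]: "name" gets index 0
bad = draw_class_mask((6, 10), rects, [0, 1, 2, 3])
# label_list = ["background", "name", "sex", "birth", "id_number"]
good = draw_class_mask((6, 10), rects, [1, 2, 3, 4])
```

With four boxes, `visible_boxes(bad, rects)` gives 3 while `visible_boxes(good, rects)` gives 4, which matches the missing box in the figures above.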

+

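+Because the training data is cropped and resized (the `EastRandomCropData` operation in the yml), `EastRandomCropData` in PaddleOCR/ppocr/data/imaug/random_crop_data.py must also be modified so that the class labels are cropped together with the boxes: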

+

+

+```python

+import cv2
+import numpy as np
+
+
+class EastRandomCropData(object):
+    def __init__(self,
+                 size=(640, 640),
+                 max_tries=10,
+                 min_crop_side_ratio=0.1,
+                 keep_ratio=True,
+                 num_classes=8,
+                 **kwargs):
+        self.size = size
+        self.max_tries = max_tries
+        self.min_crop_side_ratio = min_crop_side_ratio
+        self.keep_ratio = keep_ratio
+        self.num_classes = num_classes
+
+    def __call__(self, data):
+        img = data['image']
+        text_polys = data['polys']
+        ignore_tags = data['ignore_tags']
+        texts = data['texts']
+        if self.num_classes > 1:
+            classes = data['classes']
+        else:
+            # placeholder so that the zip() below also works without classes
+            classes = [None] * len(text_polys)
+        all_care_polys = [
+            text_polys[i] for i, tag in enumerate(ignore_tags) if not tag
+        ]
+        # compute the crop region (crop_area() is defined in random_crop_data.py)
+        crop_x, crop_y, crop_w, crop_h = crop_area(
+            img, all_care_polys, self.min_crop_side_ratio, self.max_tries)
+        # crop the image, keeping the aspect ratio and padding the rest
+        scale_w = self.size[0] / crop_w
+        scale_h = self.size[1] / crop_h
+        scale = min(scale_w, scale_h)
+        h = int(crop_h * scale)
+        w = int(crop_w * scale)
+        if self.keep_ratio:
+            padimg = np.zeros((self.size[1], self.size[0], img.shape[2]),
+                              img.dtype)
+            padimg[:h, :w] = cv2.resize(
+                img[crop_y:crop_y + crop_h, crop_x:crop_x + crop_w], (w, h))
+            img = padimg
+        else:
+            img = cv2.resize(
+                img[crop_y:crop_y + crop_h, crop_x:crop_x + crop_w],
+                tuple(self.size))
+        # crop the text boxes; classes are filtered together with the boxes
+        text_polys_crop = []
+        ignore_tags_crop = []
+        texts_crop = []
+        classes_crop = []
+        for poly, text, tag, class_index in zip(text_polys, texts, ignore_tags,
+                                                classes):
+            poly = ((poly - (crop_x, crop_y)) * scale).tolist()
+            if not is_poly_outside_rect(poly, 0, 0, w, h):
+                text_polys_crop.append(poly)
+                ignore_tags_crop.append(tag)
+                texts_crop.append(text)
+                if self.num_classes > 1:
+                    classes_crop.append(class_index)
+        data['image'] = img
+        data['polys'] = np.array(text_polys_crop)
+        data['ignore_tags'] = ignore_tags_crop
+        data['texts'] = texts_crop
+        if self.num_classes > 1:
+            data['classes'] = classes_crop
+        return data

+```
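The essential point of this change is that `classes` must be filtered in lockstep with `polys`, `texts` and `ignore_tags`; otherwise the i-th class no longer belongs to the i-th box. The sketch below isolates that step in NumPy. `poly_outside_rect` is our own stand-in, modeled on the behavior of PaddleOCR's `is_poly_outside_rect`: a box is dropped only when it lies entirely to one side of the crop. The upstream implementation may differ in detail.

```python
import numpy as np


def poly_outside_rect(poly, x, y, w, h):
    """True if the polygon lies entirely left/right of or above/below the rect."""
    poly = np.asarray(poly)
    if poly[:, 0].max() < x or poly[:, 0].min() > x + w:
        return True
    if poly[:, 1].max() < y or poly[:, 1].min() > y + h:
        return True
    return False


def crop_boxes_with_classes(polys, classes, crop_x, crop_y, scale, w, h):
    """Shift/scale boxes into the crop and keep each class with its box."""
    kept_polys, kept_classes = [], []
    for poly, cls in zip(polys, classes):
        poly = (np.asarray(poly, dtype=np.float32) - (crop_x, crop_y)) * scale
        if not poly_outside_rect(poly, 0, 0, w, h):
            kept_polys.append(poly.tolist())
            kept_classes.append(cls)
    return kept_polys, kept_classes
```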

+

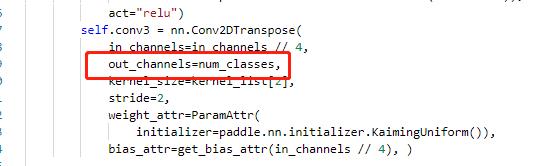

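+<a name="432--head修改"></a>
+#### 4.3.2 Modifying the Head
+
+The main changes are in ppocr/modeling/heads/det_db_head.py: change the output of the last layer of the `Head` class to the actual number of classes, and add a classification head to `DBHead`.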
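The head code itself is not shown. The post-processing in section 4.3.4 reads `outs_dict['classes']` and keeps channel 0 (`classes[:, 0, :, :]`), which suggests that at inference time the per-class logits are reduced to a single-channel map of class indices. The NumPy sketch below shows one plausible reduction under that assumption: logits of shape (N, num_classes, H, W) are reduced by an argmax over the class axis to (N, 1, H, W). It is not the author's implementation.

```python
import numpy as np


def logits_to_class_map(logits):
    """Reduce (N, C, H, W) class logits to an (N, 1, H, W) map of class ids.

    argmax runs over the class axis; the channel axis is re-added so that
    `classes[:, 0, :, :]` in DBPostProcess yields an (N, H, W) array.
    """
    return np.argmax(logits, axis=1)[:, np.newaxis, :, :].astype(np.int32)
```

Returning integer ids (rather than probabilities) keeps the post-processing simple, since `np.bincount` in the box-level vote only accepts non-negative integers.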

+

+

+

+

+

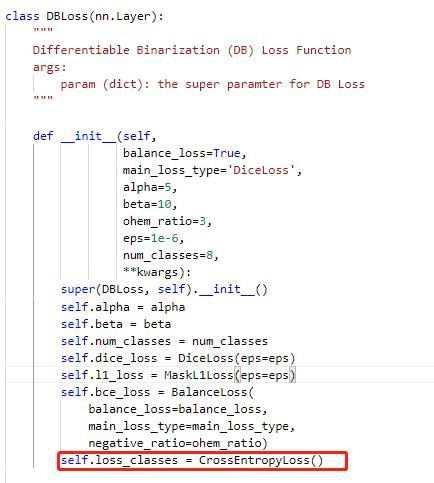

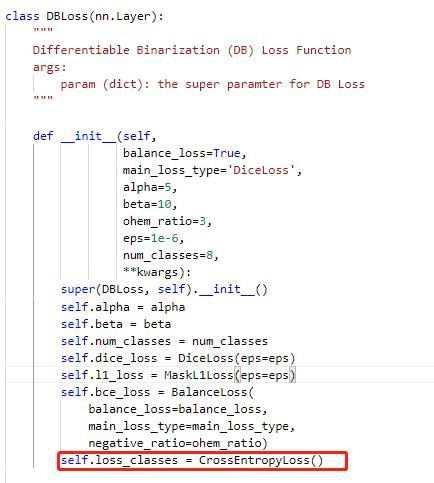

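+<a name="433-修改loss"></a>
+#### 4.3.3 Modifying the Loss
+
+Modify the `DBLoss` class in PaddleOCR/ppocr/losses/det_db_loss.py; the classification output is trained with a cross-entropy loss.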
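For reference, here is what the classification term computes, written as a NumPy sketch: a pixel-wise softmax cross-entropy between class logits of shape (N, C, H, W) and the `class_mask` produced by `MakeShrinkMap` (shape (N, H, W), integer class ids). Averaging over all pixels and the `weight` used to add it to the DB loss are assumptions. The author's actual `DBLoss` change (masking, weighting, framework calls) is not shown.

```python
import numpy as np


def pixel_cross_entropy(logits, class_mask):
    """Mean softmax cross-entropy over all pixels.

    logits:     (N, C, H, W) float array of raw class scores
    class_mask: (N, H, W) integer array of target class ids in [0, C)
    """
    # numerically stable log-softmax over the class axis
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # pick log p(target class) at every pixel -> shape (N, 1, H, W)
    target = class_mask.astype(np.int64)[:, np.newaxis, :, :]
    picked = np.take_along_axis(log_probs, target, axis=1)
    return float(-picked.mean())


def total_loss(db_loss, logits, class_mask, weight=1.0):
    """Hypothetical combination: DB loss plus a weighted classification term."""
    return db_loss + weight * pixel_cross_entropy(logits, class_mask)
```

With uniform logits over C classes the loss equals log(C), which is a handy sanity check for the value logged at the start of training.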

+

+

+

+

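+<a name="434-后处理"></a>
+#### 4.3.4 Post-Processing
+
+Because evaluation and later inference depend on it, the post-processing code must be modified as well, namely the `DBPostProcess` class in PaddleOCR/ppocr/postprocess/db_postprocess.py: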

+

+

+```python

+import cv2
+import numpy as np
+import paddle
+import pyclipper
+from shapely.geometry import Polygon
+
+
+class DBPostProcess(object):
+    """
+    The post process for Differentiable Binarization (DB).
+    """
+
+    def __init__(self,
+                 thresh=0.3,
+                 box_thresh=0.7,
+                 max_candidates=1000,
+                 unclip_ratio=2.0,
+                 use_dilation=False,
+                 score_mode="fast",
+                 **kwargs):
+        self.thresh = thresh
+        self.box_thresh = box_thresh
+        self.max_candidates = max_candidates
+        self.unclip_ratio = unclip_ratio
+        self.min_size = 3
+        self.score_mode = score_mode
+        assert score_mode in [
+            "slow", "fast"
+        ], "Score mode must be in [slow, fast] but got: {}".format(score_mode)
+
+        self.dilation_kernel = None if not use_dilation else np.array(
+            [[1, 1], [1, 1]])
+
+    def boxes_from_bitmap(self, pred, _bitmap, classes, dest_width, dest_height):
+        """
+        _bitmap: single map with shape (H, W),
+            whose values are binarized as {0, 1}
+        classes: per-pixel class map with shape (H, W), or None
+        """
+
+        bitmap = _bitmap
+        height, width = bitmap.shape
+
+        outs = cv2.findContours((bitmap * 255).astype(np.uint8), cv2.RETR_LIST,
+                                cv2.CHAIN_APPROX_SIMPLE)
+        if len(outs) == 3:
+            img, contours, _ = outs[0], outs[1], outs[2]
+        elif len(outs) == 2:
+            contours, _ = outs[0], outs[1]
+
+        num_contours = min(len(contours), self.max_candidates)
+
+        boxes = []
+        scores = []
+        class_indexes = []
+        class_scores = []
+        for index in range(num_contours):
+            contour = contours[index]
+            points, sside = self.get_mini_boxes(contour)
+            if sside < self.min_size:
+                continue
+            points = np.array(points)
+            if self.score_mode == "fast":
+                score, class_index, class_score = self.box_score_fast(
+                    pred, points.reshape(-1, 2), classes)
+            else:
+                score, class_index, class_score = self.box_score_slow(
+                    pred, contour, classes)
+            if self.box_thresh > score:
+                continue
+
+            box = self.unclip(points).reshape(-1, 1, 2)
+            box, sside = self.get_mini_boxes(box)
+            if sside < self.min_size + 2:
+                continue
+            box = np.array(box)
+
+            box[:, 0] = np.clip(
+                np.round(box[:, 0] / width * dest_width), 0, dest_width)
+            box[:, 1] = np.clip(
+                np.round(box[:, 1] / height * dest_height), 0, dest_height)
+
+            boxes.append(box.astype(np.int16))
+            scores.append(score)
+
+            class_indexes.append(class_index)
+            class_scores.append(class_score)
+
+        if classes is None:
+            return np.array(boxes, dtype=np.int16), scores
+        else:
+            return np.array(boxes, dtype=np.int16), scores, class_indexes, class_scores
+
+    def unclip(self, box):
+        unclip_ratio = self.unclip_ratio
+        poly = Polygon(box)
+        distance = poly.area * unclip_ratio / poly.length
+        offset = pyclipper.PyclipperOffset()
+        offset.AddPath(box, pyclipper.JT_ROUND, pyclipper.ET_CLOSEDPOLYGON)
+        expanded = np.array(offset.Execute(distance))
+        return expanded
+
+    def get_mini_boxes(self, contour):
+        bounding_box = cv2.minAreaRect(contour)
+        points = sorted(list(cv2.boxPoints(bounding_box)), key=lambda x: x[0])
+
+        index_1, index_2, index_3, index_4 = 0, 1, 2, 3
+        if points[1][1] > points[0][1]:
+            index_1 = 0
+            index_4 = 1
+        else:
+            index_1 = 1
+            index_4 = 0
+        if points[3][1] > points[2][1]:
+            index_2 = 2
+            index_3 = 3
+        else:
+            index_2 = 3
+            index_3 = 2
+
+        box = [
+            points[index_1], points[index_2], points[index_3], points[index_4]
+        ]
+        return box, min(bounding_box[1])
+
+    def box_score_fast(self, bitmap, _box, classes):
+        '''
+        box_score_fast: use bbox mean score as the mean score
+        '''
+        h, w = bitmap.shape[:2]
+        box = _box.copy()
+        xmin = np.clip(np.floor(box[:, 0].min()).astype(np.int32), 0, w - 1)
+        xmax = np.clip(np.ceil(box[:, 0].max()).astype(np.int32), 0, w - 1)
+        ymin = np.clip(np.floor(box[:, 1].min()).astype(np.int32), 0, h - 1)
+        ymax = np.clip(np.ceil(box[:, 1].max()).astype(np.int32), 0, h - 1)
+
+        mask = np.zeros((ymax - ymin + 1, xmax - xmin + 1), dtype=np.uint8)
+        box[:, 0] = box[:, 0] - xmin
+        box[:, 1] = box[:, 1] - ymin
+        cv2.fillPoly(mask, box.reshape(1, -1, 2).astype(np.int32), 1)
+
+        if classes is None:
+            return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], None, None
+        else:
+            # pixels outside the box are offset by k and then removed
+            k = 999
+            class_mask = np.full((ymax - ymin + 1, xmax - xmin + 1), k, dtype=np.int32)
+
+            cv2.fillPoly(class_mask, box.reshape(1, -1, 2).astype(np.int32), 0)
+            # np.bincount needs integer input, so cast the class map
+            classes = classes[ymin:ymax + 1, xmin:xmax + 1].astype(np.int32)
+
+            new_classes = classes + class_mask
+            a = new_classes.reshape(-1)
+            b = np.where(a >= k)
+            classes = np.delete(a, b[0].tolist())
+
+            # majority vote over the pixels inside the box
+            class_index = np.argmax(np.bincount(classes))
+            class_score = np.sum(classes == class_index) / len(classes)
+
+            return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], class_index, class_score
+
+    def box_score_slow(self, bitmap, contour, classes):
+        """
+        box_score_slow: use polygon mean score as the mean score
+        """
+        h, w = bitmap.shape[:2]
+        contour = contour.copy()
+        contour = np.reshape(contour, (-1, 2))
+
+        xmin = np.clip(np.min(contour[:, 0]), 0, w - 1)
+        xmax = np.clip(np.max(contour[:, 0]), 0, w - 1)
+        ymin = np.clip(np.min(contour[:, 1]), 0, h - 1)
+        ymax = np.clip(np.max(contour[:, 1]), 0, h - 1)
+
+        mask = np.zeros((ymax - ymin + 1, xmax - xmin + 1), dtype=np.uint8)
+
+        contour[:, 0] = contour[:, 0] - xmin
+        contour[:, 1] = contour[:, 1] - ymin
+
+        cv2.fillPoly(mask, contour.reshape(1, -1, 2).astype(np.int32), 1)
+
+        if classes is None:
+            return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], None, None
+        else:
+            # pixels outside the polygon are offset by k and then removed
+            k = 999
+            class_mask = np.full((ymax - ymin + 1, xmax - xmin + 1), k, dtype=np.int32)
+
+            cv2.fillPoly(class_mask, contour.reshape(1, -1, 2).astype(np.int32), 0)
+            # np.bincount needs integer input, so cast the class map
+            classes = classes[ymin:ymax + 1, xmin:xmax + 1].astype(np.int32)
+
+            new_classes = classes + class_mask
+            a = new_classes.reshape(-1)
+            b = np.where(a >= k)
+            classes = np.delete(a, b[0].tolist())
+
+            # majority vote over the pixels inside the polygon
+            class_index = np.argmax(np.bincount(classes))
+            class_score = np.sum(classes == class_index) / len(classes)
+
+            return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0], class_index, class_score
+
+    def __call__(self, outs_dict, shape_list):
+        pred = outs_dict['maps']
+        if isinstance(pred, paddle.Tensor):
+            pred = pred.numpy()
+        pred = pred[:, 0, :, :]
+        segmentation = pred > self.thresh
+
+        if "classes" in outs_dict:
+            classes = outs_dict['classes']
+            if isinstance(classes, paddle.Tensor):
+                classes = classes.numpy()
+            classes = classes[:, 0, :, :]
+        else:
+            classes = None
+
+        boxes_batch = []
+        for batch_index in range(pred.shape[0]):
+            src_h, src_w, ratio_h, ratio_w = shape_list[batch_index]
+            if self.dilation_kernel is not None:
+                mask = cv2.dilate(
+                    np.array(segmentation[batch_index]).astype(np.uint8),
+                    self.dilation_kernel)
+            else:
+                mask = segmentation[batch_index]
+
+            if classes is None:
+                boxes, scores = self.boxes_from_bitmap(pred[batch_index], mask, None,
+                                                       src_w, src_h)
+                boxes_batch.append({'points': boxes})
+            else:
+                boxes, scores, class_indexes, class_scores = self.boxes_from_bitmap(
+                    pred[batch_index], mask, classes[batch_index], src_w, src_h)
+                boxes_batch.append({'points': boxes, "classes": class_indexes,
+                                    "class_scores": class_scores})
+
+        return boxes_batch

+```
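`box_score_fast` and `box_score_slow` pick each box's class by majority vote over the class map inside the box. They mark pixels outside the polygon with an offset of `k = 999` and then delete them. The same vote can be written with a boolean mask, as in the sketch below. It is an equivalent reformulation for illustration, not the author's code, and assumes non-negative integer class ids and a box mask with at least one pixel set.

```python
import numpy as np


def box_class_vote(class_map, box_mask):
    """Majority vote of class ids inside a box.

    class_map: (h, w) array of non-negative class ids (the cropped region)
    box_mask:  (h, w) array, non-zero where the pixel lies inside the polygon
    Returns (class_index, class_score), where class_score is the fraction of
    in-box pixels that voted for the winning class.
    """
    votes = class_map[box_mask.astype(bool)].astype(np.int64)
    counts = np.bincount(votes)
    class_index = int(np.argmax(counts))
    class_score = counts[class_index] / votes.size
    return class_index, float(class_score)
```

Boolean indexing avoids the magic offset, so it keeps working even if a class id were ever to reach `k`.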

+

+<a name="44-模型启动"></a>
+### 4.4 Launching Training
+
+After completing the steps above, training can be launched as usual:

+

+```

+!python /home/aistudio/work/PaddleOCR/tools/train.py -c /home/aistudio/work/PaddleOCR/configs/det/det_mv3_db.yml

+```

+

+Other commands (evaluation, and prediction with the trained checkpoint):

+```

+!python /home/aistudio/work/PaddleOCR/tools/eval.py -c /home/aistudio/work/PaddleOCR/configs/det/det_mv3_db.yml

+!python /home/aistudio/work/PaddleOCR/tools/infer_det.py -c /home/aistudio/work/PaddleOCR/configs/det/det_mv3_db.yml

+```

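+Model inference (predict_det.py loads an exported inference model, so export the trained model into the `--det_model_dir` directory first, e.g. with tools/export_model.py):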

+```

+!python /home/aistudio/work/PaddleOCR/tools/infer/predict_det.py --image_dir="/home/aistudio/work/test_img/" --det_model_dir="/home/aistudio/work/PaddleOCR/output/infer"

+```

+

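+<a name="5-总结"></a>
+## 5. Summary
+
+1. Combining classification with detection can shorten processing time to some extent; choose the specific models to suit the business scenario.
+2. The annotation scheme usually has to be tested and adjusted several times; fine-tuning a detection model generally requires labeling at least a few hundred images.
+3. Set a reasonable batch_size and resize size, and pay attention to the learning rate (lr).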

+

+

+## References

+

+1 https://github.com/PaddlePaddle/PaddleOCR

+

+2 https://github.com/PaddlePaddle/PaddleClas

+

+3 https://blog.csdn.net/YY007H/article/details/124491217

diff --git a/applications/手写文字识别.md b/applications/手写文字识别.md

index b2bfdb3aa..3685b6645 100644

--- a/applications/手写文字识别.md

+++ b/applications/手写文字识别.md

@@ -228,7 +228,7 @@ python tools/infer/predict_rec.py --image_dir="train_data/handwrite/HWDB2.0Test_

```python

# Visualize the text recognition image

-from PIL import Image

+from PIL import Image

import matplotlib.pyplot as plt

import numpy as np

import os

@@ -238,10 +238,10 @@ img_path = 'train_data/handwrite/HWDB2.0Test_images/104-P16_4.jpg'

def vis(img_path):

plt.figure()

- image = Image.open(img_path)

+ image = Image.open(img_path)

plt.imshow(image)

plt.show()

- # image = image.resize([208, 208])

+ # image = image.resize([208, 208])

vis(img_path)

diff --git a/applications/液晶屏读数识别.md b/applications/液晶屏读数识别.md

index 9e11407e0..2e55dbcd9 100644

--- a/applications/液晶屏读数识别.md

+++ b/applications/液晶屏读数识别.md

@@ -64,7 +64,7 @@ unzip icdar2015.zip -d train_data

```python

# Randomly view an image from the text detection dataset

-from PIL import Image

+from PIL import Image

import matplotlib.pyplot as plt

import numpy as np

import os

@@ -77,13 +77,13 @@ def get_one_image(train):

files = os.listdir(train)

n = len(files)

ind = np.random.randint(0,n)

- img_dir = os.path.join(train,files[ind])

- image = Image.open(img_dir)

+ img_dir = os.path.join(train,files[ind])

+ image = Image.open(img_dir)

plt.imshow(image)

plt.show()

- image = image.resize([208, 208])

+ image = image.resize([208, 208])

-get_one_image(train)

+get_one_image(train)

```

@@ -355,7 +355,7 @@ unzip ic15_data.zip -d train_data

```python

# Randomly view an image from the text detection dataset

-from PIL import Image

+from PIL import Image

import matplotlib.pyplot as plt

import numpy as np

import os

@@ -367,11 +367,11 @@ def get_one_image(train):

files = os.listdir(train)

n = len(files)

ind = np.random.randint(0,n)

- img_dir = os.path.join(train,files[ind])

- image = Image.open(img_dir)

+ img_dir = os.path.join(train,files[ind])

+ image = Image.open(img_dir)

plt.imshow(image)

plt.show()

- image = image.resize([208, 208])

+ image = image.resize([208, 208])

get_one_image(train)

```

diff --git a/benchmark/PaddleOCR_DBNet/.gitattributes b/benchmark/PaddleOCR_DBNet/.gitattributes

index 8543e0a71..b4419d46e 100644

--- a/benchmark/PaddleOCR_DBNet/.gitattributes

+++ b/benchmark/PaddleOCR_DBNet/.gitattributes

@@ -1,2 +1,2 @@

*.html linguist-language=python

-*.ipynb linguist-language=python

\ No newline at end of file

+*.ipynb linguist-language=python

diff --git a/benchmark/PaddleOCR_DBNet/.gitignore b/benchmark/PaddleOCR_DBNet/.gitignore

index cef1c73b3..f18fe1018 100644

--- a/benchmark/PaddleOCR_DBNet/.gitignore

+++ b/benchmark/PaddleOCR_DBNet/.gitignore

@@ -13,4 +13,4 @@ datasets/

index/

train_log/

log/

-profiling_log/

\ No newline at end of file

+profiling_log/

diff --git a/benchmark/PaddleOCR_DBNet/config/SynthText.yaml b/benchmark/PaddleOCR_DBNet/config/SynthText.yaml

index 61d5da7d3..8fd511c80 100644

--- a/benchmark/PaddleOCR_DBNet/config/SynthText.yaml

+++ b/benchmark/PaddleOCR_DBNet/config/SynthText.yaml

@@ -37,4 +37,4 @@ dataset:

batch_size: 1

shuffle: true

num_workers: 0

- collate_fn: ''

\ No newline at end of file

+ collate_fn: ''

diff --git a/benchmark/PaddleOCR_DBNet/config/SynthText_resnet18_FPN_DBhead_polyLR.yaml b/benchmark/PaddleOCR_DBNet/config/SynthText_resnet18_FPN_DBhead_polyLR.yaml

index a665e94a7..c285d361b 100644

--- a/benchmark/PaddleOCR_DBNet/config/SynthText_resnet18_FPN_DBhead_polyLR.yaml

+++ b/benchmark/PaddleOCR_DBNet/config/SynthText_resnet18_FPN_DBhead_polyLR.yaml

@@ -62,4 +62,4 @@ dataset:

batch_size: 2

shuffle: true

num_workers: 6

- collate_fn: ''

\ No newline at end of file

+ collate_fn: ''

diff --git a/benchmark/PaddleOCR_DBNet/config/icdar2015.yaml b/benchmark/PaddleOCR_DBNet/config/icdar2015.yaml

index 4551b14b2..4233d3e2d 100644

--- a/benchmark/PaddleOCR_DBNet/config/icdar2015.yaml

+++ b/benchmark/PaddleOCR_DBNet/config/icdar2015.yaml

@@ -66,4 +66,4 @@ dataset:

batch_size: 1

shuffle: true

num_workers: 0

- collate_fn: ICDARCollectFN

\ No newline at end of file

+ collate_fn: ICDARCollectFN

diff --git a/benchmark/PaddleOCR_DBNet/config/icdar2015_dcn_resnet18_FPN_DBhead_polyLR.yaml b/benchmark/PaddleOCR_DBNet/config/icdar2015_dcn_resnet18_FPN_DBhead_polyLR.yaml

index 608ef42c1..3e2442838 100644

--- a/benchmark/PaddleOCR_DBNet/config/icdar2015_dcn_resnet18_FPN_DBhead_polyLR.yaml

+++ b/benchmark/PaddleOCR_DBNet/config/icdar2015_dcn_resnet18_FPN_DBhead_polyLR.yaml

@@ -79,4 +79,4 @@ dataset:

batch_size: 1

shuffle: true

num_workers: 6

- collate_fn: ICDARCollectFN

\ No newline at end of file

+ collate_fn: ICDARCollectFN

diff --git a/benchmark/PaddleOCR_DBNet/config/open_dataset.yaml b/benchmark/PaddleOCR_DBNet/config/open_dataset.yaml

index 97267586c..05ece6e91 100644

--- a/benchmark/PaddleOCR_DBNet/config/open_dataset.yaml

+++ b/benchmark/PaddleOCR_DBNet/config/open_dataset.yaml

@@ -70,4 +70,4 @@ dataset:

batch_size: 1

shuffle: true

num_workers: 0

- collate_fn: ICDARCollectFN

\ No newline at end of file

+ collate_fn: ICDARCollectFN

diff --git a/benchmark/PaddleOCR_DBNet/eval.sh b/benchmark/PaddleOCR_DBNet/eval.sh

index b3bf46818..7520a73cf 100644

--- a/benchmark/PaddleOCR_DBNet/eval.sh

+++ b/benchmark/PaddleOCR_DBNet/eval.sh

@@ -1 +1 @@

-CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py --model_path ''

\ No newline at end of file

+CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py --model_path ''

diff --git a/benchmark/PaddleOCR_DBNet/multi_gpu_train.sh b/benchmark/PaddleOCR_DBNet/multi_gpu_train.sh

index b49a73f15..4b9a9158c 100644

--- a/benchmark/PaddleOCR_DBNet/multi_gpu_train.sh

+++ b/benchmark/PaddleOCR_DBNet/multi_gpu_train.sh

@@ -1,2 +1,2 @@

# export NCCL_P2P_DISABLE=1

-CUDA_VISIBLE_DEVICES=0,1,2,3 python3 -m paddle.distributed.launch tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

\ No newline at end of file

+CUDA_VISIBLE_DEVICES=0,1,2,3 python3 -m paddle.distributed.launch tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

diff --git a/benchmark/PaddleOCR_DBNet/predict.sh b/benchmark/PaddleOCR_DBNet/predict.sh

index 37ab14828..a4b9bfa4f 100644

--- a/benchmark/PaddleOCR_DBNet/predict.sh

+++ b/benchmark/PaddleOCR_DBNet/predict.sh

@@ -1 +1 @@

-CUDA_VISIBLE_DEVICES=0 python tools/predict.py --model_path model_best.pth --input_folder ./input --output_folder ./output --thre 0.7 --polygon --show --save_result

\ No newline at end of file

+CUDA_VISIBLE_DEVICES=0 python tools/predict.py --model_path model_best.pth --input_folder ./input --output_folder ./output --thre 0.7 --polygon --show --save_result

diff --git a/benchmark/PaddleOCR_DBNet/singlel_gpu_train.sh b/benchmark/PaddleOCR_DBNet/singlel_gpu_train.sh

index f8b9f0e89..6803201fc 100644

--- a/benchmark/PaddleOCR_DBNet/singlel_gpu_train.sh

+++ b/benchmark/PaddleOCR_DBNet/singlel_gpu_train.sh

@@ -1 +1 @@

-CUDA_VISIBLE_DEVICES=0 python3 tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

\ No newline at end of file

+CUDA_VISIBLE_DEVICES=0 python3 tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

diff --git a/benchmark/PaddleOCR_DBNet/test/README.MD b/benchmark/PaddleOCR_DBNet/test/README.MD

index b43c6e9a1..3e2bb1a78 100644

--- a/benchmark/PaddleOCR_DBNet/test/README.MD

+++ b/benchmark/PaddleOCR_DBNet/test/README.MD

@@ -5,4 +5,4 @@ img_{img_id}.jpg

For predicting single images, you can change the `img_path` in the `/tools/predict.py` to your image number.

-The result will be saved in the output_folder(default is test/output) you give in predict.sh

\ No newline at end of file

+The result will be saved in the output_folder(default is test/output) you give in predict.sh

diff --git a/benchmark/PaddleOCR_DBNet/test_tipc/common_func.sh b/benchmark/PaddleOCR_DBNet/test_tipc/common_func.sh

index c123d3cf6..9ec22f03a 100644

--- a/benchmark/PaddleOCR_DBNet/test_tipc/common_func.sh

+++ b/benchmark/PaddleOCR_DBNet/test_tipc/common_func.sh

@@ -64,4 +64,4 @@ function status_check(){

else

echo -e "\033[33m Run failed with command - ${model_name} - ${run_command} - ${log_path} \033[0m" | tee -a ${run_log}

fi

-}

\ No newline at end of file

+}

diff --git a/benchmark/PaddleOCR_DBNet/test_tipc/prepare.sh b/benchmark/PaddleOCR_DBNet/test_tipc/prepare.sh

index cd8f56fd7..a9616032b 100644

--- a/benchmark/PaddleOCR_DBNet/test_tipc/prepare.sh

+++ b/benchmark/PaddleOCR_DBNet/test_tipc/prepare.sh

@@ -51,4 +51,4 @@ elif [ ${MODE} = "benchmark_train" ];then

# cat dup* > train_icdar2015_label.txt && rm -rf dup*

# cd ../../../

fi

-fi

\ No newline at end of file

+fi

diff --git a/benchmark/PaddleOCR_DBNet/test_tipc/test_train_inference_python.sh b/benchmark/PaddleOCR_DBNet/test_tipc/test_train_inference_python.sh

index a54591a60..33c81e1c3 100644

--- a/benchmark/PaddleOCR_DBNet/test_tipc/test_train_inference_python.sh

+++ b/benchmark/PaddleOCR_DBNet/test_tipc/test_train_inference_python.sh

@@ -340,4 +340,4 @@ else

done # done with: for trainer in ${trainer_list[*]}; do

done # done with: for autocast in ${autocast_list[*]}; do

done # done with: for gpu in ${gpu_list[*]}; do

-fi # end if [ ${MODE} = "infer" ]; then

\ No newline at end of file

+fi # end if [ ${MODE} = "infer" ]; then

diff --git a/benchmark/PaddleOCR_DBNet/tools/infer.py b/benchmark/PaddleOCR_DBNet/tools/infer.py

index 5ed4b8e94..1ca4bef80 100644

--- a/benchmark/PaddleOCR_DBNet/tools/infer.py

+++ b/benchmark/PaddleOCR_DBNet/tools/infer.py

@@ -194,7 +194,9 @@ class InferenceEngine(object):

box_list = [box_list[i] for i, v in enumerate(idx) if v]

score_list = [score_list[i] for i, v in enumerate(idx) if v]

else:

- idx = box_list.reshape(box_list.shape[0], -1).sum(axis=1) > 0 # remove all-zero boxes

+ idx = (

+ box_list.reshape(box_list.shape[0], -1).sum(axis=1) > 0

+ ) # remove all-zero boxes

box_list, score_list = box_list[idx], score_list[idx]

else:

box_list, score_list = [], []

diff --git a/benchmark/run_benchmark_det.sh b/benchmark/run_benchmark_det.sh

index 9f5b46cde..125d8743b 100644

--- a/benchmark/run_benchmark_det.sh

+++ b/benchmark/run_benchmark_det.sh

@@ -59,4 +59,3 @@ source ${BENCHMARK_ROOT}/scripts/run_model.sh # 在该脚本中会对符合

_set_params $@

#_train # uncomment if you only want to produce the training log without parsing it

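_run # defined in run_model.sh; it calls _train when executed. Comment this line out if you only want the training log without joint debugging, but re-enable it before committing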

-

diff --git a/benchmark/run_det.sh b/benchmark/run_det.sh

index 981510c9a..75b0f17f8 100644

--- a/benchmark/run_det.sh

+++ b/benchmark/run_det.sh

@@ -34,6 +34,3 @@ for model_mode in ${model_mode_list[@]}; do

done

done

done

-

-

-

diff --git a/configs/det/det_mv3_east.yml b/configs/det/det_mv3_east.yml

index 4ae32ab00..461179e4e 100644

--- a/configs/det/det_mv3_east.yml

+++ b/configs/det/det_mv3_east.yml

@@ -106,4 +106,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 2

\ No newline at end of file

+ num_workers: 2

diff --git a/configs/det/det_mv3_pse.yml b/configs/det/det_mv3_pse.yml

index f80180ce7..4b8c4be2f 100644

--- a/configs/det/det_mv3_pse.yml

+++ b/configs/det/det_mv3_pse.yml

@@ -132,4 +132,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 8

\ No newline at end of file

+ num_workers: 8

diff --git a/configs/det/det_r50_drrg_ctw.yml b/configs/det/det_r50_drrg_ctw.yml

index f67c926f3..f56ac3963 100755

--- a/configs/det/det_r50_drrg_ctw.yml

+++ b/configs/det/det_r50_drrg_ctw.yml

@@ -130,4 +130,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 2

\ No newline at end of file

+ num_workers: 2

diff --git a/configs/det/det_r50_vd_dcn_fce_ctw.yml b/configs/det/det_r50_vd_dcn_fce_ctw.yml

index 3a4075b32..5e851d1ac 100755

--- a/configs/det/det_r50_vd_dcn_fce_ctw.yml

+++ b/configs/det/det_r50_vd_dcn_fce_ctw.yml

@@ -136,4 +136,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 2

\ No newline at end of file

+ num_workers: 2

diff --git a/configs/det/det_r50_vd_east.yml b/configs/det/det_r50_vd_east.yml

index af90ef0ad..5a488ddb0 100644

--- a/configs/det/det_r50_vd_east.yml

+++ b/configs/det/det_r50_vd_east.yml

@@ -105,4 +105,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 2

\ No newline at end of file

+ num_workers: 2

diff --git a/configs/det/det_r50_vd_pse.yml b/configs/det/det_r50_vd_pse.yml

index 1a971564f..408e16d11 100644

--- a/configs/det/det_r50_vd_pse.yml

+++ b/configs/det/det_r50_vd_pse.yml

@@ -131,4 +131,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 8

\ No newline at end of file

+ num_workers: 8

diff --git a/configs/det/det_r50_vd_sast_totaltext.yml b/configs/det/det_r50_vd_sast_totaltext.yml

index 44a0766b1..557ff8bf0 100755

--- a/configs/det/det_r50_vd_sast_totaltext.yml

+++ b/configs/det/det_r50_vd_sast_totaltext.yml

@@ -105,4 +105,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 1 # must be 1

- num_workers: 2

\ No newline at end of file

+ num_workers: 2

diff --git a/configs/kie/vi_layoutxlm/re_vi_layoutxlm_xfund_zh_udml.yml b/configs/kie/vi_layoutxlm/re_vi_layoutxlm_xfund_zh_udml.yml

index eda9fcddb..eda3a2b97 100644

--- a/configs/kie/vi_layoutxlm/re_vi_layoutxlm_xfund_zh_udml.yml

+++ b/configs/kie/vi_layoutxlm/re_vi_layoutxlm_xfund_zh_udml.yml

@@ -173,5 +173,3 @@ Eval:

drop_last: False

batch_size_per_card: 8

num_workers: 8

-

-

diff --git a/configs/rec/multi_language/generate_multi_language_configs.py b/configs/rec/multi_language/generate_multi_language_configs.py

index e41be8f77..d4b0b5131 100644

--- a/configs/rec/multi_language/generate_multi_language_configs.py

+++ b/configs/rec/multi_language/generate_multi_language_configs.py

@@ -198,9 +198,9 @@ class ArgsParser(ArgumentParser):

lang = "cyrillic"

elif lang in devanagari_lang:

lang = "devanagari"

- global_config["Global"][

- "character_dict_path"

- ] = "ppocr/utils/dict/{}_dict.txt".format(lang)

+ global_config["Global"]["character_dict_path"] = (

+ "ppocr/utils/dict/{}_dict.txt".format(lang)

+ )

global_config["Global"]["save_model_dir"] = "./output/rec_{}_lite".format(lang)

global_config["Train"]["dataset"]["label_file_list"] = [

"train_data/{}_train.txt".format(lang)

diff --git a/configs/rec/rec_satrn.yml b/configs/rec/rec_satrn.yml

index 8ed688b65..376c2ccfe 100644

--- a/configs/rec/rec_satrn.yml

+++ b/configs/rec/rec_satrn.yml

@@ -114,4 +114,3 @@ Eval:

batch_size_per_card: 128

num_workers: 4

use_shared_memory: False

-

diff --git a/configs/sr/sr_telescope.yml b/configs/sr/sr_telescope.yml

index 33d07e8f2..ed257251c 100644

--- a/configs/sr/sr_telescope.yml

+++ b/configs/sr/sr_telescope.yml

@@ -81,4 +81,3 @@ Eval:

drop_last: False

batch_size_per_card: 16

num_workers: 4

-

diff --git a/configs/sr/sr_tsrn_transformer_strock.yml b/configs/sr/sr_tsrn_transformer_strock.yml

index c8c308c43..627bf24a4 100644

--- a/configs/sr/sr_tsrn_transformer_strock.yml

+++ b/configs/sr/sr_tsrn_transformer_strock.yml

@@ -82,4 +82,3 @@ Eval:

drop_last: False

batch_size_per_card: 16

num_workers: 4

-

diff --git a/configs/table/table_master.yml b/configs/table/table_master.yml

index 125162f18..37cf44dec 100755

--- a/configs/table/table_master.yml

+++ b/configs/table/table_master.yml

@@ -142,4 +142,4 @@ Eval:

shuffle: False

drop_last: False

batch_size_per_card: 10

- num_workers: 8

\ No newline at end of file

+ num_workers: 8

diff --git a/deploy/android_demo/.gitignore b/deploy/android_demo/.gitignore

index 93dcb2935..d77f574d8 100644

--- a/deploy/android_demo/.gitignore

+++ b/deploy/android_demo/.gitignore

@@ -6,4 +6,3 @@

/build

/captures

.externalNativeBuild

-

diff --git a/deploy/android_demo/app/build.gradle b/deploy/android_demo/app/build.gradle

index 2607f32ec..00fae70d2 100644

--- a/deploy/android_demo/app/build.gradle

+++ b/deploy/android_demo/app/build.gradle

@@ -90,4 +90,4 @@ task downloadAndExtractArchives(type: DefaultTask) {

}

}

}

-preBuild.dependsOn downloadAndExtractArchives

\ No newline at end of file

+preBuild.dependsOn downloadAndExtractArchives

diff --git a/deploy/android_demo/app/src/main/AndroidManifest.xml b/deploy/android_demo/app/src/main/AndroidManifest.xml

index 133f35703..fef3a396f 100644

--- a/deploy/android_demo/app/src/main/AndroidManifest.xml

+++ b/deploy/android_demo/app/src/main/AndroidManifest.xml

@@ -35,4 +35,4 @@

</provider>

</application>

-</manifest>

\ No newline at end of file

+</manifest>

diff --git a/deploy/android_demo/app/src/main/cpp/CMakeLists.txt b/deploy/android_demo/app/src/main/cpp/CMakeLists.txt

index 742786ad6..39b710262 100644

--- a/deploy/android_demo/app/src/main/cpp/CMakeLists.txt

+++ b/deploy/android_demo/app/src/main/cpp/CMakeLists.txt

@@ -114,4 +114,4 @@ add_custom_command(

COMMAND

${CMAKE_COMMAND} -E copy

${PaddleLite_DIR}/cxx/libs/${ANDROID_ABI}/libhiai_ir_build.so

- ${CMAKE_LIBRARY_OUTPUT_DIRECTORY}/libhiai_ir_build.so)

\ No newline at end of file

+ ${CMAKE_LIBRARY_OUTPUT_DIRECTORY}/libhiai_ir_build.so)

diff --git a/deploy/android_demo/app/src/main/cpp/ocr_cls_process.cpp b/deploy/android_demo/app/src/main/cpp/ocr_cls_process.cpp

index 141b5157a..c3434d9cf 100644

--- a/deploy/android_demo/app/src/main/cpp/ocr_cls_process.cpp

+++ b/deploy/android_demo/app/src/main/cpp/ocr_cls_process.cpp

@@ -42,4 +42,4 @@ cv::Mat cls_resize_img(const cv::Mat &img) {

cv::BORDER_CONSTANT, {0, 0, 0});

}

return resize_img;

-}

\ No newline at end of file

+}

diff --git a/deploy/android_demo/app/src/main/cpp/ocr_cls_process.h b/deploy/android_demo/app/src/main/cpp/ocr_cls_process.h

index 1c30ee107..17480a92d 100644

--- a/deploy/android_demo/app/src/main/cpp/ocr_cls_process.h

+++ b/deploy/android_demo/app/src/main/cpp/ocr_cls_process.h

@@ -20,4 +20,4 @@

extern const std::vector<int> CLS_IMAGE_SHAPE;

-cv::Mat cls_resize_img(const cv::Mat &img);

\ No newline at end of file

+cv::Mat cls_resize_img(const cv::Mat &img);

diff --git a/deploy/android_demo/app/src/main/cpp/ocr_crnn_process.h b/deploy/android_demo/app/src/main/cpp/ocr_crnn_process.h

index 0346afe45..6ce812805 100644

--- a/deploy/android_demo/app/src/main/cpp/ocr_crnn_process.h

+++ b/deploy/android_demo/app/src/main/cpp/ocr_crnn_process.h

@@ -17,4 +17,4 @@ cv::Mat crnn_resize_img(const cv::Mat &img, float wh_ratio);

template <class ForwardIterator>

inline size_t argmax(ForwardIterator first, ForwardIterator last) {