Mirror of https://github.com/PaddlePaddle/PaddleOCR.git (synced 2025-06-03 21:53:39 +08:00)

Update common pre-commit configs and commit the results of running pre-commit run -a (#12516)

Parent: 6e7a1b871d

Commit: 24f06d1a1b

.gitignore (vendored, 2 changes)

@@ -31,4 +31,4 @@ paddleocr.egg-info/

|

|||||||

/deploy/android_demo/app/.cxx/

|

/deploy/android_demo/app/.cxx/

|

||||||

/deploy/android_demo/app/cache/

|

/deploy/android_demo/app/cache/

|

||||||

test_tipc/web/models/

|

test_tipc/web/models/

|

||||||

test_tipc/web/node_modules/

|

test_tipc/web/node_modules/

|

||||||

|

|||||||

@@ -1,26 +1,22 @@

|

|||||||

repos:

|

repos:

|

||||||

- repo: https://github.com/pre-commit/pre-commit-hooks

|

- repo: https://github.com/pre-commit/pre-commit-hooks

|

||||||

rev: a11d9314b22d8f8c7556443875b731ef05965464

|

rev: v4.6.0

|

||||||

hooks:

|

hooks:

|

||||||

|

- id: check-added-large-files

|

||||||

|

args: ['--maxkb=512']

|

||||||

|

- id: check-case-conflict

|

||||||

- id: check-merge-conflict

|

- id: check-merge-conflict

|

||||||

- id: check-symlinks

|

- id: check-symlinks

|

||||||

- id: detect-private-key

|

- id: detect-private-key

|

||||||

files: (?!.*paddle)^.*$

|

|

||||||

- id: end-of-file-fixer

|

- id: end-of-file-fixer

|

||||||

files: \.md$

|

|

||||||

- id: trailing-whitespace

|

- id: trailing-whitespace

|

||||||

files: \.md$

|

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$

|

||||||

- repo: https://github.com/Lucas-C/pre-commit-hooks

|

- repo: https://github.com/Lucas-C/pre-commit-hooks

|

||||||

rev: v1.0.1

|

rev: v1.5.1

|

||||||

hooks:

|

hooks:

|

||||||

- id: forbid-crlf

|

|

||||||

files: \.md$

|

|

||||||

- id: remove-crlf

|

- id: remove-crlf

|

||||||

files: \.md$

|

|

||||||

- id: forbid-tabs

|

|

||||||

files: \.md$

|

|

||||||

- id: remove-tabs

|

- id: remove-tabs

|

||||||

files: \.md$

|

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$

|

||||||

- repo: local

|

- repo: local

|

||||||

hooks:

|

hooks:

|

||||||

- id: clang-format

|

- id: clang-format

|

||||||

@@ -31,7 +27,7 @@ repos:

|

|||||||

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|cuh|proto)$

|

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|cuh|proto)$

|

||||||

# For Python files

|

# For Python files

|

||||||

- repo: https://github.com/psf/black.git

|

- repo: https://github.com/psf/black.git

|

||||||

rev: 23.3.0

|

rev: 24.4.2

|

||||||

hooks:

|

hooks:

|

||||||

- id: black

|

- id: black

|

||||||

files: (.*\.(py|pyi|bzl)|BUILD|.*\.BUILD|WORKSPACE)$

|

files: (.*\.(py|pyi|bzl)|BUILD|.*\.BUILD|WORKSPACE)$

|

||||||

@@ -47,4 +43,3 @@ repos:

|

|||||||

- --show-source

|

- --show-source

|

||||||

- --statistics

|

- --statistics

|

||||||

exclude: ^benchmark/|^test_tipc/

|

exclude: ^benchmark/|^test_tipc/

|

||||||

|

|

||||||

|

|||||||
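The main functional change in .pre-commit-config.yaml is which paths each hook sees: trailing-whitespace and remove-tabs now use `files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$` instead of `\.md$`, and the `files: (?!.*paddle)^.*$` filter on detect-private-key is dropped. The sketch below shows the effect, assuming (to our understanding of pre-commit's behavior) that each `files` pattern is matched against a path with Python's `re.search`; the example paths are hypothetical.

```python
import re

# `files` patterns copied from the old and new .pre-commit-config.yaml in this diff.
OLD_WHITESPACE_FILES = r"\.md$"
NEW_WHITESPACE_FILES = r"\.(c|cc|cxx|cpp|cu|h|hpp|hxx|py|md)$"
# Old detect-private-key filter (removed in the new config): skip paths containing "paddle".
OLD_DETECT_KEY_FILES = r"(?!.*paddle)^.*$"


def hook_applies(files_pattern: str, path: str) -> bool:
    """Return True if a hook with this `files` pattern would consider `path`.

    Assumption: pre-commit selects files with re.search(files_pattern, path).
    """
    return re.search(files_pattern, path) is not None


# Hypothetical paths, used only to compare the old and new patterns.
for path in ["README.md", "tools/infer/predict_system.py", "src/example.cpp", "configs/example.yml"]:
    print(path,
          "old:", hook_applies(OLD_WHITESPACE_FILES, path),
          "new:", hook_applies(NEW_WHITESPACE_FILES, path))
```

Consistent with the commit message, many of the hunks that follow differ only in trailing whitespace or a final newline.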

@@ -7,4 +7,4 @@ recursive-include ppocr/postprocess *.py

|

|||||||

recursive-include tools/infer *.py

|

recursive-include tools/infer *.py

|

||||||

recursive-include tools __init__.py

|

recursive-include tools __init__.py

|

||||||

recursive-include ppocr/utils/e2e_utils *.py

|

recursive-include ppocr/utils/e2e_utils *.py

|

||||||

recursive-include ppstructure *.py

|

recursive-include ppstructure *.py

|

||||||

|

|||||||

@@ -207,12 +207,12 @@ PaddleOCR is being oversight by a [PMC](https://github.com/PaddlePaddle/PaddleOC

|

|||||||

<details open>

|

<details open>

|

||||||

<summary>PP-Structure Document Analysis</summary>

|

<summary>PP-Structure Document Analysis</summary>

|

||||||

|

|

||||||

- Layout analysis + table recognition

|

- Layout analysis + table recognition

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="./ppstructure/docs/table/ppstructure.GIF" width="800">

|

<img src="./ppstructure/docs/table/ppstructure.GIF" width="800">

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

- SER (Semantic Entity Recognition)

|

- SER (Semantic Entity Recognition)

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="https://user-images.githubusercontent.com/14270174/185310636-6ce02f7c-790d-479f-b163-ea97a5a04808.jpg" width="600">

|

<img src="https://user-images.githubusercontent.com/14270174/185310636-6ce02f7c-790d-479f-b163-ea97a5a04808.jpg" width="600">

|

||||||

</div>

|

</div>

|

||||||

|

|||||||

README_en.md (10 changes)

@@ -119,11 +119,11 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

|

|||||||

- [Mobile](./deploy/lite/readme.md)

|

- [Mobile](./deploy/lite/readme.md)

|

||||||

- [Paddle2ONNX](./deploy/paddle2onnx/readme.md)

|

- [Paddle2ONNX](./deploy/paddle2onnx/readme.md)

|

||||||

- [PaddleCloud](./deploy/paddlecloud/README.md)

|

- [PaddleCloud](./deploy/paddlecloud/README.md)

|

||||||

- [Benchmark](./doc/doc_en/benchmark_en.md)

|

- [Benchmark](./doc/doc_en/benchmark_en.md)

|

||||||

- [PP-Structure 🔥](./ppstructure/README.md)

|

- [PP-Structure 🔥](./ppstructure/README.md)

|

||||||

- [Quick Start](./ppstructure/docs/quickstart_en.md)

|

- [Quick Start](./ppstructure/docs/quickstart_en.md)

|

||||||

- [Model Zoo](./ppstructure/docs/models_list_en.md)

|

- [Model Zoo](./ppstructure/docs/models_list_en.md)

|

||||||

- [Model training](./doc/doc_en/training_en.md)

|

- [Model training](./doc/doc_en/training_en.md)

|

||||||

- [Layout Analysis](./ppstructure/layout/README.md)

|

- [Layout Analysis](./ppstructure/layout/README.md)

|

||||||

- [Table Recognition](./ppstructure/table/README.md)

|

- [Table Recognition](./ppstructure/table/README.md)

|

||||||

- [Key Information Extraction](./ppstructure/kie/README.md)

|

- [Key Information Extraction](./ppstructure/kie/README.md)

|

||||||

@@ -136,7 +136,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

|

|||||||

- [Text recognition](./doc/doc_en/algorithm_overview_en.md)

|

- [Text recognition](./doc/doc_en/algorithm_overview_en.md)

|

||||||

- [End-to-end OCR](./doc/doc_en/algorithm_overview_en.md)

|

- [End-to-end OCR](./doc/doc_en/algorithm_overview_en.md)

|

||||||

- [Table Recognition](./doc/doc_en/algorithm_overview_en.md)

|

- [Table Recognition](./doc/doc_en/algorithm_overview_en.md)

|

||||||

- [Key Information Extraction](./doc/doc_en/algorithm_overview_en.md)

|

- [Key Information Extraction](./doc/doc_en/algorithm_overview_en.md)

|

||||||

- [Add New Algorithms to PaddleOCR](./doc/doc_en/add_new_algorithm_en.md)

|

- [Add New Algorithms to PaddleOCR](./doc/doc_en/add_new_algorithm_en.md)

|

||||||

- Data Annotation and Synthesis

|

- Data Annotation and Synthesis

|

||||||

- [Semi-automatic Annotation Tool: PPOCRLabel](https://github.com/PFCCLab/PPOCRLabel/blob/main/README.md)

|

- [Semi-automatic Annotation Tool: PPOCRLabel](https://github.com/PFCCLab/PPOCRLabel/blob/main/README.md)

|

||||||

@@ -188,7 +188,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

|

|||||||

<details open>

|

<details open>

|

||||||

<summary>PP-StructureV2</summary>

|

<summary>PP-StructureV2</summary>

|

||||||

|

|

||||||

- layout analysis + table recognition

|

- layout analysis + table recognition

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="./ppstructure/docs/table/ppstructure.GIF" width="800">

|

<img src="./ppstructure/docs/table/ppstructure.GIF" width="800">

|

||||||

</div>

|

</div>

|

||||||

@@ -209,7 +209,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

|

|||||||

- RE (Relation Extraction)

|

- RE (Relation Extraction)

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="https://user-images.githubusercontent.com/25809855/186094813-3a8e16cc-42e5-4982-b9f4-0134dfb5688d.png" width="600">

|

<img src="https://user-images.githubusercontent.com/25809855/186094813-3a8e16cc-42e5-4982-b9f4-0134dfb5688d.png" width="600">

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<img src="https://user-images.githubusercontent.com/14270174/185393805-c67ff571-cf7e-4217-a4b0-8b396c4f22bb.jpg" width="600">

|

<img src="https://user-images.githubusercontent.com/14270174/185393805-c67ff571-cf7e-4217-a4b0-8b396c4f22bb.jpg" width="600">

|

||||||

|

|||||||

@@ -546,7 +546,7 @@ python3 tools/infer/predict_system.py \

|

|||||||

--use_gpu=True

|

--use_gpu=True

|

||||||

```

|

```

|

||||||

|

|

||||||

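The results are saved as follows: the text detection and recognition visualizations go to the `det_rec_infer/` directory, and the prediction results go to `det_rec_infer/system_results.txt` in this format: `0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`

|

The results are saved as follows: the text detection and recognition visualizations go to the `det_rec_infer/` directory, and the prediction results go to `det_rec_infer/system_results.txt` in this format: `0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`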

|

||||||

|

|

||||||

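2) Next, convert the data saved in step 1 into the format required for end-to-end evaluation: edit the code in `tools/end2end/convert_ppocr_label.py`, setting the input label path, mode, output label path, etc. in the convert_label function, to convert both the ground-truth labels and the prediction-result labels.

|

2) Next, convert the data saved in step 1 into the format required for end-to-end evaluation: edit the code in `tools/end2end/convert_ppocr_label.py`, setting the input label path, mode, output label path, etc. in the convert_label function, to convert both the ground-truth labels and the prediction-result labels.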

|

||||||

```

|

```

|

||||||

|

|||||||
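Each line of det_rec_infer/system_results.txt pairs an image name with a JSON list of detections, as in the sample line above. A minimal sketch for reading it back (for example, to inspect results before the label conversion in step 2), assuming the name and the JSON list are separated by the first whitespace character: it handles either a tab or a space, but not file names that contain spaces.

```python
import json


def parse_system_result_line(line: str):
    """Split one line of system_results.txt into (image name, [(text, points), ...]).

    Assumption: the image name and the JSON list are separated by the first
    whitespace character, as in the sample line shown in the docs.
    """
    name, payload = line.strip().split(maxsplit=1)
    detections = json.loads(payload)
    # Keep only the recognized text and its four corner points.
    return name, [(d["transcription"], d["points"]) for d in detections]


sample = '0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]'
name, dets = parse_system_result_line(sample)
print(name, dets)
```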

@@ -27,4 +27,4 @@ K06

|

|||||||

KIEY

|

KIEY

|

||||||

NZQJ

|

NZQJ

|

||||||

UN1B

|

UN1B

|

||||||

6X4

|

6X4

|

||||||

|

|||||||

@@ -456,7 +456,7 @@ display(HTML('<html><body><table><tr><td colspan="5">alleadersh</td><td rowspan=

|

|||||||

|

|

||||||

The prediction results are as follows:

|

The prediction results are as follows:

|

||||||

```

|

```

|

||||||

val_9.jpg: {'attributes': ['Scanned', 'Little', 'Black-and-White', 'Clear', 'Without-Obstacles', 'Horizontal'], 'output': [1, 1, 1, 1, 1, 1]}

|

val_9.jpg: {'attributes': ['Scanned', 'Little', 'Black-and-White', 'Clear', 'Without-Obstacles', 'Horizontal'], 'output': [1, 1, 1, 1, 1, 1]}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

@@ -466,7 +466,7 @@ val_9.jpg: {'attributes': ['Scanned', 'Little', 'Black-and-White', 'Clear', 'Wi

|

|||||||

|

|

||||||

The prediction results are as follows:

|

The prediction results are as follows:

|

||||||

```

|

```

|

||||||

val_3253.jpg: {'attributes': ['Photo', 'Little', 'Black-and-White', 'Blurry', 'Without-Obstacles', 'Tilted'], 'output': [0, 1, 1, 0, 1, 0]}

|

val_3253.jpg: {'attributes': ['Photo', 'Little', 'Black-and-White', 'Blurry', 'Without-Obstacles', 'Tilted'], 'output': [0, 1, 1, 0, 1, 0]}

|

||||||

```

|

```

|

||||||

|

|

||||||

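Comparing the two images: the first is fairly clear, and its table attributes lean toward easy to recognize, so its table recognition result can be trusted more. The second is blurry and tilted, so its table recognition may contain errors and needs further manual verification. Table attribute recognition therefore makes it possible to combine manual review and AI effectively, safeguarding the accuracy of table recognition in real-world deployments.

|

Comparing the two images: the first is fairly clear, and its table attributes lean toward easy to recognize, so its table recognition result can be trusted more. The second is blurry and tilted, so its table recognition may contain errors and needs further manual verification. Table attribute recognition therefore makes it possible to combine manual review and AI effectively, safeguarding the accuracy of table recognition in real-world deployments.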

|

||||||

|

|||||||
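The table attribute results above pair each attribute with a 0/1 value. A small triage sketch in the spirit of the comparison paragraph, flagging images whose table results deserve a manual check. Reading 1 as the easier attribute value and 0 as the harder one is an assumption inferred only from the two samples shown (val_9.jpg vs. val_3253.jpg), not a documented contract.

```python
import ast


def hard_attributes(result_line: str):
    """Parse '<image>: {...}' and return (image name, attributes whose output is 0).

    Assumption: output 1 marks the easier attribute value (e.g. Clear, Horizontal)
    and output 0 the harder one (e.g. Blurry, Tilted).
    """
    name, payload = result_line.split(":", 1)
    result = ast.literal_eval(payload.strip())  # safe parse of the printed dict
    hard = [attr for attr, out in zip(result["attributes"], result["output"]) if out == 0]
    return name.strip(), hard


line = ("val_3253.jpg: {'attributes': ['Photo', 'Little', 'Black-and-White', 'Blurry', "
        "'Without-Obstacles', 'Tilted'], 'output': [0, 1, 1, 0, 1, 0]}")
image, hard = hard_attributes(line)
needs_review = bool(hard)  # any "hard" attribute -> send the table result for manual checking
```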

@@ -434,16 +434,16 @@ python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/PP-

|

|||||||

|

|

||||||

```

|

```

|

||||||

output/rec/

|

output/rec/

|

||||||

├── best_accuracy.pdopt

|

├── best_accuracy.pdopt

|

||||||

├── best_accuracy.pdparams

|

├── best_accuracy.pdparams

|

||||||

├── best_accuracy.states

|

├── best_accuracy.states

|

||||||

├── config.yml

|

├── config.yml

|

||||||

├── iter_epoch_3.pdopt

|

├── iter_epoch_3.pdopt

|

||||||

├── iter_epoch_3.pdparams

|

├── iter_epoch_3.pdparams

|

||||||

├── iter_epoch_3.states

|

├── iter_epoch_3.states

|

||||||

├── latest.pdopt

|

├── latest.pdopt

|

||||||

├── latest.pdparams

|

├── latest.pdparams

|

||||||

├── latest.states

|

├── latest.states

|

||||||

└── train.log

|

└── train.log

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|||||||
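The output/rec/ listing above shows that each checkpoint is stored as three files sharing a prefix (.pdparams, .pdopt, .states). A sketch that lists only complete checkpoint prefixes (complete_checkpoints is a hypothetical helper). The prefix without an extension is, to our understanding, the form that PaddleOCR options such as Global.checkpoints expect; verify against the docs for your version.

```python
import os

CKPT_SUFFIXES = (".pdparams", ".pdopt", ".states")


def complete_checkpoints(output_dir: str):
    """Return sorted prefixes (e.g. 'output/rec/latest') that have all three checkpoint files."""
    names = set(os.listdir(output_dir))
    # Collect every prefix that appears with at least one checkpoint suffix.
    prefixes = {n[: -len(s)] for n in names for s in CKPT_SUFFIXES if n.endswith(s)}
    # Keep only prefixes for which parameters, optimizer state, and states all exist.
    return sorted(
        os.path.join(output_dir, p)
        for p in prefixes
        if all(p + s in names for s in CKPT_SUFFIXES)
    )
```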

@@ -243,7 +243,7 @@ def get_cropus(f):

|

|||||||

elif 0.7 < rad < 0.8:

|

elif 0.7 < rad < 0.8:

|

||||||

f.write('20{:02d}-{:02d}-{:02d}'.format(year, month, day))

|

f.write('20{:02d}-{:02d}-{:02d}'.format(year, month, day))

|

||||||

elif 0.8 < rad < 0.9:

|

elif 0.8 < rad < 0.9:

|

||||||

f.write('20{:02d}.{:02d}.{:02d}'.format(year, month, day))

|

f.write('20{:02d}.{:02d}.{:02d}'.format(year, month, day))

|

||||||

else:

|

else:

|

||||||

f.write('{:02d}:{:02d}:{:02d} {:02d}'.format(hours, minute, second, file_id2))

|

f.write('{:02d}:{:02d}:{:02d} {:02d}'.format(hours, minute, second, file_id2))

|

||||||

|

|

||||||

|

|||||||
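The get_cropus hunk picks an output format from a random draw `rad`. Below is a hypothetical, self-contained reconstruction of only the branches visible here. The other `rad` ranges and the way year, month, day, and the time fields are drawn lie outside this hunk, so this sketch routes every other `rad` to the time format and uses assumed value ranges.

```python
import random


def format_sample(rad, year, month, day, hours, minute, second, file_id2):
    """Format one synthetic date/time string, following the branches visible in get_cropus."""
    if 0.7 < rad < 0.8:
        return "20{:02d}-{:02d}-{:02d}".format(year, month, day)
    elif 0.8 < rad < 0.9:
        return "20{:02d}.{:02d}.{:02d}".format(year, month, day)
    else:
        # In this sketch, every rad outside the two date ranges lands here.
        return "{:02d}:{:02d}:{:02d} {:02d}".format(hours, minute, second, file_id2)


def random_sample(rng: random.Random) -> str:
    # Hypothetical value ranges; the hunk does not show how get_cropus draws them.
    return format_sample(
        rng.random(),
        rng.randint(0, 99), rng.randint(1, 12), rng.randint(1, 28),
        rng.randint(0, 23), rng.randint(0, 59), rng.randint(0, 59), rng.randint(0, 99),
    )
```

Returning strings instead of calling f.write directly keeps the formatting testable; the caller can still do `f.write(random_sample(rng))`.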

@@ -409,7 +409,7 @@ def crop_seal_from_img(label_file, data_dir, save_dir, save_gt_path):

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

|

|

||||||

# Data processing

|

# Data processing

|

||||||

gen_extract_label("./seal_labeled_datas", "./seal_labeled_datas/Label.txt", "./seal_ppocr_gt/seal_det_img.txt", "./seal_ppocr_gt/seal_ppocr_img.txt")

|

gen_extract_label("./seal_labeled_datas", "./seal_labeled_datas/Label.txt", "./seal_ppocr_gt/seal_det_img.txt", "./seal_ppocr_gt/seal_ppocr_img.txt")

|

||||||

@@ -523,7 +523,7 @@ def gen_xml_label(mode='train'):

|

|||||||

xml_file = open(("./seal_VOC/Annotations" + '/' + i_name + '.xml'), 'w')

|

xml_file = open(("./seal_VOC/Annotations" + '/' + i_name + '.xml'), 'w')

|

||||||

xml_file.write('<annotation>\n')

|

xml_file.write('<annotation>\n')

|

||||||

xml_file.write(' <folder>seal_VOC</folder>\n')

|

xml_file.write(' <folder>seal_VOC</folder>\n')

|

||||||

xml_file.write(' <filename>' + str(img_name) + '</filename>\n')

|

xml_file.write(' <filename>' + str(img_name) + '</filename>\n')

|

||||||

xml_file.write(' <path>' + 'Annotations/' + str(img_name) + '</path>\n')

|

xml_file.write(' <path>' + 'Annotations/' + str(img_name) + '</path>\n')

|

||||||

xml_file.write(' <size>\n')

|

xml_file.write(' <size>\n')

|

||||||

xml_file.write(' <width>' + str(width) + '</width>\n')

|

xml_file.write(' <width>' + str(width) + '</width>\n')

|

||||||

@@ -553,7 +553,7 @@ def gen_xml_label(mode='train'):

|

|||||||

xml_file.write(' <ymax>'+str(ymax)+'</ymax>\n')

|

xml_file.write(' <ymax>'+str(ymax)+'</ymax>\n')

|

||||||

xml_file.write(' </bndbox>\n')

|

xml_file.write(' </bndbox>\n')

|

||||||

xml_file.write(' </object>\n')

|

xml_file.write(' </object>\n')

|

||||||

xml_file.write('</annotation>')

|

xml_file.write('</annotation>')

|

||||||

xml_file.close()

|

xml_file.close()

|

||||||

print(f'{mode} xml save done!')

|

print(f'{mode} xml save done!')

|

||||||

|

|

||||||

|

|||||||
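gen_xml_label builds Pascal VOC XML by concatenating strings and closing the file by hand. As an alternative sketch (not the script's actual code), the same annotation, folder, filename, path, size, and object/bndbox elements can be built with the standard library's xml.etree.ElementTree, which escapes text automatically. The (label, xmin, ymin, xmax, ymax) input shape and the height element (not visible in this hunk) are assumptions.

```python
import xml.etree.ElementTree as ET


def build_voc_annotation(img_name, width, height, objects, folder="seal_VOC"):
    """Build a VOC-style annotation tree.

    `objects` is an assumed list of (label, xmin, ymin, xmax, ymax) tuples.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = folder
    ET.SubElement(root, "filename").text = str(img_name)
    ET.SubElement(root, "path").text = "Annotations/" + str(img_name)
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    for label, xmin, ymin, xmax, ymax in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = str(label)
        box = ET.SubElement(obj, "bndbox")
        for tag, val in (("xmin", xmin), ("ymin", ymin), ("xmax", xmax), ("ymax", ymax)):
            ET.SubElement(box, tag).text = str(val)
    return ET.ElementTree(root)
```

Calling `.write(path, encoding="utf-8")` on the returned tree writes the file and closes it, so no explicit `close()` is needed.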

@@ -110,12 +110,12 @@ tar -xf XFUND.tar

|

|||||||

|

|

||||||

```bash

|

```bash

|

||||||

/home/aistudio/PaddleOCR/ppstructure/vqa/XFUND

|

/home/aistudio/PaddleOCR/ppstructure/vqa/XFUND

|

||||||

└─ zh_train/ training set

|

└─ zh_train/ training set

|

||||||

├── image/ image folder

|

├── image/ image folder

|

||||||

├── xfun_normalize_train.json annotations

|

├── xfun_normalize_train.json annotations

|

||||||

└─ zh_val/ validation set

|

└─ zh_val/ validation set

|

||||||

├── image/ image folder

|

├── image/ image folder

|

||||||

├── xfun_normalize_val.json annotations

|

├── xfun_normalize_val.json annotations

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

@@ -805,7 +805,7 @@ CUDA_VISIBLE_DEVICES=0 python3 tools/infer_vqa_token_ser_re.py \

|

|||||||

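Finally, the visualized prediction images and a prediction-result text file are saved in the directory configured by the config.Global.save_res_path field. The text file is named infer_results.txt, and each line holds the result for one image in the format shown below: the test image path comes first, followed by the test result, i.e. the key fields and their corresponding value fields.

|

Finally, the visualized prediction images and a prediction-result text file are saved in the directory configured by the config.Global.save_res_path field. The text file is named infer_results.txt, and each line holds the result for one image in the format shown below: the test image path comes first, followed by the test result, i.e. the key fields and their corresponding value fields.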

|

||||||

|

|

||||||

```

|

```

|

||||||

test_imgs/t131.jpg {"政治面税": "群众", "性别": "男", "籍贯": "河北省邯郸市", "婚姻状况": "亏末婚口已婚口已娇", "通讯地址": "邯郸市阳光苑7号楼003", "民族": "汉族", "毕业院校": "河南工业大学", "户口性质": "口农村城镇", "户口地址": "河北省邯郸市", "联系电话": "13288888888", "健康状况": "健康", "姓名": "小六", "好高cm": "180", "出生年月": "1996年8月9日", "文化程度": "本科", "身份证号码": "458933777777777777"}

|

test_imgs/t131.jpg {"政治面税": "群众", "性别": "男", "籍贯": "河北省邯郸市", "婚姻状况": "亏末婚口已婚口已娇", "通讯地址": "邯郸市阳光苑7号楼003", "民族": "汉族", "毕业院校": "河南工业大学", "户口性质": "口农村城镇", "户口地址": "河北省邯郸市", "联系电话": "13288888888", "健康状况": "健康", "姓名": "小六", "好高cm": "180", "出生年月": "1996年8月9日", "文化程度": "本科", "身份证号码": "458933777777777777"}

|

||||||

````

|

````

|

||||||

|

|

||||||

Display the prediction results

|

Display the prediction results

|

||||||

|

|||||||
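Each line of infer_results.txt, as described above, holds an image path followed by a JSON object of key/value fields. A sketch for loading such a line (parse_kie_result_line is a hypothetical name). Splitting at the first `{` rather than at whitespace is a design choice so that paths containing spaces still parse, assuming paths never contain `{`.

```python
import json


def parse_kie_result_line(line: str):
    """Split one line of infer_results.txt into (image path, {key: value})."""
    start = line.index("{")  # the JSON object starts at the first "{"
    return line[:start].strip(), json.loads(line[start:])


path, fields = parse_kie_result_line('test_imgs/t131.jpg {"姓名": "小六", "民族": "汉族"}\n')
print(path, fields.get("姓名"))
```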

File diff suppressed because it is too large


@@ -228,7 +228,7 @@ python tools/infer/predict_rec.py --image_dir="train_data/handwrite/HWDB2.0Test_

|

|||||||

|

|

||||||

```python

|

```python

|

||||||

# Visualize a text recognition image

|

# Visualize a text recognition image

|

||||||

from PIL import Image

|

from PIL import Image

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

import numpy as np

|

import numpy as np

|

||||||

import os

|

import os

|

||||||

@@ -238,10 +238,10 @@ img_path = 'train_data/handwrite/HWDB2.0Test_images/104-P16_4.jpg'

|

|||||||

|

|

||||||

def vis(img_path):

|

def vis(img_path):

|

||||||

plt.figure()

|

plt.figure()

|

||||||

image = Image.open(img_path)

|

image = Image.open(img_path)

|

||||||

plt.imshow(image)

|

plt.imshow(image)

|

||||||

plt.show()

|

plt.show()

|

||||||

# image = image.resize([208, 208])

|

# image = image.resize([208, 208])

|

||||||

|

|

||||||

|

|

||||||

vis(img_path)

|

vis(img_path)

|

||||||

|

|||||||

@@ -64,7 +64,7 @@ unzip icdar2015.zip -d train_data

|

|||||||

|

|

||||||

```python

|

```python

|

||||||

# View a random image from the text detection dataset

|

# View a random image from the text detection dataset

|

||||||

from PIL import Image

|

from PIL import Image

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

import numpy as np

|

import numpy as np

|

||||||

import os

|

import os

|

||||||

@@ -77,13 +77,13 @@ def get_one_image(train):

|

|||||||

files = os.listdir(train)

|

files = os.listdir(train)

|

||||||

n = len(files)

|

n = len(files)

|

||||||

ind = np.random.randint(0,n)

|

ind = np.random.randint(0,n)

|

||||||

img_dir = os.path.join(train,files[ind])

|

img_dir = os.path.join(train,files[ind])

|

||||||

image = Image.open(img_dir)

|

image = Image.open(img_dir)

|

||||||

plt.imshow(image)

|

plt.imshow(image)

|

||||||

plt.show()

|

plt.show()

|

||||||

image = image.resize([208, 208])

|

image = image.resize([208, 208])

|

||||||

|

|

||||||

get_one_image(train)

|

get_one_image(train)

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

@@ -355,7 +355,7 @@ unzip ic15_data.zip -d train_data

|

|||||||

|

|

||||||

```python

|

```python

|

||||||

# View a random image from the text detection dataset

|

# View a random image from the text detection dataset

|

||||||

from PIL import Image

|

from PIL import Image

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

import numpy as np

|

import numpy as np

|

||||||

import os

|

import os

|

||||||

@@ -367,11 +367,11 @@ def get_one_image(train):

|

|||||||

files = os.listdir(train)

|

files = os.listdir(train)

|

||||||

n = len(files)

|

n = len(files)

|

||||||

ind = np.random.randint(0,n)

|

ind = np.random.randint(0,n)

|

||||||

img_dir = os.path.join(train,files[ind])

|

img_dir = os.path.join(train,files[ind])

|

||||||

image = Image.open(img_dir)

|

image = Image.open(img_dir)

|

||||||

plt.imshow(image)

|

plt.imshow(image)

|

||||||

plt.show()

|

plt.show()

|

||||||

image = image.resize([208, 208])

|

image = image.resize([208, 208])

|

||||||

|

|

||||||

get_one_image(train)

|

get_one_image(train)

|

||||||

```

|

```

|

||||||

|

|||||||
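get_one_image picks a random entry with np.random.randint(0, n) over everything in the directory. A standard-library variant sketch (pick_random_image and IMAGE_EXTS are hypothetical names) that only considers common image extensions, fails clearly on an empty directory, and accepts a seeded random.Random for reproducible picks.

```python
import os
import random
from typing import Optional

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of extensions to accept


def pick_random_image(directory: str, rng: Optional[random.Random] = None) -> str:
    """Return the path of a randomly chosen image file in `directory`."""
    if rng is None:
        rng = random.Random()
    # Sort so that a seeded rng gives the same pick regardless of listdir order.
    files = sorted(
        f for f in os.listdir(directory)
        if os.path.splitext(f)[1].lower() in IMAGE_EXTS
    )
    if not files:
        raise FileNotFoundError(f"no image files found in {directory!r}")
    return os.path.join(directory, rng.choice(files))
```

The returned path can be passed to Image.open and plt.imshow exactly as in the notebook cells above.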

benchmark/PaddleOCR_DBNet/.gitattributes (vendored, 2 changes)

@@ -1,2 +1,2 @@

|

|||||||

*.html linguist-language=python

|

*.html linguist-language=python

|

||||||

*.ipynb linguist-language=python

|

*.ipynb linguist-language=python

|

||||||

|

|||||||

benchmark/PaddleOCR_DBNet/.gitignore (vendored, 2 changes)

@@ -13,4 +13,4 @@ datasets/

|

|||||||

index/

|

index/

|

||||||

train_log/

|

train_log/

|

||||||

log/

|

log/

|

||||||

profiling_log/

|

profiling_log/

|

||||||

|

|||||||

@@ -37,4 +37,4 @@ dataset:

|

|||||||

batch_size: 1

|

batch_size: 1

|

||||||

shuffle: true

|

shuffle: true

|

||||||

num_workers: 0

|

num_workers: 0

|

||||||

collate_fn: ''

|

collate_fn: ''

|

||||||

|

|||||||

@@ -62,4 +62,4 @@ dataset:

|

|||||||

batch_size: 2

|

batch_size: 2

|

||||||

shuffle: true

|

shuffle: true

|

||||||

num_workers: 6

|

num_workers: 6

|

||||||

collate_fn: ''

|

collate_fn: ''

|

||||||

|

|||||||

@@ -66,4 +66,4 @@ dataset:

|

|||||||

batch_size: 1

|

batch_size: 1

|

||||||

shuffle: true

|

shuffle: true

|

||||||

num_workers: 0

|

num_workers: 0

|

||||||

collate_fn: ICDARCollectFN

|

collate_fn: ICDARCollectFN

|

||||||

|

|||||||

@@ -79,4 +79,4 @@ dataset:

|

|||||||

batch_size: 1

|

batch_size: 1

|

||||||

shuffle: true

|

shuffle: true

|

||||||

num_workers: 6

|

num_workers: 6

|

||||||

collate_fn: ICDARCollectFN

|

collate_fn: ICDARCollectFN

|

||||||

|

|||||||

@@ -70,4 +70,4 @@ dataset:

|

|||||||

batch_size: 1

|

batch_size: 1

|

||||||

shuffle: true

|

shuffle: true

|

||||||

num_workers: 0

|

num_workers: 0

|

||||||

collate_fn: ICDARCollectFN

|

collate_fn: ICDARCollectFN

|

||||||

|

|||||||

@@ -1 +1 @@

|

|||||||

CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py --model_path ''

|

CUDA_VISIBLE_DEVICES=0 python3 tools/eval.py --model_path ''

|

||||||

|

|||||||

@@ -1,2 +1,2 @@

|

|||||||

# export NCCL_P2P_DISABLE=1

|

# export NCCL_P2P_DISABLE=1

|

||||||

CUDA_VISIBLE_DEVICES=0,1,2,3 python3 -m paddle.distributed.launch tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

|

CUDA_VISIBLE_DEVICES=0,1,2,3 python3 -m paddle.distributed.launch tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

|

||||||

|

|||||||

@@ -1 +1 @@

|

|||||||

CUDA_VISIBLE_DEVICES=0 python tools/predict.py --model_path model_best.pth --input_folder ./input --output_folder ./output --thre 0.7 --polygon --show --save_result

|

CUDA_VISIBLE_DEVICES=0 python tools/predict.py --model_path model_best.pth --input_folder ./input --output_folder ./output --thre 0.7 --polygon --show --save_result

|

||||||

|

|||||||

@@ -1 +1 @@

|

|||||||

CUDA_VISIBLE_DEVICES=0 python3 tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

|

CUDA_VISIBLE_DEVICES=0 python3 tools/train.py --config_file "config/icdar2015_resnet50_FPN_DBhead_polyLR.yaml"

|

||||||

|

|||||||

@@ -5,4 +5,4 @@ img_{img_id}.jpg

|

|||||||

|

|

||||||

To predict a single image, change `img_path` in `/tools/predict.py` to your image number.

|

To predict a single image, change `img_path` in `/tools/predict.py` to your image number.

|

||||||

|

|

||||||

The result will be saved in the output_folder (default: test/output) that you specify in predict.sh.

|

The result will be saved in the output_folder (default: test/output) that you specify in predict.sh.

|

||||||

|

|||||||

@@ -64,4 +64,4 @@ function status_check(){

|

|||||||

else

|

else

|

||||||

echo -e "\033[33m Run failed with command - ${model_name} - ${run_command} - ${log_path} \033[0m" | tee -a ${run_log}

|

echo -e "\033[33m Run failed with command - ${model_name} - ${run_command} - ${log_path} \033[0m" | tee -a ${run_log}

|

||||||

fi

|

fi

|

||||||

}

|

}

|

||||||

|

|||||||

@@ -51,4 +51,4 @@ elif [ ${MODE} = "benchmark_train" ];then

|

|||||||

# cat dup* > train_icdar2015_label.txt && rm -rf dup*

|

# cat dup* > train_icdar2015_label.txt && rm -rf dup*

|

||||||

# cd ../../../

|

# cd ../../../

|

||||||

fi

|

fi

|

||||||

fi

|

fi

|

||||||

|

|||||||

@@ -340,4 +340,4 @@ else

|

|||||||

done # done with: for trainer in ${trainer_list[*]}; do

|

done # done with: for trainer in ${trainer_list[*]}; do

|

||||||

done # done with: for autocast in ${autocast_list[*]}; do

|

done # done with: for autocast in ${autocast_list[*]}; do

|

||||||

done # done with: for gpu in ${gpu_list[*]}; do

|

done # done with: for gpu in ${gpu_list[*]}; do

|

||||||

fi # end if [ ${MODE} = "infer" ]; then

|

fi # end if [ ${MODE} = "infer" ]; then

|

||||||

|

|||||||

@@ -194,7 +194,9 @@ class InferenceEngine(object):

|

|||||||

box_list = [box_list[i] for i, v in enumerate(idx) if v]

|

box_list = [box_list[i] for i, v in enumerate(idx) if v]

|

||||||

score_list = [score_list[i] for i, v in enumerate(idx) if v]

|

score_list = [score_list[i] for i, v in enumerate(idx) if v]

|

||||||

else:

|

else:

|

||||||

idx = box_list.reshape(box_list.shape[0], -1).sum(axis=1) > 0 # remove boxes whose values are all 0

|

idx = (

|

||||||

|

box_list.reshape(box_list.shape[0], -1).sum(axis=1) > 0

|

||||||

|

) # remove boxes whose values are all 0

|

||||||

box_list, score_list = box_list[idx], score_list[idx]

|

box_list, score_list = box_list[idx], score_list[idx]

|

||||||

else:

|

else:

|

||||||

box_list, score_list = [], []

|

box_list, score_list = [], []

|

||||||

|

|||||||
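The InferenceEngine change is only a black reformat of the line that drops all-zero boxes. The same filtering as a standalone sketch (drop_all_zero_boxes is a hypothetical name). It swaps `sum(axis=1) > 0` for `np.any(... != 0, axis=1)`, which gives the same result for non-negative pixel coordinates, and it handles the empty-array case itself because `reshape(0, -1)` cannot infer a size for zero rows.

```python
import numpy as np


def drop_all_zero_boxes(box_list: np.ndarray, score_list: np.ndarray):
    """Keep only boxes (and their scores) that have at least one non-zero coordinate."""
    if box_list.size == 0:
        return box_list, score_list
    # Flatten each box to one row, then keep rows with any non-zero value.
    keep = np.any(box_list.reshape(box_list.shape[0], -1) != 0, axis=1)
    return box_list[keep], score_list[keep]


boxes = np.array([
    [[0, 0], [0, 0], [0, 0], [0, 0]],  # padding box, should be dropped
    [[1, 2], [3, 2], [3, 4], [1, 4]],
])
scores = np.array([0.1, 0.9])
kept_boxes, kept_scores = drop_all_zero_boxes(boxes, scores)
```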

@@ -59,4 +59,3 @@ source ${BENCHMARK_ROOT}/scripts/run_model.sh # In this script, those that match

|

|||||||

_set_params $@

|

_set_params $@

|

||||||

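#_train # Uncomment if you only want to produce the training log without parsing it

|

#_train # Uncomment if you only want to produce the training log without parsing it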

|

||||||

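_run # This function is defined in run_model.sh and calls _train when executed; if you are not doing joint debugging and only want the training log, comment out this line, but re-enable it before submitting

|

_run # This function is defined in run_model.sh and calls _train when executed; if you are not doing joint debugging and only want the training log, comment out this line, but re-enable it before submitting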

|

||||||

|

|

||||||

|

|||||||

@@ -34,6 +34,3 @@ for model_mode in ${model_mode_list[@]}; do

|

|||||||

done

|

done

|

||||||

done

|

done

|

||||||

done

|

done

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -106,4 +106,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 2

|

num_workers: 2

|

||||||

|

|||||||

@@ -132,4 +132,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 8

|

num_workers: 8

|

||||||

|

|||||||

@@ -130,4 +130,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 2

|

num_workers: 2

|

||||||

|

|||||||

@@ -136,4 +136,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 2

|

num_workers: 2

|

||||||

|

|||||||

@@ -105,4 +105,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 2

|

num_workers: 2

|

||||||

|

|||||||

@@ -131,4 +131,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 8

|

num_workers: 8

|

||||||

|

|||||||

@@ -105,4 +105,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 1 # must be 1

|

batch_size_per_card: 1 # must be 1

|

||||||

num_workers: 2

|

num_workers: 2

|

||||||

|

|||||||

@@ -173,5 +173,3 @@ Eval:

|

|||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 8

|

batch_size_per_card: 8

|

||||||

num_workers: 8

|

num_workers: 8

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -198,9 +198,9 @@ class ArgsParser(ArgumentParser):

|

|||||||

lang = "cyrillic"

|

lang = "cyrillic"

|

||||||

elif lang in devanagari_lang:

|

elif lang in devanagari_lang:

|

||||||

lang = "devanagari"

|

lang = "devanagari"

|

||||||

global_config["Global"][

|

global_config["Global"]["character_dict_path"] = (

|

||||||

"character_dict_path"

|

"ppocr/utils/dict/{}_dict.txt".format(lang)

|

||||||

] = "ppocr/utils/dict/{}_dict.txt".format(lang)

|

)

|

||||||

global_config["Global"]["save_model_dir"] = "./output/rec_{}_lite".format(lang)

|

global_config["Global"]["save_model_dir"] = "./output/rec_{}_lite".format(lang)

|

||||||

global_config["Train"]["dataset"]["label_file_list"] = [

|

global_config["Train"]["dataset"]["label_file_list"] = [

|

||||||

"train_data/{}_train.txt".format(lang)

|

"train_data/{}_train.txt".format(lang)

|

||||||

|

|||||||
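The ArgsParser hunk maps individual language codes onto a shared script group before deriving the dictionary, save, and label paths. A sketch of that mapping (lang_paths is a hypothetical helper); the two language sets are hypothetical subsets, since the real cyrillic_lang and devanagari_lang lists are outside this hunk.

```python
# Hypothetical subsets; the real cyrillic_lang / devanagari_lang lists are not shown here.
CYRILLIC_LANG = {"ru", "uk", "be"}
DEVANAGARI_LANG = {"hi", "mr", "ne"}


def lang_paths(lang: str) -> dict:
    """Map a language code to its script group, then derive the per-language paths."""
    if lang in CYRILLIC_LANG:
        lang = "cyrillic"
    elif lang in DEVANAGARI_LANG:
        lang = "devanagari"
    return {
        "character_dict_path": "ppocr/utils/dict/{}_dict.txt".format(lang),
        "save_model_dir": "./output/rec_{}_lite".format(lang),
        "label_file_list": ["train_data/{}_train.txt".format(lang)],
    }
```

Grouping first means all languages written in one script share a single dictionary file and a single output directory.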

@@ -114,4 +114,3 @@ Eval:

|

|||||||

batch_size_per_card: 128

|

batch_size_per_card: 128

|

||||||

num_workers: 4

|

num_workers: 4

|

||||||

use_shared_memory: False

|

use_shared_memory: False

|

||||||

|

|

||||||

|

|||||||

@@ -81,4 +81,3 @@ Eval:

|

|||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 16

|

batch_size_per_card: 16

|

||||||

num_workers: 4

|

num_workers: 4

|

||||||

|

|

||||||

|

|||||||

@@ -82,4 +82,3 @@ Eval:

|

|||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 16

|

batch_size_per_card: 16

|

||||||

num_workers: 4

|

num_workers: 4

|

||||||

|

|

||||||

|

|||||||

@@ -142,4 +142,4 @@ Eval:

|

|||||||

shuffle: False

|

shuffle: False

|

||||||

drop_last: False

|

drop_last: False

|

||||||

batch_size_per_card: 10

|

batch_size_per_card: 10

|

||||||

num_workers: 8

|

num_workers: 8

|

||||||

|

|||||||

deploy/android_demo/.gitignore (vendored, 1 change)

@@ -6,4 +6,3 @@

|

|||||||

/build

|

/build

|

||||||

/captures

|

/captures

|

||||||

.externalNativeBuild

|

.externalNativeBuild

|

||||||

|

|

||||||

|

|||||||

@@ -90,4 +90,4 @@ task downloadAndExtractArchives(type: DefaultTask) {

|

|||||||

}

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

preBuild.dependsOn downloadAndExtractArchives

|

preBuild.dependsOn downloadAndExtractArchives

|

||||||

|

|||||||

@@ -35,4 +35,4 @@

|

|||||||

</provider>

|

</provider>

|

||||||

</application>

|

</application>

|

||||||

|

|

||||||

</manifest>

|

</manifest>

|

||||||

|

|||||||

@@ -114,4 +114,4 @@ add_custom_command(

|

|||||||

COMMAND

|

COMMAND

|

||||||

${CMAKE_COMMAND} -E copy

|

${CMAKE_COMMAND} -E copy

|

||||||

${PaddleLite_DIR}/cxx/libs/${ANDROID_ABI}/libhiai_ir_build.so

|

${PaddleLite_DIR}/cxx/libs/${ANDROID_ABI}/libhiai_ir_build.so

|

||||||

${CMAKE_LIBRARY_OUTPUT_DIRECTORY}/libhiai_ir_build.so)

|

${CMAKE_LIBRARY_OUTPUT_DIRECTORY}/libhiai_ir_build.so)

|

||||||

|

|||||||

@@ -42,4 +42,4 @@ cv::Mat cls_resize_img(const cv::Mat &img) {

|

|||||||

cv::BORDER_CONSTANT, {0, 0, 0});

|

cv::BORDER_CONSTANT, {0, 0, 0});

|

||||||

}

|

}

|

||||||

return resize_img;

|

return resize_img;

|

||||||

}

|

}

|

||||||

|

|||||||

@@ -20,4 +20,4 @@

|

|||||||

|

|

||||||

extern const std::vector<int> CLS_IMAGE_SHAPE;

|

extern const std::vector<int> CLS_IMAGE_SHAPE;

|

||||||

|

|

||||||

cv::Mat cls_resize_img(const cv::Mat &img);

|

cv::Mat cls_resize_img(const cv::Mat &img);

|

||||||

|

|||||||

@@ -17,4 +17,4 @@ cv::Mat crnn_resize_img(const cv::Mat &img, float wh_ratio);

|

|||||||

template <class ForwardIterator>

|

template <class ForwardIterator>

|

||||||

inline size_t argmax(ForwardIterator first, ForwardIterator last) {

|

inline size_t argmax(ForwardIterator first, ForwardIterator last) {

|

||||||

return std::distance(first, std::max_element(first, last));

|

return std::distance(first, std::max_element(first, last));

|

||||||

}

|

}

|

||||||

|

|||||||

@@ -339,4 +339,4 @@ filter_tag_det_res(const std::vector<std::vector<std::vector<int>>> &o_boxes,

|

|||||||

root_points.push_back(boxes[n]);

|

root_points.push_back(boxes[n]);

|

||||||

}

|

}

|

||||||

return root_points;

|

return root_points;

|

||||||

}

|

}

|

||||||

|

|||||||

@ -10,4 +10,4 @@ boxes_from_bitmap(const cv::Mat &pred, const cv::Mat &bitmap);

std::vector<std::vector<std::vector<int>>>
filter_tag_det_res(const std::vector<std::vector<std::vector<int>>> &o_boxes,
float ratio_h, float ratio_w, const cv::Mat &srcimg);
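Judging by its parameters (`ratio_h`, `ratio_w`, `srcimg`), `filter_tag_det_res` appears to map detected boxes from the resized network input back onto the source image before keeping them in `root_points`. The following is a simplified, OpenCV-free sketch under stated assumptions: each box is four `{x, y}` points, coordinates are divided by the resize ratios and clipped to the image, and boxes with a side of 4 pixels or less are dropped. The threshold, the point order, and the name `filter_boxes_sketch` are all assumptions. The real function may differ, for example by also reordering points.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// A box is four {x, y} points, matching the innermost
// std::vector<std::vector<int>> of the declaration above.
using Box = std::vector<std::vector<int>>;

// Hypothetical stand-in for filter_tag_det_res: rescale, clip, drop tiny boxes.
std::vector<Box> filter_boxes_sketch(std::vector<Box> boxes, float ratio_h,
                                     float ratio_w, int img_w, int img_h) {
  assert(ratio_h > 0.0f && ratio_w > 0.0f && img_w > 0 && img_h > 0);
  std::vector<Box> kept;
  for (Box &box : boxes) {
    for (std::vector<int> &pt : box) {
      // Undo the resize applied before detection.
      const int x = static_cast<int>(pt[0] / ratio_w);
      const int y = static_cast<int>(pt[1] / ratio_h);
      // Clip to valid pixel coordinates of the source image.
      pt[0] = std::min(std::max(x, 0), img_w - 1);
      pt[1] = std::min(std::max(y, 0), img_h - 1);
    }
    // Side lengths measured from the first point to its two neighbours.
    const double w = std::hypot(static_cast<double>(box[1][0] - box[0][0]),
                                static_cast<double>(box[1][1] - box[0][1]));
    const double h = std::hypot(static_cast<double>(box[3][0] - box[0][0]),
                                static_cast<double>(box[3][1] - box[0][1]));
    if (w <= 4.0 || h <= 4.0) {
      continue;  // assumed minimum-size threshold
    }
    kept.push_back(box);
  }
  return kept;
}
```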

@ -351,4 +351,4 @@ float OCR_PPredictor::postprocess_rec_score(const PredictorOutput &res) {
}

NET_TYPE OCR_PPredictor::get_net_flag() const { return NET_OCR; }
} // namespace ppredictor

@ -96,4 +96,4 @@ std::vector<PredictorOutput> PPredictor::infer() {
}

NET_TYPE PPredictor::get_net_flag() const { return (NET_TYPE)_net_flag; }
} // namespace ppredictor

@ -25,4 +25,4 @@ void PredictorInput::set_data(const float *input_data, int input_float_len) {
float *input_raw_data = get_mutable_float_data();
memcpy(input_raw_data, input_data, input_float_len * sizeof(float));
}
} // namespace ppredictor

@ -23,4 +23,4 @@ int64_t PredictorOutput::get_size() const {
const std::vector<int64_t> PredictorOutput::get_shape() const {
return _tensor->shape();
}
} // namespace ppredictor

@ -79,4 +79,4 @@ void neon_mean_scale(const float *din, float *dout, int size,
*(dout_c1++) = (*(din++) - mean[1]) * scale[1];
*(dout_c2++) = (*(din++) - mean[2]) * scale[2];
}
}
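The scalar tail of `neon_mean_scale` normalizes interleaved three-channel input, writing `(value - mean[c]) * scale[c]` into one output plane per channel. The diff shows `dout_c1` and `dout_c2`, and a matching `dout_c0` for channel 0 is presumed. The following plain C++ sketch performs the same computation. It assumes `din` holds `size` interleaved pixels (HWC) and `dout` holds three consecutive planes of `size` floats each (CHW). `mean_scale_scalar` is a hypothetical name, and this is not the NEON implementation.

```cpp
#include <cassert>
#include <vector>

// Hypothetical scalar reference: HWC interleaved input -> CHW planar output.
void mean_scale_scalar(const float *din, float *dout, int size,
                       const std::vector<float> &mean,
                       const std::vector<float> &scale) {
  assert(mean.size() >= 3 && scale.size() >= 3);
  float *dout_c0 = dout;             // channel 0 plane
  float *dout_c1 = dout + size;      // channel 1 plane
  float *dout_c2 = dout + 2 * size;  // channel 2 plane
  for (int i = 0; i < size; ++i) {
    // Each pixel contributes one value to each of the three planes.
    *(dout_c0++) = (*(din++) - mean[0]) * scale[0];
    *(dout_c1++) = (*(din++) - mean[1]) * scale[1];
    *(dout_c2++) = (*(din++) - mean[2]) * scale[2];
  }
}
```

With a zero mean and unit scale, two interleaved pixels `{1, 2, 3, 4, 5, 6}` come out as the planes `{1, 4}`, `{2, 5}`, `{3, 6}`, which makes the layout change easy to see.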

@ -2,4 +2,4 @@
<adaptive-icon xmlns:android="http://schemas.android.com/apk/res/android">
<background android:drawable="@drawable/ic_launcher_background" />
<foreground android:drawable="@drawable/ic_launcher_foreground" />
</adaptive-icon>

@ -2,4 +2,4 @@
<adaptive-icon xmlns:android="http://schemas.android.com/apk/res/android">
<background android:drawable="@drawable/ic_launcher_background" />
<foreground android:drawable="@drawable/ic_launcher_foreground" />
</adaptive-icon>

@ -64,4 +64,4 @@
<item>识别</item>
<item>分类</item>
</string-array>
</resources>

@ -17,4 +17,3 @@
<string name="DET_LONG_SIZE_DEFAULT">960</string>
<string name="SCORE_THRESHOLD_DEFAULT">0.1</string>
</resources>

@ -1,4 +1,4 @@
<?xml version="1.0" encoding="utf-8"?>
<paths xmlns:android="http://schemas.android.com/apk/res/android">
<external-files-path name="my_images" path="Pictures" />
</paths>

@ -14,4 +14,4 @@ public class ExampleUnitTest {
public void addition_isCorrect() {
assertEquals(4, 2 + 2);
}
}

2

deploy/avh/.gitignore

vendored


@ -2,4 +2,4 @@ include/inputs.h
include/outputs.h

__pycache__/
build/

@ -44,7 +44,7 @@ Case 3: If the demo is not run in the ci_cpu Docker container, then you will nee
pip install -r ./requirements.txt
```

In case 2 and case 3:

You will need to update your PATH environment variable to include the path to cmake 3.19.5 and the FVP.
For example, if you've installed these in `/opt/arm`, then you would do the following:

@ -76,4 +76,4 @@ echo -e "\e[36mArm(R) Arm(R) GNU Toolchain Installation SUCCESS\e[0m"
# Install TVM from TLCPack
echo -e "\e[36mStart installing TVM\e[0m"
pip install tlcpack-nightly -f https://tlcpack.ai/wheels
echo -e "\e[36mTVM Installation SUCCESS\e[0m"

@ -45,7 +45,7 @@ paddle_inference

#### 1.2.2 Install and configure OpenCV

1. Download the Windows build of OpenCV from the official OpenCV releases page: [download link](https://github.com/opencv/opencv/releases)
2. Run the downloaded executable and extract OpenCV to a directory of your choice, e.g. `D:\projects\cpp\opencv`

#### 1.2.3 Download the PaddleOCR code

@ -11,4 +11,3 @@ FetchContent_Declare(
GIT_TAG main
)
FetchContent_MakeAvailable(extern_Autolog)

@ -65,4 +65,4 @@ DECLARE_bool(det);
DECLARE_bool(rec);
DECLARE_bool(cls);
DECLARE_bool(table);
DECLARE_bool(layout);

@ -97,4 +97,4 @@ private:
DBPostProcessor post_processor_;
};

} // namespace PaddleOCR

@ -63,4 +63,4 @@ private:
}
};

} // namespace PaddleOCR

@ -79,4 +79,4 @@ public:
const int w);
};

} // namespace PaddleOCR

@ -75,4 +75,4 @@ private:
PicodetPostProcessor post_processor_;
};

} // namespace PaddleOCR

@ -83,4 +83,4 @@ private:

}; // class StructureTableRecognizer

} // namespace PaddleOCR

@ -110,4 +110,4 @@ private:
}
};

} // namespace PaddleOCR

@ -222,7 +222,7 @@ CUDNN_LIB_DIR=/your_cudnn_lib_dir
**Note:** ppocr uses the `PP-OCRv3` models by default, and the recognition model takes an input shape of `3,48,320`. To use an older PP-OCR model, set `--rec_img_h=32`.


Usage:
```shell
./build/ppocr [--param1] [--param2] [...]
```

@ -73,4 +73,4 @@ DEFINE_bool(det, true, "Whether use det in forward.");
DEFINE_bool(rec, true, "Whether use rec in forward.");
DEFINE_bool(cls, false, "Whether use cls in forward.");
DEFINE_bool(table, false, "Whether use table structure in forward.");
DEFINE_bool(layout, false, "Whether use layout analysis in forward.");

@ -422,4 +422,4 @@ float Utility::iou(std::vector<float> &box1, std::vector<float> &box2) {
}
}

} // namespace PaddleOCR
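Only the closing braces of `Utility::iou` change in this hunk. For reference, here is a minimal intersection-over-union sketch. It assumes each box is stored as `{x1, y1, x2, y2}` with `x1 <= x2` and `y1 <= y2`. The name `iou_sketch` and the zero-union guard are assumptions; this is not the body of `Utility::iou`.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical IoU for axis-aligned boxes stored as {x1, y1, x2, y2}.
float iou_sketch(const std::vector<float> &box1,
                 const std::vector<float> &box2) {
  assert(box1.size() >= 4 && box2.size() >= 4);
  // Overlap rectangle; width/height are clamped at zero for disjoint boxes.
  const float inter_w =
      std::max(0.0f, std::min(box1[2], box2[2]) - std::max(box1[0], box2[0]));
  const float inter_h =
      std::max(0.0f, std::min(box1[3], box2[3]) - std::max(box1[1], box2[1]));
  const float inter = inter_w * inter_h;
  const float area1 = (box1[2] - box1[0]) * (box1[3] - box1[1]);
  const float area2 = (box2[2] - box2[0]) * (box2[3] - box2[1]);
  const float uni = area1 + area2 - inter;
  // Two zero-area boxes would otherwise divide by zero.
  return uni > 0.0f ? inter / uni : 0.0f;
}
```

For two 2x2 boxes offset by one unit in each direction, the overlap is 1 and the union is 7, so the result is about 0.1429.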

@ -27,4 +27,4 @@ RUN tar xf /PaddleOCR/inference/{file}.tar -C /PaddleOCR/inference/

EXPOSE 8868

CMD ["/bin/bash","-c","hub install deploy/hubserving/ocr_system/ && hub serving start -m ocr_system"]

File diff suppressed because one or more lines are too long

@ -1,12 +1,12 @@
# PaddleOCR High-Performance All-Scenario Model Deployment Solution: FastDeploy

## Contents
- [Introduction to FastDeploy](#FastDeploy介绍)
- [PaddleOCR Model Deployment](#PaddleOCR模型部署)
- [FAQ](#常见问题)

## 1. Introduction to FastDeploy
<div id="FastDeploy介绍"></div>

**[⚡️FastDeploy](https://github.com/PaddlePaddle/FastDeploy)** is an **all-scenario**, **easy-to-use and flexible**, **highly efficient** AI inference deployment tool that supports **cloud, edge, and device** deployment. With FastDeploy, PaddleOCR models can be deployed quickly and efficiently on 10+ kinds of hardware, including X86 CPU, NVIDIA GPU, Phytium CPU, ARM CPU, Intel GPU, Kunlun, Ascend, Sophgo, and Rockchip, and multiple inference backends are supported, including Paddle Inference, Paddle Lite, TensorRT, OpenVINO, ONNXRuntime, SOPHGO, and RKNPU2.

@ -14,10 +14,10 @@

<img src="https://user-images.githubusercontent.com/31974251/224941235-d5ea4ed0-7626-4c62-8bbd-8e4fad1e72ad.png" >

</div>

## 2. PaddleOCR Model Deployment
<div id="PaddleOCR模型部署"></div>

### 2.1 Supported Hardware

@ -27,62 +27,62 @@
|NVIDIA GPU|✅|[Link](./cpu-gpu)|✅|✅|
|Phytium CPU|✅|[Link](./cpu-gpu)|✅|✅|
|ARM CPU|✅|[Link](./cpu-gpu)|✅|✅|
|Intel GPU (integrated)|✅|[Link](./cpu-gpu)|✅|✅|
|Intel GPU (discrete)|✅|[Link](./cpu-gpu)|✅|✅|
|Kunlun|✅|[Link](./kunlunxin)|✅|✅|
|Ascend|✅|[Link](./ascend)|✅|✅|
|Sophgo|✅|[Link](./sophgo)|✅|✅|
|Rockchip|✅|[Link](./rockchip)|✅|✅|

### 2.2 Detailed Documentation
- X86 CPU
  - [Preparing models for deployment](./cpu-gpu)
  - [Python deployment example](./cpu-gpu/python/)
  - [C++ deployment example](./cpu-gpu/cpp/)
- NVIDIA GPU
  - [Preparing models for deployment](./cpu-gpu)
  - [Python deployment example](./cpu-gpu/python/)
  - [C++ deployment example](./cpu-gpu/cpp/)
- Phytium CPU
  - [Preparing models for deployment](./cpu-gpu)
  - [Python deployment example](./cpu-gpu/python/)
  - [C++ deployment example](./cpu-gpu/cpp/)
- ARM CPU
  - [Preparing models for deployment](./cpu-gpu)
  - [Python deployment example](./cpu-gpu/python/)
  - [C++ deployment example](./cpu-gpu/cpp/)
- Intel GPU
  - [Preparing models for deployment](./cpu-gpu)
  - [Python deployment example](./cpu-gpu/python/)
  - [C++ deployment example](./cpu-gpu/cpp/)
- Kunlun XPU
  - [Preparing models for deployment](./kunlunxin)
  - [Python deployment example](./kunlunxin/python/)
  - [C++ deployment example](./kunlunxin/cpp/)
- Ascend
  - [Preparing models for deployment](./ascend)
  - [Python deployment example](./ascend/python/)
  - [C++ deployment example](./ascend/cpp/)
- Sophgo
  - [Preparing models for deployment](./sophgo/)
  - [Python deployment example](./sophgo/python/)
  - [C++ deployment example](./sophgo/cpp/)
- Rockchip
  - [Preparing models for deployment](./rockchip/)
  - [Python deployment example](./rockchip/rknpu2/)
  - [C++ deployment example](./rockchip/rknpu2/)

### 2.3 More Deployment Options

- [Android ARM CPU deployment](./android)
- [Serving deployment](./serving)
- [Web deployment](./web)


## 3. FAQ
<div id="常见问题"></div>

If you run into problems, check the FAQ collection or search the FastDeploy issues, *or file an [issue](https://github.com/PaddlePaddle/FastDeploy/issues) with FastDeploy*:

[FAQ collection](https://github.com/PaddlePaddle/FastDeploy/tree/develop/docs/cn/faq)
[FastDeploy issues](https://github.com/PaddlePaddle/FastDeploy/issues)

@ -25,7 +25,7 @@

|

|||||||

|

|

||||||

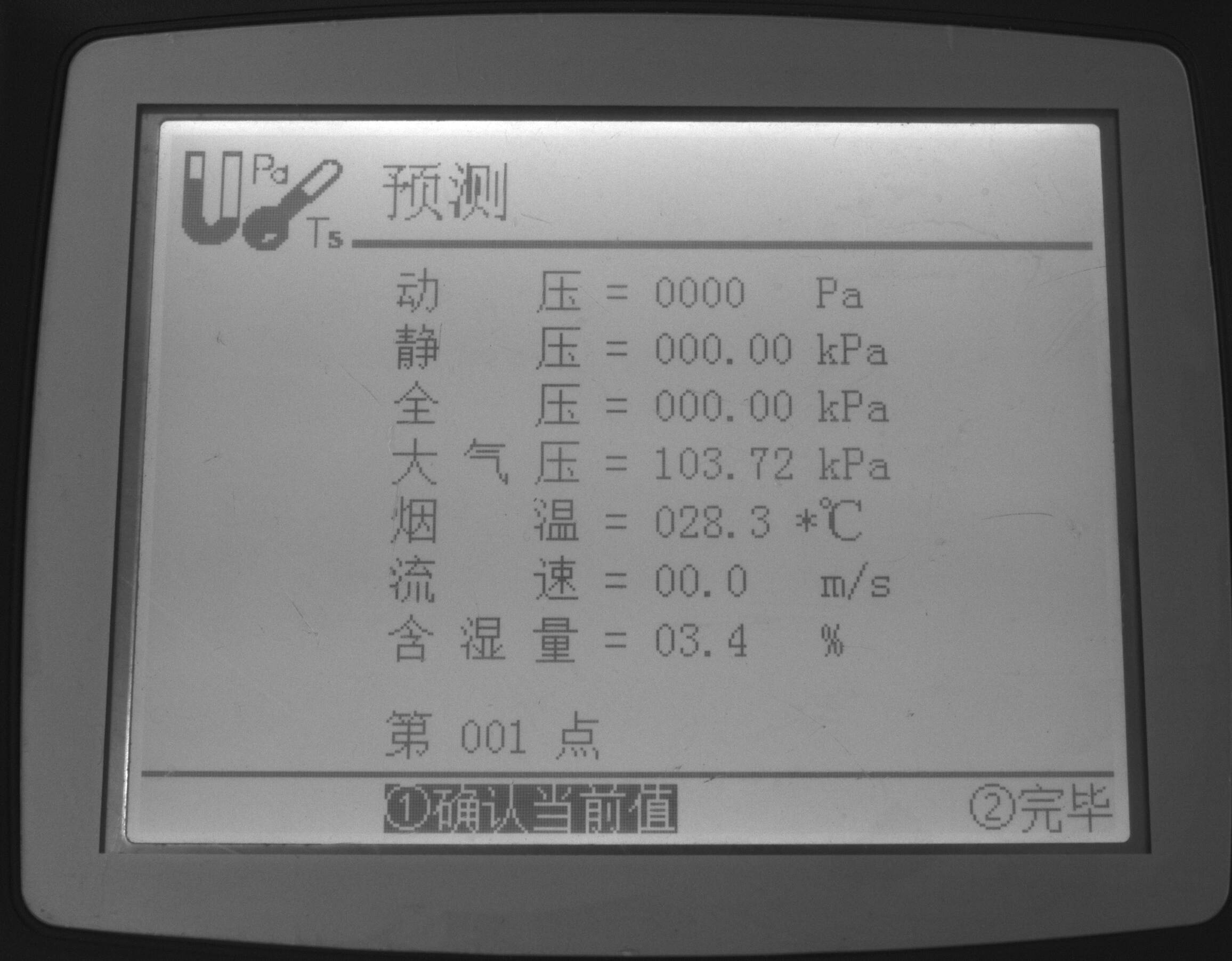

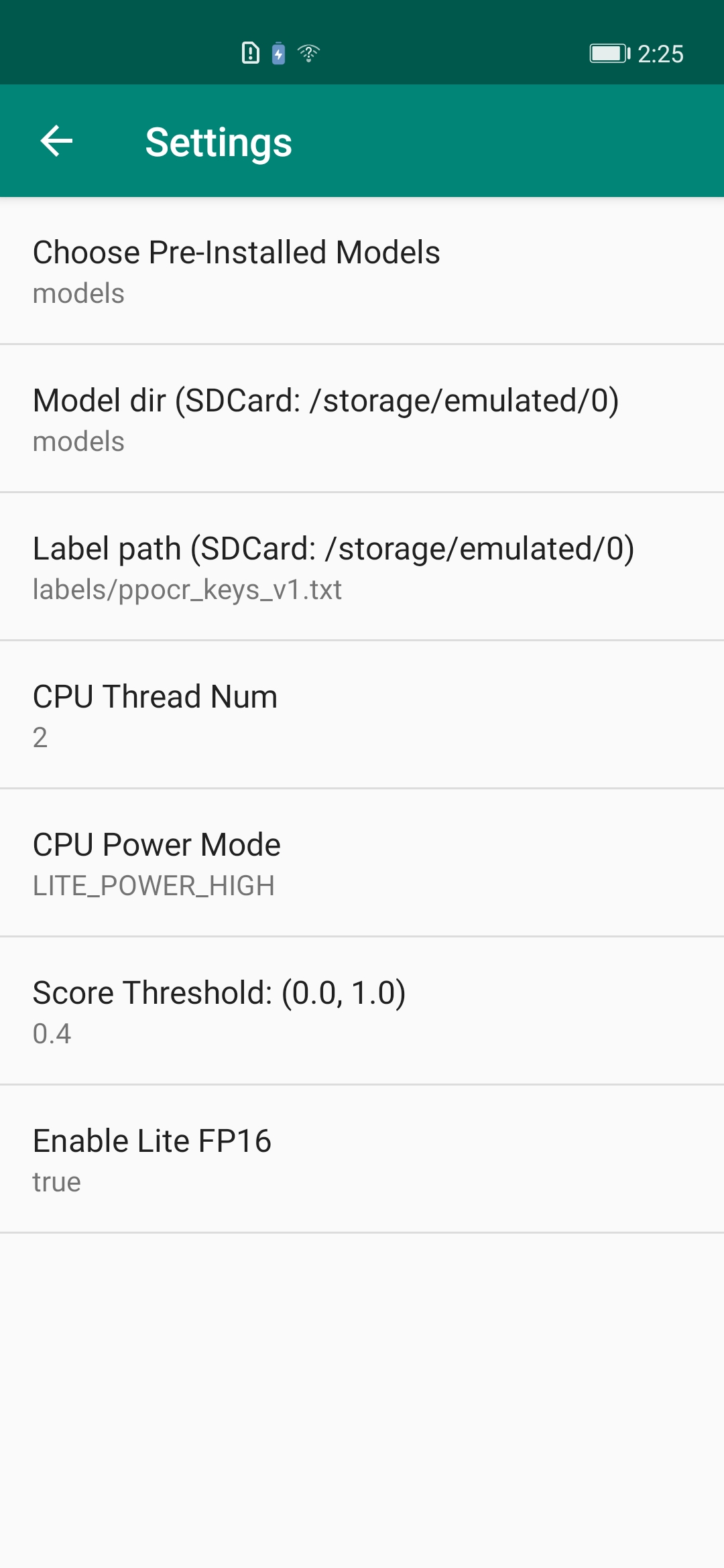

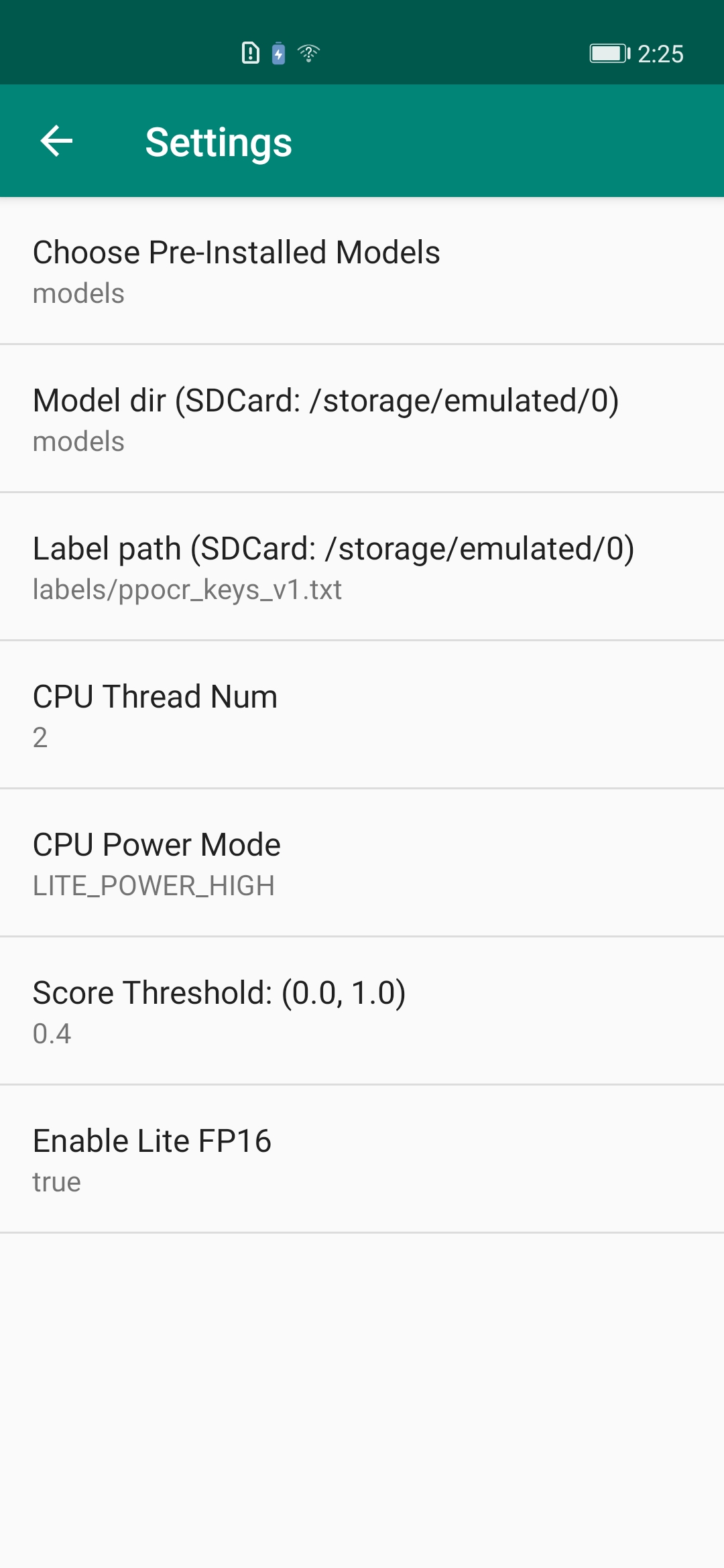

| APP 图标 | APP 效果 | APP设置项

|

| APP 图标 | APP 效果 | APP设置项

|

||||||

| --- | --- | --- |

|

| --- | --- | --- |

|

||||||

|  |  |  |

|

|  |  |  |

|

||||||

|

|

||||||

### PP-OCRv3 Java API 说明

|

### PP-OCRv3 Java API 说明

|

||||||

|

|

||||||

@ -47,7 +47,7 @@ public PPOCRv3(); // Empty constructor; call init() afterwards to initialize
// Constructor w/o classifier
public PPOCRv3(DBDetector detModel, Recognizer recModel);
public PPOCRv3(DBDetector detModel, Classifier clsModel, Recognizer recModel);
```
- Model prediction API: the prediction API consists of a plain prediction API and an API with visualization. Plain prediction only runs inference; it neither saves an image nor renders the results onto a Bitmap. Prediction with visualization runs inference, visualizes the results, saves the visualized image to a specified path, and renders the visualization onto a Bitmap (currently only ARGB8888 Bitmaps are supported), which can then be displayed in the camera view.
```java
// Plain prediction: does not save an image or render results onto the Bitmap

@ -58,13 +58,13 @@ public OCRResult predict(Bitmap ARGB8888Bitmap, boolean rendering); // render only
```
- Resource release API: call release() to free the model resources; it returns true on success and false on failure. Call initialized() to check whether the model was initialized successfully; it returns true on success and false on failure.
```java
public boolean release(); // release native resources
public boolean initialized(); // check whether initialization succeeded
```

- RuntimeOption settings

```java
public void enableLiteFp16(); // enable fp16 inference
public void disableLiteFP16(); // disable fp16 inference
public void enableLiteInt8(); // enable int8 inference, for quantized models

@ -83,13 +83,13 @@ public class OCRResult {
public float[] mClsScores; // confidence of the classification result for each text box
public int[] mClsLabels; // orientation class of each text box
public boolean mInitialized = false; // whether the detection result is valid
}
```
See also the corresponding C++/Python OCRResult documentation: [api/vision_results/ocr_result.md](https://github.com/PaddlePaddle/FastDeploy/blob/develop/docs/api/vision_results/ocr_result.md)


- Usage example 1: using the constructors
```java
import java.nio.ByteBuffer;
import android.graphics.Bitmap;
import android.opengl.GLES20;

@ -119,9 +119,9 @@ recOption.setCpuThreadNum(2);
detOption.setLitePowerMode(LitePowerMode.LITE_POWER_HIGH);
clsOption.setLitePowerMode(LitePowerMode.LITE_POWER_HIGH);
recOption.setLitePowerMode(LitePowerMode.LITE_POWER_HIGH);
detOption.enableLiteFp16();
clsOption.enableLiteFp16();
recOption.enableLiteFp16();
// Initialize the models
DBDetector detModel = new DBDetector(detModelFile, detParamsFile, detOption);
Classifier clsModel = new Classifier(clsModelFile, clsParamsFile, clsOption);

@ -135,14 +135,14 @@ Bitmap ARGB8888ImageBitmap = Bitmap.createBitmap(width, height, Bitmap.Config.AR
ARGB8888ImageBitmap.copyPixelsFromBuffer(pixelBuffer);

// Run inference
OCRResult result = model.predict(ARGB8888ImageBitmap);

// Release model resources
model.release();
```

- Usage example 2: call init manually at an appropriate point in the program
```java
// imports as above ...
import com.baidu.paddle.fastdeploy.RuntimeOption;
import com.baidu.paddle.fastdeploy.LitePowerMode;

@ -151,7 +151,7 @@ import com.baidu.paddle.fastdeploy.vision.ocr.Classifier;
import com.baidu.paddle.fastdeploy.vision.ocr.DBDetector;
import com.baidu.paddle.fastdeploy.vision.ocr.Recognizer;
// Create an empty model
PPOCRv3 model = new PPOCRv3();
// Model paths
String detModelFile = "ch_PP-OCRv3_det_infer/inference.pdmodel";
String detParamsFile = "ch_PP-OCRv3_det_infer/inference.pdiparams";

@ -170,9 +170,9 @@ recOption.setCpuThreadNum(2);
detOption.setLitePowerMode(LitePowerMode.LITE_POWER_HIGH);
clsOption.setLitePowerMode(LitePowerMode.LITE_POWER_HIGH);
recOption.setLitePowerMode(LitePowerMode.LITE_POWER_HIGH);
detOption.enableLiteFp16();
clsOption.enableLiteFp16();
recOption.enableLiteFp16();
// Initialize with init()
DBDetector detModel = new DBDetector(detModelFile, detParamsFile, detOption);
Classifier clsModel = new Classifier(clsModelFile, clsParamsFile, clsOption);

@ -192,10 +192,10 @@ model.init(detModel, clsModel, recModel);
- Change the default model paths in `app/src/main/res/values/strings.xml`, for example:
```xml
<!-- Change this path to your model's path -->
<string name="OCR_MODEL_DIR_DEFAULT">models</string>
<string name="OCR_LABEL_PATH_DEFAULT">labels/ppocr_keys_v1.txt</string>
```
## Using Quantized Models
If you are using a quantized model, simply enable Int8 inference with the enableLiteInt8() method of RuntimeOption.
```java
String detModelFile = "ch_ppocrv3_plate_det_quant/inference.pdmodel";

@ -214,10 +214,10 @@ DBDetector detModel = new DBDetector(detModelFile, detParamsFile, detOption);
Recognizer recModel = new Recognizer(recModelFile, recParamsFile, recLabelFilePath, recOption);
predictor.init(detModel, recModel);
```
For usage within an app, see [OcrMainActivity.java](./app/src/main/java/com/baidu/paddle/fastdeploy/app/examples/ocr/OcrMainActivity.java).

## More References
To learn more about the FastDeploy Java API, or how to access the FastDeploy C++ API through JNI, see:
- [Using the FastDeploy Java SDK on Android](https://github.com/PaddlePaddle/FastDeploy/tree/develop/java/android)
- [Using the FastDeploy C++ SDK on Android](https://github.com/PaddlePaddle/FastDeploy/blob/develop/docs/cn/faq/use_cpp_sdk_on_android.md)