mirror of https://github.com/PaddlePaddle/PaddleOCR.git, synced 2025-06-03 21:53:39 +08:00

add lp recognize doc, test=document

parent 0b6290eaf2, commit 45eb697945

applications/车牌识别.md (normal file, 612 lines)

@ -0,0 +1,612 @@

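- [1. Project Overview](#1-project-overview)
- [2. Environment Setup](#2-environment-setup)
- [3. Dataset Preparation](#3-dataset-preparation)
  - [3.1 Dataset Annotation Rules](#31-dataset-annotation-rules)
  - [3.2 Creating Annotation Files in PP-OCR Training Format](#32-creating-annotation-files-in-pp-ocr-training-format)
- [4. Experiments](#4-experiments)
  - [4.1 Detection](#41-detection)
    - [4.1.1 Option 1: Pretrained Model](#411-option-1-pretrained-model)
    - [4.1.2 Option 2: Fine-tuning on the CCPD License Plate Dataset](#412-option-2-fine-tuning-on-the-ccpd-license-plate-dataset)
    - [4.1.3 Quantization Training](#413-quantization-training)
    - [4.1.4 Model Export](#414-model-export)
  - [4.2 Recognition](#42-recognition)
    - [4.2.1 Option 1: Pretrained Model](#421-option-1-pretrained-model)
    - [4.2.2 Modifying the Post-processing](#422-modifying-the-post-processing)
    - [4.2.3 Option 2: Fine-tuning on the CCPD License Plate Dataset](#423-option-2-fine-tuning-on-the-ccpd-license-plate-dataset)
    - [4.2.4 Quantization Training](#424-quantization-training)
    - [4.2.5 Model Export](#425-model-export)
  - [4.3 End-to-End Inference](#43-end-to-end-inference)
  - [4.4 Experiment Summary](#44-experiment-summary)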

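# A Lightweight License Plate Recognition Model Based on PaddleOCR

## 1. Project Overview

Vehicle License Plate Recognition (VLPR) applies computer vision and image recognition to vehicle license plates. It requires extracting the plate of a moving vehicle from a complex background and recognizing it, and it is widely used in areas such as highway vehicle management and parking-lot management.

Because of these usage scenarios, license plate recognition usually has to be both highly accurate and real-time.

In this example, we use [PP-OCRv3](../doc/doc_ch/PP-OCRv3_introduction.md) to build a license plate recognition system and apply quantization to reduce the model size and speed up inference. Project link: [PaddleOCR License Plate](https://aistudio.baidu.com/aistudio/projectdetail/3919091?contributionType=1)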

## 2. Environment Setup

This task was completed on Aistudio with the following environment:

- Operating system: Linux
- PaddlePaddle: 2.3
- paddleslim: 2.2.2
- PaddleOCR: Release/2.5

Download the PaddleOCR code:

```bash
git clone -b dygraph https://github.com/PaddlePaddle/PaddleOCR
```

Install the dependencies:

```bash
pip install -r PaddleOCR/requirements.txt
```

## 3. Dataset Preparation

The dataset used is the CCPD new-energy license plate dataset, which is split as follows:

|Split|Number of images|
|---|---|
|Training set| 5769|
|Validation set| 1001|
|Test set| 5006|

Sample images from the dataset are shown below:

The dataset can be downloaded from https://aistudio.baidu.com/aistudio/datasetdetail/101595

After downloading, unzip the dataset:

```bash
unzip -d /home/aistudio/data /home/aistudio/data/data101595/CCPD2020.zip
```

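### 3.1 Dataset Annotation Rules

CCPD image file names follow a special convention; see https://github.com/detectRecog/CCPD for details.

The rules are as follows:

Example: `025-95_113-154&383_386&473-386&473_177&454_154&383_363&402-0_0_22_27_27_33_16-37-15.jpg`

The name is split by the separator `-` into several fields:

- `025`: ratio of the plate area to the area of the whole image.

- `95_113`: horizontal and vertical tilt of the plate, here 95° horizontal and 113° vertical.

- `154&383_386&473`: bounding box coordinates, top-left (154, 383) and bottom-right (386, 473).

- `386&473_177&454_154&383_363&402`: coordinates of the four plate corners, in the order [bottom-right, bottom-left, top-left, top-right].

- `0_0_22_27_27_33_16`: the plate number. Each CCPD image contains exactly one license plate (LP). Each LP number consists of one Chinese character, one letter, and five letters or digits, so a valid Chinese plate has seven characters: province (1 character), letter (1 character), letters and digits (5 characters). The new-energy plates used in this example carry one more character, as in the index string `0_0_3_32_30_31_30_30` that appears later in this document. `0_0_22_27_27_33_16` gives the index of each character in the three arrays defined below. The last entry of each array is the letter O, not the digit 0. O is used as the "no character" marker because Chinese plate characters do not include the letter O.

```python
provinces = ["皖", "沪", "津", "渝", "冀", "晋", "蒙", "辽", "吉", "黑", "苏", "浙", "京", "闽", "赣", "鲁", "豫", "鄂", "湘", "粤", "桂", "琼", "川", "贵", "云", "藏", "陕", "甘", "青", "宁", "新", "警", "学", "O"]
alphabets = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'J', 'K', 'L', 'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W',
             'X', 'Y', 'Z', 'O']
ads = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'J', 'K', 'L', 'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X',
       'Y', 'Z', '0', '1', '2', '3', '4', '5', '6', '7', '8', '9', 'O']
```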
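
The naming rules above can be sketched as a small parser. This is a minimal illustration, not code from the CCPD repository: the function name `parse_ccpd_filename` and the keys of the returned dictionary are chosen here for the example, and only the first five fields described above are decoded.

```python
PROVINCES = ["皖", "沪", "津", "渝", "冀", "晋", "蒙", "辽", "吉", "黑", "苏", "浙",
             "京", "闽", "赣", "鲁", "豫", "鄂", "湘", "粤", "桂", "琼", "川", "贵",
             "云", "藏", "陕", "甘", "青", "宁", "新", "警", "学", "O"]
ALPHABETS = list("ABCDEFGHJKLMNPQRSTUVWXYZ") + ["O"]
ADS = list("ABCDEFGHJKLMNPQRSTUVWXYZ") + list("0123456789") + ["O"]


def parse_points(field):
    """Turn 'x&y_x&y_...' into a list of (x, y) integer tuples."""
    return [tuple(int(v) for v in point.split("&")) for point in field.split("_")]


def parse_ccpd_filename(filename):
    """Decode the first five '-'-separated fields of a CCPD file name (hypothetical helper)."""
    stem = filename.rsplit(".", 1)[0]
    fields = stem.split("-")
    if len(fields) < 5:
        raise ValueError("not a CCPD-style file name: " + filename)
    h_tilt, v_tilt = (int(v) for v in fields[1].split("_"))
    indices = [int(v) for v in fields[4].split("_")]
    plate = (PROVINCES[indices[0]] + ALPHABETS[indices[1]]
             + "".join(ADS[i] for i in indices[2:]))
    return {
        "area": fields[0],                   # kept as the raw string
        "tilt": (h_tilt, v_tilt),            # horizontal, vertical
        "bbox": parse_points(fields[2]),     # [top-left, bottom-right]
        "corners": parse_points(fields[3]),  # [BR, BL, TL, TR]
        "plate": plate,
    }


info = parse_ccpd_filename(
    "025-95_113-154&383_386&473-386&473_177&454_154&383_363&402-0_0_22_27_27_33_16-37-15.jpg")
print(info["plate"], info["bbox"])
```

Because the plate characters after the first two are decoded with a slice (`indices[2:]`), the same function handles both seven-character and eight-character index strings.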

### 3.2 Creating Annotation Files in PP-OCR Training Format

Before training, the following code can be used to create annotation files in the format PP-OCR expects.

```python
import json
import os

import cv2
import numpy as np
from tqdm import tqdm

provinces = ["皖", "沪", "津", "渝", "冀", "晋", "蒙", "辽", "吉", "黑", "苏", "浙", "京", "闽", "赣", "鲁", "豫", "鄂", "湘", "粤", "桂", "琼", "川", "贵", "云", "藏", "陕", "甘", "青", "宁", "新", "警", "学", "O"]
alphabets = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'J', 'K', 'L', 'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z', 'O']
ads = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'J', 'K', 'L', 'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z', '0', '1', '2', '3', '4', '5', '6', '7', '8', '9', 'O']


def make_label(img_dir, save_gt_folder, phase):
    crop_img_save_dir = os.path.join(save_gt_folder, phase, 'crop_imgs')
    os.makedirs(crop_img_save_dir, exist_ok=True)

    det_path = os.path.join(save_gt_folder, phase, 'det.txt')
    rec_path = os.path.join(save_gt_folder, phase, 'rec.txt')
    with open(det_path, 'w', encoding='utf-8') as f_det, \
            open(rec_path, 'w', encoding='utf-8') as f_rec:
        i = 0
        for filename in tqdm(os.listdir(os.path.join(img_dir, phase))):
            str_list = filename.split('-')
            if len(str_list) < 5:
                continue  # skip files that do not follow the CCPD naming rule
            coord_list = str_list[3].split('_')
            txt_list = str_list[4].split('_')
            boxes = []
            for coord in coord_list:
                boxes.append([int(x) for x in coord.split("&")])
            # CCPD stores corners as [BR, BL, TL, TR]; reorder to [TL, TR, BR, BL]
            boxes = [boxes[2], boxes[3], boxes[0], boxes[1]]
            lp_number = provinces[int(txt_list[0])] + alphabets[int(txt_list[1])] + ''.join([ads[int(x)] for x in txt_list[2:]])

            # det: "<relative image path>\t<JSON list of boxes>"
            det_info = [{'points': boxes, 'transcription': lp_number}]
            f_det.write('{}\t{}\n'.format(os.path.join(phase, filename), json.dumps(det_info, ensure_ascii=False)))

            # rec: crop the plate, save it, and write "<relative crop path>\t<plate text>"
            boxes = np.float32(boxes)
            img = cv2.imread(os.path.join(img_dir, phase, filename))
            # crop_img = img[int(boxes[:,1].min()):int(boxes[:,1].max()),int(boxes[:,0].min()):int(boxes[:,0].max())]
            crop_img = get_rotate_crop_image(img, boxes)
            crop_img_save_filename = '{}_{}.jpg'.format(i, '_'.join(txt_list))
            crop_img_save_path = os.path.join(crop_img_save_dir, crop_img_save_filename)
            cv2.imwrite(crop_img_save_path, crop_img)
            f_rec.write('{}/crop_imgs/{}\t{}\n'.format(phase, crop_img_save_filename, lp_number))
            i += 1


def get_rotate_crop_image(img, points):
    # Disabled alternative: crop the axis-aligned region first and shift the points.
    # img_height, img_width = img.shape[0:2]
    # left = int(np.min(points[:, 0]))
    # right = int(np.max(points[:, 0]))
    # top = int(np.min(points[:, 1]))
    # bottom = int(np.max(points[:, 1]))
    # img_crop = img[top:bottom, left:right, :].copy()
    # points[:, 0] = points[:, 0] - left
    # points[:, 1] = points[:, 1] - top
    assert len(points) == 4, "shape of points must be 4*2"
    # Target size: the longer of each pair of opposite edges
    img_crop_width = int(
        max(
            np.linalg.norm(points[0] - points[1]),
            np.linalg.norm(points[2] - points[3])))
    img_crop_height = int(
        max(
            np.linalg.norm(points[0] - points[3]),
            np.linalg.norm(points[1] - points[2])))
    pts_std = np.float32([[0, 0], [img_crop_width, 0],
                          [img_crop_width, img_crop_height],
                          [0, img_crop_height]])
    # Perspective-warp the quadrilateral onto an upright rectangle
    M = cv2.getPerspectiveTransform(points, pts_std)
    dst_img = cv2.warpPerspective(
        img,
        M, (img_crop_width, img_crop_height),
        borderMode=cv2.BORDER_REPLICATE,
        flags=cv2.INTER_CUBIC)
    dst_img_height, dst_img_width = dst_img.shape[0:2]
    # Rotate crops that come out much taller than wide
    if dst_img_height * 1.0 / dst_img_width >= 1.5:
        dst_img = np.rot90(dst_img)
    return dst_img


img_dir = '/home/aistudio/data/CCPD2020/ccpd_green'
save_gt_folder = '/home/aistudio/data/CCPD2020/PPOCR'
# phase = 'train' # change to val and test to make val dataset and test dataset
for phase in ['train', 'val', 'test']:
    make_label(img_dir, save_gt_folder, phase)
```

Running the code above creates the `training set`, `validation set`, and `test set`. The resulting datasets are:

| Task | Split | Image directory | Label file | Number of images |
| --- | --- | --- | --- | --- |
| Detection | Training set | /home/aistudio/data/CCPD2020/ccpd_green/train | /home/aistudio/data/CCPD2020/PPOCR/train/det.txt | 5769 |
| Detection | Validation set | /home/aistudio/data/CCPD2020/ccpd_green/val | /home/aistudio/data/CCPD2020/PPOCR/val/det.txt | 1001 |
| Detection | Test set | /home/aistudio/data/CCPD2020/ccpd_green/test | /home/aistudio/data/CCPD2020/PPOCR/test/det.txt | 5006 |
| Recognition | Training set | /home/aistudio/data/CCPD2020/PPOCR/train/crop_imgs | /home/aistudio/data/CCPD2020/PPOCR/train/rec.txt | 5769 |
| Recognition | Validation set | /home/aistudio/data/CCPD2020/PPOCR/val/crop_imgs | /home/aistudio/data/CCPD2020/PPOCR/val/rec.txt | 1001 |
| Recognition | Test set | /home/aistudio/data/CCPD2020/PPOCR/test/crop_imgs | /home/aistudio/data/CCPD2020/PPOCR/test/rec.txt | 5006 |

In this example, we use only the training set and the test set.
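
To make the two label formats concrete, here is a minimal sketch that builds one `det.txt` line and one `rec.txt` line in the layout written by `make_label` and parses them back. The image paths are hypothetical, and `parse_det_line` and `parse_rec_line` are helper names introduced here, not PaddleOCR functions.

```python
import json


def parse_det_line(line):
    """Split a det.txt line into (image path, list of box dicts)."""
    path, payload = line.rstrip("\n").split("\t", 1)
    return path, json.loads(payload)


def parse_rec_line(line):
    """Split a rec.txt line into (crop path, plate text)."""
    path, text = line.rstrip("\n").split("\t", 1)
    return path, text


# Hypothetical sample lines; corner order is [TL, TR, BR, BL] as in make_label.
det_line = "train/example.jpg\t" + json.dumps(
    [{"points": [[154, 383], [363, 402], [386, 473], [177, 454]],
      "transcription": "皖AY339S"}],
    ensure_ascii=False) + "\n"
rec_line = "train/crop_imgs/0_0_0_22_27_27_33_16.jpg\t皖AY339S\n"

det_path, det_boxes = parse_det_line(det_line)
rec_path, rec_text = parse_rec_line(rec_line)
print(det_path, det_boxes[0]["transcription"], rec_text)
```

A quick pass of this kind over the generated files is a cheap way to catch lines with a missing tab or malformed JSON before starting a training run.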

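## 4. Experiments

We use the PP-OCRv3 models from PaddleOCR, the PaddlePaddle OCR development toolkit, for text detection and recognition. Compared with PP-OCRv2, PP-OCRv3 improves the end-to-end Hmean by 5% in Chinese scenarios and by 11% for the English and digit model. For the optimization details, see the [PP-OCRv3](../doc/doc_ch/PP-OCRv3_introduction.md) technical report.

Both plate detection and plate recognition are evaluated with the following three options:

1. The PP-OCRv3 Chinese-English ultra-lightweight pretrained model
2. The PP-OCRv3 model fine-tuned on the CCPD license plate dataset
3. The PP-OCRv3 model fine-tuned on the CCPD license plate dataset and then quantized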

### 4.1 Detection

#### 4.1.1 Option 1: Pretrained Model

Download the PP-OCRv3 text detection pretrained model from the table below:

|Model|Description|Config file|Inference model size|Download|
| --- | --- | --- | --- | --- |
|ch_PP-OCRv3_det| (Latest) Original ultra-lightweight model, supporting Chinese, English, and multilingual text detection |[ch_PP-OCRv3_det_cml.yml](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml)| 3.8M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar)|

Download the pretrained model with the following commands:

```bash
mkdir -p models
cd models
wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar
tar -xf ch_PP-OCRv3_det_distill_train.tar
cd /home/aistudio/PaddleOCR
```

Once the pretrained model is downloaded, we use the [ch_PP-OCRv3_det_student](../configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml) config file for the following experiments. Before evaluation, a few fields in the config must be set:

1. Global.pretrained_model: path to the PP-OCRv3 text detection pretrained model
2. Eval.dataset.data_dir: directory containing the evaluation images
3. Eval.dataset.label_file_list: annotation file of the evaluation set

Evaluate the PP-OCRv3 text detection pretrained model with the following command:

```bash
python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o \
    Global.pretrained_model=models/ch_PP-OCRv3_det_distill_train/student.pdparams \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/ccpd_green \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/det.txt]
```

Evaluating the pretrained model gives the following metric:

|Option|hmeans|
|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model|76.12%|
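
The hmeans column is the harmonic mean of detection precision and recall. A minimal sketch of the formula follows; the function name `hmean` is chosen here for illustration, and the check uses the precision and recall of the SAST row from the Total-Text table that appears later in this change set.

```python
def hmean(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision 89.63% and recall 78.44% give an Hmean of about 83.66%.
print(round(hmean(0.8963, 0.7844) * 100, 2))
```

Because the harmonic mean is dominated by the smaller of the two values, a detector cannot reach a high hmeans by trading recall for precision or the other way round.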

#### 4.1.2 Option 2: Fine-tuning on the CCPD License Plate Dataset

**Training**

As with evaluation, training requires a few config fields to be set.

When fine-tuning from the pretrained model, set the following 9 fields:

1. Global.pretrained_model: path to the PP-OCRv3 text detection pretrained model
2. Global.eval_batch_step: how often the model is evaluated. Here evaluation starts at step 0 and runs every 772 steps, where 772 is the total number of steps in one epoch.
3. Optimizer.lr.name: set the learning-rate scheduler to the constant schedule Const
4. Optimizer.lr.learning_rate: lower the learning rate for fine-tuning; the command below sets it to 0.0005
5. Optimizer.lr.warmup_epoch: set warmup_epoch to 0
6. Train.dataset.data_dir: directory containing the training images
7. Train.dataset.label_file_list: annotation file of the training set
8. Eval.dataset.data_dir: directory containing the evaluation images
9. Eval.dataset.label_file_list: annotation file of the evaluation set

Start fine-tuning on the CCPD license plate dataset with the following command:

```bash
python tools/train.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o \
    Global.pretrained_model=models/ch_PP-OCRv3_det_distill_train/student.pdparams \
    Global.save_model_dir=output/CCPD/det \
    Global.eval_batch_step="[0, 772]" \
    Optimizer.lr.name=Const \
    Optimizer.lr.learning_rate=0.0005 \
    Optimizer.lr.warmup_epoch=0 \
    Train.dataset.data_dir=/home/aistudio/data/CCPD2020/ccpd_green \
    Train.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/train/det.txt] \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/ccpd_green \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/det.txt]
```

**Evaluation**

After training, evaluate with the following command:

```bash
python tools/eval.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o \
    Global.pretrained_model=output/CCPD/det/best_accuracy.pdparams \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/ccpd_green \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/det.txt]
```

The metrics for the pretrained model and for the model fine-tuned on the CCPD license plate dataset are:

|Option|hmeans|
|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model|76.12%|
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model, fine-tuned|99%|

Fine-tuning raises the detection hmeans from 76.12% to 99%, a substantial improvement for license plate detection.

#### 4.1.3 Quantization Training

To deploy on edge devices, we also quantize the model to speed up inference and reduce the model size.

Quantization training can be started with the following command:

```bash
python3.7 deploy/slim/quantization/quant.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o \
    Global.pretrained_model=output/CCPD/det/best_accuracy.pdparams \
    Global.save_model_dir=output/CCPD/det_quant \
    Global.eval_batch_step="[0, 772]" \
    Optimizer.lr.name=Const \
    Optimizer.lr.learning_rate=0.0005 \
    Optimizer.lr.warmup_epoch=0 \
    Train.dataset.data_dir=/home/aistudio/data/CCPD2020/ccpd_green \
    Train.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/train/det.txt] \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/ccpd_green \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/det.txt]
```

The metrics before and after quantization are:

|Option|hmeans|Model size|Inference speed (lite)|
|---|---|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model, fine-tuned|99%|2.5M||
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model, fine-tuned + quantized|98.91%|1M||

Quantization significantly reduces the model size, from 2.5M to 1M, while hmeans drops only from 99% to 98.91%.
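
To give an intuition for why quantization shrinks the model, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is an illustration under simplifying assumptions (one scale per tensor, made-up weights), not the PaddleSlim implementation used by `quant.py`; `quantize_int8` and `dequantize` are hypothetical helper names.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single symmetric scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, max_error <= scale / 2 + 1e-12)
```

Each quantized value fits in one byte instead of the four bytes of a float32, at the cost of a rounding error of at most half the scale. Quantization training lets the network adapt to that rounding, which is consistent with the small accuracy change in the table above.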

#### 4.1.4 Model Export

The trained models can be exported with the following commands.

* Non-quantized model

```bash
python tools/export_model.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o \
    Global.pretrained_model=output/CCPD/det/best_accuracy.pdparams \
    Global.save_inference_dir=output/CCPD/det/infer
```

* Quantized model

```bash
python deploy/slim/quantization/export_model.py -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml -o \
    Global.pretrained_model=output/CCPD/det_quant/best_accuracy.pdparams \
    Global.save_inference_dir=output/CCPD/det_quant/infer
```

### 4.2 Recognition

#### 4.2.1 Option 1: Pretrained Model

Download the PP-OCRv3 text recognition pretrained model from the table below:

|Model|Description|Config file|Inference model size|Download|
| --- | --- | --- | --- | --- |
|ch_PP-OCRv3_rec| (Latest) Original ultra-lightweight model, supporting Chinese, English, and digit recognition |[ch_PP-OCRv3_rec_distillation.yml](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)| 12.4M |[inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar) / [trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar) |

Download the pretrained model with the following commands:

```bash
mkdir -p models
cd models
wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar
tar -xf ch_PP-OCRv3_rec_train.tar
cd /home/aistudio/PaddleOCR
```

The PP-OCRv3 recognition distillation checkpoint provided by PaddleOCR contains the parameters of several models, so the Student model parameters have to be extracted with the following code:

```python
import paddle

# Load the pretrained checkpoint
all_params = paddle.load("models/ch_PP-OCRv3_rec_train/best_accuracy.pdparams")
# Inspect the parameter keys
print(all_params.keys())
# Keep only the Student weights and strip the "Student." prefix
prefix = "Student."
s_params = {key[len(prefix):]: all_params[key] for key in all_params if key.startswith(prefix)}
# Inspect the Student parameter keys
print(s_params.keys())
# Save
paddle.save(s_params, "models/ch_PP-OCRv3_rec_train/student.pdparams")
```

Once the pretrained model is downloaded, we use the [ch_PP-OCRv3_rec.yml](../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml) config file for the following experiments. Before evaluation, a few fields in the config must be set:

1. Global.pretrained_model: path to the PP-OCRv3 text recognition pretrained model
2. Eval.dataset.data_dir: directory containing the evaluation images
3. Eval.dataset.label_file_list: annotation file of the evaluation set

Evaluate the PP-OCRv3 text recognition pretrained model with the following command:

```bash
python tools/eval.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=models/ch_PP-OCRv3_rec_train/student.pdparams \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/PPOCR \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/rec.txt]
```

Part of the evaluation log:

```bash
[2022/05/12 19:52:02] ppocr INFO: load pretrain successful from models/ch_PP-OCRv3_rec_train/best_accuracy
eval model:: 100%|██████████████████████████████| 40/40 [00:15<00:00, 2.57it/s]
[2022/05/12 19:52:17] ppocr INFO: metric eval ***************
[2022/05/12 19:52:17] ppocr INFO: acc:0.0
[2022/05/12 19:52:17] ppocr INFO: norm_edit_dis:0.8656084923002452
[2022/05/12 19:52:17] ppocr INFO: Teacher_acc:0.000399520574511545
[2022/05/12 19:52:17] ppocr INFO: Teacher_norm_edit_dis:0.8657902943394548
[2022/05/12 19:52:17] ppocr INFO: fps:1443.1801978719905
```

Evaluating the pretrained model gives the following metric:

|Option|acc|
|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model|0%|

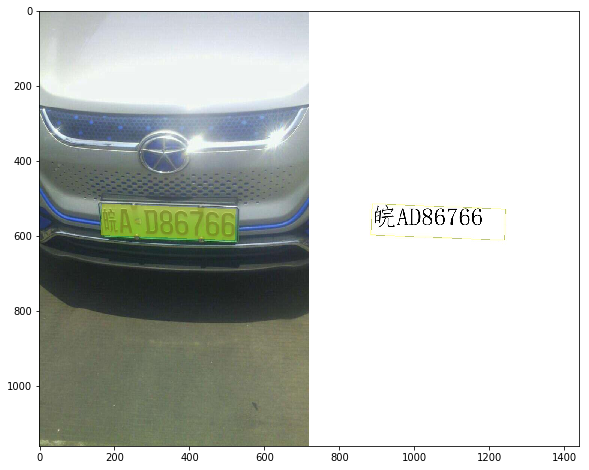

The evaluation log shows that using the PP-OCRv3 pretrained model directly yields a very low acc but a high norm_edit_dis. We therefore suspect that the model recognizes most characters correctly and gets only a few wrong. To verify this, run inference with the following command and inspect the result:

```bash
python tools/infer_rec.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=models/ch_PP-OCRv3_rec_train/student.pdparams \
    Global.infer_img=/home/aistudio/data/CCPD2020/PPOCR/test/crop_imgs/0_0_0_3_32_30_31_30_30.jpg
```

Part of the output log:

```bash
[2022/05/01 08:51:57] ppocr INFO: train with paddle 2.2.2 and device CUDAPlace(0)
W0501 08:51:57.127391 11326 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.0, Runtime API Version: 10.1
W0501 08:51:57.132315 11326 device_context.cc:465] device: 0, cuDNN Version: 7.6.
[2022/05/01 08:52:00] ppocr INFO: load pretrain successful from models/ch_PP-OCRv3_rec_train/student
[2022/05/01 08:52:00] ppocr INFO: infer_img: /home/aistudio/data/CCPD2020/PPOCR/test/crop_imgs/0_0_3_32_30_31_30_30.jpg
[2022/05/01 08:52:00] ppocr INFO: result: {"Student": {"label": "皖A·D86766", "score": 0.9552637934684753}, "Teacher": {"label": "皖A·D86766", "score": 0.9917094707489014}}
[2022/05/01 08:52:00] ppocr INFO: success!
```

The inference result shows that most characters on the plate are recognized correctly; the model only outputs one extra `·`. There are two ways to handle this:

1. Remove the extra `·` in post-processing.
2. Fine-tune the model.
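
The gap between `acc` and `norm_edit_dis` is easy to reproduce on this sample. The sketch below assumes that `acc` counts exact matches and that `norm_edit_dis` is one minus the Levenshtein distance divided by the length of the longer string; `levenshtein` and `norm_edit_similarity` are helper names introduced here, not PaddleOCR functions.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def norm_edit_similarity(pred, label):
    """1 - distance / longer length; 1.0 means identical strings."""
    longest = max(len(pred), len(label))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(pred, label) / longest


pred, label = "皖A·D86766", "皖AD86766"
print(pred == label, levenshtein(pred, label), norm_edit_similarity(pred, label))
```

One extra character is enough to make the exact match fail, yet it costs only one edit out of nine characters, so the similarity stays close to 0.89. That is in line with a log showing zero accuracy next to a norm_edit_dis of about 0.87.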

#### 4.2.2 Modifying the Post-processing

Removing the extra `·` in post-processing is a simple change: add the following line at line 76 of `ppocr/postprocess/rec_postprocess.py`:

```python
text = text.replace('·','')
```

Metrics before and after the change:

|Option|acc|
|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model|0%|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + post-processing that removes the extra `·`|90.97%|

Removing the extra `·` raises accuracy substantially, to 90.97%.
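
The effect of this post-processing step on exact-match accuracy can be sketched outside PaddleOCR. In the example below the first prediction comes from the inference log above, while the second pair is made up for illustration; `strip_separator` and `exact_match_accuracy` are hypothetical helper names.

```python
def strip_separator(text, separator="·"):
    """Remove the separator dot that the pretrained model tends to emit."""
    return text.replace(separator, "")


def exact_match_accuracy(preds, labels):
    """Fraction of predictions that equal their label exactly."""
    if not labels:
        return 0.0
    return sum(p == l for p, l in zip(preds, labels)) / len(labels)


preds = ["皖A·D86766", "皖A·D12345"]   # second entry is a made-up sample
labels = ["皖AD86766", "皖AD12345"]

before = exact_match_accuracy(preds, labels)
after = exact_match_accuracy([strip_separator(p) for p in preds], labels)
print(before, after)
```

The replacement is only safe because `·` never appears in the CCPD labels; on a dataset whose ground truth contains the dot, the same line would lower accuracy instead.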

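#### 4.2.3 Option 2: Fine-tuning on the CCPD License Plate Dataset

**Training**

As with evaluation, training requires a few config fields to be set:

1. Global.pretrained_model: path to the PP-OCRv3 text recognition pretrained model
2. Global.eval_batch_step: how often the model is evaluated. Here evaluation starts at step 0 and runs every 90 steps, where 90 is the total number of steps in one epoch at a batch size of 64.
3. Optimizer.lr.name: set the learning-rate scheduler to the constant schedule Const
4. Optimizer.lr.learning_rate: lower the learning rate for fine-tuning; the command below sets it to 0.0005
5. Optimizer.lr.warmup_epoch: set warmup_epoch to 0
6. Train.dataset.data_dir: directory containing the training images
7. Train.dataset.label_file_list: annotation file of the training set
8. Train.loader.batch_size_per_card: number of images per card during training, set to 64 here
9. Eval.dataset.data_dir: directory containing the evaluation images
10. Eval.dataset.label_file_list: annotation file of the evaluation set
11. Eval.loader.batch_size_per_card: number of images per card during evaluation, set to 64 here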
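
The evaluation interval of 90 steps used in the fine-tuning command for this section matches one epoch: 5769 training crops at 64 images per step. A minimal sketch of that arithmetic follows, assuming a single card and that the incomplete final batch is dropped; `steps_per_epoch` and its parameters are illustrative names, not PaddleOCR APIs.

```python
def steps_per_epoch(num_samples, batch_size_per_card, num_cards=1, drop_last=True):
    """Number of optimizer steps needed to see the dataset once."""
    per_step = batch_size_per_card * num_cards
    if drop_last:
        return num_samples // per_step   # incomplete final batch is skipped
    return -(-num_samples // per_step)   # ceiling division keeps it


print(steps_per_epoch(5769, 64))                    # 90
print(steps_per_epoch(5769, 64, drop_last=False))   # 91
```

If the batch size or the number of cards changes, `Global.eval_batch_step` should be recomputed in the same way so that evaluation still runs once per epoch.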

Start fine-tuning with the following command:

```bash
python tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=models/ch_PP-OCRv3_rec_train/student.pdparams \
    Global.save_model_dir=output/CCPD/rec/ \
    Global.eval_batch_step="[0, 90]" \
    Optimizer.lr.name=Const \
    Optimizer.lr.learning_rate=0.0005 \
    Optimizer.lr.warmup_epoch=0 \
    Train.dataset.data_dir=/home/aistudio/data/CCPD2020/PPOCR \
    Train.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/train/rec.txt] \
    Train.loader.batch_size_per_card=64 \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/PPOCR \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/rec.txt] \
    Eval.loader.batch_size_per_card=64
```

**Evaluation**

After training, evaluate with the following command:

```bash
python tools/eval.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=output/CCPD/rec/best_accuracy.pdparams \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/PPOCR \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/rec.txt]
```

The metrics for the pretrained model and for the model fine-tuned on the CCPD license plate dataset are:

|Option|acc|
|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model|0%|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + post-processing that removes the extra `·`|90.97%|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, fine-tuned|94.36%|

Fine-tuning gives the best recognition accuracy, 94.36%, compared with 90.97% for the post-processing fix alone.

#### 4.2.4 Quantization Training

To deploy on edge devices, we also quantize the model to speed up inference and reduce the model size.

Quantization training can be started with the following command:

```bash
python3.7 deploy/slim/quantization/quant.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=output/CCPD/rec/best_accuracy.pdparams \
    Global.save_model_dir=output/CCPD/rec_quant/ \
    Global.eval_batch_step="[0, 90]" \
    Optimizer.lr.name=Const \
    Optimizer.lr.learning_rate=0.0005 \
    Optimizer.lr.warmup_epoch=0 \
    Train.dataset.data_dir=/home/aistudio/data/CCPD2020/PPOCR \
    Train.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/train/rec.txt] \
    Train.loader.batch_size_per_card=64 \
    Eval.dataset.data_dir=/home/aistudio/data/CCPD2020/PPOCR \
    Eval.dataset.label_file_list=[/home/aistudio/data/CCPD2020/PPOCR/test/rec.txt] \
    Eval.loader.batch_size_per_card=64
```

The metrics before and after quantization are:

|Option|acc|Model size|Inference speed (lite)|
|---|---|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, fine-tuned|94.36%|10.3M||
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, fine-tuned + quantized|94.36%|4.8M||

Quantization significantly reduces the model size, from 10.3M to 4.8M, with acc unchanged at 94.36%.

#### 4.2.5 Model Export

The trained models can be exported with the following commands.

* Non-quantized model

```bash
python tools/export_model.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=output/CCPD/rec/best_accuracy.pdparams \
    Global.save_inference_dir=output/CCPD/rec/infer
```

* Quantized model

```bash
python deploy/slim/quantization/export_model.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml -o \
    Global.pretrained_model=output/CCPD/rec_quant/best_accuracy.pdparams \
    Global.save_inference_dir=output/CCPD/rec_quant/infer
```

### 4.3 End-to-End Inference

After the detection and recognition models have each been fine-tuned and exported as inference models, the following command runs end-to-end inference and visualizes the result.

```bash
python tools/infer/predict_system.py \
    --det_model_dir=output/CCPD/det/infer/ \
    --rec_model_dir=output/CCPD/rec/infer/ \
    --image_dir="/home/aistudio/data/CCPD2020/ccpd_green/test/04131106321839081-92_258-159&509_530&611-527&611_172&599_159&509_530&525-0_0_3_32_30_31_30_30-109-106.jpg" \
    --rec_image_shape=3,48,320
```

The inference result is shown below:

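### 4.4 Experiment Summary

We ran three experiments with the PP-OCRv3 Chinese-English ultra-lightweight pretrained models on the license plate dataset: direct evaluation, fine-tuning, and fine-tuning plus quantization. The metrics compare as follows:

- Detection

|Option|hmeans|Model size|Inference speed (lite)|
|---|---|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model|76.12%|||
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model, fine-tuned|99%|2.5M||
|PP-OCRv3 Chinese-English ultra-lightweight detection pretrained model, fine-tuned + quantized|98.91%|1M||

- Recognition

|Option|acc|Model size|Inference speed (lite)|
|---|---|---|---|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model|0%|x|x|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model + post-processing that removes the extra `·`|90.97%|x|x|
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, fine-tuned|94.36%|10.3M||
|PP-OCRv3 Chinese-English ultra-lightweight recognition pretrained model, fine-tuned + quantized|94.36%|4.8M||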

@ -41,6 +41,12 @@ On Total-Text dataset, the text detection result is as follows:

| --- | --- | --- | --- | --- | --- |
|SAST|ResNet50_vd|89.63%|78.44%|83.66%|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/det_r50_vd_sast_totaltext_v2.0_train.tar)|

On CTW1500 dataset, the text detection result is as follows:

|Model|Backbone|Precision|Recall|Hmean| Download link|
| --- | --- | --- | --- | --- |---|
|FCE|ResNet50_dcn|88.39%|82.18%|85.27%| [trained model](https://paddleocr.bj.bcebos.com/contribution/det_r50_dcn_fce_ctw_v2.0_train.tar) |

**Note:** Additional data, like icdar2013, icdar2017, COCO-Text, ArT, was added to the model training of SAST. Download English public dataset in organized format used by PaddleOCR from:
* [Baidu Drive](https://pan.baidu.com/s/12cPnZcVuV1zn5DOd4mqjVw) (download code: 2bpi).
* [Google Drive](https://drive.google.com/drive/folders/1ll2-XEVyCQLpJjawLDiRlvo_i4BqHCJe?usp=sharing)
