@@ -100,7 +100,7 @@ Considering that the features of some channels will be suppressed if the convolu

The recognition module of PP-OCRv3 is optimized based on the text recognition algorithm [SVTR](https://arxiv.org/abs/2205.00159). SVTR abandons RNN and instead introduces a Transformer structure to mine the context information of text line images more effectively, thereby improving text recognition ability.

-The recognition accuracy of SVTR_inty outperforms PP-OCRv2 recognition model by 5.3%, while the prediction speed nearly 11 times slower. It takes nearly 100ms to predict a text line on CPU. Therefore, as shown in the figure below, PP-OCRv3 adopts the following six optimization strategies to accelerate the recognition model.

+The recognition accuracy of SVTR_tiny is 5.3% higher than that of the PP-OCRv2 recognition model, but its prediction speed is nearly 11 times slower: it takes nearly 100ms to predict a text line on CPU. Therefore, as shown in the figure below, PP-OCRv3 adopts the following six optimization strategies to accelerate the recognition model.

diff --git a/doc/doc_en/algorithm_rec_starnet.md b/doc/doc_en/algorithm_rec_starnet.md

new file mode 100644

index 000000000..dbb53a9c7

--- /dev/null

+++ b/doc/doc_en/algorithm_rec_starnet.md

@@ -0,0 +1,139 @@

+# STAR-Net

+

+- [1. Introduction](#1)

+- [2. Environment](#2)

+- [3. Model Training / Evaluation / Prediction](#3)

+ - [3.1 Training](#3-1)

+ - [3.2 Evaluation](#3-2)

+ - [3.3 Prediction](#3-3)

+- [4. Inference and Deployment](#4)

+ - [4.1 Python Inference](#4-1)

+ - [4.2 C++ Inference](#4-2)

+ - [4.3 Serving](#4-3)

+ - [4.4 More](#4-4)

+- [5. FAQ](#5)

+

+

+## 1. Introduction

+

+Paper information:

+> [STAR-Net: a spatial attention residue network for scene text recognition.](http://www.bmva.org/bmvc/2016/papers/paper043/paper043.pdf)

+> Wei Liu, Chaofeng Chen, Kwan-Yee K. Wong, Zhizhong Su and Junyu Han.

+> BMVC, pages 43.1-43.13, 2016

+

+Following the [DTRB](https://arxiv.org/abs/1904.01906) text recognition training and evaluation process, the models are trained on the MJSynth and SynthText text recognition datasets and evaluated on the IIIT, SVT, IC03, IC13, IC15, SVTP, and CUTE datasets. The reproduced results are as follows:

+

+|Models|Backbone Networks|Avg Accuracy|Configuration Files|Download Links|

+| --- | --- | --- | --- | --- |

+|StarNet|Resnet34_vd|84.44%|[configs/rec/rec_r34_vd_tps_bilstm_ctc.yml](../../configs/rec/rec_r34_vd_tps_bilstm_ctc.yml)|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_r34_vd_tps_bilstm_ctc_v2.0_train.tar)|

+|StarNet|MobileNetV3|81.42%|[configs/rec/rec_mv3_tps_bilstm_ctc.yml](../../configs/rec/rec_mv3_tps_bilstm_ctc.yml)|[trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_mv3_tps_bilstm_ctc_v2.0_train.tar)|

+

+

+

+## 2. Environment

+Please refer to [Operating Environment Preparation](./environment_en.md) to configure the PaddleOCR operating environment, and refer to [Project Clone](./clone_en.md) to clone the project code.

+

+

+## 3. Model Training / Evaluation / Prediction

+

+Please refer to [Text Recognition Training Tutorial](./recognition_en.md). PaddleOCR modularizes the code, so training a different recognition model only requires **changing the configuration file**. The examples below use the Resnet34_vd backbone:

+

+

+### 3.1 Training

+After the data is prepared, start training with the following commands:

+

+```shell
+# Single-card training (long training time, not recommended)
+python3 tools/train.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml
+
+# Multi-card training: specify the card IDs with the --gpus parameter
+python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml
+```

+

+

+### 3.2 Evaluation

+

+````

+# GPU evaluation, Global.pretrained_model is the model to be evaluated

+python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml -o Global.pretrained_model={path/to/weights}/best_accuracy

+ ````

+

+

+### 3.3 Prediction

+

+````

+# The configuration file used for prediction must match the one used for training

+python3 tools/infer_rec.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml -o Global.pretrained_model={path/to/weights}/best_accuracy Global.infer_img=doc/imgs_words/en/word_1.png

+ ````

+

+

+## 4. Inference and Deployment

+

+

+### 4.1 Python Inference

+First, convert the model saved during STAR-Net text recognition training into an inference model. Taking the model trained on the MJSynth and SynthText text recognition datasets with the Resnet34_vd backbone as an example ([model download address](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/rec_r34_vd_tps_bilstm_ctc_v2.0_train.tar)), run the following command to convert it:

+

+```shell

+python3 tools/export_model.py -c configs/rec/rec_r34_vd_tps_bilstm_ctc.yml -o Global.pretrained_model=./rec_r34_vd_tps_bilstm_ctc_v2.0_train/best_accuracy Global.save_inference_dir=./inference/rec_starnet

+ ````

+

+To run inference with the STAR-Net text recognition model, execute the following command:

+

+```shell

+python3 tools/infer/predict_rec.py --image_dir="./doc/imgs_words_en/word_336.png" --rec_model_dir="./inference/rec_starnet/" --rec_image_shape="3, 32, 100" --rec_char_dict_path="./ppocr/utils/ic15_dict.txt"

+ ````
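The `--rec_image_shape="3, 32, 100"` argument above sets the input tensor shape that each text line is resized and padded to. The following is a minimal NumPy sketch of that kind of preprocessing, assuming an aspect-ratio-preserving resize to height 32, pixel normalization to [-1, 1], and zero padding on the right up to width 100. The function name `resize_and_pad` and the nearest-neighbour resize are hypothetical, illustrative choices, not PaddleOCR's implementation.

```python
import math

import numpy as np


def resize_and_pad(img, image_shape=(3, 32, 100)):
    """Resize an HxWxC uint8 text-line image and pad it to image_shape (C, H, W).

    Hypothetical sketch: keeps the aspect ratio at the target height, uses
    nearest-neighbour sampling, scales pixels to [-1, 1], and zero-pads on
    the right up to the target width.
    """
    img_c, img_h, img_w = image_shape
    h, w = img.shape[:2]
    # Width at the target height, capped at the target width.
    resized_w = min(img_w, int(math.ceil(img_h * w / float(h))))
    # Nearest-neighbour source indices for each output row and column.
    rows = (np.arange(img_h) * h / img_h).astype(int)
    cols = (np.arange(resized_w) * w / resized_w).astype(int)
    resized = img[rows][:, cols].astype(np.float32)
    # HWC -> CHW, then map [0, 255] to [-1, 1].
    resized = resized.transpose((2, 0, 1)) / 255.0
    resized = (resized - 0.5) / 0.5
    padded = np.zeros((img_c, img_h, img_w), dtype=np.float32)
    padded[:, :, :resized_w] = resized
    return padded


# Usage: a synthetic 48x120 white image becomes 3x32x80 of content plus padding.
word = np.full((48, 120, 3), 255, dtype=np.uint8)
x = resize_and_pad(word)
print(x.shape)  # (3, 32, 100)
```

In this sketch, padding rather than stretching keeps short words from being distorted, while a line wider than 100 pixels at height 32 is squeezed to fit.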

+

+

+

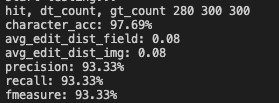

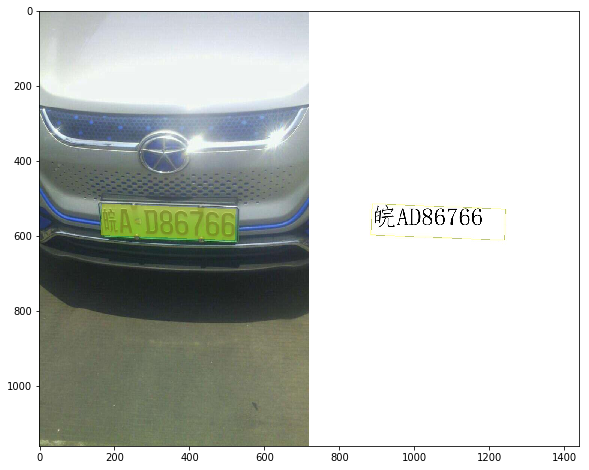

+The inference results are as follows:

+

+

+```bash

+Predicts of ./doc/imgs_words_en/word_336.png:('super', 0.9999073)

+```
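The STAR-Net configurations above end in `ctc` (`..._bilstm_ctc.yml`), so the network output is decoded with CTC. A minimal sketch of greedy CTC decoding shows how a `(text, score)` pair such as `('super', 0.9999073)` can be produced. It assumes the blank class sits at index 0 and that the score is the mean of the per-step maximum probabilities of the kept characters; both are assumptions for illustration, not a description of PaddleOCR's post-processing.

```python
import numpy as np

# Assumed class layout: CTC blank at index 0, then the 36 characters.
CHARACTERS = ["blank"] + list("0123456789abcdefghijklmnopqrstuvwxyz")


def ctc_greedy_decode(probs, characters=CHARACTERS, blank=0):
    """Greedy CTC decoding of a (time_steps, num_classes) probability matrix.

    Picks the most likely class per time step, merges consecutive repeats,
    drops blanks, and scores the text as the mean probability of the kept
    steps (an assumed scoring rule).
    """
    best = probs.argmax(axis=1)
    best_prob = probs.max(axis=1)
    chars, confs = [], []
    prev = None
    for idx, p in zip(best, best_prob):
        # Keep a step only if it is not blank and not a repeat of the previous step.
        if idx != blank and idx != prev:
            chars.append(characters[idx])
            confs.append(p)
        prev = idx
    score = float(np.mean(confs)) if confs else 0.0
    return "".join(chars), score


# Usage: a toy 4-step output whose best path is "g g <blank> o".
num_classes = len(CHARACTERS)
path = [CHARACTERS.index(c) for c in ["g", "g", "blank", "o"]]
probs = np.full((len(path), num_classes), 0.1 / (num_classes - 1))
for t, idx in enumerate(path):
    probs[t, idx] = 0.9
print(ctc_greedy_decode(probs))  # ('go', 0.9)
```

Because repeats are merged only when they are adjacent, a blank between two identical characters (for example "a <blank> a") is what lets the decoder emit a double letter.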

+

+**Note:** Because the above model follows the [DTRB](https://arxiv.org/abs/1904.01906) text recognition training and evaluation process, it differs from the ultra-lightweight Chinese recognition model in two aspects:

+

+- Image resolution. The above models are trained at a resolution of [3, 32, 100], whereas the Chinese models are trained at [3, 32, 320] to preserve recognition accuracy on long text. The inference program defaults to the Chinese training resolution, [3, 32, 320], so when running inference with the English models above, set the input image shape with the `rec_image_shape` parameter.

+

+- Character list. The experiments in the DTRB paper cover only the 26 lowercase English letters and 10 digits, 36 characters in total. Uppercase letters are converted to lowercase, and all other characters are ignored and treated as spaces. Therefore, no character dictionary file is provided as input; instead, the dictionary is generated by the code below. Consequently, `rec_char_dict_path` must be set during inference, pointing to the English dictionary "./ppocr/utils/ic15_dict.txt".

+

+```python
+self.character_str = "0123456789abcdefghijklmnopqrstuvwxyz"
+dict_character = list(self.character_str)
+```
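A minimal sketch of how labels could be normalized against this 36-character set: lowercase first, then map each character to its index. Dropping unsupported characters (rather than mapping them to a space, which is not in the set) is an assumption of this sketch, and `encode_label` / `decode_ids` are hypothetical helpers, not PaddleOCR functions.

```python
# The 36-character set described above (10 digits + 26 lowercase letters).
CHARACTER_STR = "0123456789abcdefghijklmnopqrstuvwxyz"
CHAR_TO_ID = {ch: i for i, ch in enumerate(CHARACTER_STR)}


def encode_label(text):
    """Lowercase a label and map it to indices, skipping unsupported characters.

    Hypothetical sketch: characters outside the 36-character set are simply
    dropped, since a space is not part of the set.
    """
    ids = []
    for ch in text.lower():
        if ch in CHAR_TO_ID:
            ids.append(CHAR_TO_ID[ch])
    return ids


def decode_ids(ids):
    """Map indices back to characters."""
    return "".join(CHARACTER_STR[i] for i in ids)


ids = encode_label("Super-8!")
print(ids)              # [28, 30, 25, 14, 27, 8]
print(decode_ids(ids))  # super8
```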

+

+

+### 4.2 C++ Inference

+

+After preparing the inference model, refer to the [cpp infer](../../deploy/cpp_infer/) tutorial.

+

+

+### 4.3 Serving

+

+After preparing the inference model, refer to the [pdserving](../../deploy/pdserving/) tutorial for Serving deployment, including two modes: Python Serving and C++ Serving.

+

+

+### 4.4 More

+

+The STAR-Net model also supports the following inference deployment methods:

+

+- Paddle2ONNX Inference: After preparing the inference model, refer to the [paddle2onnx](../../deploy/paddle2onnx/) tutorial.

+

+

+## 5. FAQ

+

+## Citation

+

+```bibtex

+@inproceedings{liu2016star,

+ title={STAR-Net: a spatial attention residue network for scene text recognition.},

+ author={Liu, Wei and Chen, Chaofeng and Wong, Kwan-Yee K and Su, Zhizhong and Han, Junyu},

+ booktitle={BMVC},

+ volume={2},

+ pages={7},

+ year={2016}

+}

+```

+

+

diff --git a/doc/doc_en/detection_en.md b/doc/doc_en/detection_en.md

index 76e0f8509..f85bf585c 100644

--- a/doc/doc_en/detection_en.md

+++ b/doc/doc_en/detection_en.md

@@ -159,7 +159,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/MobileNetV3_large_x0_5_pretrained

```

-**Note:** When using multi-machine and multi-gpu training, you need to replace the ips value in the above command with the address of your machine, and the machines need to be able to ping each other. In addition, training needs to be launched separately on multiple machines. The command to view the ip address of the machine is `ifconfig`.

+**Note:** (1) When using multi-machine, multi-GPU training, replace the `ips` value in the above command with the IP addresses of your machines, and make sure the machines can ping each other. (2) Training must be launched separately on each machine. To view a machine's IP address, run `ifconfig`. (3) For more details about the distributed training speedup ratio, please refer to [Distributed Training Tutorial](./distributed_training_en.md).

### 2.6 Training with knowledge distillation

diff --git a/doc/doc_en/distributed_training.md b/doc/doc_en/distributed_training_en.md

similarity index 70%

rename from doc/doc_en/distributed_training.md

rename to doc/doc_en/distributed_training_en.md

index 2822ee5e4..5a219ed2b 100644

--- a/doc/doc_en/distributed_training.md

+++ b/doc/doc_en/distributed_training_en.md

@@ -40,11 +40,17 @@ python3 -m paddle.distributed.launch \

## Performance comparison

-* Based on 26W public recognition dataset (LSVT, rctw, mtwi), training on single 8-card P40 and dual 8-card P40, the final time consumption is as follows.

+* On two machines with 8 P40 GPUs each, the training time and speedup ratio on the public recognition datasets (LSVT, RCTW, MTWI) totaling 260k images are as follows.

-| Model | Config file | Number of machines | Number of GPUs per machine | Training time | Recognition acc | Speedup ratio |

-| :-------: | :------------: | :----------------: | :----------------------------: | :------------------: | :--------------: | :-----------: |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 1 | 8 | 60h | 66.7% | - |

-| CRNN | configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml | 2 | 8 | 40h | 67.0% | 150% |

-It can be seen that the training time is shortened from 60h to 40h, the speedup ratio can reach 150% (60h / 40h), and the efficiency is 75% (60h / (40h * 2)).

+| Model | Config file | Recognition acc | Training time (1 machine × 8 GPUs) | Training time (2 machines × 8 GPUs) | Speedup ratio |

+|------|-----|--------|--------|--------|-----|

+| CRNN | [rec_chinese_lite_train_v2.0.yml](../../configs/rec/ch_ppocr_v2.0/rec_chinese_lite_train_v2.0.yml) | 67.0% | 2.50d | 1.67d | **1.5** |

+

+

+* On four machines with 8 V100 GPUs each, the training time and speedup ratio on the full dataset are as follows.

+

+

+| Model | Config file | Recognition acc | Training time (1 machine × 8 GPUs) | Training time (4 machines × 8 GPUs) | Speedup ratio |

+|------|-----|--------|--------|--------|-----|

+| SVTR | [ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml) | 74.0% | 10d | 2.84d | **3.5** |
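The speedup ratio in both tables is the single-machine training time divided by the multi-machine training time; dividing it further by the number of machines gives the scaling efficiency that the previous version of this document reported (60h / (40h * 2) = 75%). A small sketch that recomputes both from the table values; the helper name `speedup_and_efficiency` is hypothetical.

```python
def speedup_and_efficiency(single_time, multi_time, num_machines):
    """Speedup = single-machine time / multi-machine time;
    efficiency = speedup / number of machines."""
    speedup = single_time / multi_time
    return speedup, speedup / num_machines


# Training times (in days) taken from the two tables above.
for name, t1, tn, n in [("CRNN", 2.50, 1.67, 2), ("SVTR", 10.0, 2.84, 4)]:
    s, e = speedup_and_efficiency(t1, tn, n)
    print(f"{name}: speedup {s:.1f}, efficiency {e:.0%}")
# CRNN: speedup 1.5, efficiency 75%
# SVTR: speedup 3.5, efficiency 88%
```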

diff --git a/doc/doc_en/ppocr_introduction_en.md b/doc/doc_en/ppocr_introduction_en.md

index b13d7f9bf..d28ccb352 100644

--- a/doc/doc_en/ppocr_introduction_en.md

+++ b/doc/doc_en/ppocr_introduction_en.md

@@ -29,10 +29,10 @@ PP-OCR pipeline is as follows:

The PP-OCR system is under continuous optimization. At present, PP-OCR, PP-OCRv2, and PP-OCRv3 have been released:

-PP-OCR adopts 19 effective strategies from 8 aspects including backbone network selection and adjustment, prediction head design, data augmentation, learning rate transformation strategy, regularization parameter selection, pre-training model use, and automatic model tailoring and quantization to optimize and slim down the models of each module (as shown in the green box above). The final results are an ultra-lightweight Chinese and English OCR model with an overall size of 3.5M and a 2.8M English digital OCR model. For more details, please refer to the PP-OCR technical article (https://arxiv.org/abs/2009.09941).

+PP-OCR adopts 19 effective strategies from 8 aspects, including backbone network selection and adjustment, prediction head design, data augmentation, learning rate transformation strategy, regularization parameter selection, pre-trained model use, and automatic model tailoring and quantization, to optimize and slim down the models of each module (as shown in the green box above). The result is an ultra-lightweight Chinese and English OCR model with a total size of 3.5M and a 2.8M English and digit OCR model. For more details, please refer to [PP-OCR technical report](https://arxiv.org/abs/2009.09941).

#### PP-OCRv2

-On the basis of PP-OCR, PP-OCRv2 is further optimized in five aspects. The detection model adopts CML(Collaborative Mutual Learning) knowledge distillation strategy and CopyPaste data expansion strategy. The recognition model adopts LCNet lightweight backbone network, U-DML knowledge distillation strategy and enhanced CTC loss function improvement (as shown in the red box above), which further improves the inference speed and prediction effect. For more details, please refer to the technical report of PP-OCRv2 (https://arxiv.org/abs/2109.03144).

+On the basis of PP-OCR, PP-OCRv2 is further optimized in five aspects. The detection model adopts the CML (Collaborative Mutual Learning) knowledge distillation strategy and the CopyPaste data augmentation strategy. The recognition model adopts the LCNet lightweight backbone network, the U-DML knowledge distillation strategy, and an enhanced CTC loss function (as shown in the red box above), which further improves inference speed and prediction accuracy. For more details, please refer to [PP-OCRv2 technical report](https://arxiv.org/abs/2109.03144).

#### PP-OCRv3

@@ -46,7 +46,7 @@ PP-OCRv3 pipeline is as follows:

-For more details, please refer to [PP-OCRv3 technical report](./PP-OCRv3_introduction_en.md).

+For more details, please refer to [PP-OCRv3 technical report](https://arxiv.org/abs/2206.03001v2).

## 2. Features

diff --git a/doc/doc_en/quickstart_en.md b/doc/doc_en/quickstart_en.md

index d7aeb7773..c678dc476 100644

--- a/doc/doc_en/quickstart_en.md

+++ b/doc/doc_en/quickstart_en.md

@@ -119,7 +119,18 @@ If you do not use the provided test image, you can replace the following `--imag

['PAIN', 0.9934559464454651]

```

-If you need to use the 2.0 model, please specify the parameter `--ocr_version PP-OCR`, paddleocr uses the PP-OCRv3 model by default(`--ocr_version PP-OCRv3`). More whl package usage can be found in [whl package](./whl_en.md)

+**Version**

+paddleocr uses the PP-OCRv3 models by default (`--ocr_version PP-OCRv3`). To use another version, set the `--ocr_version` parameter. The available versions are described below:

+| Version name | Description |
+| --- | --- |
+| PP-OCRv3 | Supports Chinese and English detection and recognition, the direction classifier, and multilingual recognition |
+| PP-OCRv2 | Supports only Chinese and English detection and recognition and the direction classifier; the multilingual models are not updated |
+| PP-OCR | Supports Chinese and English detection and recognition, the direction classifier, and multilingual recognition |

+

+To add your own trained model, add its model link and key to [paddleocr](../../paddleocr.py) and recompile.
+
+For more whl package usage, see [whl package](./whl_en.md).

+

#### 2.1.2 Multi-language Model

diff --git a/doc/doc_en/recognition_en.md b/doc/doc_en/recognition_en.md

index 60b4a1b26..7d31b0ffe 100644

--- a/doc/doc_en/recognition_en.md

+++ b/doc/doc_en/recognition_en.md

@@ -306,7 +306,7 @@ python3 -m paddle.distributed.launch --ips="xx.xx.xx.xx,xx.xx.xx.xx" --gpus '0,1

-o Global.pretrained_model=./pretrain_models/rec_mv3_none_bilstm_ctc_v2.0_train

```

-**Note:** When using multi-machine and multi-gpu training, you need to replace the ips value in the above command with the address of your machine, and the machines need to be able to ping each other. In addition, training needs to be launched separately on multiple machines. The command to view the ip address of the machine is `ifconfig`.

+**Note:** (1) When using multi-machine, multi-GPU training, replace the `ips` value in the above command with the IP addresses of your machines, and make sure the machines can ping each other. (2) Training must be launched separately on each machine. To view a machine's IP address, run `ifconfig`. (3) For more details about the distributed training speedup ratio, please refer to [Distributed Training Tutorial](./distributed_training_en.md).

### 2.6 Training with Knowledge Distillation

diff --git a/paddleocr.py b/paddleocr.py

index a1265f79d..470dc60da 100644

--- a/paddleocr.py

+++ b/paddleocr.py

@@ -154,7 +154,13 @@ MODEL_URLS = {

'https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_infer.tar',

'dict_path': './ppocr/utils/ppocr_keys_v1.txt'

}

- }

+ },

+ 'cls': {

+ 'ch': {

+ 'url':

+ 'https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar',

+ }

+ },

},

'PP-OCR': {

'det': {

diff --git a/ppocr/data/imaug/__init__.py b/ppocr/data/imaug/__init__.py

index 548832fb0..f0fd578f6 100644

--- a/ppocr/data/imaug/__init__.py

+++ b/ppocr/data/imaug/__init__.py

@@ -22,7 +22,7 @@ from .make_shrink_map import MakeShrinkMap

from .random_crop_data import EastRandomCropData, RandomCropImgMask

from .make_pse_gt import MakePseGt

-from .rec_img_aug import RecAug, RecConAug, RecResizeImg, ClsResizeImg, \

+from .rec_img_aug import BaseDataAugmentation, RecAug, RecConAug, RecResizeImg, ClsResizeImg, \

SRNRecResizeImg, NRTRRecResizeImg, SARRecResizeImg, PRENResizeImg

from .ssl_img_aug import SSLRotateResize

from .randaugment import RandAugment

diff --git a/ppocr/data/imaug/rec_img_aug.py b/ppocr/data/imaug/rec_img_aug.py

index 7483dffe5..32de2b3fc 100644

--- a/ppocr/data/imaug/rec_img_aug.py

+++ b/ppocr/data/imaug/rec_img_aug.py

@@ -22,13 +22,74 @@ from .text_image_aug import tia_perspective, tia_stretch, tia_distort

class RecAug(object):

- def __init__(self, use_tia=True, aug_prob=0.4, **kwargs):

- self.use_tia = use_tia

- self.aug_prob = aug_prob

+ def __init__(self,

+ tia_prob=0.4,

+ crop_prob=0.4,

+ reverse_prob=0.4,

+ noise_prob=0.4,

+ jitter_prob=0.4,

+ blur_prob=0.4,

+ hsv_aug_prob=0.4,

+ **kwargs):

+ self.tia_prob = tia_prob

+ self.bda = BaseDataAugmentation(crop_prob, reverse_prob, noise_prob,

+ jitter_prob, blur_prob, hsv_aug_prob)

def __call__(self, data):

img = data['image']

- img = warp(img, 10, self.use_tia, self.aug_prob)

+ h, w, _ = img.shape

+

+ # tia

+ if random.random() <= self.tia_prob:

+ if h >= 20 and w >= 20:

+ img = tia_distort(img, random.randint(3, 6))

+ img = tia_stretch(img, random.randint(3, 6))

+ img = tia_perspective(img)

+

+ # bda

+ data['image'] = img

+ data = self.bda(data)

+ return data

+

+

+class BaseDataAugmentation(object):

+ def __init__(self,

+ crop_prob=0.4,

+ reverse_prob=0.4,

+ noise_prob=0.4,

+ jitter_prob=0.4,

+ blur_prob=0.4,

+ hsv_aug_prob=0.4,

+ **kwargs):

+ self.crop_prob = crop_prob

+ self.reverse_prob = reverse_prob

+ self.noise_prob = noise_prob

+ self.jitter_prob = jitter_prob

+ self.blur_prob = blur_prob

+ self.hsv_aug_prob = hsv_aug_prob

+

+ def __call__(self, data):

+ img = data['image']

+ h, w, _ = img.shape

+

+ if random.random() <= self.crop_prob and h >= 20 and w >= 20:

+ img = get_crop(img)

+

+ if random.random() <= self.blur_prob:

+ img = blur(img)

+

+ if random.random() <= self.hsv_aug_prob:

+ img = hsv_aug(img)

+

+ if random.random() <= self.jitter_prob:

+ img = jitter(img)

+

+ if random.random() <= self.noise_prob:

+ img = add_gasuss_noise(img)

+

+ if random.random() <= self.reverse_prob:

+ img = 255 - img

+

data['image'] = img

return data

@@ -359,7 +420,7 @@ def flag():

return 1 if random.random() > 0.5000001 else -1

-def cvtColor(img):

+def hsv_aug(img):

"""

cvtColor

"""

@@ -427,50 +488,6 @@ def get_crop(image):

return crop_img

-class Config:

- """

- Config

- """

-

- def __init__(self, use_tia):

- self.anglex = random.random() * 30

- self.angley = random.random() * 15

- self.anglez = random.random() * 10

- self.fov = 42

- self.r = 0

- self.shearx = random.random() * 0.3

- self.sheary = random.random() * 0.05

- self.borderMode = cv2.BORDER_REPLICATE

- self.use_tia = use_tia

-

- def make(self, w, h, ang):

- """

- make

- """

- self.anglex = random.random() * 5 * flag()

- self.angley = random.random() * 5 * flag()

- self.anglez = -1 * random.random() * int(ang) * flag()

- self.fov = 42

- self.r = 0

- self.shearx = 0

- self.sheary = 0

- self.borderMode = cv2.BORDER_REPLICATE

- self.w = w

- self.h = h

-

- self.perspective = self.use_tia

- self.stretch = self.use_tia

- self.distort = self.use_tia

-

- self.crop = True

- self.affine = False

- self.reverse = True

- self.noise = True

- self.jitter = True

- self.blur = True

- self.color = True

-

-

def rad(x):

"""

rad

@@ -554,48 +571,3 @@ def get_warpAffine(config):

rz = np.array([[np.cos(rad(anglez)), np.sin(rad(anglez)), 0],

[-np.sin(rad(anglez)), np.cos(rad(anglez)), 0]], np.float32)

return rz

-

-

-def warp(img, ang, use_tia=True, prob=0.4):

- """

- warp

- """

- h, w, _ = img.shape

- config = Config(use_tia=use_tia)

- config.make(w, h, ang)

- new_img = img

-

- if config.distort:

- img_height, img_width = img.shape[0:2]

- if random.random() <= prob and img_height >= 20 and img_width >= 20:

- new_img = tia_distort(new_img, random.randint(3, 6))

-

- if config.stretch:

- img_height, img_width = img.shape[0:2]

- if random.random() <= prob and img_height >= 20 and img_width >= 20:

- new_img = tia_stretch(new_img, random.randint(3, 6))

-

- if config.perspective:

- if random.random() <= prob:

- new_img = tia_perspective(new_img)

-

- if config.crop:

- img_height, img_width = img.shape[0:2]

- if random.random() <= prob and img_height >= 20 and img_width >= 20:

- new_img = get_crop(new_img)

-

- if config.blur:

- if random.random() <= prob:

- new_img = blur(new_img)

- if config.color:

- if random.random() <= prob:

- new_img = cvtColor(new_img)

- if config.jitter:

- new_img = jitter(new_img)

- if config.noise:

- if random.random() <= prob:

- new_img = add_gasuss_noise(new_img)

- if config.reverse:

- if random.random() <= prob:

- new_img = 255 - new_img

- return new_img
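In the refactored `RecAug`, the three TIA warps are grouped under a single `tia_prob` draw, and each of the six `BaseDataAugmentation` steps is gated by its own probability; in the removed `warp` function, `jitter` ran on every sample. A back-of-the-envelope sketch of what the 0.4 defaults imply, assuming the six steps fire independently (each uses a separate `random.random()` draw) and ignoring the crop step's size check. The helper names are hypothetical.

```python
from math import prod

# Default probabilities of the six BaseDataAugmentation steps in this diff:
# crop, reverse, noise, jitter, blur, hsv_aug (all 0.4).
DEFAULT_PROBS = [0.4] * 6


def prob_untouched(probs):
    """Chance that no step fires, treating every step as an independent
    Bernoulli trial. The crop step's extra h >= 20 and w >= 20 gate is ignored."""
    return prod(1.0 - p for p in probs)


def expected_steps(probs):
    """Expected number of steps applied to one sample (sum of probabilities)."""
    return sum(probs)


print(f"{prob_untouched(DEFAULT_PROBS):.4f}")  # 0.0467
print(f"{expected_steps(DEFAULT_PROBS):.1f}")  # 2.4
```

Under these assumptions, fewer than 5% of samples leave `BaseDataAugmentation` unchanged, and a typical sample receives about two to three of the six augmentations.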

diff --git a/ppocr/data/simple_dataset.py b/ppocr/data/simple_dataset.py

index b5da9b889..402f1e38f 100644

--- a/ppocr/data/simple_dataset.py

+++ b/ppocr/data/simple_dataset.py

@@ -33,7 +33,7 @@ class SimpleDataSet(Dataset):

self.delimiter = dataset_config.get('delimiter', '\t')

label_file_list = dataset_config.pop('label_file_list')

data_source_num = len(label_file_list)

- ratio_list = dataset_config.get("ratio_list", [1.0])

+ ratio_list = dataset_config.get("ratio_list", 1.0)

if isinstance(ratio_list, (float, int)):

ratio_list = [float(ratio_list)] * int(data_source_num)

diff --git a/ppocr/losses/rec_aster_loss.py b/ppocr/losses/rec_aster_loss.py

index fbb99d29a..52605e46d 100644

--- a/ppocr/losses/rec_aster_loss.py

+++ b/ppocr/losses/rec_aster_loss.py

@@ -27,12 +27,12 @@ class CosineEmbeddingLoss(nn.Layer):

self.epsilon = 1e-12

def forward(self, x1, x2, target):

- similarity = paddle.fluid.layers.reduce_sum(
- x1 * x2, dim=-1) / (paddle.norm(
+ similarity = paddle.sum(
+ x1 * x2, axis=-1) / (paddle.norm(
x1, axis=-1) * paddle.norm(
x2, axis=-1) + self.epsilon)

one_list = paddle.full_like(target, fill_value=1)

- out = paddle.fluid.layers.reduce_mean(

+ out = paddle.mean(

paddle.where(

paddle.equal(target, one_list), 1. - similarity,

paddle.maximum(

diff --git a/ppocr/losses/table_att_loss.py b/ppocr/losses/table_att_loss.py

index d7fd99e69..51377efa2 100644

--- a/ppocr/losses/table_att_loss.py

+++ b/ppocr/losses/table_att_loss.py

@@ -19,7 +19,6 @@ from __future__ import print_function

import paddle

from paddle import nn

from paddle.nn import functional as F

-from paddle import fluid

class TableAttentionLoss(nn.Layer):

def __init__(self, structure_weight, loc_weight, use_giou=False, giou_weight=1.0, **kwargs):

@@ -36,13 +35,13 @@ class TableAttentionLoss(nn.Layer):

:param bbox:[[x1,y1,x2,y2], [x1,y1,x2,y2],,,]

:return: loss

'''

- ix1 = fluid.layers.elementwise_max(preds[:, 0], bbox[:, 0])

- iy1 = fluid.layers.elementwise_max(preds[:, 1], bbox[:, 1])

- ix2 = fluid.layers.elementwise_min(preds[:, 2], bbox[:, 2])

- iy2 = fluid.layers.elementwise_min(preds[:, 3], bbox[:, 3])

+ ix1 = paddle.maximum(preds[:, 0], bbox[:, 0])

+ iy1 = paddle.maximum(preds[:, 1], bbox[:, 1])

+ ix2 = paddle.minimum(preds[:, 2], bbox[:, 2])

+ iy2 = paddle.minimum(preds[:, 3], bbox[:, 3])

- iw = fluid.layers.clip(ix2 - ix1 + 1e-3, 0., 1e10)

- ih = fluid.layers.clip(iy2 - iy1 + 1e-3, 0., 1e10)

+ iw = paddle.clip(ix2 - ix1 + 1e-3, 0., 1e10)

+ ih = paddle.clip(iy2 - iy1 + 1e-3, 0., 1e10)

# overlap

inters = iw * ih

@@ -55,12 +54,12 @@ class TableAttentionLoss(nn.Layer):

# ious

ious = inters / uni

- ex1 = fluid.layers.elementwise_min(preds[:, 0], bbox[:, 0])

- ey1 = fluid.layers.elementwise_min(preds[:, 1], bbox[:, 1])

- ex2 = fluid.layers.elementwise_max(preds[:, 2], bbox[:, 2])

- ey2 = fluid.layers.elementwise_max(preds[:, 3], bbox[:, 3])

- ew = fluid.layers.clip(ex2 - ex1 + 1e-3, 0., 1e10)

- eh = fluid.layers.clip(ey2 - ey1 + 1e-3, 0., 1e10)

+ ex1 = paddle.minimum(preds[:, 0], bbox[:, 0])

+ ey1 = paddle.minimum(preds[:, 1], bbox[:, 1])

+ ex2 = paddle.maximum(preds[:, 2], bbox[:, 2])

+ ey2 = paddle.maximum(preds[:, 3], bbox[:, 3])

+ ew = paddle.clip(ex2 - ex1 + 1e-3, 0., 1e10)

+ eh = paddle.clip(ey2 - ey1 + 1e-3, 0., 1e10)

# enclose erea

enclose = ew * eh + eps

diff --git a/ppocr/modeling/backbones/kie_unet_sdmgr.py b/ppocr/modeling/backbones/kie_unet_sdmgr.py

index 545e4e751..4b1bd8030 100644

--- a/ppocr/modeling/backbones/kie_unet_sdmgr.py

+++ b/ppocr/modeling/backbones/kie_unet_sdmgr.py

@@ -175,12 +175,7 @@ class Kie_backbone(nn.Layer):

img, relations, texts, gt_bboxes, tag, img_size)

x = self.img_feat(img)

boxes, rois_num = self.bbox2roi(gt_bboxes)

- feats = paddle.fluid.layers.roi_align(

- x,

- boxes,

- spatial_scale=1.0,

- pooled_height=7,

- pooled_width=7,

- rois_num=rois_num)

+ feats = paddle.vision.ops.roi_align(

+ x, boxes, spatial_scale=1.0, output_size=7, boxes_num=rois_num)

feats = self.maxpool(feats).squeeze(-1).squeeze(-1)

return [relations, texts, feats]

diff --git a/ppocr/modeling/backbones/rec_resnet_fpn.py b/ppocr/modeling/backbones/rec_resnet_fpn.py

index a7e876a2b..79efd6e41 100644

--- a/ppocr/modeling/backbones/rec_resnet_fpn.py

+++ b/ppocr/modeling/backbones/rec_resnet_fpn.py

@@ -18,7 +18,6 @@ from __future__ import print_function

from paddle import nn, ParamAttr

from paddle.nn import functional as F

-import paddle.fluid as fluid

import paddle

import numpy as np

diff --git a/ppocr/modeling/heads/rec_srn_head.py b/ppocr/modeling/heads/rec_srn_head.py

index 8d59e4711..1070d8cd6 100644

--- a/ppocr/modeling/heads/rec_srn_head.py

+++ b/ppocr/modeling/heads/rec_srn_head.py

@@ -20,13 +20,11 @@ import math

import paddle

from paddle import nn, ParamAttr

from paddle.nn import functional as F

-import paddle.fluid as fluid

import numpy as np

from .self_attention import WrapEncoderForFeature

from .self_attention import WrapEncoder

from paddle.static import Program

from ppocr.modeling.backbones.rec_resnet_fpn import ResNetFPN

-import paddle.fluid.framework as framework

from collections import OrderedDict

gradient_clip = 10

diff --git a/ppocr/modeling/heads/self_attention.py b/ppocr/modeling/heads/self_attention.py

index 6c27fdbe4..6e4c65e39 100644

--- a/ppocr/modeling/heads/self_attention.py

+++ b/ppocr/modeling/heads/self_attention.py

@@ -22,7 +22,6 @@ import paddle

from paddle import ParamAttr, nn

from paddle import nn, ParamAttr

from paddle.nn import functional as F

-import paddle.fluid as fluid

import numpy as np

gradient_clip = 10

@@ -288,10 +287,10 @@ class PrePostProcessLayer(nn.Layer):

"layer_norm_%d" % len(self.sublayers()),

paddle.nn.LayerNorm(

normalized_shape=d_model,

- weight_attr=fluid.ParamAttr(

- initializer=fluid.initializer.Constant(1.)),

- bias_attr=fluid.ParamAttr(

- initializer=fluid.initializer.Constant(0.)))))

+ weight_attr=paddle.ParamAttr(

+ initializer=paddle.nn.initializer.Constant(1.)),

+ bias_attr=paddle.ParamAttr(

+ initializer=paddle.nn.initializer.Constant(0.)))))

elif cmd == "d": # add dropout

self.functors.append(lambda x: F.dropout(

x, p=dropout_rate, mode="downscale_in_infer")

@@ -324,7 +323,7 @@ class PrepareEncoder(nn.Layer):

def forward(self, src_word, src_pos):

src_word_emb = src_word

- src_word_emb = fluid.layers.cast(src_word_emb, 'float32')

+ src_word_emb = paddle.cast(src_word_emb, 'float32')

src_word_emb = paddle.scale(x=src_word_emb, scale=self.src_emb_dim**0.5)

src_pos = paddle.squeeze(src_pos, axis=-1)

src_pos_enc = self.emb(src_pos)

@@ -367,7 +366,7 @@ class PrepareDecoder(nn.Layer):

self.dropout_rate = dropout_rate

def forward(self, src_word, src_pos):

- src_word = fluid.layers.cast(src_word, 'int64')

+ src_word = paddle.cast(src_word, 'int64')

src_word = paddle.squeeze(src_word, axis=-1)

src_word_emb = self.emb0(src_word)

src_word_emb = paddle.scale(x=src_word_emb, scale=self.src_emb_dim**0.5)

diff --git a/ppstructure/table/README.md b/ppstructure/table/README.md

index d21ef4aa3..b6804c6f0 100644

--- a/ppstructure/table/README.md

+++ b/ppstructure/table/README.md

@@ -18,7 +18,7 @@ The table recognition mainly contains three models

The table recognition flow chart is as follows

-

+

1. The coordinates of single-line text are detected by the DB model and then sent to the recognition model to get the recognition result.
2. The table structure and cell coordinates are predicted by the RARE model.

diff --git a/ppstructure/table/predict_table.py b/ppstructure/table/predict_table.py

index 402d6c241..aa0545958 100644

--- a/ppstructure/table/predict_table.py

+++ b/ppstructure/table/predict_table.py

@@ -28,6 +28,7 @@ import numpy as np

import time

import tools.infer.predict_rec as predict_rec

import tools.infer.predict_det as predict_det

+import tools.infer.utility as utility

from ppocr.utils.utility import get_image_file_list, check_and_read_gif

from ppocr.utils.logging import get_logger

from ppstructure.table.matcher import distance, compute_iou

@@ -59,11 +60,37 @@ class TableSystem(object):

self.text_recognizer = predict_rec.TextRecognizer(

args) if text_recognizer is None else text_recognizer

self.table_structurer = predict_strture.TableStructurer(args)

+ self.benchmark = args.benchmark

+ self.predictor, self.input_tensor, self.output_tensors, self.config = utility.create_predictor(

+ args, 'table', logger)

+ if args.benchmark:

+ import auto_log

+ pid = os.getpid()

+ gpu_id = utility.get_infer_gpuid()

+ self.autolog = auto_log.AutoLogger(

+ model_name="table",

+ model_precision=args.precision,

+ batch_size=1,

+ data_shape="dynamic",

+ save_path=None, #args.save_log_path,

+ inference_config=self.config,

+ pids=pid,

+ process_name=None,

+ gpu_ids=gpu_id if args.use_gpu else None,

+ time_keys=[

+ 'preprocess_time', 'inference_time', 'postprocess_time'

+ ],

+ warmup=0,

+ logger=logger)

def __call__(self, img, return_ocr_result_in_table=False):

result = dict()

ori_im = img.copy()

+ if self.benchmark:

+ self.autolog.times.start()

structure_res, elapse = self.table_structurer(copy.deepcopy(img))

+ if self.benchmark:

+ self.autolog.times.stamp()

dt_boxes, elapse = self.text_detector(copy.deepcopy(img))

dt_boxes = sorted_boxes(dt_boxes)

if return_ocr_result_in_table:

@@ -77,13 +104,11 @@ class TableSystem(object):

box = [x_min, y_min, x_max, y_max]

r_boxes.append(box)

dt_boxes = np.array(r_boxes)

-

logger.debug("dt_boxes num : {}, elapse : {}".format(

len(dt_boxes), elapse))

if dt_boxes is None:

return None, None

img_crop_list = []

-

for i in range(len(dt_boxes)):

det_box = dt_boxes[i]

x0, y0, x1, y1 = expand(2, det_box, ori_im.shape)

@@ -92,10 +117,14 @@ class TableSystem(object):

rec_res, elapse = self.text_recognizer(img_crop_list)

logger.debug("rec_res num : {}, elapse : {}".format(

len(rec_res), elapse))

+ if self.benchmark:

+ self.autolog.times.stamp()

if return_ocr_result_in_table:

result['rec_res'] = rec_res

pred_html, pred = self.rebuild_table(structure_res, dt_boxes, rec_res)

result['html'] = pred_html

+ if self.benchmark:

+ self.autolog.times.end(stamp=True)

return result

def rebuild_table(self, structure_res, dt_boxes, rec_res):

@@ -213,6 +242,8 @@ def main(args):

logger.info('excel saved to {}'.format(excel_path))

elapse = time.time() - starttime

logger.info("Predict time : {:.3f}s".format(elapse))

+ if args.benchmark:

+ text_sys.autolog.report()

if __name__ == "__main__":
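With `--benchmark` enabled, `TableSystem.__call__` calls `start()` before structure prediction, `stamp()` after it, `stamp()` again after detection and recognition, and `end(stamp=True)` after `rebuild_table`. The `StageTimer` class below is a hypothetical stand-in for `auto_log` that models this pattern. It assumes each interval is charged to the next entry of `time_keys` in order.

Under that assumption, the report's `preprocess_time` would actually hold table-structure prediction. Likewise, `inference_time` would cover the det+rec OCR pass, and `postprocess_time` would cover `rebuild_table`.

```python
import time
from typing import Dict, List, Optional


class StageTimer:
    """Hypothetical stand-in for the start()/stamp()/end() calls made on autolog.times."""

    def __init__(self, keys: List[str]):
        self.keys = keys
        self.totals: Dict[str, float] = {k: 0.0 for k in keys}
        self._last: Optional[float] = None
        self._stage = 0

    def start(self) -> None:
        # Begin a new sample; the next stamp() closes the first stage.
        self._last = time.perf_counter()
        self._stage = 0

    def stamp(self) -> None:
        # Charge the time since the previous mark to the current stage key.
        now = time.perf_counter()
        self.totals[self.keys[self._stage]] += now - self._last
        self._last = now
        self._stage += 1

    def end(self, stamp: bool = False) -> None:
        # end(stamp=True) closes the last stage, as TableSystem.__call__ does.
        if stamp:
            self.stamp()
        self._last = None


timer = StageTimer(["preprocess_time", "inference_time", "postprocess_time"])
timer.start()
time.sleep(0.01)  # table structure prediction
timer.stamp()
time.sleep(0.01)  # text detection + recognition
timer.stamp()
time.sleep(0.01)  # rebuild_table
timer.end(stamp=True)
print({k: round(v, 3) for k, v in timer.totals.items()})
```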

diff --git a/test_tipc/build_server.sh b/test_tipc/build_server.sh

new file mode 100644

index 000000000..317335978

--- /dev/null

+++ b/test_tipc/build_server.sh

@@ -0,0 +1,69 @@

+#Docker image used:

+#registry.baidubce.com/paddlepaddle/paddle:latest-dev-cuda10.1-cudnn7-gcc82

+

+#Build the Serving server:

+

+#The client and app packages can use the release versions directly

+

+#The server must be rebuilt because a custom OP has been added

+

+apt-get update

+apt install -y libcurl4-openssl-dev libbz2-dev

+wget https://paddle-serving.bj.bcebos.com/others/centos_ssl.tar && tar xf centos_ssl.tar && rm -rf centos_ssl.tar && mv libcrypto.so.1.0.2k /usr/lib/libcrypto.so.1.0.2k && mv libssl.so.1.0.2k /usr/lib/libssl.so.1.0.2k && ln -sf /usr/lib/libcrypto.so.1.0.2k /usr/lib/libcrypto.so.10 && ln -sf /usr/lib/libssl.so.1.0.2k /usr/lib/libssl.so.10 && ln -sf /usr/lib/libcrypto.so.10 /usr/lib/libcrypto.so && ln -sf /usr/lib/libssl.so.10 /usr/lib/libssl.so

+

+# Install Go dependencies

+rm -rf /usr/local/go

+wget -qO- https://paddle-ci.cdn.bcebos.com/go1.17.2.linux-amd64.tar.gz | tar -xz -C /usr/local

+export GOROOT=/usr/local/go

+export GOPATH=/root/gopath

+export PATH=$PATH:$GOPATH/bin:$GOROOT/bin

+go env -w GO111MODULE=on

+go env -w GOPROXY=https://goproxy.cn,direct

+go install github.com/grpc-ecosystem/grpc-gateway/protoc-gen-grpc-gateway@v1.15.2

+go install github.com/grpc-ecosystem/grpc-gateway/protoc-gen-swagger@v1.15.2

+go install github.com/golang/protobuf/protoc-gen-go@v1.4.3

+go install google.golang.org/grpc@v1.33.0

+go env -w GO111MODULE=auto

+

+# Download the OpenCV library

+wget https://paddle-qa.bj.bcebos.com/PaddleServing/opencv3.tar.gz && tar -xvf opencv3.tar.gz && rm -rf opencv3.tar.gz

+export OPENCV_DIR=$PWD/opencv3

+

+# clone Serving

+git clone https://github.com/PaddlePaddle/Serving.git -b develop --depth=1

+cd Serving

+export Serving_repo_path=$PWD

+git submodule update --init --recursive

+python -m pip install -r python/requirements.txt

+

+

+export PYTHON_INCLUDE_DIR=$(python -c "from distutils.sysconfig import get_python_inc; print(get_python_inc())")

+export PYTHON_LIBRARIES=$(python -c "import distutils.sysconfig as sysconfig; print(sysconfig.get_config_var('LIBDIR'))")

+export PYTHON_EXECUTABLE=`which python`

+

+export CUDA_PATH='/usr/local/cuda'

+export CUDNN_LIBRARY='/usr/local/cuda/lib64/'

+export CUDA_CUDART_LIBRARY='/usr/local/cuda/lib64/'

+export TENSORRT_LIBRARY_PATH='/usr/local/TensorRT6-cuda10.1-cudnn7/targets/x86_64-linux-gnu/'

+

+# Copy the custom OP source code

+cp -rf ../deploy/pdserving/general_detection_op.cpp ${Serving_repo_path}/core/general-server/op

+

+# Build the server and export SERVING_BIN

+mkdir server-build-gpu-opencv && cd server-build-gpu-opencv

+cmake -DPYTHON_INCLUDE_DIR=$PYTHON_INCLUDE_DIR \

+ -DPYTHON_LIBRARIES=$PYTHON_LIBRARIES \

+ -DPYTHON_EXECUTABLE=$PYTHON_EXECUTABLE \

+ -DCUDA_TOOLKIT_ROOT_DIR=${CUDA_PATH} \

+ -DCUDNN_LIBRARY=${CUDNN_LIBRARY} \

+ -DCUDA_CUDART_LIBRARY=${CUDA_CUDART_LIBRARY} \

+ -DTENSORRT_ROOT=${TENSORRT_LIBRARY_PATH} \

+ -DOPENCV_DIR=${OPENCV_DIR} \

+ -DWITH_OPENCV=ON \

+ -DSERVER=ON \

+ -DWITH_GPU=ON ..

+make -j32

+

+python -m pip install python/dist/paddle*

+export SERVING_BIN=$PWD/core/general-server/serving

+cd ../../
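`build_server.sh` ends by exporting `SERVING_BIN`. Exported variables only persist in the calling shell if the script is sourced (for example `source test_tipc/build_server.sh`) rather than executed as a child process.

A small pre-flight check can catch a missing or failed build before the serving tests start. The sketch below does this; `check_serving_bin` is a hypothetical helper, not part of TIPC.

```python
import os
from typing import Mapping, Optional


def check_serving_bin(env: Optional[Mapping[str, str]] = None) -> str:
    """Return SERVING_BIN if it names an executable file, otherwise raise RuntimeError."""
    env = os.environ if env is None else env
    path = env.get("SERVING_BIN")
    if not path:
        # Typical cause: build_server.sh was executed instead of sourced.
        raise RuntimeError("SERVING_BIN is not set; source build_server.sh first")
    if not (os.path.isfile(path) and os.access(path, os.X_OK)):
        raise RuntimeError(f"SERVING_BIN does not point to an executable: {path}")
    return path
```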

diff --git a/test_tipc/common_func.sh b/test_tipc/common_func.sh

index 85dfe2172..f7d8a1e04 100644

--- a/test_tipc/common_func.sh

+++ b/test_tipc/common_func.sh

@@ -57,10 +57,11 @@ function status_check(){

last_status=$1 # the exit code

run_command=$2

run_log=$3

+ model_name=$4

if [ $last_status -eq 0 ]; then

- echo -e "\033[33m Run successfully with command - ${run_command}! \033[0m" | tee -a ${run_log}

+ echo -e "\033[33m Run successfully with command - ${model_name} - ${run_command}! \033[0m" | tee -a ${run_log}

else

- echo -e "\033[33m Run failed with command - ${run_command}! \033[0m" | tee -a ${run_log}

+ echo -e "\033[33m Run failed with command - ${model_name} - ${run_command}! \033[0m" | tee -a ${run_log}

fi

}
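`status_check` now takes the model name as a fourth argument (`$4`) and includes it in both the success and failure messages. Callers that still pass only three arguments will log an empty name.

Because `tee -a` writes the ANSI colour codes into the log, a summary script has to tolerate them. The following is a minimal sketch for extracting results from such a log; `parse_status_log` is hypothetical.

```python
import re
from typing import Iterable, List, Tuple

# Matches lines written by status_check(), e.g.
# "\x1b[33m Run failed with command - ch_PP-OCRv2_det - python3.7 ...! \x1b[0m"
_STATUS_RE = re.compile(r"Run (successfully|failed) with command - (.*?) - (.*)! ")


def parse_status_log(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (status, model_name, command) for each status_check line."""
    results = []
    for line in lines:
        m = _STATUS_RE.search(line)
        if m:
            status = "ok" if m.group(1) == "successfully" else "failed"
            results.append((status, m.group(2), m.group(3)))
    return results
```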

diff --git a/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..a0c49a081

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,20 @@

+===========================cpp_infer_params===========================

+model_name:ch_PP-OCRv2

+use_opencv:True

+infer_model:./inference/ch_PP-OCRv2_det_infer/

+infer_quant:False

+inference:./deploy/cpp_infer/build/ppocr --rec_char_dict_path=./ppocr/utils/ppocr_keys_v1.txt --rec_img_h=32

+--use_gpu:True|False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:1

+--use_tensorrt:False

+--precision:fp32

+--det_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/

+--rec_model_dir:./inference/ch_PP-OCRv2_rec_infer/

+--benchmark:True

+--det:True

+--rec:True

+--cls:False

+--use_angle_cls:False

\ No newline at end of file
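Each TIPC config line has the form `key:value`, where `|` separates alternative values to sweep over (for example `--use_gpu:True|False`). The test scripts appear to read parameters by line position rather than by key. That would explain why the detection-only configs in this PR keep a `null:null` placeholder where this full-system config has `--rec_model_dir`.

The following is a minimal Python sketch of such a parser. `parse_tipc_config` is hypothetical, and skipping blank lines is an assumption.

```python
from typing import List, Tuple


def parse_tipc_config(text: str) -> List[Tuple[str, List[str]]]:
    """Parse TIPC 'key:value1|value2' lines into (key, candidate values), preserving order.

    Section banners such as '===...cpp_infer_params===...' are kept as (name, [])
    so that list indices line up with the line positions in the file.
    """
    entries: List[Tuple[str, List[str]]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("="):
            entries.append((line.strip("="), []))
            continue
        # Split on the first ':' only; '|' separates alternative values.
        key, _, value = line.partition(":")
        entries.append((key, value.split("|")))
    return entries
```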

diff --git a/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

index fcac6e398..32b290a9e 100644

--- a/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

+++ b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

@@ -6,10 +6,10 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_system.py

--use_gpu:False|True

---enable_mkldnn:False|True

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1

---use_tensorrt:False|True

+--use_tensorrt:False

--precision:fp32

--det_model_dir:

--image_dir:./inference/ch_det_data_50/all-sum-510/

diff --git a/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt

new file mode 100644

index 000000000..24eb620ee

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt

@@ -0,0 +1,17 @@

+===========================paddle2onnx_params===========================

+model_name:ch_PP-OCRv2

+python:python3.7

+2onnx: paddle2onnx

+--det_model_dir:./inference/ch_PP-OCRv2_det_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_save_file:./inference/det_v2_onnx/model.onnx

+--rec_model_dir:./inference/ch_PP-OCRv2_rec_infer/

+--rec_save_file:./inference/rec_v2_onnx/model.onnx

+--opset_version:10

+--enable_onnx_checker:True

+inference:tools/infer/predict_system.py --rec_image_shape="3,32,320"

+--use_gpu:True|False

+--det_model_dir:

+--rec_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/00008790.jpg

\ No newline at end of file
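The conversion section of this config maps onto a `paddle2onnx` command line. Note that the file repeats `--det_model_dir` and `--rec_model_dir` (empty) in its inference section. A flat dict of the whole file would therefore lose the conversion paths, so `cfg` below holds only the conversion entries.

The following sketch builds the argument list without running anything. `paddle2onnx_argv` is hypothetical; the flag names mirror the config keys and the `paddle2onnx` CLI.

```python
from typing import Dict, List, Optional


def paddle2onnx_argv(cfg: Dict[str, str], prefix: str) -> Optional[List[str]]:
    """Build a paddle2onnx command for the 'det' or 'rec' model in cfg.

    Returns None when that model's directory is empty, as in the
    detection-only and recognition-only configs.
    """
    model_dir = cfg.get(f"--{prefix}_model_dir", "")
    if not model_dir:
        return None
    return [
        cfg["2onnx"].strip(),  # the config writes "2onnx: paddle2onnx" with a space
        "--model_dir", model_dir,
        "--model_filename", cfg["--model_filename"],
        "--params_filename", cfg["--params_filename"],
        "--save_file", cfg[f"--{prefix}_save_file"],
        "--opset_version", cfg["--opset_version"],
        "--enable_onnx_checker", cfg["--enable_onnx_checker"],
    ]
```

The resulting list can be passed to `subprocess.run(argv, check=True)` on a machine where `paddle2onnx` is installed.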

diff --git a/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..f0456b5c3

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,19 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_client/

+--rec_dirname:./inference/ch_PP-OCRv2_rec_infer/

+--rec_serving_server:./deploy/pdserving/ppocr_rec_v2_serving/

+--rec_serving_client:./deploy/pdserving/ppocr_rec_v2_client/

+serving_dir:./deploy/pdserving

+web_service:-m paddle_serving_server.serve

+--op:GeneralDetectionOp GeneralInferOp

+--port:8181

+--gpu_id:"0"|null

+cpp_client:ocr_cpp_client.py

+--image_dir:../../doc/imgs/1.jpg
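The `trans_model` entry together with the `--det_*` and `--rec_*` keys describes one `paddle_serving_client.convert` call per model. Models whose `*_dirname` is `null` (as in the detection-only serving configs) are skipped.

The following is a sketch of how those commands could be assembled. `serving_convert_argvs` is hypothetical; the `--dirname`, `--serving_server` and `--serving_client` flags are those of `paddle_serving_client.convert`.

```python
from typing import Dict, List


def serving_convert_argvs(cfg: Dict[str, str]) -> List[List[str]]:
    """Return one convert command per model (det, rec) whose dirname is set."""
    cmds = []
    for prefix in ("det", "rec"):
        dirname = cfg.get(f"--{prefix}_dirname", "null")
        if dirname in ("", "null"):
            continue  # e.g. detection-only configs set the rec_* entries to null
        cmds.append([
            cfg["python"], *cfg["trans_model"].split(),
            "--dirname", dirname,
            "--model_filename", cfg["--model_filename"],
            "--params_filename", cfg["--params_filename"],
            "--serving_server", cfg[f"--{prefix}_serving_server"],
            "--serving_client", cfg[f"--{prefix}_serving_client"],
        ])
    return cmds
```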

diff --git a/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..4ad64db03

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

@@ -0,0 +1,23 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_client/

+--rec_dirname:./inference/ch_PP-OCRv2_rec_infer/

+--rec_serving_server:./deploy/pdserving/ppocr_rec_v2_serving/

+--rec_serving_client:./deploy/pdserving/ppocr_rec_v2_client/

+serving_dir:./deploy/pdserving

+web_service:web_service.py --config=config.yml --opt op.det.concurrency="1" op.rec.concurrency="1"

+op.det.local_service_conf.devices:gpu|null

+op.det.local_service_conf.use_mkldnn:False

+op.det.local_service_conf.thread_num:6

+op.det.local_service_conf.use_trt:False

+op.det.local_service_conf.precision:fp32

+op.det.local_service_conf.model_config:

+op.rec.local_service_conf.model_config:

+pipline:pipeline_http_client.py

+--image_dir:../../doc/imgs/1.jpg
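The `web_service` entry passes `--opt op.det.concurrency="1" op.rec.concurrency="1"` to `web_service.py`. The `op.*.local_service_conf.*` keys also look like dotted paths into `config.yml`.

The following is a minimal sketch of merging such `key=value` overrides into a nested config. `apply_overrides` is hypothetical. Keeping values as strings is a simplification; a real parser would likely convert them to YAML types.

```python
from typing import Any, Dict, Iterable


def apply_overrides(config: Dict[str, Any], overrides: Iterable[str]) -> Dict[str, Any]:
    """Apply 'a.b.c=value' overrides to a nested dict in place and return it."""
    for item in overrides:
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError(f"override must look like key=value: {item!r}")
        *parents, leaf = key.split(".")
        node = config
        for name in parents:
            # Create intermediate sections that do not exist yet.
            node = node.setdefault(name, {})
        node[leaf] = value.strip('"')  # drop quotes as written in the config file
    return config
```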

diff --git a/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..7eccbd725

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,20 @@

+===========================cpp_infer_params===========================

+model_name:ch_PP-OCRv2_det

+use_opencv:True

+infer_model:./inference/ch_PP-OCRv2_det_infer/

+infer_quant:False

+inference:./deploy/cpp_infer/build/ppocr

+--use_gpu:True|False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:1

+--use_tensorrt:False

+--precision:fp32

+--det_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/

+null:null

+--benchmark:True

+--det:True

+--rec:False

+--cls:False

+--use_angle_cls:False

\ No newline at end of file

diff --git a/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt

new file mode 100644

index 000000000..2e7906076

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt

@@ -0,0 +1,17 @@

+===========================paddle2onnx_params===========================

+model_name:ch_PP-OCRv2_det

+python:python3.7

+2onnx: paddle2onnx

+--det_model_dir:./inference/ch_PP-OCRv2_det_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_save_file:./inference/det_v2_onnx/model.onnx

+--rec_model_dir:

+--rec_save_file:

+--opset_version:10

+--enable_onnx_checker:True

+inference:tools/infer/predict_det.py

+--use_gpu:True|False

+--det_model_dir:

+--rec_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/

\ No newline at end of file

diff --git a/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..587a7d7ea

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

@@ -0,0 +1,23 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2_det

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_client/

+--rec_dirname:null

+--rec_serving_server:null

+--rec_serving_client:null

+serving_dir:./deploy/pdserving

+web_service:web_service_det.py --config=config.yml --opt op.det.concurrency="1"

+op.det.local_service_conf.devices:gpu|null

+op.det.local_service_conf.use_mkldnn:False

+op.det.local_service_conf.thread_num:6

+op.det.local_service_conf.use_trt:False

+op.det.local_service_conf.precision:fp32

+op.det.local_service_conf.model_config:

+op.rec.local_service_conf.model_config:

+pipline:pipeline_http_client.py

+--image_dir:../../doc/imgs/1.jpg

diff --git a/test_tipc/configs/ch_PP-OCRv2_det/train_infer_python.txt b/test_tipc/configs/ch_PP-OCRv2_det/train_infer_python.txt

index 797cf53a1..cab0cb0aa 100644

--- a/test_tipc/configs/ch_PP-OCRv2_det/train_infer_python.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_det/train_infer_python.txt

@@ -1,10 +1,10 @@

===========================train_params===========================

-model_name:ch_PPOCRv2_det

+model_name:ch_PP-OCRv2_det

python:python3.7

gpu_list:0|0,1

Global.use_gpu:True|True

Global.auto_cast:fp32

-Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=500

+Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=50

Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=2|whole_train_whole_infer=4

Global.pretrained_model:null

@@ -39,11 +39,11 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_det.py

--use_gpu:True|False

---enable_mkldnn:True|False

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1

---use_tensorrt:False|True

---precision:fp32|fp16|int8

+--use_tensorrt:False

+--precision:fp32

--det_model_dir:

--image_dir:./inference/ch_det_data_50/all-sum-510/

null:null
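This PR lowers the `whole_train_whole_infer` epoch count from 500 to 50 for the detection configs (and from 300 to 50 for recognition), leaving `lite_train_lite_infer` unchanged. Such mode-keyed values (`mode=value|mode=value`) are resolved against the run mode.

The following is a Python sketch of the selection the TIPC scripts presumably perform. `select_for_mode` is hypothetical. Plain values without `=` are returned unchanged, because pipe-separated plain values such as `Global.use_gpu:True|True` pair with `gpu_list` entries rather than with modes.

```python
def select_for_mode(value: str, mode: str) -> str:
    """Pick the entry of 'mode_a=x|mode_b=y' that matches mode; plain values pass through."""
    if "=" not in value:
        return value
    for option in value.split("|"):
        name, _, chosen = option.partition("=")
        if name == mode:
            return chosen
    raise KeyError(f"no value for mode {mode!r} in {value!r}")
```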

diff --git a/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_fleet_normal_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_fleet_normal_infer_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..91a6288eb

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_fleet_normal_infer_python_linux_gpu_cpu.txt

@@ -0,0 +1,53 @@

+===========================train_params===========================

+model_name:ch_PP-OCRv2_det

+python:python3.7

+gpu_list:192.168.0.1,192.168.0.2;0,1

+Global.use_gpu:True

+Global.auto_cast:fp32

+Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=50

+Global.save_model_dir:./output/

+Train.loader.batch_size_per_card:lite_train_lite_infer=2|whole_train_whole_infer=4

+Global.pretrained_model:null

+train_model_name:latest

+train_infer_img_dir:./train_data/icdar2015/text_localization/ch4_test_images/

+null:null

+##

+trainer:norm_train

+norm_train:tools/train.py -c configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_cml.yml -o

+pact_train:null

+fpgm_train:null

+distill_train:null

+null:null

+null:null

+##

+===========================eval_params===========================

+eval:null

+null:null

+##

+===========================infer_params===========================

+Global.save_inference_dir:./output/

+Global.checkpoints:

+norm_export:tools/export_model.py -c configs/det/ch_PP-OCRv2/ch_PP-OCRv2_det_cml.yml -o

+quant_export:null

+fpgm_export:

+distill_export:null

+export1:null

+export2:null

+inference_dir:Student

+infer_model:./inference/ch_PP-OCRv2_det_infer/

+infer_export:null

+infer_quant:False

+inference:tools/infer/predict_det.py

+--use_gpu:False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:1

+--use_tensorrt:False

+--precision:fp32

+--det_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/

+null:null

+--benchmark:True

+null:null

+===========================infer_benchmark_params==========================

+random_infer_input:[{float32,[3,640,640]}];[{float32,[3,960,960]}]
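In this fleet config, `gpu_list:192.168.0.1,192.168.0.2;0,1` packs the machine IPs (before `;`) and the per-machine GPU ids (after `;`) into one value. The IPs shown are placeholders. In multi-machine collective training, the same launch command is typically run on every listed machine.

The following sketch splits the entry and wraps the training command. Both helper names are hypothetical, and the `--ips`/`--gpus` flags of `paddle.distributed.launch` are assumed here.

```python
from typing import List, Tuple


def parse_gpu_list(value: str) -> Tuple[List[str], str]:
    """Split a gpu_list entry into (machine IPs, GPU ids); '0,1' alone has no IP part."""
    if ";" in value:
        ips, gpus = value.split(";", 1)
        return ips.split(","), gpus
    return [], value


def launch_argv(python: str, gpu_entry: str, train_cmd: str) -> List[str]:
    """Wrap a training command with paddle.distributed.launch (assumed flags --ips/--gpus)."""
    ips, gpus = parse_gpu_list(gpu_entry)
    argv = [python, "-m", "paddle.distributed.launch"]
    if ips:
        argv.append("--ips=" + ",".join(ips))
    argv.append("--gpus=" + gpus)
    return argv + train_cmd.split()
```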

diff --git a/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

index 033d40a80..85b0ebcb9 100644

--- a/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_det/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

@@ -1,10 +1,10 @@

===========================train_params===========================

-model_name:ch_PPOCRv2_det

+model_name:ch_PP-OCRv2_det

python:python3.7

gpu_list:0|0,1

Global.use_gpu:True|True

Global.auto_cast:amp

-Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=500

+Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=50

Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=2|whole_train_whole_infer=4

Global.pretrained_model:null

@@ -39,11 +39,11 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_det.py

--use_gpu:True|False

---enable_mkldnn:True|False

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1

---use_tensorrt:False|True

---precision:fp32|fp16|int8

+--use_tensorrt:False

+--precision:fp32

--det_model_dir:

--image_dir:./inference/ch_det_data_50/all-sum-510/

null:null

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..1975e099d

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,20 @@

+===========================cpp_infer_params===========================

+model_name:ch_PP-OCRv2_det_KL

+use_opencv:True

+infer_model:./inference/ch_PP-OCRv2_det_klquant_infer

+infer_quant:False

+inference:./deploy/cpp_infer/build/ppocr

+--use_gpu:True|False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:1

+--use_tensorrt:False

+--precision:fp32

+--det_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/

+null:null

+--benchmark:True

+--det:True

+--rec:False

+--cls:False

+--use_angle_cls:False

\ No newline at end of file

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

index 1aad65b68..ccc9e5ced 100644

--- a/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

@@ -1,5 +1,5 @@

===========================kl_quant_params===========================

-model_name:PPOCRv2_ocr_det_kl

+model_name:ch_PP-OCRv2_det_KL

python:python3.7

Global.pretrained_model:null

Global.save_inference_dir:null

@@ -8,10 +8,10 @@ infer_export:deploy/slim/quantization/quant_kl.py -c configs/det/ch_PP-OCRv2/ch_

infer_quant:True

inference:tools/infer/predict_det.py

--use_gpu:False|True

---enable_mkldnn:True

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1

---use_tensorrt:False|True

+--use_tensorrt:False

--precision:int8

--det_model_dir:

--image_dir:./inference/ch_det_data_50/all-sum-510/

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..e306b0a92

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,19 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2_det_KL

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_klquant_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_kl_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_kl_client/

+--rec_dirname:./inference/ch_PP-OCRv2_rec_klquant_infer/

+--rec_serving_server:./deploy/pdserving/ppocr_rec_v2_kl_serving/

+--rec_serving_client:./deploy/pdserving/ppocr_rec_v2_kl_client/

+serving_dir:./deploy/pdserving

+web_service:-m paddle_serving_server.serve

+--op:GeneralDetectionOp GeneralInferOp

+--port:8181

+--gpu_id:"0"|null

+cpp_client:ocr_cpp_client.py

+--image_dir:../../doc/imgs/1.jpg

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..2c96d2bfd

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det_KL/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

@@ -0,0 +1,23 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2_det_KL

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_klquant_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_kl_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_kl_client/

+--rec_dirname:null

+--rec_serving_server:null

+--rec_serving_client:null

+serving_dir:./deploy/pdserving

+web_service:web_service_det.py --config=config.yml --opt op.det.concurrency="1"

+op.det.local_service_conf.devices:gpu|null

+op.det.local_service_conf.use_mkldnn:False

+op.det.local_service_conf.thread_num:6

+op.det.local_service_conf.use_trt:False

+op.det.local_service_conf.precision:fp32

+op.det.local_service_conf.model_config:

+op.rec.local_service_conf.model_config:

+pipline:pipeline_http_client.py

+--image_dir:../../doc/imgs/1.jpg

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..43ef97d50

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,20 @@

+===========================cpp_infer_params===========================

+model_name:ch_PP-OCRv2_det_PACT

+use_opencv:True

+infer_model:./inference/ch_PP-OCRv2_det_pact_infer

+infer_quant:False

+inference:./deploy/cpp_infer/build/ppocr

+--use_gpu:True|False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:1

+--use_tensorrt:False

+--precision:fp32

+--det_model_dir:

+--image_dir:./inference/ch_det_data_50/all-sum-510/

+null:null

+--benchmark:True

+--det:True

+--rec:False

+--cls:False

+--use_angle_cls:False

\ No newline at end of file

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..b2d929b99

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_serving_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,19 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2_det_PACT

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_pact_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_pact_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_pact_client/

+--rec_dirname:./inference/ch_PP-OCRv2_rec_pact_infer/

+--rec_serving_server:./deploy/pdserving/ppocr_rec_v2_pact_serving/

+--rec_serving_client:./deploy/pdserving/ppocr_rec_v2_pact_client/

+serving_dir:./deploy/pdserving

+web_service:-m paddle_serving_server.serve

+--op:GeneralDetectionOp GeneralInferOp

+--port:8181

+--gpu_id:"0"|null

+cpp_client:ocr_cpp_client.py

+--image_dir:../../doc/imgs/1.jpg

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..d5d99ab56

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_det_PACT/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

@@ -0,0 +1,23 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2_det_PACT

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:./inference/ch_PP-OCRv2_det_pact_infer/

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:./deploy/pdserving/ppocr_det_v2_pact_serving/

+--det_serving_client:./deploy/pdserving/ppocr_det_v2_pact_client/

+--rec_dirname:null

+--rec_serving_server:null

+--rec_serving_client:null

+serving_dir:./deploy/pdserving

+web_service:web_service_det.py --config=config.yml --opt op.det.concurrency="1"

+op.det.local_service_conf.devices:gpu|null

+op.det.local_service_conf.use_mkldnn:False

+op.det.local_service_conf.thread_num:6

+op.det.local_service_conf.use_trt:False

+op.det.local_service_conf.precision:fp32

+op.det.local_service_conf.model_config:

+op.rec.local_service_conf.model_config:

+pipline:pipeline_http_client.py

+--image_dir:../../doc/imgs/1.jpg

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_infer_python.txt b/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_infer_python.txt

index 038fa8506..1a20f97fd 100644

--- a/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_infer_python.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_infer_python.txt

@@ -1,10 +1,10 @@

===========================train_params===========================

-model_name:ch_PPOCRv2_det_PACT

+model_name:ch_PP-OCRv2_det_PACT

python:python3.7

gpu_list:0|0,1

Global.use_gpu:True|True

Global.auto_cast:fp32

-Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=500

+Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=50

Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=1|whole_train_whole_infer=4

Global.pretrained_model:null

@@ -39,11 +39,11 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_det.py

--use_gpu:True|False

---enable_mkldnn:True|False

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1

---use_tensorrt:False|True

---precision:fp32|fp16|int8

+--use_tensorrt:False

+--precision:fp32

--det_model_dir:

--image_dir:./inference/ch_det_data_50/all-sum-510/

null:null

diff --git a/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

index d922a4a5d..3afc0acb7 100644

--- a/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_det_PACT/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

@@ -1,10 +1,10 @@

===========================train_params===========================

-model_name:ch_PPOCRv2_det_PACT

+model_name:ch_PP-OCRv2_det_PACT

python:python3.7

gpu_list:0|0,1

Global.use_gpu:True|True

Global.auto_cast:amp

-Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=500

+Global.epoch_num:lite_train_lite_infer=1|whole_train_whole_infer=50

Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=2|whole_train_whole_infer=4

Global.pretrained_model:null

@@ -39,11 +39,11 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_det.py

--use_gpu:True|False

---enable_mkldnn:True|False

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1

---use_tensorrt:False|True

---precision:fp32|fp16|int8

+--use_tensorrt:False

+--precision:fp32

--det_model_dir:

--image_dir:./inference/ch_det_data_50/all-sum-510/

null:null

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..b1bff00b0

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,20 @@

+===========================cpp_infer_params===========================

+model_name:ch_PP-OCRv2_rec

+use_opencv:True

+infer_model:./inference/ch_PP-OCRv2_rec_infer/

+infer_quant:False

+inference:./deploy/cpp_infer/build/ppocr --rec_char_dict_path=./ppocr/utils/ppocr_keys_v1.txt --rec_img_h=32

+--use_gpu:True|False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:6

+--use_tensorrt:False

+--precision:fp32

+--rec_model_dir:

+--image_dir:./inference/rec_inference/

+null:null

+--benchmark:True

+--det:False

+--rec:True

+--cls:False

+--use_angle_cls:False

\ No newline at end of file

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt

new file mode 100644

index 000000000..e374a5d82

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_paddle2onnx_python_linux_cpu.txt

@@ -0,0 +1,17 @@

+===========================paddle2onnx_params===========================

+model_name:ch_PP-OCRv2_rec

+python:python3.7

+2onnx: paddle2onnx

+--det_model_dir:

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_save_file:

+--rec_model_dir:./inference/ch_PP-OCRv2_rec_infer/

+--rec_save_file:./inference/rec_v2_onnx/model.onnx

+--opset_version:10

+--enable_onnx_checker:True

+inference:tools/infer/predict_rec.py --rec_image_shape="3,32,320"

+--use_gpu:True|False

+--det_model_dir:

+--rec_model_dir:

+--image_dir:./inference/rec_inference/

\ No newline at end of file
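`--rec_image_shape="3,32,320"` is given as C,H,W. We assume each text-line crop is scaled to height 32 while keeping its aspect ratio, capped at width 320, and right-padded up to 320. The Python predictor may widen the target per batch to fit the longest crop, which is not modelled here.

The sketch below only computes the resulting widths. `rec_resize_width` is hypothetical.

```python
import math
from typing import Tuple


def rec_resize_width(img_h: int, img_w: int, rec_image_shape: str = "3,32,320") -> Tuple[int, int]:
    """Return (resized_w, pad_w) for one text-line crop of size img_h x img_w.

    The crop is scaled to height H keeping its aspect ratio, capped at width W,
    and the remaining W - resized_w columns are padding.
    """
    _, target_h, target_w = (int(v) for v in rec_image_shape.split(","))
    resized_w = min(target_w, int(math.ceil(target_h * img_w / float(img_h))))
    return resized_w, target_w - resized_w
```

For example, a 48x240 crop becomes 160 pixels wide with 160 columns of padding. A very long 32x1000 line is squeezed to the full 320 with no padding.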

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..e9e90d372

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_rec/model_linux_gpu_normal_normal_serving_python_linux_gpu_cpu.txt

@@ -0,0 +1,23 @@

+===========================serving_params===========================

+model_name:ch_PP-OCRv2_rec

+python:python3.7

+trans_model:-m paddle_serving_client.convert

+--det_dirname:null

+--model_filename:inference.pdmodel

+--params_filename:inference.pdiparams

+--det_serving_server:null

+--det_serving_client:null

+--rec_dirname:./inference/ch_PP-OCRv2_rec_infer/

+--rec_serving_server:./deploy/pdserving/ppocr_rec_v2_serving/

+--rec_serving_client:./deploy/pdserving/ppocr_rec_v2_client/

+serving_dir:./deploy/pdserving

+web_service:web_service_rec.py --config=config.yml --opt op.rec.concurrency="1"

+op.det.local_service_conf.devices:gpu|null

+op.det.local_service_conf.use_mkldnn:False

+op.det.local_service_conf.thread_num:6

+op.det.local_service_conf.use_trt:False

+op.det.local_service_conf.precision:fp32

+op.det.local_service_conf.model_config:

+op.rec.local_service_conf.model_config:

+pipline:pipeline_http_client.py --det=False

+--image_dir:../../inference/rec_inference

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec/train_infer_python.txt b/test_tipc/configs/ch_PP-OCRv2_rec/train_infer_python.txt

index 188eb3ccc..df42b342b 100644

--- a/test_tipc/configs/ch_PP-OCRv2_rec/train_infer_python.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_rec/train_infer_python.txt

@@ -1,10 +1,10 @@

===========================train_params===========================

-model_name:PPOCRv2_ocr_rec

+model_name:ch_PP-OCRv2_rec

python:python3.7

gpu_list:0|0,1

Global.use_gpu:True|True

Global.auto_cast:fp32

-Global.epoch_num:lite_train_lite_infer=3|whole_train_whole_infer=300

+Global.epoch_num:lite_train_lite_infer=3|whole_train_whole_infer=50

Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=16|whole_train_whole_infer=128

Global.pretrained_model:null

@@ -39,11 +39,11 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_rec.py

--use_gpu:True|False

---enable_mkldnn:True|False

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1|6

---use_tensorrt:False|True

---precision:fp32|int8

+--use_tensorrt:False

+--precision:fp32

--rec_model_dir:

--image_dir:./inference/rec_inference

null:null

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_fleet_normal_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_fleet_normal_infer_python_linux_gpu_cpu.txt

new file mode 100644

index 000000000..5795bc27e

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_fleet_normal_infer_python_linux_gpu_cpu.txt

@@ -0,0 +1,53 @@

+===========================train_params===========================

+model_name:ch_PP-OCRv2_rec

+python:python3.7

+gpu_list:192.168.0.1,192.168.0.2;0,1

+Global.use_gpu:True

+Global.auto_cast:fp32

+Global.epoch_num:lite_train_lite_infer=3|whole_train_whole_infer=50

+Global.save_model_dir:./output/

+Train.loader.batch_size_per_card:lite_train_lite_infer=16|whole_train_whole_infer=128

+Global.pretrained_model:null

+train_model_name:latest

+train_infer_img_dir:./inference/rec_inference

+null:null

+##

+trainer:norm_train

+norm_train:tools/train.py -c test_tipc/configs/ch_PP-OCRv2_rec/ch_PP-OCRv2_rec_distillation.yml -o

+pact_train:null

+fpgm_train:null

+distill_train:null

+null:null

+null:null

+##

+===========================eval_params===========================

+eval:null

+null:null

+##

+===========================infer_params===========================

+Global.save_inference_dir:./output/

+Global.checkpoints:

+norm_export:tools/export_model.py -c test_tipc/configs/ch_PP-OCRv2_rec/ch_PP-OCRv2_rec_distillation.yml -o

+quant_export:

+fpgm_export:

+distill_export:null

+export1:null

+export2:null

+inference_dir:Student

+infer_model:./inference/ch_PP-OCRv2_rec_infer

+infer_export:null

+infer_quant:False

+inference:tools/infer/predict_rec.py

+--use_gpu:False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:1|6

+--use_tensorrt:False

+--precision:fp32

+--rec_model_dir:

+--image_dir:./inference/rec_inference

+null:null

+--benchmark:True

+null:null

+===========================infer_benchmark_params==========================

+random_infer_input:[{float32,[3,32,320]}]

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

index 7c438cb8a..1b8800f5c 100644

--- a/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_rec/train_linux_gpu_normal_amp_infer_python_linux_gpu_cpu.txt

@@ -1,10 +1,10 @@

===========================train_params===========================

-model_name:PPOCRv2_ocr_rec

+model_name:ch_PP-OCRv2_rec

python:python3.7

gpu_list:0|0,1

Global.use_gpu:True|True

Global.auto_cast:amp

-Global.epoch_num:lite_train_lite_infer=3|whole_train_whole_infer=300

+Global.epoch_num:lite_train_lite_infer=3|whole_train_whole_infer=50

Global.save_model_dir:./output/

Train.loader.batch_size_per_card:lite_train_lite_infer=16|whole_train_whole_infer=128

Global.pretrained_model:null

@@ -39,11 +39,11 @@ infer_export:null

infer_quant:False

inference:tools/infer/predict_rec.py

--use_gpu:True|False

---enable_mkldnn:True|False

---cpu_threads:1|6

+--enable_mkldnn:False

+--cpu_threads:6

--rec_batch_num:1|6

---use_tensorrt:False|True

---precision:fp32|int8

+--use_tensorrt:False

+--precision:fp32

--rec_model_dir:

--image_dir:./inference/rec_inference

null:null

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

new file mode 100644

index 000000000..95e4062d1

--- /dev/null

+++ b/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_cpp_linux_gpu_cpu.txt

@@ -0,0 +1,20 @@

+===========================cpp_infer_params===========================

+model_name:ch_PP-OCRv2_rec_KL

+use_opencv:True

+infer_model:./inference/ch_PP-OCRv2_rec_klquant_infer

+infer_quant:False

+inference:./deploy/cpp_infer/build/ppocr --rec_char_dict_path=./ppocr/utils/ppocr_keys_v1.txt --rec_img_h=32

+--use_gpu:True|False

+--enable_mkldnn:False

+--cpu_threads:6

+--rec_batch_num:6

+--use_tensorrt:False

+--precision:fp32

+--rec_model_dir:

+--image_dir:./inference/rec_inference/

+null:null

+--benchmark:True

+--det:False

+--rec:True

+--cls:False

+--use_angle_cls:False

\ No newline at end of file

diff --git a/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt b/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

index 083a3ae26..c30e0858e 100644

--- a/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

+++ b/test_tipc/configs/ch_PP-OCRv2_rec_KL/model_linux_gpu_normal_normal_infer_python_linux_gpu_cpu.txt

@@ -1,17 +1,17 @@

===========================kl_quant_params===========================

-model_name:PPOCRv2_ocr_rec_kl

+model_name:ch_PP-OCRv2_rec_KL