Mirror of https://github.com/PaddlePaddle/PaddleOCR.git (synced 2025-06-03 21:53:39 +08:00)

Merge remote-tracking branch 'PaddlePaddle/dygraph' into dygraph

Commit 56feca0106

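@ -8,7 +8,6 @@ PaddleOCR supports both dynamic-graph and static-graph programming paradigms

- Static graph version: develop branch

**Recent updates**

- [Preview] The PaddleOCR R&D team will give an in-depth technical walkthrough of the latest release on April 13 at 19:00, [live stream link](https://live.bilibili.com/21689802)

- 2021.4.8: released version 2.1, open-sourcing the AAAI 2021 paper's [end-to-end recognition algorithm PGNet](./doc/doc_ch/pgnet.md); the [multilingual models](./doc/doc_ch/multi_languages.md) now support 80+ languages.

- 2021.2.1: added 5 frequently asked questions to the [FAQ](./doc/doc_ch/FAQ.md), 162 in total; it is updated every Monday, so stay tuned.

- 2021.1.21: updated the multilingual recognition models, which now support more than 27 languages, including Simplified Chinese, Traditional Chinese, English, French, German, Korean, Japanese, Italian, Spanish, Portuguese, Russian, and Arabic; for upcoming plans, see the [multilingual R&D plan](https://github.com/PaddlePaddle/PaddleOCR/issues/1048)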

@ -80,7 +79,7 @@ PaddleOCR supports both dynamic-graph and static-graph programming paradigms

- Algorithm introduction

- [Text detection](./doc/doc_ch/algorithm_overview.md)

- [Text recognition](./doc/doc_ch/algorithm_overview.md)

- [PP-OCR Pipline](#PP-OCR)

- [PP-OCR Pipeline](#PP-OCR)

- [End-to-end PGNet algorithm](./doc/doc_ch/pgnet.md)

- Model training/evaluation

- [Text detection](./doc/doc_ch/detection.md)

@ -115,7 +114,7 @@ PaddleOCR supports both dynamic-graph and static-graph programming paradigms

|

||||

|

||||

|

||||

<a name="PP-OCR"></a>

|

||||

## PP-OCR Pipline

|

||||

## PP-OCR Pipeline

|

||||

<div align="center">

|

||||

<img src="./doc/ppocr_framework.png" width="800">

|

||||

</div>

|

||||

|

||||

@ -49,6 +49,8 @@ public:

|

||||

|

||||

this->det_db_unclip_ratio = stod(config_map_["det_db_unclip_ratio"]);

|

||||

|

||||

this->use_polygon_score = bool(stoi(config_map_["use_polygon_score"]));

|

||||

|

||||

this->det_model_dir.assign(config_map_["det_model_dir"]);

|

||||

|

||||

this->rec_model_dir.assign(config_map_["rec_model_dir"]);

|

||||

@ -86,6 +88,8 @@ public:

|

||||

|

||||

double det_db_unclip_ratio = 2.0;

|

||||

|

||||

bool use_polygon_score = false;

|

||||

|

||||

std::string det_model_dir;

|

||||

|

||||

std::string rec_model_dir;

|

||||

|

||||
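The hunks above read `use_polygon_score` from the configuration map as an integer and convert it to a boolean. A minimal Python sketch of that parsing step follows, assuming the `key value # comment` layout seen in the config files later in this diff. The helper names `parse_config` and `read_bool` are hypothetical, and the fallback to `False` for a missing key is an assumption of the sketch; it is not something the C++ code shown here does.

```python
def parse_config(text):
    """Parse 'key value  # comment' lines into a dict of strings."""
    config = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and padding
        if not line:
            continue  # blank line or comment-only line
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue  # a key without a value is ignored in this sketch
        key, value = parts
        config[key] = value.strip()
    return config


def read_bool(config, key, default=False):
    """Interpret an integer entry as a flag, like bool(stoi(...)) in C++."""
    if key not in config:
        return default  # assumption: a missing key falls back to the default
    return bool(int(config[key]))


sample = """
det_db_unclip_ratio 1.6
use_polygon_score 1  # 0 selects the rectangle-based score
# cls config
"""

cfg = parse_config(sample)
use_polygon_score = read_bool(cfg, "use_polygon_score")
det_db_unclip_ratio = float(cfg["det_db_unclip_ratio"])
print(use_polygon_score, det_db_unclip_ratio)
```

Keeping the flag as an integer in the file and converting it on load keeps the config format uniform: every entry stays a plain whitespace-separated pair.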

@ -44,7 +44,8 @@ public:

|

||||

const bool &use_mkldnn, const int &max_side_len,

|

||||

const double &det_db_thresh,

|

||||

const double &det_db_box_thresh,

|

||||

const double &det_db_unclip_ratio, const bool &visualize,

|

||||

const double &det_db_unclip_ratio,

|

||||

const bool &use_polygon_score, const bool &visualize,

|

||||

const bool &use_tensorrt, const bool &use_fp16) {

|

||||

this->use_gpu_ = use_gpu;

|

||||

this->gpu_id_ = gpu_id;

|

||||

@ -57,6 +58,7 @@ public:

|

||||

this->det_db_thresh_ = det_db_thresh;

|

||||

this->det_db_box_thresh_ = det_db_box_thresh;

|

||||

this->det_db_unclip_ratio_ = det_db_unclip_ratio;

|

||||

this->use_polygon_score_ = use_polygon_score;

|

||||

|

||||

this->visualize_ = visualize;

|

||||

this->use_tensorrt_ = use_tensorrt;

|

||||

@ -85,6 +87,7 @@ private:

|

||||

double det_db_thresh_ = 0.3;

|

||||

double det_db_box_thresh_ = 0.5;

|

||||

double det_db_unclip_ratio_ = 2.0;

|

||||

bool use_polygon_score_ = false;

|

||||

|

||||

bool visualize_ = true;

|

||||

bool use_tensorrt_ = false;

|

||||

|

||||

@ -55,7 +55,8 @@ public:

|

||||

|

||||

std::vector<std::vector<std::vector<int>>>

|

||||

BoxesFromBitmap(const cv::Mat pred, const cv::Mat bitmap,

|

||||

const float &box_thresh, const float &det_db_unclip_ratio);

|

||||

const float &box_thresh, const float &det_db_unclip_ratio,

|

||||

const bool &use_polygon_score);

|

||||

|

||||

std::vector<std::vector<std::vector<int>>>

|

||||

FilterTagDetRes(std::vector<std::vector<std::vector<int>>> boxes,

|

||||

|

||||
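The new `use_polygon_score` argument of `BoxesFromBitmap` selects between two ways of scoring a candidate text region. The pure-Python sketch below illustrates why the choice matters; it is not a port of the C++ code. `rect_score` averages the prediction map over the axis-aligned bounding rectangle, and `polygon_score` averages only the pixels whose centres fall inside the contour. Both function names, the ray-casting test used in place of `cv::fillPoly`, and the 4x4 sample map are assumptions made for illustration.

```python
import math


def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies strictly inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge crosses the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def bounding_rect(polygon, width, height):
    """Clamped bounding rectangle: floor for the minimum, ceil for the maximum."""
    xs = [p[0] for p in polygon]
    ys = [p[1] for p in polygon]
    xmin = min(max(math.floor(min(xs)), 0), width - 1)
    xmax = min(max(math.ceil(max(xs)), 0), width - 1)
    ymin = min(max(math.floor(min(ys)), 0), height - 1)
    ymax = min(max(math.ceil(max(ys)), 0), height - 1)
    return xmin, xmax, ymin, ymax


def rect_score(pred, polygon):
    """Mean of pred over the whole bounding rectangle (fast, coarse)."""
    xmin, xmax, ymin, ymax = bounding_rect(polygon, len(pred[0]), len(pred))
    values = [pred[y][x]
              for y in range(ymin, ymax + 1)
              for x in range(xmin, xmax + 1)]
    return sum(values) / len(values)


def polygon_score(pred, polygon):
    """Mean of pred over pixels whose centre lies inside the polygon."""
    xmin, xmax, ymin, ymax = bounding_rect(polygon, len(pred[0]), len(pred))
    values = [pred[y][x]
              for y in range(ymin, ymax + 1)
              for x in range(xmin, xmax + 1)
              if point_in_polygon(x + 0.5, y + 0.5, polygon)]
    return sum(values) / len(values) if values else 0.0


# Hypothetical prediction map: high values only inside a triangular region.
pred = [
    [0.1, 0.1, 0.1, 0.1],
    [0.9, 0.1, 0.1, 0.1],
    [0.9, 0.9, 0.1, 0.1],
    [0.9, 0.9, 0.9, 0.1],
]
triangle = [(0, 0), (0, 4), (3, 4)]

print(rect_score(pred, triangle), polygon_score(pred, triangle))
```

With a box threshold of 0.5, this region would be discarded under the rectangle score and kept under the polygon score, which is the trade-off between speed and accuracy on non-rectangular text that the config comment describes.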

@ -183,7 +183,7 @@ cmake .. \

|

||||

make -j

|

||||

```

|

||||

|

||||

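`OPENCV_DIR` is the path where OpenCV was compiled and installed; `LIB_DIR` is the path of the Paddle inference library, either downloaded (the `paddle_inference` folder) or compiled (the `build/paddle_inference_install_dir` folder); `CUDA_LIB_DIR` is the path of the CUDA library files, in docker; it is `/usr/local/cuda/lib64`; `CUDNN_LIB_DIR` is the path of the cuDNN library files, in docker it is `/usr/lib/x86_64-linux-gnu/`.

`OPENCV_DIR` is the path where OpenCV was compiled and installed; `LIB_DIR` is the path of the Paddle inference library, either downloaded (the `paddle_inference` folder) or compiled (the `build/paddle_inference_install_dir` folder); `CUDA_LIB_DIR` is the path of the CUDA library files, in docker it is `/usr/local/cuda/lib64`; `CUDNN_LIB_DIR` is the path of the cuDNN library files, in docker it is `/usr/lib/x86_64-linux-gnu/`.

* After compilation, an executable file named `ocr_system` is generated in the `build` folder.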

|

||||

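@ -211,6 +211,7 @@ max_side_len 960 # When the input image's height or width exceeds 960, the image is scaled proportionally so that

det_db_thresh 0.3 # Used to filter the binarized map predicted by DB; values in 0.0-0.3 have no obvious effect on the result

det_db_box_thresh 0.5 # Threshold for filtering boxes in DB post-processing; reduce it as appropriate if text boxes are missed

det_db_unclip_ratio 1.6 # Tightness of the text box; the smaller the value, the closer the box is to the text

use_polygon_score 1 # Whether to compute the bbox score over the polygon; 0 uses the rectangle instead. The rectangle is faster to compute; the polygon is more accurate for curved text regions.

det_model_dir ./inference/det_db # Path of the detection inference model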

|

||||

|

||||

# cls config

|

||||

|

||||

@ -217,6 +217,7 @@ max_side_len 960 # Limit the maximum image height and width to 960

|

||||

det_db_thresh 0.3 # Used to filter the binarized map predicted by DB; values in 0.0-0.3 have no obvious effect on the result

det_db_box_thresh 0.5 # Threshold for filtering boxes in DB post-processing; reduce it as appropriate if text boxes are missed

det_db_unclip_ratio 1.6 # Tightness of the text box; the smaller the value, the closer the box is to the text

use_polygon_score 1 # Whether to compute the bbox score over the polygon; 0 uses the rectangle instead. The rectangle is faster to compute; the polygon is more accurate for curved text regions.

|

||||

det_model_dir ./inference/det_db # Address of detection inference model

|

||||

|

||||

# cls config

|

||||

|

||||

@ -59,7 +59,8 @@ int main(int argc, char **argv) {

|

||||

config.gpu_mem, config.cpu_math_library_num_threads,

|

||||

config.use_mkldnn, config.max_side_len, config.det_db_thresh,

|

||||

config.det_db_box_thresh, config.det_db_unclip_ratio,

|

||||

config.visualize, config.use_tensorrt, config.use_fp16);

|

||||

config.use_polygon_score, config.visualize,

|

||||

config.use_tensorrt, config.use_fp16);

|

||||

|

||||

Classifier *cls = nullptr;

|

||||

if (config.use_angle_cls == true) {

|

||||

|

||||

@ -109,9 +109,9 @@ void DBDetector::Run(cv::Mat &img,

|

||||

cv::Mat dilation_map;

|

||||

cv::Mat dila_ele = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(2, 2));

|

||||

cv::dilate(bit_map, dilation_map, dila_ele);

|

||||

boxes = post_processor_.BoxesFromBitmap(pred_map, dilation_map,

|

||||

this->det_db_box_thresh_,

|

||||

this->det_db_unclip_ratio_);

|

||||

boxes = post_processor_.BoxesFromBitmap(

|

||||

pred_map, dilation_map, this->det_db_box_thresh_,

|

||||

this->det_db_unclip_ratio_, this->use_polygon_score_);

|

||||

|

||||

boxes = post_processor_.FilterTagDetRes(boxes, ratio_h, ratio_w, srcimg);

|

||||

|

||||

|

||||

@ -160,35 +160,49 @@ std::vector<std::vector<float>> PostProcessor::GetMiniBoxes(cv::RotatedRect box,

|

||||

}

|

||||

|

||||

float PostProcessor::PolygonScoreAcc(std::vector<cv::Point> contour,

|

||||

cv::Mat pred){

|

||||

cv::Mat pred) {

|

||||

int width = pred.cols;

|

||||

int height = pred.rows;

|

||||

std::vector<float> box_x;

|

||||

std::vector<float> box_y;

|

||||

for(int i=0; i<contour.size(); ++i){

|

||||

for (int i = 0; i < contour.size(); ++i) {

|

||||

box_x.push_back(contour[i].x);

|

||||

box_y.push_back(contour[i].y);

|

||||

}

|

||||

|

||||

int xmin = clamp(int(std::floor(*(std::min_element(box_x.begin(), box_x.end())))), 0, width - 1);

|

||||

int xmax = clamp(int(std::ceil(*(std::max_element(box_x.begin(), box_x.end())))), 0, width - 1);

|

||||

int ymin = clamp(int(std::floor(*(std::min_element(box_y.begin(), box_y.end())))), 0, height - 1);

|

||||

int ymax = clamp(int(std::ceil(*(std::max_element(box_y.begin(), box_y.end())))), 0, height - 1);

|

||||

int xmin =

|

||||

clamp(int(std::floor(*(std::min_element(box_x.begin(), box_x.end())))), 0,

|

||||

width - 1);

|

||||

int xmax =

|

||||

clamp(int(std::ceil(*(std::max_element(box_x.begin(), box_x.end())))), 0,

|

||||

width - 1);

|

||||

int ymin =

|

||||

clamp(int(std::floor(*(std::min_element(box_y.begin(), box_y.end())))), 0,

|

||||

height - 1);

|

||||

int ymax =

|

||||

clamp(int(std::ceil(*(std::max_element(box_y.begin(), box_y.end())))), 0,

|

||||

height - 1);

|

||||

|

||||

cv::Mat mask;

|

||||

mask = cv::Mat::zeros(ymax - ymin + 1, xmax - xmin + 1, CV_8UC1);

|

||||

|

||||

cv::Point rook_point[contour.size()];

|

||||

for(int i=0; i<contour.size(); ++i){

|

||||

|

||||

cv::Point* rook_point = new cv::Point[contour.size()];

|

||||

|

||||

for (int i = 0; i < contour.size(); ++i) {

|

||||

rook_point[i] = cv::Point(int(box_x[i]) - xmin, int(box_y[i]) - ymin);

|

||||

}

|

||||

const cv::Point *ppt[1] = {rook_point};

|

||||

int npt[] = {int(contour.size())};

|

||||

|

||||

|

||||

cv::fillPoly(mask, ppt, npt, 1, cv::Scalar(1));

|

||||

|

||||

cv::Mat croppedImg;

|

||||

pred(cv::Rect(xmin, ymin, xmax - xmin + 1, ymax - ymin + 1)).copyTo(croppedImg);

|

||||

float score = cv::mean(croppedImg, mask)[0];

|

||||

|

||||

delete []rook_point;

|

||||

return score;

|

||||

}

|

||||

|

||||

@ -230,10 +244,9 @@ float PostProcessor::BoxScoreFast(std::vector<std::vector<float>> box_array,

|

||||

return score;

|

||||

}

|

||||

|

||||

std::vector<std::vector<std::vector<int>>>

|

||||

PostProcessor::BoxesFromBitmap(const cv::Mat pred, const cv::Mat bitmap,

|

||||

const float &box_thresh,

|

||||

const float &det_db_unclip_ratio) {

|

||||

std::vector<std::vector<std::vector<int>>> PostProcessor::BoxesFromBitmap(

|

||||

const cv::Mat pred, const cv::Mat bitmap, const float &box_thresh,

|

||||

const float &det_db_unclip_ratio, const bool &use_polygon_score) {

|

||||

const int min_size = 3;

|

||||

const int max_candidates = 1000;

|

||||

|

||||

@ -267,9 +280,12 @@ PostProcessor::BoxesFromBitmap(const cv::Mat pred, const cv::Mat bitmap,

|

||||

}

|

||||

|

||||

float score;

|

||||

score = BoxScoreFast(array, pred);

|

||||

/* compute using polygon*/

|

||||

// score = PolygonScoreAcc(contours[_i], pred);

|

||||

if (use_polygon_score)

|

||||

/* compute using polygon*/

|

||||

score = PolygonScoreAcc(contours[_i], pred);

|

||||

else

|

||||

score = BoxScoreFast(array, pred);

|

||||

|

||||

if (score < box_thresh)

|

||||

continue;

|

||||

|

||||

|

||||

@ -10,6 +10,7 @@ max_side_len 960

|

||||

det_db_thresh 0.3

|

||||

det_db_box_thresh 0.5

|

||||

det_db_unclip_ratio 1.6

|

||||

use_polygon_score 1

|

||||

det_model_dir ./inference/ch_ppocr_mobile_v2.0_det_infer/

|

||||

|

||||

# cls config

|

||||

|

||||

@ -11,7 +11,7 @@ PaddleOCR 旨在打造一套丰富、领先、且实用的OCR工具库,不仅

|

||||

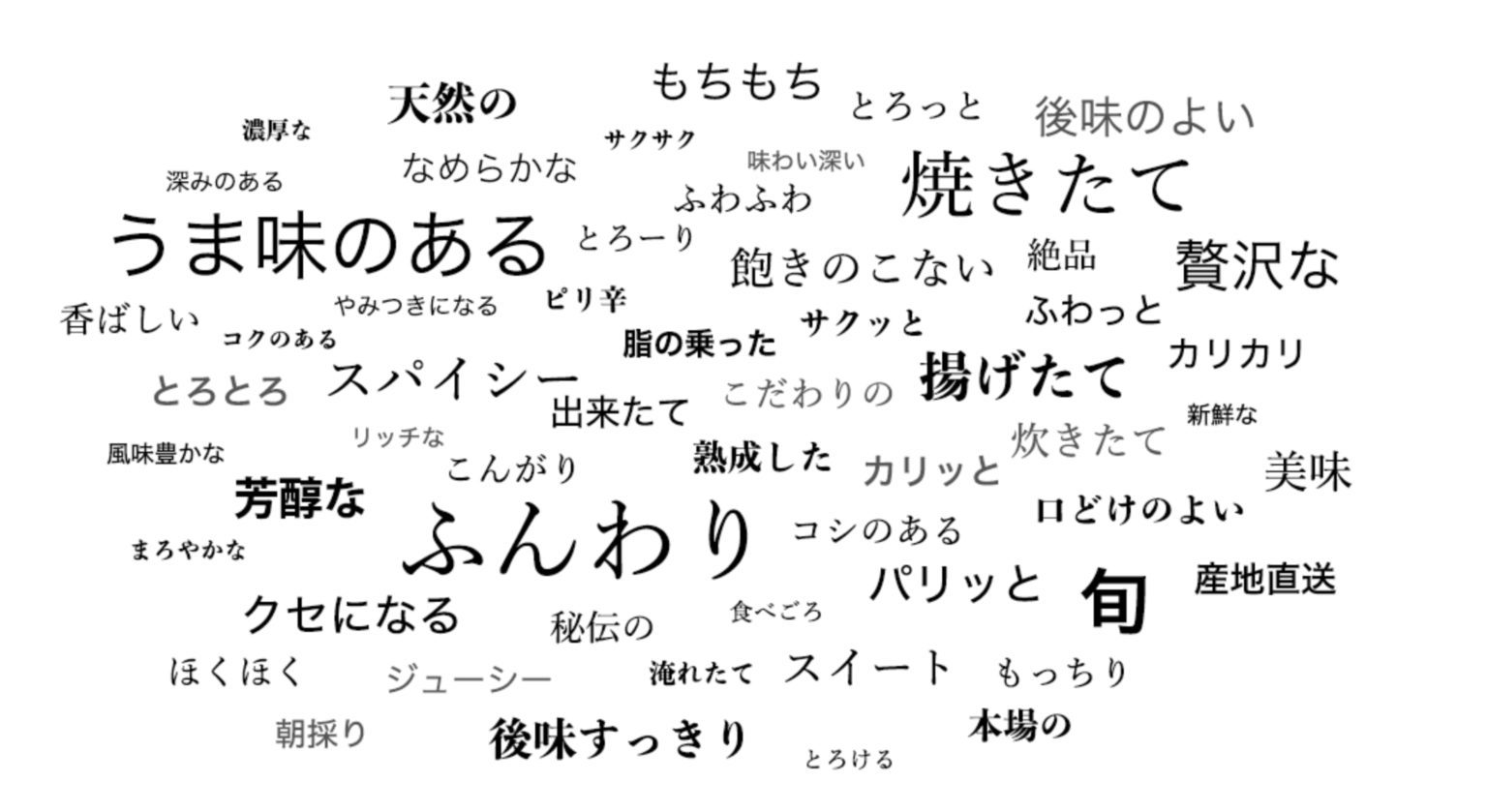

其中英文模型支持,大小写字母和常见标点的检测识别,并优化了空格字符的识别:

|

||||

|

||||

<div align="center">

|

||||

<img src="../imgs_results/multi_lang/en_1.jpg" width="400" height="600">

|

||||

<img src="../imgs_results/multi_lang/img_12.jpg" width="900" height="300">

|

||||

</div>

|

||||

|

||||

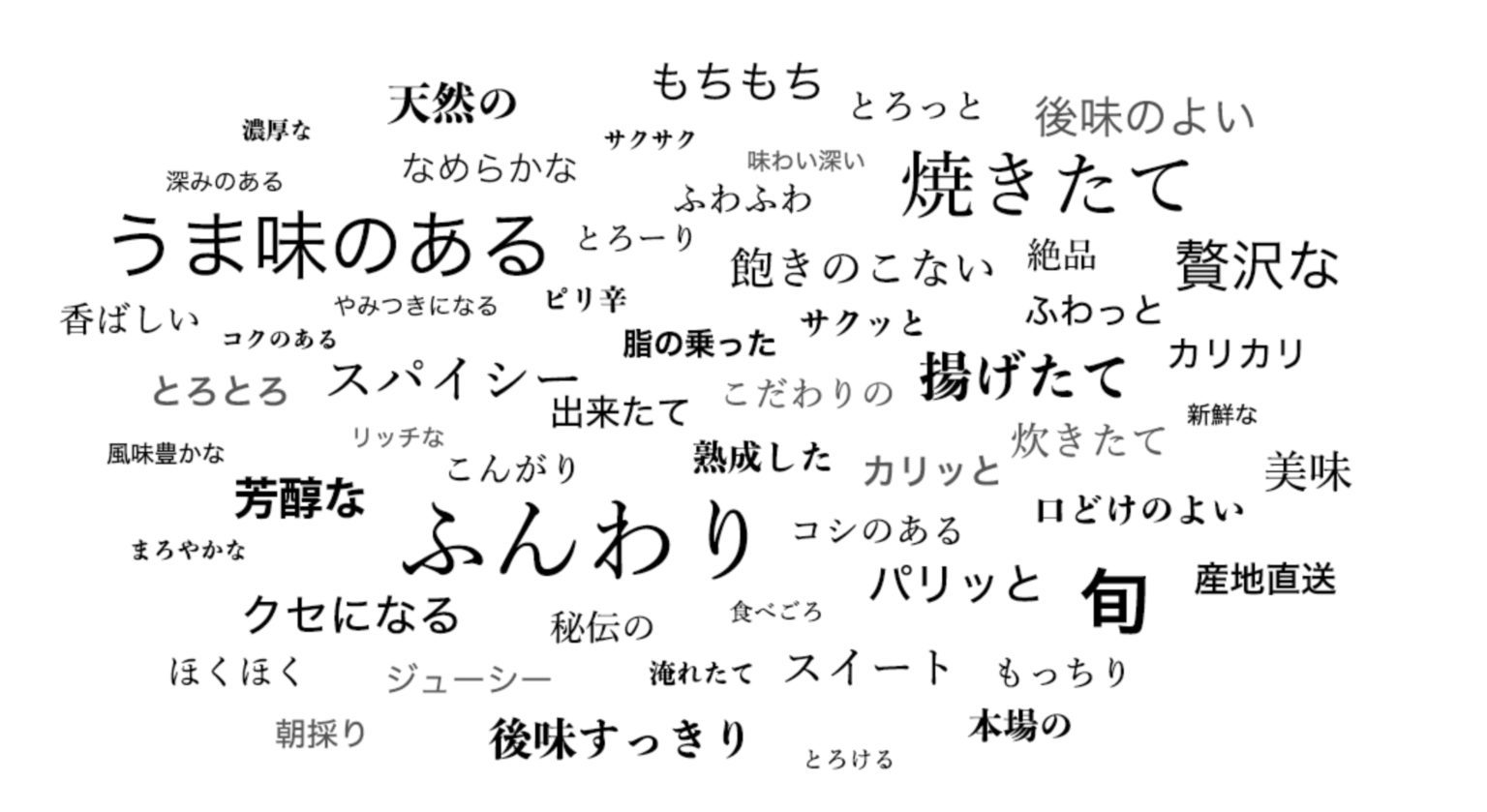

小语种模型覆盖了拉丁语系、阿拉伯语系、中文繁体、韩语、日语等等:

|

||||

@ -19,6 +19,8 @@ PaddleOCR 旨在打造一套丰富、领先、且实用的OCR工具库,不仅

|

||||

<div align="center">

|

||||

<img src="../imgs_results/multi_lang/japan_2.jpg" width="600" height="300">

|

||||

<img src="../imgs_results/multi_lang/french_0.jpg" width="300" height="300">

|

||||

<img src="../imgs_results/multi_lang/korean_0.jpg" width="500" height="300">

|

||||

<img src="../imgs_results/multi_lang/arabic_0.jpg" width="300" height="300">

|

||||

</div>

|

||||

|

||||

|

||||

@ -30,14 +32,9 @@ PaddleOCR aims to build a rich, leading, and practical OCR tool library, not only

- [2 Quick use](#快速使用)

- [2.1 Command-line usage](#命令行运行)

- [2.1.1 Whole-image prediction](#bash_检测+识别)

- [2.1.2 Recognition](#bash_识别)

- [2.1.3 Detection](#bash_检测)

- [2.2 Python script usage](#python_脚本运行)

- [2.2.1 Whole-image prediction](#python_检测+识别)

- [2.2.2 Recognition](#python_识别)

- [2.2.3 Detection](#python_检测)

- [3 Custom training](#自定义训练)

- [4 Inference and deployment](#预测部署)

- [4 Supported languages and abbreviations](#语种缩写)

|

||||

|

||||

<a name="安装"></a>

|

||||

@ -50,7 +47,7 @@ PaddleOCR aims to build a rich, leading, and practical OCR tool library, not only

|

||||

pip install paddlepaddle

|

||||

|

||||

# gpu

|

||||

pip instll paddlepaddle-gpu

|

||||

pip install paddlepaddle-gpu

|

||||

```

|

||||

|

||||

<a name="paddleocr_package_安装"></a>

|

||||

@ -108,8 +105,6 @@ paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan

|

||||

paddleocr --image_dir doc/imgs_words/japan/1.jpg --det false --lang=japan

|

||||

```

|

||||

|

||||

|

||||

|

||||

The result is a tuple containing the recognized text and the recognition confidence

|

||||

|

||||

```text

|

||||

@ -145,6 +140,9 @@ from paddleocr import PaddleOCR, draw_ocr

|

||||

ocr = PaddleOCR(lang="korean")  # The model files are downloaded automatically on first run
img_path = 'doc/imgs/korean_1.jpg'
result = ocr.ocr(img_path)
# Recognition and detection can also be run separately via parameters
# result = ocr.ocr(img_path, det=False)  # recognition only
# result = ocr.ocr(img_path, rec=False)  # detection only
# Print the detection boxes and recognition results
for line in result:
    print(line)

|

||||

@ -166,59 +164,7 @@ im_show.save('result.jpg')

|

||||

<img src="https://raw.githubusercontent.com/PaddlePaddle/PaddleOCR/release/2.1/doc/imgs_results/korean.jpg" width="800">

|

||||

</div>

|

||||

|

||||

* Recognition

|

||||

|

||||

```

|

||||

from paddleocr import PaddleOCR

|

||||

ocr = PaddleOCR(lang="german")

|

||||

img_path = 'PaddleOCR/doc/imgs_words/german/1.jpg'

|

||||

result = ocr.ocr(img_path, det=False, cls=True)

|

||||

for line in result:

|

||||

print(line)

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

The result is a tuple containing only the recognized text and the recognition confidence

|

||||

|

||||

```

|

||||

('leider auch jetzt', 0.97538936)

|

||||

```

|

||||

|

||||

* Detection

|

||||

|

||||

```python

|

||||

from paddleocr import PaddleOCR, draw_ocr

|

||||

ocr = PaddleOCR() # need to run only once to download and load model into memory

|

||||

img_path = 'PaddleOCR/doc/imgs_en/img_12.jpg'

|

||||

result = ocr.ocr(img_path, rec=False)

|

||||

for line in result:

|

||||

print(line)

|

||||

|

||||

# Show the result

|

||||

from PIL import Image

|

||||

|

||||

image = Image.open(img_path).convert('RGB')

|

||||

im_show = draw_ocr(image, result, txts=None, scores=None, font_path='/path/to/PaddleOCR/doc/fonts/simfang.ttf')

|

||||

im_show = Image.fromarray(im_show)

|

||||

im_show.save('result.jpg')

|

||||

```

|

||||

The result is a list; each item contains only a text box

|

||||

```bash

|

||||

[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

|

||||

[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

|

||||

[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

|

||||

......

|

||||

```

|

||||

|

||||

Visualization of results:

|

||||

|

||||

<div align="center">

|

||||

<img src="https://raw.githubusercontent.com/PaddlePaddle/PaddleOCR/release/2.1/doc/imgs_results/whl/12_det.jpg" width="800">

|

||||

</div>

|

||||

|

||||

ppocr also supports direction classification. For more usage, see: [whl package instructions](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md).

ppocr also supports direction classification. For more usage, see: [whl package instructions](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md)

|

||||

|

||||

<a name="自定义训练"></a>

|

||||

## 3 Custom training

|

||||

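@ -229,84 +175,58 @@ ppocr supports custom training or fine-tuning with your own data, where the recognition

For details of data preparation and training, see [Text detection](../doc_ch/detection.md) and [Text recognition](../doc_ch/recognition.md); for more features such as inference deployment

and data annotation, read the complete [documentation tutorial](../../README_ch.md).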

|

||||

|

||||

<a name="语种缩写"></a>

|

||||

## 4 Supported languages and abbreviations

|

||||

<a name="预测部署"></a>

|

||||

## 4 Inference and deployment

|

||||

|

||||

| Language (Chinese name) | Description | Abbreviation |

|

||||

| --- | --- | --- |

|

||||

|中文|chinese and english|ch|

|

||||

|英文|english|en|

|

||||

|法文|french|fr|

|

||||

|德文|german|german|

|

||||

|日文|japan|japan|

|

||||

|韩文|korean|korean|

|

||||

|中文繁体|chinese traditional |chinese_cht|

|

||||

|意大利文| Italian |it|

|

||||

|西班牙文|Spanish |es|

|

||||

|葡萄牙文| Portuguese|pt|

|

||||

|俄罗斯文|Russia|ru|

|

||||

|阿拉伯文|Arabic|ar|

|

||||

|印地文|Hindi|hi|

|

||||

|维吾尔|Uyghur|ug|

|

||||

|波斯文|Persian|fa|

|

||||

|乌尔都文|Urdu|ur|

|

||||

|塞尔维亚文(latin)| Serbian(latin) |rs_latin|

|

||||

|欧西坦文|Occitan |oc|

|

||||

|马拉地文|Marathi|mr|

|

||||

|尼泊尔文|Nepali|ne|

|

||||

|塞尔维亚文(cyrillic)|Serbian(cyrillic)|rs_cyrillic|

|

||||

|保加利亚文|Bulgarian |bg|

|

||||

|乌克兰文|Ukranian|uk|

|

||||

|白俄罗斯文|Belarusian|be|

|

||||

|泰卢固文|Telugu |te|

|

||||

|泰米尔文|Tamil |ta|

|

||||

|南非荷兰文 |Afrikaans |af|

|

||||

|阿塞拜疆文 |Azerbaijani |az|

|

||||

|波斯尼亚文|Bosnian|bs|

|

||||

|捷克文|Czech|cs|

|

||||

|威尔士文 |Welsh |cy|

|

||||

|丹麦文 |Danish|da|

|

||||

|爱沙尼亚文 |Estonian |et|

|

||||

|爱尔兰文 |Irish |ga|

|

||||

|克罗地亚文|Croatian |hr|

|

||||

|匈牙利文|Hungarian |hu|

|

||||

|印尼文|Indonesian|id|

|

||||

|冰岛文 |Icelandic|is|

|

||||

|库尔德文 |Kurdish|ku|

|

||||

|立陶宛文|Lithuanian |lt|

|

||||

|拉脱维亚文 |Latvian |lv|

|

||||

|毛利文|Maori|mi|

|

||||

|马来文 |Malay|ms|

|

||||

|马耳他文 |Maltese |mt|

|

||||

|荷兰文 |Dutch |nl|

|

||||

|挪威文 |Norwegian |no|

|

||||

|波兰文|Polish |pl|

|

||||

| 罗马尼亚文|Romanian |ro|

|

||||

| 斯洛伐克文|Slovak |sk|

|

||||

| 斯洛文尼亚文|Slovenian |sl|

|

||||

| 阿尔巴尼亚文|Albanian |sq|

|

||||

| 瑞典文|Swedish |sv|

|

||||

| 西瓦希里文|Swahili |sw|

|

||||

| 塔加洛文|Tagalog |tl|

|

||||

| 土耳其文|Turkish |tr|

|

||||

| 乌兹别克文|Uzbek |uz|

|

||||

| 越南文|Vietnamese |vi|

|

||||

| 蒙古文|Mongolian |mn|

|

||||

| 阿巴扎文|Abaza |abq|

|

||||

| 阿迪赫文|Adyghe |ady|

|

||||

| 卡巴丹文|Kabardian |kbd|

|

||||

| 阿瓦尔文|Avar |ava|

|

||||

| 达尔瓦文|Dargwa |dar|

|

||||

| 因古什文|Ingush |inh|

|

||||

| 拉克文|Lak |lbe|

|

||||

| 莱兹甘文|Lezghian |lez|

|

||||

|塔巴萨兰文 |Tabassaran |tab|

|

||||

| 比尔哈文|Bihari |bh|

|

||||

| 迈蒂利文|Maithili |mai|

|

||||

| 昂加文|Angika |ang|

|

||||

| 孟加拉文|Bhojpuri |bho|

|

||||

| 摩揭陀文 |Magahi |mah|

|

||||

| 那格浦尔文|Nagpur |sck|

|

||||

| 尼瓦尔文|Newari |new|

|

||||

| 保加利亚文 |Goan Konkani|gom|

|

||||

| 沙特阿拉伯文|Saudi Arabia|sa|

|

||||

Besides installing the whl package for quick prediction, ppocr also provides several inference deployment options; read the relevant documents as needed:

- [Inference with the Python prediction engine](./inference.md)

- [Inference with the C++ prediction engine](../../deploy/cpp_infer/readme.md)

- [Serving deployment](../../deploy/hubserving/readme.md)

- [On-device deployment](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/deploy/lite/readme.md)

- [Benchmark](./benchmark.md)

|

||||

|

||||

|

||||

|

||||

<a name="语种缩写"></a>

|

||||

## 5 Supported languages and abbreviations

|

||||

|

||||

| Language (Chinese name) | Description | Abbreviation | | Language (Chinese name) | Description | Abbreviation |
| --- | --- | --- | --- | --- | --- | --- |
| 中文 | Chinese and English | ch | | 保加利亚文 | Bulgarian | bg |
| 英文 | English | en | | 乌克兰文 | Ukrainian | uk |
| 法文 | French | fr | | 白俄罗斯文 | Belarusian | be |
| 德文 | German | german | | 泰卢固文 | Telugu | te |
| 日文 | Japanese | japan | | 阿巴扎文 | Abaza | abq |
| 韩文 | Korean | korean | | 泰米尔文 | Tamil | ta |
| 中文繁体 | Traditional Chinese | ch_tra | | 南非荷兰文 | Afrikaans | af |
| 意大利文 | Italian | it | | 阿塞拜疆文 | Azerbaijani | az |
| 西班牙文 | Spanish | es | | 波斯尼亚文 | Bosnian | bs |
| 葡萄牙文 | Portuguese | pt | | 捷克文 | Czech | cs |
| 俄罗斯文 | Russian | ru | | 威尔士文 | Welsh | cy |
| 阿拉伯文 | Arabic | ar | | 丹麦文 | Danish | da |
| 印地文 | Hindi | hi | | 爱沙尼亚文 | Estonian | et |
| 维吾尔 | Uyghur | ug | | 爱尔兰文 | Irish | ga |
| 波斯文 | Persian | fa | | 克罗地亚文 | Croatian | hr |
| 乌尔都文 | Urdu | ur | | 匈牙利文 | Hungarian | hu |
| 塞尔维亚文(latin) | Serbian(latin) | rs_latin | | 印尼文 | Indonesian | id |
| 欧西坦文 | Occitan | oc | | 冰岛文 | Icelandic | is |
| 马拉地文 | Marathi | mr | | 库尔德文 | Kurdish | ku |
| 尼泊尔文 | Nepali | ne | | 立陶宛文 | Lithuanian | lt |
| 塞尔维亚文(cyrillic) | Serbian(cyrillic) | rs_cyrillic | | 拉脱维亚文 | Latvian | lv |
| 毛利文 | Maori | mi | | 达尔瓦文 | Dargwa | dar |
| 马来文 | Malay | ms | | 因古什文 | Ingush | inh |
| 马耳他文 | Maltese | mt | | 拉克文 | Lak | lbe |
| 荷兰文 | Dutch | nl | | 莱兹甘文 | Lezghian | lez |
| 挪威文 | Norwegian | no | | 塔巴萨兰文 | Tabassaran | tab |
| 波兰文 | Polish | pl | | 比尔哈文 | Bihari | bh |
| 罗马尼亚文 | Romanian | ro | | 迈蒂利文 | Maithili | mai |
| 斯洛伐克文 | Slovak | sk | | 昂加文 | Angika | ang |
| 斯洛文尼亚文 | Slovenian | sl | | 孟加拉文 | Bhojpuri | bho |
| 阿尔巴尼亚文 | Albanian | sq | | 摩揭陀文 | Magahi | mah |
| 瑞典文 | Swedish | sv | | 那格浦尔文 | Nagpur | sck |
| 西瓦希里文 | Swahili | sw | | 尼瓦尔文 | Newari | new |
| 塔加洛文 | Tagalog | tl | | 保加利亚文 | Goan Konkani | gom |
| 土耳其文 | Turkish | tr | | 沙特阿拉伯文 | Saudi Arabia | sa |
| 乌兹别克文 | Uzbek | uz | | 阿瓦尔文 | Avar | ava |
| 越南文 | Vietnamese | vi | | 阿瓦尔文 | Avar | ava |
| 蒙古文 | Mongolian | mn | | 阿迪赫文 | Adyghe | ady |

|

||||

|

||||

@ -13,7 +13,7 @@ Among them, the English model supports the detection and recognition of uppercas

|

||||

letters and common punctuation, and the recognition of space characters is optimized:

|

||||

|

||||

<div align="center">

|

||||

<img src="../imgs_results/multi_lang/en_1.jpg" width="400" height="600">

|

||||

<img src="../imgs_results/multi_lang/img_12.jpg" width="900" height="300">

|

||||

</div>

|

||||

|

||||

The multilingual models cover Latin, Arabic, Traditional Chinese, Korean, Japanese, etc.:

|

||||

@ -21,6 +21,8 @@ The multilingual models cover Latin, Arabic, Traditional Chinese, Korean, Japane

|

||||

<div align="center">

|

||||

<img src="../imgs_results/multi_lang/japan_2.jpg" width="600" height="300">

|

||||

<img src="../imgs_results/multi_lang/french_0.jpg" width="300" height="300">

|

||||

<img src="../imgs_results/multi_lang/korean_0.jpg" width="500" height="300">

|

||||

<img src="../imgs_results/multi_lang/arabic_0.jpg" width="300" height="300">

|

||||

</div>

|

||||

|

||||

This document will briefly introduce how to use the multilingual model.

|

||||

@ -31,14 +33,9 @@ This document will briefly introduce how to use the multilingual model.

|

||||

|

||||

- [2 Quick Use](#Quick_Use)

|

||||

- [2.1 Command line operation](#Command_line_operation)

|

||||

- [2.1.1 Prediction of the whole image](#bash_detection+recognition)

|

||||

- [2.1.2 Recognition](#bash_Recognition)

|

||||

- [2.1.3 Detection](#bash_detection)

|

||||

- [2.2 python script running](#python_Script_running)

|

||||

- [2.2.1 Whole image prediction](#python_detection+recognition)

|

||||

- [2.2.2 Recognition](#python_Recognition)

|

||||

- [2.2.3 Detection](#python_detection)

|

||||

- [3 Custom Training](#Custom_Training)

|

||||

- [4 Inference and Deployment](#inference)

|

||||

- [4 Supported languages and abbreviations](#language_abbreviations)

|

||||

|

||||

<a name="Install"></a>

|

||||

@ -51,7 +48,7 @@ This document will briefly introduce how to use the multilingual model.

|

||||

pip install paddlepaddle

|

||||

|

||||

# gpu

|

||||

pip instll paddlepaddle-gpu

|

||||

pip install paddlepaddle-gpu

|

||||

```

|

||||

|

||||

<a name="paddleocr_package_install"></a>

|

||||

@ -89,7 +86,7 @@ The specific supported [language] (#language_abbreviations) can be viewed in the

|

||||

|

||||

paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan

|

||||

```

|

||||

|

||||

|

||||

|

||||

The result is a list, each item contains a text box, text and recognition confidence

|

||||

```text

|

||||

@ -106,7 +103,7 @@ The result is a list, each item contains a text box, text and recognition confid

|

||||

paddleocr --image_dir doc/imgs_words/japan/1.jpg --det false --lang=japan

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

The result is a tuple, which returns the recognition result and recognition confidence

|

||||

|

||||

@ -143,6 +140,9 @@ from paddleocr import PaddleOCR, draw_ocr

|

||||

ocr = PaddleOCR(lang="korean")  # The model file is downloaded automatically on first run
img_path = 'doc/imgs/korean_1.jpg'
result = ocr.ocr(img_path)
# Recognition and detection can be run separately via parameters
# result = ocr.ocr(img_path, det=False)  # recognition only
# result = ocr.ocr(img_path, rec=False)  # detection only
# Print the detection boxes and recognition results
for line in result:
    print(line)

|

||||
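The document describes the whole-image result as a list whose items each hold a text box, the text, and the recognition confidence. A small sketch of post-processing such a list follows. The nesting `[box, (text, score)]`, the sample values, and the helper name `filter_by_confidence` are assumptions made for illustration, so check them against what `ocr.ocr` actually returns in the installed version.

```python
# Hypothetical sample shaped like the described output: [box, (text, score)].
sample_result = [
    [[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]],
     ("example text", 0.97)],
    [[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]],
     ("blurry text", 0.41)],
]


def filter_by_confidence(result, min_score=0.5):
    """Keep only items whose recognition confidence reaches min_score."""
    kept = []
    for box, (text, score) in result:
        if score >= min_score:
            kept.append({"box": box, "text": text, "score": score})
    return kept


for item in filter_by_confidence(sample_result):
    print(item["text"], item["score"])
```

Unpacking each item into named fields up front keeps later code independent of the positional layout, which helps if the layout differs between releases.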

@ -162,54 +162,6 @@ Visualization of results:

|

||||

|

||||

|

||||

|

||||

* Recognition

|

||||

|

||||

```

|

||||

from paddleocr import PaddleOCR

|

||||

ocr = PaddleOCR(lang="german")

|

||||

img_path ='PaddleOCR/doc/imgs_words/german/1.jpg'

|

||||

result = ocr.ocr(img_path, det=False, cls=True)

|

||||

for line in result:

|

||||

print(line)

|

||||

```

|

||||

|

||||

|

||||

|

||||

The result is a tuple, which only contains the recognition result and recognition confidence

|

||||

|

||||

```

|

||||

('leider auch jetzt', 0.97538936)

|

||||

```

|

||||

|

||||

* Detection

|

||||

|

||||

```python

|

||||

from paddleocr import PaddleOCR, draw_ocr

|

||||

ocr = PaddleOCR() # need to run only once to download and load model into memory

|

||||

img_path ='PaddleOCR/doc/imgs_en/img_12.jpg'

|

||||

result = ocr.ocr(img_path, rec=False)

|

||||

for line in result:

|

||||

print(line)

|

||||

|

||||

# show result

|

||||

from PIL import Image

|

||||

|

||||

image = Image.open(img_path).convert('RGB')

|

||||

im_show = draw_ocr(image, result, txts=None, scores=None, font_path='/path/to/PaddleOCR/doc/fonts/simfang.ttf')

|

||||

im_show = Image.fromarray(im_show)

|

||||

im_show.save('result.jpg')

|

||||

```

|

||||

The result is a list, each item contains only text boxes

|

||||

```bash

|

||||

[[26.0, 457.0], [137.0, 457.0], [137.0, 477.0], [26.0, 477.0]]

|

||||

[[25.0, 425.0], [372.0, 425.0], [372.0, 448.0], [25.0, 448.0]]

|

||||

[[128.0, 397.0], [273.0, 397.0], [273.0, 414.0], [128.0, 414.0]]

|

||||

......

|

||||

```

|

||||

|

||||

Visualization of results:

|

||||

|

||||

|

||||

ppocr also supports direction classification. For more usage methods, please refer to: [whl package instructions](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.0/doc/doc_ch/whl.md).

|

||||

|

||||

<a name="Custom_training"></a>

|

||||

@ -221,84 +173,61 @@ Modify the training data path, dictionary and other parameters.

|

||||

For data preparation and the training process, see [Text Detection](../doc_en/detection_en.md) and [Text Recognition](../doc_en/recognition_en.md).

For more features, such as inference deployment and data annotation, read the complete [documentation tutorial](../../README.md).

|

||||

|

||||

<a name="language_abbreviation"></a>

|

||||

## 4 Support languages and abbreviations

|

||||

|

||||

| Language | Abbreviation |

|

||||

| --- | --- |

|

||||

|chinese and english|ch|

|

||||

|english|en|

|

||||

|french|fr|

|

||||

|german|german|

|

||||

|japan|japan|

|

||||

|korean|korean|

|

||||

|chinese traditional |chinese_cht|

|

||||

| Italian |it|

|

||||

|Spanish |es|

|

||||

| Portuguese|pt|

|

||||

|Russia|ru|

|

||||

|Arabic|ar|

|

||||

|Hindi|hi|

|

||||

|Uyghur|ug|

|

||||

|Persian|fa|

|

||||

|Urdu|ur|

|

||||

| Serbian(latin) |rs_latin|

|

||||

|Occitan |oc|

|

||||

|Marathi|mr|

|

||||

|Nepali|ne|

|

||||

|Serbian(cyrillic)|rs_cyrillic|

|

||||

|Bulgarian |bg|

|

||||

|Ukranian|uk|

|

||||

|Belarusian|be|

|

||||

|Telugu |te|

|

||||

|Tamil |ta|

|

||||

|Afrikaans |af|

|

||||

|Azerbaijani |az|

|

||||

|Bosnian|bs|

|

||||

|Czech|cs|

|

||||

|Welsh |cy|

|

||||

|Danish|da|

|

||||

|Estonian |et|

|

||||

|Irish |ga|

|

||||

|Croatian |hr|

|

||||

|Hungarian |hu|

|

||||

|Indonesian|id|

|

||||

|Icelandic|is|

|

||||

|Kurdish|ku|

|

||||

|Lithuanian |lt|

|

||||

|Latvian |lv|

|

||||

|Maori|mi|

|

||||

|Malay|ms|

|

||||

|Maltese |mt|

|

||||

|Dutch |nl|

|

||||

|Norwegian |no|

|

||||

|Polish |pl|

|

||||

|Romanian |ro|

|

||||

|Slovak |sk|

|

||||

|Slovenian |sl|

|

||||

|Albanian |sq|

|

||||

|Swedish |sv|

|

||||

|Swahili |sw|

|

||||

|Tagalog |tl|

|

||||

|Turkish |tr|

|

||||

|Uzbek |uz|

|

||||

|Vietnamese |vi|

|

||||

|Mongolian |mn|

|

||||

|Abaza |abq|

|

||||

|Adyghe |ady|

|

||||

|Kabardian |kbd|

|

||||

|Avar |ava|

|

||||

|Dargwa |dar|

|

||||

|Ingush |inh|

|

||||

|Lak |lbe|

|

||||

|Lezghian |lez|

|

||||

|Tabassaran |tab|

|

||||

|Bihari |bh|

|

||||

|Maithili |mai|

|

||||

|Angika |ang|

|

||||

|Bhojpuri |bho|

|

||||

|Magahi |mah|

|

||||

|Nagpur |sck|

|

||||

|Newari |new|

|

||||

|Goan Konkani|gom|

|

||||

|Saudi Arabia|sa|

|

||||

<a name="inference"></a>

|

||||

## 4 Inference and Deployment

|

||||

|

||||

In addition to installing the whl package for quick prediction,

ppocr also provides a variety of inference deployment methods.

If necessary, you can read the related documents:

|

||||

|

||||

- [Python Inference](./inference_en.md)

|

||||

- [C++ Inference](../../deploy/cpp_infer/readme_en.md)

|

||||

- [Serving](../../deploy/hubserving/readme_en.md)

|

||||

- [Mobile](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/deploy/lite/readme_en.md)

|

||||

- [Benchmark](./benchmark_en.md)

|

||||

|

||||

|

||||

<a name="language_abbreviations"></a>

|

||||

## 5 Supported languages and abbreviations

|

||||

|

||||

| Language | Abbreviation | | Language | Abbreviation |
| --- | --- | --- | --- | --- |
| Chinese and English | ch | | Arabic | ar |
| English | en | | Hindi | hi |
| French | fr | | Uyghur | ug |
| German | german | | Persian | fa |
| Japanese | japan | | Urdu | ur |
| Korean | korean | | Serbian(latin) | rs_latin |
| Traditional Chinese | ch_tra | | Occitan | oc |
| Italian | it | | Marathi | mr |
| Spanish | es | | Nepali | ne |
| Portuguese | pt | | Serbian(cyrillic) | rs_cyrillic |
| Russian | ru | | Bulgarian | bg |
| Ukrainian | uk | | Estonian | et |
| Belarusian | be | | Irish | ga |
| Telugu | te | | Croatian | hr |
| Saudi Arabia | sa | | Hungarian | hu |
| Tamil | ta | | Indonesian | id |
| Afrikaans | af | | Icelandic | is |
| Azerbaijani | az | | Kurdish | ku |
| Bosnian | bs | | Lithuanian | lt |
| Czech | cs | | Latvian | lv |
| Welsh | cy | | Maori | mi |
| Danish | da | | Malay | ms |
| Maltese | mt | | Adyghe | ady |
| Dutch | nl | | Kabardian | kbd |
| Norwegian | no | | Avar | ava |
| Polish | pl | | Dargwa | dar |
| Romanian | ro | | Ingush | inh |
| Slovak | sk | | Lak | lbe |
| Slovenian | sl | | Lezghian | lez |
| Albanian | sq | | Tabassaran | tab |
| Swedish | sv | | Bihari | bh |
| Swahili | sw | | Maithili | mai |
| Tagalog | tl | | Angika | ang |
| Turkish | tr | | Bhojpuri | bho |
| Uzbek | uz | | Magahi | mah |
| Vietnamese | vi | | Nagpur | sck |
| Mongolian | mn | | Newari | new |
| Abaza | abq | | Goan Konkani | gom |

|

||||

|

||||
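The abbreviations in the table are the values passed to `--lang` on the command line, as in the `paddleocr --image_dir doc/imgs/japan_2.jpg --lang=japan` call earlier in this document. The sketch below maps a language name to its abbreviation and assembles that command. `LANG_ABBREVIATIONS` holds only a few pairs from the table, with the names spelled out for readability, and `build_command` is a hypothetical helper rather than part of the `paddleocr` package.

```python
# A handful of (language name -> abbreviation) pairs taken from the table.
LANG_ABBREVIATIONS = {
    "french": "fr",
    "german": "german",
    "japanese": "japan",
    "korean": "korean",
    "traditional chinese": "ch_tra",
}


def build_command(image_path, language):
    """Return the paddleocr command line (as an argv list) for a language."""
    key = language.strip().lower()
    if key not in LANG_ABBREVIATIONS:
        raise KeyError("no abbreviation listed for language: " + language)
    return ["paddleocr", "--image_dir", image_path,
            "--lang=" + LANG_ABBREVIATIONS[key]]


print(" ".join(build_command("doc/imgs/japan_2.jpg", "Japanese")))
```

Returning an argv list rather than a single string avoids quoting problems if the image path contains spaces.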

BIN doc/imgs_results/multi_lang/arabic_0.jpg (normal file). Binary file not shown. Size after: 21 KiB

BIN doc/imgs_results/multi_lang/img_12.jpg (normal file). Binary file not shown. Size after: 561 KiB

BIN doc/imgs_results/multi_lang/korean_0.jpg (normal file). Binary file not shown. Size after: 921 KiB

@@ -249,7 +249,7 @@ class ResNet(nn.Layer):
                             name=conv_name))
                     shortcut = True
                     self.block_list.append(bottleneck_block)
-                self.out_channels = num_filters[block]
+                self.out_channels = num_filters[block] * 4
         else:
             for block in range(len(depth)):
                 shortcut = False

@@ -218,6 +218,7 @@ class SRNLabelDecode(BaseRecLabelDecode):
                  **kwargs):
         super(SRNLabelDecode, self).__init__(character_dict_path,
                                              character_type, use_space_char)
+        self.max_text_length = kwargs.get('max_text_length', 25)

     def __call__(self, preds, label=None, *args, **kwargs):
         pred = preds['predict']
@@ -229,9 +230,9 @@ class SRNLabelDecode(BaseRecLabelDecode):
         preds_idx = np.argmax(pred, axis=1)
         preds_prob = np.max(pred, axis=1)

-        preds_idx = np.reshape(preds_idx, [-1, 25])
+        preds_idx = np.reshape(preds_idx, [-1, self.max_text_length])

-        preds_prob = np.reshape(preds_prob, [-1, 25])
+        preds_prob = np.reshape(preds_prob, [-1, self.max_text_length])

         text = self.decode(preds_idx, preds_prob)

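The hunks above replace the hard-coded row length 25 with a configurable `max_text_length` that defaults to 25. A minimal sketch of that pattern follows. It uses plain Python lists as a stand-in for `np.reshape(x, [-1, n])`, and `DecodeConfig` and `reshape_rows` are hypothetical names introduced only for illustration.

```python
def reshape_rows(flat, row_len):
    """Split a flat list into rows of row_len items.

    Stand-in for np.reshape(flat, [-1, row_len]); raises ValueError when
    the length is not a multiple of row_len, as a reshape would.
    """
    if row_len <= 0 or len(flat) % row_len != 0:
        raise ValueError(
            "length %d is not a multiple of %d" % (len(flat), row_len))
    return [flat[i:i + row_len] for i in range(0, len(flat), row_len)]


class DecodeConfig(object):
    """Hypothetical holder mirroring kwargs.get('max_text_length', 25)."""

    def __init__(self, **kwargs):
        self.max_text_length = kwargs.get('max_text_length', 25)


default_cfg = DecodeConfig()                   # falls back to 25
custom_cfg = DecodeConfig(max_text_length=4)   # value taken from config

flat_preds = list(range(8))  # two samples with four positions each
rows = reshape_rows(flat_preds, custom_cfg.max_text_length)
```

With the row length fixed at 25, the same eight predictions could not be reshaped at all, which is the kind of mismatch the configurable value avoids.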

@@ -121,7 +121,7 @@ def init_model(config, model, logger, optimizer=None, lr_scheduler=None):
     return best_model_dict


-def save_model(net,
+def save_model(model,
                optimizer,
                model_path,
                logger,
@@ -133,7 +133,7 @@ def save_model(net,
     """
     _mkdir_if_not_exist(model_path, logger)
     model_prefix = os.path.join(model_path, prefix)
-    paddle.save(net.state_dict(), model_prefix + '.pdparams')
+    paddle.save(model.state_dict(), model_prefix + '.pdparams')
     paddle.save(optimizer.state_dict(), model_prefix + '.pdopt')

     # save metric and config

@@ -53,17 +53,19 @@ def main():
     save_path = '{}/inference'.format(config['Global']['save_inference_dir'])

     if config['Architecture']['algorithm'] == "SRN":
+        max_text_length = config['Architecture']['Head']['max_text_length']
         other_shape = [
             paddle.static.InputSpec(
                 shape=[None, 1, 64, 256], dtype='float32'), [
                     paddle.static.InputSpec(
                         shape=[None, 256, 1],
                         dtype="int64"), paddle.static.InputSpec(
-                            shape=[None, 25, 1],
-                            dtype="int64"), paddle.static.InputSpec(
-                                shape=[None, 8, 25, 25], dtype="int64"),
+                            shape=[None, max_text_length, 1], dtype="int64"),
                     paddle.static.InputSpec(
-                        shape=[None, 8, 25, 25], dtype="int64")
+                        shape=[None, 8, max_text_length, max_text_length],
+                        dtype="int64"), paddle.static.InputSpec(
+                            shape=[None, 8, max_text_length, max_text_length],
+                            dtype="int64")
                 ]
         ]
         model = to_static(model, input_spec=other_shape)
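The export hunk above derives the SRN auxiliary input shapes from `max_text_length` instead of the literal 25. The shape arithmetic can be sketched without Paddle as below. `srn_input_shapes` is a hypothetical helper, the `num_heads` and `image_shape` parameter names are assumptions, and the values 8, 256, and `[1, 64, 256]` are copied from the hunk.

```python
def srn_input_shapes(max_text_length, num_heads=8, image_shape=(1, 64, 256)):
    """Return the nested shape lists used in the SRN export hunk.

    None marks the dynamic batch dimension. Only the shapes are built
    here; the real code wraps each one in paddle.static.InputSpec.
    """
    attention_shape = [None, num_heads, max_text_length, max_text_length]
    return [
        [None] + list(image_shape),          # input image
        [
            [None, 256, 1],                  # fixed-size input from the hunk
            [None, max_text_length, 1],      # one entry per text position
            list(attention_shape),           # first attention-shaped input
            list(attention_shape),           # second attention-shaped input
        ],
    ]


shapes_default = srn_input_shapes(25)
shapes_longer = srn_input_shapes(30)
```

With `max_text_length=25` the result matches the shapes that were previously hard-coded, so existing configurations keep exporting the same signature.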

@@ -18,6 +18,7 @@ from __future__ import print_function

 import os
 import sys
+import platform
 import yaml
 import time
 import shutil
@@ -159,6 +160,8 @@ def train(config,
     eval_batch_step = config['Global']['eval_batch_step']

     global_step = 0
+    if 'global_step' in pre_best_model_dict:
+        global_step = pre_best_model_dict['global_step']
     start_eval_step = 0
     if type(eval_batch_step) == list and len(eval_batch_step) >= 2:
         start_eval_step = eval_batch_step[0]
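The second hunk above restores `global_step` from `pre_best_model_dict` when the key is present and otherwise starts from 0. A minimal standalone sketch of that fallback is shown here. `resume_global_step` is a hypothetical helper name, and the dictionary contents are made-up illustration values.

```python
def resume_global_step(pre_best_model_dict):
    """Return the saved step counter, or 0 for a fresh run."""
    global_step = 0
    if 'global_step' in pre_best_model_dict:
        global_step = pre_best_model_dict['global_step']
    return global_step


fresh_run = resume_global_step({})                           # no checkpoint state
resumed_run = resume_global_step({'acc': 0.9, 'global_step': 1200})
```

Checkpoints written before this change carry no `global_step` key, so the membership test keeps them loadable.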

@@ -196,8 +199,12 @@ def train(config,
         train_reader_cost = 0.0
         batch_sum = 0
         batch_start = time.time()
-        for idx, batch in enumerate(train_dataloader()):
+        max_iter = len(train_dataloader) - 1 if platform.system(
+        ) == "Windows" else len(train_dataloader)
+        for idx, batch in enumerate(train_dataloader):
             train_reader_cost += time.time() - batch_start
+            if idx >= max_iter:
+                break
             lr = optimizer.get_lr()
             images = batch[0]
             if use_srn:
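The training-loop hunk above caps iteration at `len(train_dataloader) - 1` on Windows and at `len(train_dataloader)` elsewhere, then breaks once `idx` reaches that cap. The sketch below isolates the same logic. `compute_max_iter` and `iterate_capped` are hypothetical helpers, and the `system_name` parameter is an assumption added so the behaviour can be checked on any platform.

```python
import platform


def compute_max_iter(num_batches, system_name=None):
    """Mirror the hunk: one fewer iteration on Windows, all batches otherwise."""
    if system_name is None:
        system_name = platform.system()
    return num_batches - 1 if system_name == "Windows" else num_batches


def iterate_capped(batches, max_iter):
    """Consume batches in order, stopping once idx reaches max_iter."""
    seen = []
    for idx, batch in enumerate(batches):
        if idx >= max_iter:
            break
        seen.append(batch)
    return seen


batches = ['b0', 'b1', 'b2']
on_windows = iterate_capped(batches, compute_max_iter(len(batches), "Windows"))
on_linux = iterate_capped(batches, compute_max_iter(len(batches), "Linux"))
```

Computing the cap once before the loop keeps the per-batch check to a single integer comparison.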

@@ -285,7 +292,8 @@ def train(config,
                         is_best=True,
                         prefix='best_accuracy',
                         best_model_dict=best_model_dict,
-                        epoch=epoch)
+                        epoch=epoch,
+                        global_step=global_step)
                 best_str = 'best metric, {}'.format(', '.join([
                     '{}: {}'.format(k, v) for k, v in best_model_dict.items()
                 ]))
@@ -307,7 +315,8 @@ def train(config,
                 is_best=False,
                 prefix='latest',
                 best_model_dict=best_model_dict,
-                epoch=epoch)
+                epoch=epoch,
+                global_step=global_step)
         if dist.get_rank() == 0 and epoch > 0 and epoch % save_epoch_step == 0:
             save_model(
                 model,
@@ -317,7 +326,8 @@ def train(config,
                 is_best=False,
                 prefix='iter_epoch_{}'.format(epoch),
                 best_model_dict=best_model_dict,
-                epoch=epoch)
+                epoch=epoch,
+                global_step=global_step)
     best_str = 'best metric, {}'.format(', '.join(
         ['{}: {}'.format(k, v) for k, v in best_model_dict.items()]))
     logger.info(best_str)
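The three hunks above pass `global_step` to `save_model` alongside `epoch` and `best_model_dict`. How `save_model` persists those keyword arguments is not shown in this diff, so the following is only a sketch under the assumption that extra keyword arguments are serialized together as one state dictionary. `dump_states` and `load_states` are hypothetical helpers, and `pickle` is used purely for illustration.

```python
import pickle


def dump_states(**kwargs):
    """Serialize arbitrary training metadata passed as keyword arguments."""
    return pickle.dumps(dict(kwargs), protocol=2)


def load_states(blob):
    """Restore the metadata dictionary written by dump_states."""
    return pickle.loads(blob)


blob = dump_states(
    best_model_dict={'acc': 0.9},
    epoch=3,
    global_step=1200)
restored = load_states(blob)
```

Because the metadata travels as keyword arguments, adding `global_step` at the call sites needs no change to the function signature in this sketch.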

@@ -333,8 +343,10 @@ def eval(model, valid_dataloader, post_process_class, eval_class,
         total_frame = 0.0
         total_time = 0.0
         pbar = tqdm(total=len(valid_dataloader), desc='eval model:')
+        max_iter = len(valid_dataloader) - 1 if platform.system(
+        ) == "Windows" else len(valid_dataloader)
         for idx, batch in enumerate(valid_dataloader):
-            if idx >= len(valid_dataloader):
+            if idx >= max_iter:
                 break
             images = batch[0]
             start = time.time()
