merge upstream

commit

c11ec3ce5b

PPOCRLabel/libs

applications

光功率计数码管字符识别

configs

det

ch_ppocr_v2.0

deploy

doc

ppocr/data/imaug

|

|

@ -48,6 +48,7 @@ class Shape(object):

|

|||

|

||||

def __init__(self, label=None, line_color=None, difficult=False, key_cls="None", paintLabel=False):

|

||||

self.label = label

|

||||

self.idx = 0

|

||||

self.points = []

|

||||

self.fill = False

|

||||

self.selected = False

|

||||

|

|

|

|||

|

|

@ -72,6 +72,7 @@ PaddleOCR support a variety of cutting-edge algorithms related to OCR, and devel

|

|||

<img src="https://raw.githubusercontent.com/PaddlePaddle/PaddleOCR/dygraph/doc/joinus.PNG" width = "200" height = "200" />

|

||||

</div>

|

||||

<a name="Supported-Chinese-model-list"></a>

|

||||

|

||||

## PP-OCR Series Model List(Update on September 8th)

|

||||

|

||||

| Model introduction | Model name | Recommended scene | Detection model | Direction classifier | Recognition model |

|

||||

|

|

|

|||

|

|

@ -71,6 +71,8 @@ PaddleOCR旨在打造一套丰富、领先、且实用的OCR工具库,助力

|

|||

## 《动手学OCR》电子书

|

||||

- [《动手学OCR》电子书📚](./doc/doc_ch/ocr_book.md)

|

||||

|

||||

## 场景应用

|

||||

- PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR垂类应用,在PP-OCR、PP-Structure的通用能力基础之上,以notebook的形式展示利用场景数据微调、模型优化方法、数据增广等内容,为开发者快速落地OCR应用提供示范与启发。详情可查看[README](./applications)。

|

||||

|

||||

<a name="开源社区"></a>

|

||||

## 开源社区

|

||||

|

|

|

|||

|

|

@ -0,0 +1,652 @@

|

|||

# 基于PP-OCRv3的PCB字符识别

|

||||

|

||||

- [1. 项目介绍](#1-项目介绍)

|

||||

- [2. 安装说明](#2-安装说明)

|

||||

- [3. 数据准备](#3-数据准备)

|

||||

- [4. 文本检测](#4-文本检测)

|

||||

- [4.1 预训练模型直接评估](#41-预训练模型直接评估)

|

||||

- [4.2 预训练模型+验证集padding直接评估](#42-预训练模型验证集padding直接评估)

|

||||

- [4.3 预训练模型+fine-tune](#43-预训练模型fine-tune)

|

||||

- [5. 文本识别](#5-文本识别)

|

||||

- [5.1 预训练模型直接评估](#51-预训练模型直接评估)

|

||||

- [5.2 三种fine-tune方案](#52-三种fine-tune方案)

|

||||

- [6. 模型导出](#6-模型导出)

|

||||

- [7. 端对端评测](#7-端对端评测)

|

||||

- [8. Jetson部署](#8-Jetson部署)

|

||||

- [9. 总结](#9-总结)

|

||||

- [更多资源](#更多资源)

|

||||

|

||||

# 1. 项目介绍

|

||||

|

||||

印刷电路板(PCB)是电子产品中的核心器件,对于板件质量的测试与监控是生产中必不可少的环节。在一些场景中,通过PCB中信号灯颜色和文字组合可以定位PCB局部模块质量问题,PCB文字识别中存在如下难点:

|

||||

|

||||

- 裁剪出的PCB图片宽高比例较小

|

||||

- 文字区域整体面积也较小

|

||||

- 包含垂直、水平多种方向文本

|

||||

|

||||

针对本场景,PaddleOCR基于全新的PP-OCRv3通过合成数据、微调以及其他场景适配方法完成小字符文本识别任务,满足企业上线要求。PCB检测、识别效果如 **图1** 所示:

|

||||

|

||||

<div align=center><img src='https://ai-studio-static-online.cdn.bcebos.com/95d8e95bf1ab476987f2519c0f8f0c60a0cdc2c444804ed6ab08f2f7ab054880', width='500'></div>

|

||||

<div align=center>图1 PCB检测识别效果</div>

|

||||

|

||||

注:欢迎在AIStudio领取免费算力体验线上实训,项目链接: [基于PP-OCRv3实现PCB字符识别](https://aistudio.baidu.com/aistudio/projectdetail/4008973)

|

||||

|

||||

# 2. 安装说明

|

||||

|

||||

|

||||

下载PaddleOCR源码,安装依赖环境。

|

||||

|

||||

|

||||

```python

|

||||

# 如仍需安装or安装更新,可以执行以下步骤

|

||||

git clone https://github.com/PaddlePaddle/PaddleOCR.git

|

||||

# git clone https://gitee.com/PaddlePaddle/PaddleOCR

|

||||

```

|

||||

|

||||

|

||||

```python

|

||||

# 安装依赖包

|

||||

pip install -r /home/aistudio/PaddleOCR/requirements.txt

|

||||

```

|

||||

|

||||

# 3. 数据准备

|

||||

|

||||

我们通过图片合成工具生成 **图2** 所示的PCB图片,整图只有高25、宽150左右、文字区域高9、宽45左右,包含垂直和水平2种方向的文本:

|

||||

|

||||

<div align=center><img src="https://ai-studio-static-online.cdn.bcebos.com/bb7a345687814a3d83a29790f2a2b7d081495b3a920b43988c93da6039cad653" width="1000" ></div>

|

||||

<div align=center>图2 数据集示例</div>

|

||||

|

||||

暂时不开源生成的PCB数据集,但是通过更换背景,通过如下代码生成数据即可:

|

||||

|

||||

```

|

||||

cd gen_data

|

||||

python3 gen.py --num_img=10

|

||||

```

|

||||

|

||||

生成图片参数解释:

|

||||

|

||||

```

|

||||

num_img:生成图片数量

|

||||

font_min_size、font_max_size:字体最大、最小尺寸

|

||||

bg_path:文字区域背景存放路径

|

||||

det_bg_path:整图背景存放路径

|

||||

fonts_path:字体路径

|

||||

corpus_path:语料路径

|

||||

output_dir:生成图片存储路径

|

||||

```

|

||||

|

||||

这里生成 **100张** 相同尺寸和文本的图片,如 **图3** 所示,方便大家跑通实验。通过如下代码解压数据集:

|

||||

|

||||

<div align=center><img src="https://ai-studio-static-online.cdn.bcebos.com/3277b750159f4b68b2b58506bfec9005d49aeb5fb1d9411e83f96f9ff7eb66a5" width="1000" ></div>

|

||||

<div align=center>图3 案例提供数据集示例</div>

|

||||

|

||||

|

||||

```python

|

||||

tar xf ./data/data148165/dataset.tar -C ./

|

||||

```

|

||||

|

||||

在生成数据集的时需要生成检测和识别训练需求的格式:

|

||||

|

||||

|

||||

- **文本检测**

|

||||

|

||||

标注文件格式如下,中间用'\t'分隔:

|

||||

|

||||

```

|

||||

" 图像文件名 json.dumps编码的图像标注信息"

|

||||

ch4_test_images/img_61.jpg [{"transcription": "MASA", "points": [[310, 104], [416, 141], [418, 216], [312, 179]]}, {...}]

|

||||

```

|

||||

|

||||

json.dumps编码前的图像标注信息是包含多个字典的list,字典中的 `points` 表示文本框的四个点的坐标(x, y),从左上角的点开始顺时针排列。 `transcription` 表示当前文本框的文字,***当其内容为“###”时,表示该文本框无效,在训练时会跳过。***

|

||||

|

||||

- **文本识别**

|

||||

|

||||

标注文件的格式如下, txt文件中默认请将图片路径和图片标签用'\t'分割,如用其他方式分割将造成训练报错。

|

||||

|

||||

```

|

||||

" 图像文件名 图像标注信息 "

|

||||

|

||||

train_data/rec/train/word_001.jpg 简单可依赖

|

||||

train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

|

||||

...

|

||||

```

|

||||

|

||||

|

||||

# 4. 文本检测

|

||||

|

||||

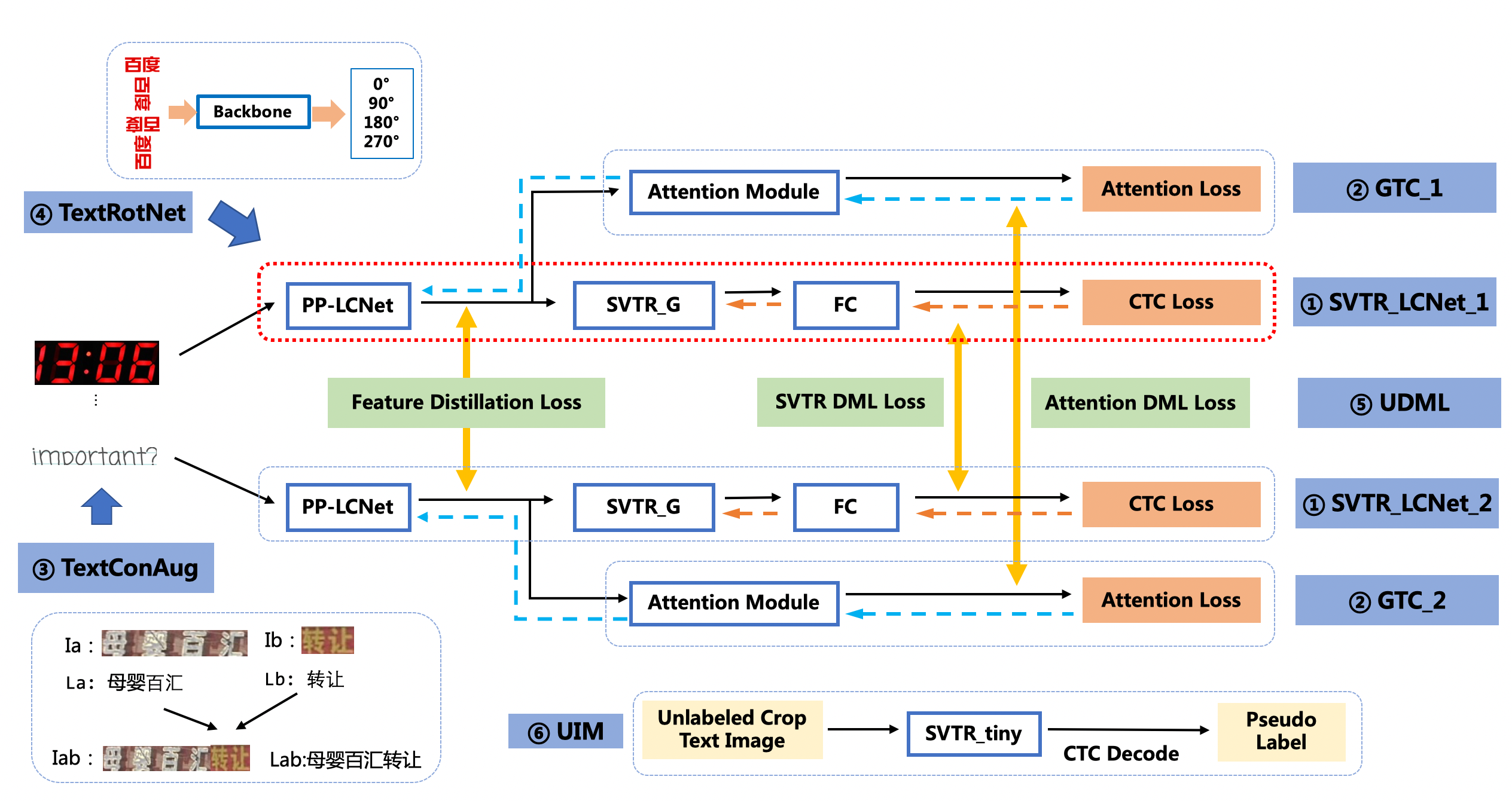

选用飞桨OCR开发套件[PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR)中的PP-OCRv3模型进行文本检测和识别。针对检测模型和识别模型,进行了共计9个方面的升级:

|

||||

|

||||

- PP-OCRv3检测模型对PP-OCRv2中的CML协同互学习文本检测蒸馏策略进行了升级,分别针对教师模型和学生模型进行进一步效果优化。其中,在对教师模型优化时,提出了大感受野的PAN结构LK-PAN和引入了DML蒸馏策略;在对学生模型优化时,提出了残差注意力机制的FPN结构RSE-FPN。

|

||||

|

||||

- PP-OCRv3的识别模块是基于文本识别算法SVTR优化。SVTR不再采用RNN结构,通过引入Transformers结构更加有效地挖掘文本行图像的上下文信息,从而提升文本识别能力。PP-OCRv3通过轻量级文本识别网络SVTR_LCNet、Attention损失指导CTC损失训练策略、挖掘文字上下文信息的数据增广策略TextConAug、TextRotNet自监督预训练模型、UDML联合互学习策略、UIM无标注数据挖掘方案,6个方面进行模型加速和效果提升。

|

||||

|

||||

更多细节请参考PP-OCRv3[技术报告](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md)。

|

||||

|

||||

|

||||

我们使用 **3种方案** 进行检测模型的训练、评估:

|

||||

- **PP-OCRv3英文超轻量检测预训练模型直接评估**

|

||||

- PP-OCRv3英文超轻量检测预训练模型 + **验证集padding**直接评估

|

||||

- PP-OCRv3英文超轻量检测预训练模型 + **fine-tune**

|

||||

|

||||

## **4.1 预训练模型直接评估**

|

||||

|

||||

我们首先通过PaddleOCR提供的预训练模型在验证集上进行评估,如果评估指标能满足效果,可以直接使用预训练模型,不再需要训练。

|

||||

|

||||

使用预训练模型直接评估步骤如下:

|

||||

|

||||

**1)下载预训练模型**

|

||||

|

||||

|

||||

PaddleOCR已经提供了PP-OCR系列模型,部分模型展示如下表所示:

|

||||

|

||||

| 模型简介 | 模型名称 | 推荐场景 | 检测模型 | 方向分类器 | 识别模型 |

|

||||

| ------------------------------------- | ----------------------- | --------------- | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

|

||||

| 中英文超轻量PP-OCRv3模型(16.2M) | ch_PP-OCRv3_xx | 移动端&服务器端 | [推理模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar) |

|

||||

| 英文超轻量PP-OCRv3模型(13.4M) | en_PP-OCRv3_xx | 移动端&服务器端 | [推理模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_distill_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_train.tar) |

|

||||

| 中英文超轻量PP-OCRv2模型(13.0M) | ch_PP-OCRv2_xx | 移动端&服务器端 | [推理模型](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_infer.tar) / [训练模型](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_train.tar) |

|

||||

| 中英文超轻量PP-OCR mobile模型(9.4M) | ch_ppocr_mobile_v2.0_xx | 移动端&服务器端 | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_pre.tar) |

|

||||

| 中英文通用PP-OCR server模型(143.4M) | ch_ppocr_server_v2.0_xx | 服务器端 | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [推理模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_infer.tar) / [预训练模型](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_pre.tar) |

|

||||

|

||||

更多模型下载(包括多语言),可以参[考PP-OCR系列模型下载](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/models_list.md)

|

||||

|

||||

这里我们使用PP-OCRv3英文超轻量检测模型,下载并解压预训练模型:

|

||||

|

||||

|

||||

|

||||

|

||||

```python

|

||||

# 如果更换其他模型,更新下载链接和解压指令就可以

|

||||

cd /home/aistudio/PaddleOCR

|

||||

mkdir pretrain_models

|

||||

cd pretrain_models

|

||||

# 下载英文预训练模型

|

||||

wget https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_distill_train.tar

|

||||

tar xf en_PP-OCRv3_det_distill_train.tar && rm -rf en_PP-OCRv3_det_distill_train.tar

|

||||

%cd ..

|

||||

```

|

||||

|

||||

**模型评估**

|

||||

|

||||

|

||||

首先修改配置文件`configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml`中的以下字段:

|

||||

```

|

||||

Eval.dataset.data_dir:指向验证集图片存放目录,'/home/aistudio/dataset'

|

||||

Eval.dataset.label_file_list:指向验证集标注文件,'/home/aistudio/dataset/det_gt_val.txt'

|

||||

Eval.dataset.transforms.DetResizeForTest: 尺寸

|

||||

limit_side_len: 48

|

||||

limit_type: 'min'

|

||||

```

|

||||

|

||||

然后在验证集上进行评估,具体代码如下:

|

||||

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR

|

||||

python tools/eval.py \

|

||||

-c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml \

|

||||

-o Global.checkpoints="./pretrain_models/en_PP-OCRv3_det_distill_train/best_accuracy"

|

||||

```

|

||||

|

||||

## **4.2 预训练模型+验证集padding直接评估**

|

||||

|

||||

考虑到PCB图片比较小,宽度只有25左右、高度只有140-170左右,我们在原图的基础上进行padding,再进行检测评估,padding前后效果对比如 **图4** 所示:

|

||||

|

||||

<div align=center><img src='https://ai-studio-static-online.cdn.bcebos.com/e61e6ba685534eda992cea30a63a9c461646040ffd0c4d208a5eebb85897dcf7' width='600'></div>

|

||||

<div align=center>图4 padding前后对比图</div>

|

||||

|

||||

将图片都padding到300*300大小,因为坐标信息发生了变化,我们同时要修改标注文件,在`/home/aistudio/dataset`目录里也提供了padding之后的图片,大家也可以尝试训练和评估:

|

||||

|

||||

同上,我们需要修改配置文件`configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml`中的以下字段:

|

||||

```

|

||||

Eval.dataset.data_dir:指向验证集图片存放目录,'/home/aistudio/dataset'

|

||||

Eval.dataset.label_file_list:指向验证集标注文件,/home/aistudio/dataset/det_gt_padding_val.txt

|

||||

Eval.dataset.transforms.DetResizeForTest: 尺寸

|

||||

limit_side_len: 1100

|

||||

limit_type: 'min'

|

||||

```

|

||||

|

||||

如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

|

||||

<div align="left">

|

||||

<img src="https://ai-studio-static-online.cdn.bcebos.com/dd721099bd50478f9d5fb13d8dd00fad69c22d6848244fd3a1d3980d7fefc63e" width = "150" height = "150" />

|

||||

</div>

|

||||

将下载或训练完成的模型放置在对应目录下即可完成模型评估。

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR

|

||||

python tools/eval.py \

|

||||

-c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml \

|

||||

-o Global.checkpoints="./pretrain_models/en_PP-OCRv3_det_distill_train/best_accuracy"

|

||||

```

|

||||

|

||||

## **4.3 预训练模型+fine-tune**

|

||||

|

||||

|

||||

基于预训练模型,在生成的1500图片上进行fine-tune训练和评估,其中train数据1200张,val数据300张,修改配置文件`configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml`中的以下字段:

|

||||

```

|

||||

Global.epoch_num: 这里设置为1,方便快速跑通,实际中根据数据量调整该值

|

||||

Global.save_model_dir:模型保存路径

|

||||

Global.pretrained_model:指向预训练模型路径,'./pretrain_models/en_PP-OCRv3_det_distill_train/student.pdparams'

|

||||

Optimizer.lr.learning_rate:调整学习率,本实验设置为0.0005

|

||||

Train.dataset.data_dir:指向训练集图片存放目录,'/home/aistudio/dataset'

|

||||

Train.dataset.label_file_list:指向训练集标注文件,'/home/aistudio/dataset/det_gt_train.txt'

|

||||

Train.dataset.transforms.EastRandomCropData.size:训练尺寸改为[480,64]

|

||||

Eval.dataset.data_dir:指向验证集图片存放目录,'/home/aistudio/dataset/'

|

||||

Eval.dataset.label_file_list:指向验证集标注文件,'/home/aistudio/dataset/det_gt_val.txt'

|

||||

Eval.dataset.transforms.DetResizeForTest:评估尺寸,添加如下参数

|

||||

limit_side_len: 64

|

||||

limit_type:'min'

|

||||

```

|

||||

执行下面命令启动训练:

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python tools/train.py \

|

||||

-c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml

|

||||

```

|

||||

|

||||

**模型评估**

|

||||

|

||||

|

||||

使用训练好的模型进行评估,更新模型路径`Global.checkpoints`:

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python3 tools/eval.py \

|

||||

-c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml \

|

||||

-o Global.checkpoints="./output/ch_PP-OCR_V3_det/latest"

|

||||

```

|

||||

|

||||

使用训练好的模型进行评估,指标如下所示:

|

||||

|

||||

|

||||

| 序号 | 方案 | hmean | 效果提升 | 实验分析 |

|

||||

| -------- | -------- | -------- | -------- | -------- |

|

||||

| 1 | PP-OCRv3英文超轻量检测预训练模型 | 64.64% | - | 提供的预训练模型具有泛化能力 |

|

||||

| 2 | PP-OCRv3英文超轻量检测预训练模型 + 验证集padding | 72.13% |+7.5% | padding可以提升尺寸较小图片的检测效果|

|

||||

| 3 | PP-OCRv3英文超轻量检测预训练模型 + fine-tune | 100% | +27.9% | fine-tune会提升垂类场景效果 |

|

||||

|

||||

|

||||

```

|

||||

注:上述实验结果均是在1500张图片(1200张训练集,300张测试集)上训练、评估的得到,AIstudio只提供了100张数据,所以指标有所差异属于正常,只要策略有效、规律相同即可。

|

||||

```

|

||||

|

||||

# 5. 文本识别

|

||||

|

||||

我们分别使用如下4种方案进行训练、评估:

|

||||

|

||||

- **方案1**:**PP-OCRv3中英文超轻量识别预训练模型直接评估**

|

||||

- **方案2**:PP-OCRv3中英文超轻量检测预训练模型 + **fine-tune**

|

||||

- **方案3**:PP-OCRv3中英文超轻量检测预训练模型 + fine-tune + **公开通用识别数据集**

|

||||

- **方案4**:PP-OCRv3中英文超轻量检测预训练模型 + fine-tune + **增加PCB图像数量**

|

||||

|

||||

|

||||

## **5.1 预训练模型直接评估**

|

||||

|

||||

同检测模型,我们首先使用PaddleOCR提供的识别预训练模型在PCB验证集上进行评估。

|

||||

|

||||

使用预训练模型直接评估步骤如下:

|

||||

|

||||

**1)下载预训练模型**

|

||||

|

||||

|

||||

我们使用PP-OCRv3中英文超轻量文本识别模型,下载并解压预训练模型:

|

||||

|

||||

|

||||

```python

|

||||

# 如果更换其他模型,更新下载链接和解压指令就可以

|

||||

cd /home/aistudio/PaddleOCR/pretrain_models/

|

||||

wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar

|

||||

tar xf ch_PP-OCRv3_rec_train.tar && rm -rf ch_PP-OCRv3_rec_train.tar

|

||||

cd ..

|

||||

```

|

||||

|

||||

**模型评估**

|

||||

|

||||

|

||||

首先修改配置文件`configs/det/ch_PP-OCRv3/ch_PP-OCRv2_rec_distillation.yml`中的以下字段:

|

||||

|

||||

```

|

||||

Metric.ignore_space: True:忽略空格

|

||||

Eval.dataset.data_dir:指向验证集图片存放目录,'/home/aistudio/dataset'

|

||||

Eval.dataset.label_file_list:指向验证集标注文件,'/home/aistudio/dataset/rec_gt_val.txt'

|

||||

```

|

||||

|

||||

我们使用下载的预训练模型进行评估:

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR

|

||||

python3 tools/eval.py \

|

||||

-c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml \

|

||||

-o Global.checkpoints=pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy

|

||||

|

||||

```

|

||||

|

||||

## **5.2 三种fine-tune方案**

|

||||

|

||||

方案2、3、4训练和评估方式是相同的,因此在我们了解每个技术方案之后,再具体看修改哪些参数是相同,哪些是不同的。

|

||||

|

||||

**方案介绍:**

|

||||

|

||||

1) **方案2**:预训练模型 + **fine-tune**

|

||||

|

||||

- 在预训练模型的基础上进行fine-tune,使用1500张PCB进行训练和评估,其中训练集1200张,验证集300张。

|

||||

|

||||

|

||||

2) **方案3**:预训练模型 + fine-tune + **公开通用识别数据集**

|

||||

|

||||

- 当识别数据比较少的情况,可以考虑添加公开通用识别数据集。在方案2的基础上,添加公开通用识别数据集,如lsvt、rctw等。

|

||||

|

||||

3)**方案4**:预训练模型 + fine-tune + **增加PCB图像数量**

|

||||

|

||||

- 如果能够获取足够多真实场景,我们可以通过增加数据量提升模型效果。在方案2的基础上,增加PCB的数量到2W张左右。

|

||||

|

||||

|

||||

**参数修改:**

|

||||

|

||||

接着我们看需要修改的参数,以上方案均需要修改配置文件`configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml`的参数,**修改一次即可**:

|

||||

|

||||

```

|

||||

Global.pretrained_model:指向预训练模型路径,'pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy'

|

||||

Optimizer.lr.values:学习率,本实验设置为0.0005

|

||||

Train.loader.batch_size_per_card: batch size,默认128,因为数据量小于128,因此我们设置为8,数据量大可以按默认的训练

|

||||

Eval.loader.batch_size_per_card: batch size,默认128,设置为4

|

||||

Metric.ignore_space: 忽略空格,本实验设置为True

|

||||

```

|

||||

|

||||

**更换不同的方案**每次需要修改的参数:

|

||||

```

|

||||

Global.epoch_num: 这里设置为1,方便快速跑通,实际中根据数据量调整该值

|

||||

Global.save_model_dir:指向模型保存路径

|

||||

Train.dataset.data_dir:指向训练集图片存放目录

|

||||

Train.dataset.label_file_list:指向训练集标注文件

|

||||

Eval.dataset.data_dir:指向验证集图片存放目录

|

||||

Eval.dataset.label_file_list:指向验证集标注文件

|

||||

```

|

||||

|

||||

同时**方案3**修改以下参数

|

||||

```

|

||||

Eval.dataset.label_file_list:添加公开通用识别数据标注文件

|

||||

Eval.dataset.ratio_list:数据和公开通用识别数据每次采样比例,按实际修改即可

|

||||

```

|

||||

如 **图5** 所示:

|

||||

<div align=center><img src='https://ai-studio-static-online.cdn.bcebos.com/0fa18b25819042d9bbf3397c3af0e21433b23d52f7a84b0a8681b8e6a308d433' wdith=''></div>

|

||||

<div align=center>图5 添加公开通用识别数据配置文件示例</div>

|

||||

|

||||

|

||||

我们提取Student模型的参数,在PCB数据集上进行fine-tune,可以参考如下代码:

|

||||

|

||||

|

||||

```python

|

||||

import paddle

|

||||

# 加载预训练模型

|

||||

all_params = paddle.load("./pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy.pdparams")

|

||||

# 查看权重参数的keys

|

||||

print(all_params.keys())

|

||||

# 学生模型的权重提取

|

||||

s_params = {key[len("student_model."):]: all_params[key] for key in all_params if "student_model." in key}

|

||||

# 查看学生模型权重参数的keys

|

||||

print(s_params.keys())

|

||||

# 保存

|

||||

paddle.save(s_params, "./pretrain_models/ch_PP-OCRv3_rec_train/student.pdparams")

|

||||

```

|

||||

|

||||

修改参数后,**每个方案**都执行如下命令启动训练:

|

||||

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python3 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml

|

||||

```

|

||||

|

||||

|

||||

使用训练好的模型进行评估,更新模型路径`Global.checkpoints`:

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python3 tools/eval.py \

|

||||

-c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml \

|

||||

-o Global.checkpoints=./output/rec_ppocr_v3/latest

|

||||

```

|

||||

|

||||

所有方案评估指标如下:

|

||||

|

||||

| 序号 | 方案 | acc | 效果提升 | 实验分析 |

|

||||

| -------- | -------- | -------- | -------- | -------- |

|

||||

| 1 | PP-OCRv3中英文超轻量识别预训练模型直接评估 | 46.67% | - | 提供的预训练模型具有泛化能力 |

|

||||

| 2 | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune | 42.02% |-4.6% | 在数据量不足的情况,反而比预训练模型效果低(也可以通过调整超参数再试试)|

|

||||

| 3 | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune + 公开通用识别数据集 | 77% | +30% | 在数据量不足的情况下,可以考虑补充公开数据训练 |

|

||||

| 4 | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune + 增加PCB图像数量 | 99.99% | +23% | 如果能获取更多数据量的情况,可以通过增加数据量提升效果 |

|

||||

|

||||

```

|

||||

注:上述实验结果均是在1500张图片(1200张训练集,300张测试集)、2W张图片、添加公开通用识别数据集上训练、评估的得到,AIstudio只提供了100张数据,所以指标有所差异属于正常,只要策略有效、规律相同即可。

|

||||

```

|

||||

|

||||

# 6. 模型导出

|

||||

|

||||

inference 模型(paddle.jit.save保存的模型) 一般是模型训练,把模型结构和模型参数保存在文件中的固化模型,多用于预测部署场景。 训练过程中保存的模型是checkpoints模型,保存的只有模型的参数,多用于恢复训练等。 与checkpoints模型相比,inference 模型会额外保存模型的结构信息,在预测部署、加速推理上性能优越,灵活方便,适合于实际系统集成。

|

||||

|

||||

|

||||

```python

|

||||

# 导出检测模型

|

||||

python3 tools/export_model.py \

|

||||

-c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml \

|

||||

-o Global.pretrained_model="./output/ch_PP-OCR_V3_det/latest" \

|

||||

Global.save_inference_dir="./inference_model/ch_PP-OCR_V3_det/"

|

||||

```

|

||||

|

||||

因为上述模型只训练了1个epoch,因此我们使用训练最优的模型进行预测,存储在`/home/aistudio/best_models/`目录下,解压即可

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/best_models/

|

||||

wget https://paddleocr.bj.bcebos.com/fanliku/PCB/det_ppocr_v3_en_infer_PCB.tar

|

||||

tar xf /home/aistudio/best_models/det_ppocr_v3_en_infer_PCB.tar -C /home/aistudio/PaddleOCR/pretrain_models/

|

||||

```

|

||||

|

||||

|

||||

```python

|

||||

# 检测模型inference模型预测

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python3 tools/infer/predict_det.py \

|

||||

--image_dir="/home/aistudio/dataset/imgs/0000.jpg" \

|

||||

--det_algorithm="DB" \

|

||||

--det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB/" \

|

||||

--det_limit_side_len=48 \

|

||||

--det_limit_type='min' \

|

||||

--det_db_unclip_ratio=2.5 \

|

||||

--use_gpu=True

|

||||

```

|

||||

|

||||

结果存储在`inference_results`目录下,检测如下图所示:

|

||||

<div align=center><img src='https://ai-studio-static-online.cdn.bcebos.com/5939ae15a1f0445aaeec15c68107dbd897740a1ddd284bf8b583bb6242099157' width=''></div>

|

||||

<div align=center>图6 检测结果</div>

|

||||

|

||||

|

||||

同理,导出识别模型并进行推理。

|

||||

|

||||

```python

|

||||

# 导出识别模型

|

||||

python3 tools/export_model.py \

|

||||

-c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml \

|

||||

-o Global.pretrained_model="./output/rec_ppocr_v3/latest" \

|

||||

Global.save_inference_dir="./inference_model/rec_ppocr_v3/"

|

||||

|

||||

```

|

||||

|

||||

同检测模型,识别模型也只训练了1个epoch,因此我们使用训练最优的模型进行预测,存储在`/home/aistudio/best_models/`目录下,解压即可

|

||||

|

||||

|

||||

```python

|

||||

cd /home/aistudio/best_models/

|

||||

wget https://paddleocr.bj.bcebos.com/fanliku/PCB/rec_ppocr_v3_ch_infer_PCB.tar

|

||||

tar xf /home/aistudio/best_models/rec_ppocr_v3_ch_infer_PCB.tar -C /home/aistudio/PaddleOCR/pretrain_models/

|

||||

```

|

||||

|

||||

|

||||

```python

|

||||

# 识别模型inference模型预测

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python3 tools/infer/predict_rec.py \

|

||||

--image_dir="../test_imgs/0000_rec.jpg" \

|

||||

--rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \

|

||||

--rec_image_shape="3, 48, 320" \

|

||||

--use_space_char=False \

|

||||

--use_gpu=True

|

||||

```

|

||||

|

||||

```python

|

||||

# 检测+识别模型inference模型预测

|

||||

cd /home/aistudio/PaddleOCR/

|

||||

python3 tools/infer/predict_system.py \

|

||||

--image_dir="../test_imgs/0000.jpg" \

|

||||

--det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB" \

|

||||

--det_limit_side_len=48 \

|

||||

--det_limit_type='min' \

|

||||

--det_db_unclip_ratio=2.5 \

|

||||

--rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \

|

||||

--rec_image_shape="3, 48, 320" \

|

||||

--draw_img_save_dir=./det_rec_infer/ \

|

||||

--use_space_char=False \

|

||||

--use_angle_cls=False \

|

||||

--use_gpu=True

|

||||

|

||||

```

|

||||

|

||||

端到端预测结果存储在`det_res_infer`文件夹内,结果如下图所示:

|

||||

<div align=center><img src='https://ai-studio-static-online.cdn.bcebos.com/c570f343c29846c792da56ebaca16c50708477514dd048cea8bef37ffa85d03f'></div>

|

||||

<div align=center>图7 检测+识别结果</div>

|

||||

|

||||

# 7. 端对端评测

|

||||

|

||||

接下来介绍文本检测+文本识别的端对端指标评估方式。主要分为三步:

|

||||

|

||||

1)首先运行`tools/infer/predict_system.py`,将`image_dir`改为需要评估的数据文件家,得到保存的结果:

|

||||

|

||||

|

||||

```python

|

||||

# 检测+识别模型inference模型预测

|

||||

python3 tools/infer/predict_system.py \

|

||||

--image_dir="../dataset/imgs/" \

|

||||

--det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB" \

|

||||

--det_limit_side_len=48 \

|

||||

--det_limit_type='min' \

|

||||

--det_db_unclip_ratio=2.5 \

|

||||

--rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \

|

||||

--rec_image_shape="3, 48, 320" \

|

||||

--draw_img_save_dir=./det_rec_infer/ \

|

||||

--use_space_char=False \

|

||||

--use_angle_cls=False \

|

||||

--use_gpu=True

|

||||

```

|

||||

|

||||

得到保存结果,文本检测识别可视化图保存在`det_rec_infer/`目录下,预测结果保存在`det_rec_infer/system_results.txt`中,格式如下:`0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`

|

||||

|

||||

2)然后将步骤一保存的数据转换为端对端评测需要的数据格式: 修改 `tools/end2end/convert_ppocr_label.py`中的代码,convert_label函数中设置输入标签路径,Mode,保存标签路径等,对预测数据的GTlabel和预测结果的label格式进行转换。

|

||||

```

|

||||

ppocr_label_gt = "/home/aistudio/dataset/det_gt_val.txt"

|

||||

convert_label(ppocr_label_gt, "gt", "./save_gt_label/")

|

||||

|

||||

ppocr_label_gt = "/home/aistudio/PaddleOCR/PCB_result/det_rec_infer/system_results.txt"

|

||||

convert_label(ppocr_label_gt, "pred", "./save_PPOCRV2_infer/")

|

||||

```

|

||||

|

||||

运行`convert_ppocr_label.py`:

|

||||

|

||||

|

||||

```python

|

||||

python3 tools/end2end/convert_ppocr_label.py

|

||||

```

|

||||

|

||||

得到如下结果:

|

||||

```

|

||||

├── ./save_gt_label/

|

||||

├── ./save_PPOCRV2_infer/

|

||||

```

|

||||

|

||||

3) 最后,执行端对端评测,运行`tools/end2end/eval_end2end.py`计算端对端指标,运行方式如下:

|

||||

|

||||

|

||||

```python

|

||||

pip install editdistance

|

||||

python3 tools/end2end/eval_end2end.py ./save_gt_label/ ./save_PPOCRV2_infer/

|

||||

```

|

||||

|

||||

使用`预训练模型+fine-tune'检测模型`、`预训练模型 + 2W张PCB图片funetune`识别模型,在300张PCB图片上评估得到如下结果,fmeasure为主要关注的指标:

|

||||

<div align=center><img src='https://ai-studio-static-online.cdn.bcebos.com/37206ea48a244212ae7a821d50d1fd51faf3d7fe97ac47a29f04dfcbb377b019', width='700'></div>

|

||||

<div align=center>图8 端到端评估指标</div>

|

||||

|

||||

```

|

||||

注: 使用上述命令不能跑出该结果,因为数据集不相同,可以更换为自己训练好的模型,按上述流程运行

|

||||

```

|

||||

|

||||

# 8. Jetson部署

|

||||

|

||||

我们只需要以下步骤就可以完成Jetson nano部署模型,简单易操作:

|

||||

|

||||

**1、在Jetson nano开发版上环境准备:**

|

||||

|

||||

* 安装PaddlePaddle

|

||||

|

||||

* 下载PaddleOCR并安装依赖

|

||||

|

||||

**2、执行预测**

|

||||

|

||||

* 将推理模型下载到jetson

|

||||

|

||||

* 执行检测、识别、串联预测即可

|

||||

|

||||

详细[参考流程](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/deploy/Jetson/readme_ch.md)。

|

||||

|

||||

# 9. 总结

|

||||

|

||||

检测实验分别使用PP-OCRv3预训练模型在PCB数据集上进行了直接评估、验证集padding、 fine-tune 3种方案,识别实验分别使用PP-OCRv3预训练模型在PCB数据集上进行了直接评估、 fine-tune、添加公开通用识别数据集、增加PCB图片数量4种方案,指标对比如下:

|

||||

|

||||

* 检测

|

||||

|

||||

|

||||

| 序号 | 方案 | hmean | 效果提升 | 实验分析 |

|

||||

| ---- | -------------------------------------------------------- | ------ | -------- | ------------------------------------- |

|

||||

| 1 | PP-OCRv3英文超轻量检测预训练模型直接评估 | 64.64% | - | 提供的预训练模型具有泛化能力 |

|

||||

| 2 | PP-OCRv3英文超轻量检测预训练模型 + 验证集padding直接评估 | 72.13% | +7.5% | padding可以提升尺寸较小图片的检测效果 |

|

||||

| 3 | PP-OCRv3英文超轻量检测预训练模型 + fine-tune | 100% | +27.9% | fine-tune会提升垂类场景效果 |

|

||||

|

||||

* 识别

|

||||

|

||||

| 序号 | 方案 | acc | 效果提升 | 实验分析 |

|

||||

| ---- | ------------------------------------------------------------ | ------ | -------- | ------------------------------------------------------------ |

|

||||

| 1 | PP-OCRv3中英文超轻量识别预训练模型直接评估 | 46.67% | - | 提供的预训练模型具有泛化能力 |

|

||||

| 2 | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune | 42.02% | -4.6% | 在数据量不足的情况,反而比预训练模型效果低(也可以通过调整超参数再试试) |

|

||||

| 3 | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune + 公开通用识别数据集 | 77% | +30% | 在数据量不足的情况下,可以考虑补充公开数据训练 |

|

||||

| 4 | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune + 增加PCB图像数量 | 99.99% | +23% | 如果能获取更多数据量的情况,可以通过增加数据量提升效果 |

|

||||

|

||||

* 端到端

|

||||

|

||||

| det | rec | fmeasure |

|

||||

| --------------------------------------------- | ------------------------------------------------------------ | -------- |

|

||||

| PP-OCRv3英文超轻量检测预训练模型 + fine-tune | PP-OCRv3中英文超轻量识别预训练模型 + fine-tune + 增加PCB图像数量 | 93.3% |

|

||||

|

||||

*结论*

|

||||

|

||||

PP-OCRv3的检测模型在未经过fine-tune的情况下,在PCB数据集上也有64.64%的精度,说明具有泛化能力。验证集padding之后,精度提升7.5%,在图片尺寸较小的情况,我们可以通过padding的方式提升检测效果。经过 fine-tune 后能够极大的提升检测效果,精度达到100%。

|

||||

|

||||

PP-OCRv3的识别模型方案1和方案2对比可以发现,当数据量不足的情况,预训练模型精度可能比fine-tune效果还要高,所以我们可以先尝试预训练模型直接评估。如果在数据量不足的情况下想进一步提升模型效果,可以通过添加公开通用识别数据集,识别效果提升30%,非常有效。最后如果我们能够采集足够多的真实场景数据集,可以通过增加数据量提升模型效果,精度达到99.99%。

|

||||

|

||||

# 更多资源

|

||||

|

||||

- 更多深度学习知识、产业案例、面试宝典等,请参考:[awesome-DeepLearning](https://github.com/paddlepaddle/awesome-DeepLearning)

|

||||

|

||||

- 更多PaddleOCR使用教程,请参考:[PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR/tree/dygraph)

|

||||

|

||||

|

||||

- 飞桨框架相关资料,请参考:[飞桨深度学习平台](https://www.paddlepaddle.org.cn/?fr=paddleEdu_aistudio)

|

||||

|

||||

# 参考

|

||||

|

||||

* 数据生成代码库:https://github.com/zcswdt/Color_OCR_image_generator

|

||||

Binary file not shown.

|

After Width: | Height: | Size: 2.0 KiB |

|

|

@ -0,0 +1,30 @@

|

|||

5ZQ

|

||||

I4UL

|

||||

PWL

|

||||

SNOG

|

||||

ZL02

|

||||

1C30

|

||||

O3H

|

||||

YHRS

|

||||

N03S

|

||||

1U5Y

|

||||

JTK

|

||||

EN4F

|

||||

YKJ

|

||||

DWNH

|

||||

R42W

|

||||

X0V

|

||||

4OF5

|

||||

08AM

|

||||

Y93S

|

||||

GWE2

|

||||

0KR

|

||||

9U2A

|

||||

DBQ

|

||||

Y6J

|

||||

ROZ

|

||||

K06

|

||||

KIEY

|

||||

NZQJ

|

||||

UN1B

|

||||

6X4

|

||||

Binary file not shown.

|

After Width: | Height: | Size: 145 B |

Binary file not shown.

|

After Width: | Height: | Size: 141 B |

|

|

@ -0,0 +1,261 @@

|

|||

# copyright (c) 2020 PaddlePaddle Authors. All Rights Reserve.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

"""

|

||||

This code is refer from:

|

||||

https://github.com/zcswdt/Color_OCR_image_generator

|

||||

"""

|

||||

import os

|

||||

import random

|

||||

from PIL import Image, ImageDraw, ImageFont

|

||||

import json

|

||||

import argparse

|

||||

|

||||

|

||||

def get_char_lines(txt_root_path):

|

||||

"""

|

||||

desc:get corpus line

|

||||

"""

|

||||

txt_files = os.listdir(txt_root_path)

|

||||

char_lines = []

|

||||

for txt in txt_files:

|

||||

f = open(os.path.join(txt_root_path, txt), mode='r', encoding='utf-8')

|

||||

lines = f.readlines()

|

||||

f.close()

|

||||

for line in lines:

|

||||

char_lines.append(line.strip())

|

||||

return char_lines

|

||||

|

||||

|

||||

def get_horizontal_text_picture(image_file, chars, fonts_list, cf):

|

||||

"""

|

||||

desc:gen horizontal text picture

|

||||

"""

|

||||

img = Image.open(image_file)

|

||||

if img.mode != 'RGB':

|

||||

img = img.convert('RGB')

|

||||

img_w, img_h = img.size

|

||||

|

||||

# random choice font

|

||||

font_path = random.choice(fonts_list)

|

||||

# random choice font size

|

||||

font_size = random.randint(cf.font_min_size, cf.font_max_size)

|

||||

font = ImageFont.truetype(font_path, font_size)

|

||||

|

||||

ch_w = []

|

||||

ch_h = []

|

||||

for ch in chars:

|

||||

wt, ht = font.getsize(ch)

|

||||

ch_w.append(wt)

|

||||

ch_h.append(ht)

|

||||

f_w = sum(ch_w)

|

||||

f_h = max(ch_h)

|

||||

|

||||

# add space

|

||||

char_space_width = max(ch_w)

|

||||

f_w += (char_space_width * (len(chars) - 1))

|

||||

|

||||

x1 = random.randint(0, img_w - f_w)

|

||||

y1 = random.randint(0, img_h - f_h)

|

||||

x2 = x1 + f_w

|

||||

y2 = y1 + f_h

|

||||

|

||||

crop_y1 = y1

|

||||

crop_x1 = x1

|

||||

crop_y2 = y2

|

||||

crop_x2 = x2

|

||||

|

||||

best_color = (0, 0, 0)

|

||||

draw = ImageDraw.Draw(img)

|

||||

for i, ch in enumerate(chars):

|

||||

draw.text((x1, y1), ch, best_color, font=font)

|

||||

x1 += (ch_w[i] + char_space_width)

|

||||

crop_img = img.crop((crop_x1, crop_y1, crop_x2, crop_y2))

|

||||

return crop_img, chars

|

||||

|

||||

|

||||

def get_vertical_text_picture(image_file, chars, fonts_list, cf):

|

||||

"""

|

||||

desc:gen vertical text picture

|

||||

"""

|

||||

img = Image.open(image_file)

|

||||

if img.mode != 'RGB':

|

||||

img = img.convert('RGB')

|

||||

img_w, img_h = img.size

|

||||

# random choice font

|

||||

font_path = random.choice(fonts_list)

|

||||

# random choice font size

|

||||

font_size = random.randint(cf.font_min_size, cf.font_max_size)

|

||||

font = ImageFont.truetype(font_path, font_size)

|

||||

|

||||

ch_w = []

|

||||

ch_h = []

|

||||

for ch in chars:

|

||||

wt, ht = font.getsize(ch)

|

||||

ch_w.append(wt)

|

||||

ch_h.append(ht)

|

||||

f_w = max(ch_w)

|

||||

f_h = sum(ch_h)

|

||||

|

||||

x1 = random.randint(0, img_w - f_w)

|

||||

y1 = random.randint(0, img_h - f_h)

|

||||

x2 = x1 + f_w

|

||||

y2 = y1 + f_h

|

||||

|

||||

crop_y1 = y1

|

||||

crop_x1 = x1

|

||||

crop_y2 = y2

|

||||

crop_x2 = x2

|

||||

|

||||

best_color = (0, 0, 0)

|

||||

draw = ImageDraw.Draw(img)

|

||||

i = 0

|

||||

for ch in chars:

|

||||

draw.text((x1, y1), ch, best_color, font=font)

|

||||

y1 = y1 + ch_h[i]

|

||||

i = i + 1

|

||||

crop_img = img.crop((crop_x1, crop_y1, crop_x2, crop_y2))

|

||||

crop_img = crop_img.transpose(Image.ROTATE_90)

|

||||

return crop_img, chars

|

||||

|

||||

|

||||

def get_fonts(fonts_path):

|

||||

"""

|

||||

desc: get all fonts

|

||||

"""

|

||||

font_files = os.listdir(fonts_path)

|

||||

fonts_list=[]

|

||||

for font_file in font_files:

|

||||

font_path=os.path.join(fonts_path, font_file)

|

||||

fonts_list.append(font_path)

|

||||

return fonts_list

|

||||

|

||||

if __name__ == '__main__':

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument('--num_img', type=int, default=30, help="Number of images to generate")

|

||||

parser.add_argument('--font_min_size', type=int, default=11)

|

||||

parser.add_argument('--font_max_size', type=int, default=12,

|

||||

help="Help adjust the size of the generated text and the size of the picture")

|

||||

parser.add_argument('--bg_path', type=str, default='./background',

|

||||

help='The generated text pictures will be pasted onto the pictures of this folder')

|

||||

parser.add_argument('--det_bg_path', type=str, default='./det_background',

|

||||

help='The generated text pictures will use the pictures of this folder as the background')

|

||||

parser.add_argument('--fonts_path', type=str, default='../../StyleText/fonts',

|

||||

help='The font used to generate the picture')

|

||||

parser.add_argument('--corpus_path', type=str, default='./corpus',

|

||||

help='The corpus used to generate the text picture')

|

||||

parser.add_argument('--output_dir', type=str, default='./output/', help='Images save dir')

|

||||

|

||||

|

||||

cf = parser.parse_args()

|

||||

# save path

|

||||

if not os.path.exists(cf.output_dir):

|

||||

os.mkdir(cf.output_dir)

|

||||

|

||||

# get corpus

|

||||

txt_root_path = cf.corpus_path

|

||||

char_lines = get_char_lines(txt_root_path=txt_root_path)

|

||||

|

||||

# get all fonts

|

||||

fonts_path = cf.fonts_path

|

||||

fonts_list = get_fonts(fonts_path)

|

||||

|

||||

# rec bg

|

||||

img_root_path = cf.bg_path

|

||||

imnames=os.listdir(img_root_path)

|

||||

|

||||

# det bg

|

||||

det_bg_path = cf.det_bg_path

|

||||

bg_pics = os.listdir(det_bg_path)

|

||||

|

||||

# OCR det files

|

||||

det_val_file = open(cf.output_dir + 'det_gt_val.txt', 'w', encoding='utf-8')

|

||||

det_train_file = open(cf.output_dir + 'det_gt_train.txt', 'w', encoding='utf-8')

|

||||

# det imgs

|

||||

det_save_dir = 'imgs/'

|

||||

if not os.path.exists(cf.output_dir + det_save_dir):

|

||||

os.mkdir(cf.output_dir + det_save_dir)

|

||||

det_val_save_dir = 'imgs_val/'

|

||||

if not os.path.exists(cf.output_dir + det_val_save_dir):

|

||||

os.mkdir(cf.output_dir + det_val_save_dir)

|

||||

|

||||

# OCR rec files

|

||||

rec_val_file = open(cf.output_dir + 'rec_gt_val.txt', 'w', encoding='utf-8')

|

||||

rec_train_file = open(cf.output_dir + 'rec_gt_train.txt', 'w', encoding='utf-8')

|

||||

# rec imgs

|

||||

rec_save_dir = 'rec_imgs/'

|

||||

if not os.path.exists(cf.output_dir + rec_save_dir):

|

||||

os.mkdir(cf.output_dir + rec_save_dir)

|

||||

rec_val_save_dir = 'rec_imgs_val/'

|

||||

if not os.path.exists(cf.output_dir + rec_val_save_dir):

|

||||

os.mkdir(cf.output_dir + rec_val_save_dir)

|

||||

|

||||

|

||||

val_ratio = cf.num_img * 0.2 # val dataset ratio

|

||||

|

||||

print('start generating...')

|

||||

for i in range(0, cf.num_img):

|

||||

imname = random.choice(imnames)

|

||||

img_path = os.path.join(img_root_path, imname)

|

||||

|

||||

rnd = random.random()

|

||||

# gen horizontal text picture

|

||||

if rnd < 0.5:

|

||||

gen_img, chars = get_horizontal_text_picture(img_path, char_lines[i], fonts_list, cf)

|

||||

ori_w, ori_h = gen_img.size

|

||||

gen_img = gen_img.crop((0, 3, ori_w, ori_h))

|

||||

# gen vertical text picture

|

||||

else:

|

||||

gen_img, chars = get_vertical_text_picture(img_path, char_lines[i], fonts_list, cf)

|

||||

ori_w, ori_h = gen_img.size

|

||||

gen_img = gen_img.crop((3, 0, ori_w, ori_h))

|

||||

|

||||

ori_w, ori_h = gen_img.size

|

||||

|

||||

# rec imgs

|

||||

save_img_name = str(i).zfill(4) + '.jpg'

|

||||

if i < val_ratio:

|

||||

save_dir = os.path.join(rec_val_save_dir, save_img_name)

|

||||

line = save_dir + '\t' + char_lines[i] + '\n'

|

||||

rec_val_file.write(line)

|

||||

else:

|

||||

save_dir = os.path.join(rec_save_dir, save_img_name)

|

||||

line = save_dir + '\t' + char_lines[i] + '\n'

|

||||

rec_train_file.write(line)

|

||||

gen_img.save(cf.output_dir + save_dir, quality = 95, subsampling=0)

|

||||

|

||||

# det img

|

||||

# random choice bg

|

||||

bg_pic = random.sample(bg_pics, 1)[0]

|

||||

det_img = Image.open(os.path.join(det_bg_path, bg_pic))

|

||||

# the PCB position is fixed, modify it according to your own scenario

|

||||

if bg_pic == '1.png':

|

||||

x1 = 38

|

||||

y1 = 3

|

||||

else:

|

||||

x1 = 34

|

||||

y1 = 1

|

||||

|

||||

det_img.paste(gen_img, (x1, y1))

|

||||

# text pos

|

||||

chars_pos = [[x1, y1], [x1 + ori_w, y1], [x1 + ori_w, y1 + ori_h], [x1, y1 + ori_h]]

|

||||

label = [{"transcription":char_lines[i], "points":chars_pos}]

|

||||

if i < val_ratio:

|

||||

save_dir = os.path.join(det_val_save_dir, save_img_name)

|

||||

det_val_file.write(save_dir + '\t' + json.dumps(

|

||||

label, ensure_ascii=False) + '\n')

|

||||

else:

|

||||

save_dir = os.path.join(det_save_dir, save_img_name)

|

||||

det_train_file.write(save_dir + '\t' + json.dumps(

|

||||

label, ensure_ascii=False) + '\n')

|

||||

det_img.save(cf.output_dir + save_dir, quality = 95, subsampling=0)

|

||||

|

|

@ -0,0 +1,41 @@

|

|||

# 场景应用

|

||||

|

||||

PaddleOCR场景应用覆盖通用,制造、金融、交通行业的主要OCR垂类应用,在PP-OCR、PP-Structure的通用能力基础之上,以notebook的形式展示利用场景数据微调、模型优化方法、数据增广等内容,为开发者快速落地OCR应用提供示范与启发。

|

||||

|

||||

> 如需下载全部垂类模型,可以扫描下方二维码,关注公众号填写问卷后,加入PaddleOCR官方交流群获取20G OCR学习大礼包(内含《动手学OCR》电子书、课程回放视频、前沿论文等重磅资料)

|

||||

|

||||

<div align="center">

|

||||

<img src="https://ai-studio-static-online.cdn.bcebos.com/dd721099bd50478f9d5fb13d8dd00fad69c22d6848244fd3a1d3980d7fefc63e" width = "150" height = "150" />

|

||||

</div>

|

||||

|

||||

|

||||

> 如果您是企业开发者且未在下述场景中找到合适的方案,可以填写[OCR应用合作调研问卷](https://paddle.wjx.cn/vj/QwF7GKw.aspx),免费与官方团队展开不同层次的合作,包括但不限于问题抽象、确定技术方案、项目答疑、共同研发等。如果您已经使用PaddleOCR落地项目,也可以填写此问卷,与飞桨平台共同宣传推广,提升企业技术品宣。期待您的提交!

|

||||

|

||||

## 通用

|

||||

|

||||

| 类别 | 亮点 | 类别 | 亮点 |

|

||||

| ------------------------------------------------- | -------- | ---------- | ------------ |

|

||||

| [高精度中文识别模型SVTR](./高精度中文识别模型.md) | 新增模型 | 手写体识别 | 新增字形支持 |

|

||||

|

||||

## 制造

|

||||

|

||||

| 类别 | 亮点 | 类别 | 亮点 |

|

||||

| ------------------------------------------------------------ | ------------------------------ | ------------------------------------------- | -------------------- |

|

||||

| [数码管识别](./光功率计数码管字符识别/光功率计数码管字符识别.md) | 数码管数据合成、漏识别调优 | 电表识别 | 大分辨率图像检测调优 |

|

||||

| [液晶屏读数识别](./液晶屏读数识别.md) | 检测模型蒸馏、Serving部署 | [PCB文字识别](./PCB字符识别/PCB字符识别.md) | 小尺寸文本检测与识别 |

|

||||

| [包装生产日期](./包装生产日期识别.md) | 点阵字符合成、过曝过暗文字识别 | 液晶屏缺陷检测 | 非文字字符识别 |

|

||||

|

||||

## 金融

|

||||

|

||||

| 类别 | 亮点 | 类别 | 亮点 |

|

||||

| ------------------------------ | ------------------------ | ------------ | --------------------- |

|

||||

| [表单VQA](./多模态表单识别.md) | 多模态通用表单结构化提取 | 通用卡证识别 | 通用结构化提取 |

|

||||

| 增值税发票 | 尽请期待 | 身份证识别 | 结构化提取、图像阴影 |

|

||||

| 印章检测与识别 | 端到端弯曲文本识别 | 合同比对 | 密集文本检测、NLP串联 |

|

||||

|

||||

## 交通

|

||||

|

||||

| 类别 | 亮点 | 类别 | 亮点 |

|

||||

| ------------------------------- | ------------------------------ | ---------- | -------- |

|

||||

| [车牌识别](./轻量级车牌识别.md) | 多角度图像、轻量模型、端侧部署 | 快递单识别 | 尽请期待 |

|

||||

| 驾驶证/行驶证识别 | 尽请期待 | | |

|

||||

|

|

@ -0,0 +1,43 @@

|

|||

46.39

|

||||

40.08

|

||||

89.52

|

||||

-71.93

|

||||

23.19

|

||||

-81.02

|

||||

-34.09

|

||||

05.87

|

||||

-67.80

|

||||

-51.56

|

||||

-34.58

|

||||

37.91

|

||||

56.98

|

||||

29.01

|

||||

-90.13

|

||||

35.55

|

||||

66.07

|

||||

-90.35

|

||||

-50.93

|

||||

42.42

|

||||

21.40

|

||||

-30.99

|

||||

-71.78

|

||||

25.60

|

||||

-48.69

|

||||

-72.28

|

||||

-17.55

|

||||

-99.93

|

||||

-47.35

|

||||

-64.89

|

||||

-31.28

|

||||

-90.01

|

||||

05.17

|

||||

30.91

|

||||

30.56

|

||||

-06.90

|

||||

79.05

|

||||

67.74

|

||||

-32.31

|

||||

94.22

|

||||

28.75

|

||||

51.03

|

||||

-58.96

|

||||

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,467 @@

|

|||

# 光功率计数码管字符识别

|

||||

|

||||

本案例将使用OCR技术自动识别光功率计显示屏文字,通过本章您可以掌握:

|

||||

|

||||

- PaddleOCR快速使用

|

||||

- 数据合成方法

|

||||

- 数据挖掘方法

|

||||

- 基于现有数据微调

|

||||

|

||||

## 1. 背景介绍

|

||||

|

||||

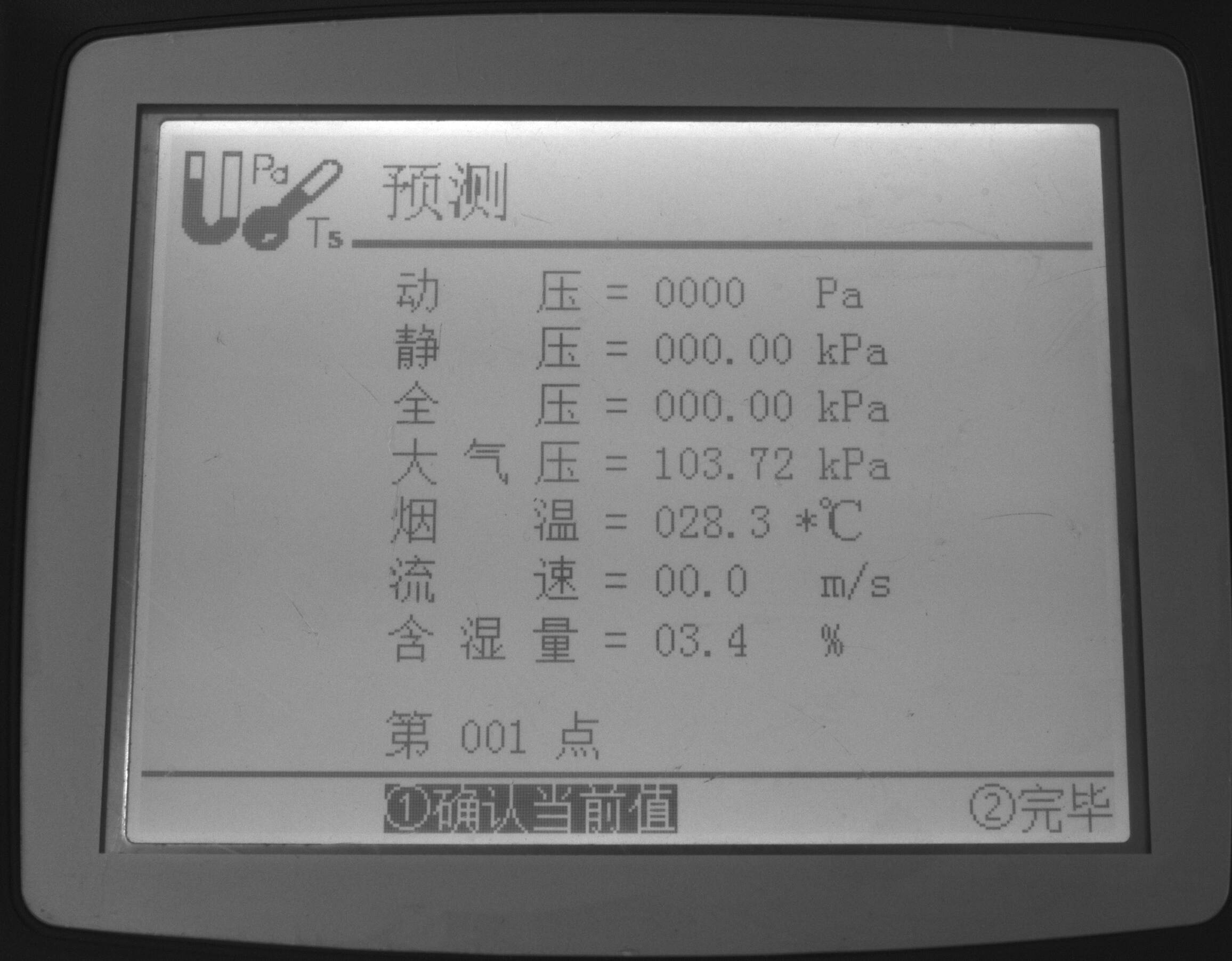

光功率计(optical power meter )是指用于测量绝对光功率或通过一段光纤的光功率相对损耗的仪器。在光纤系统中,测量光功率是最基本的,非常像电子学中的万用表;在光纤测量中,光功率计是重负荷常用表。

|

||||

|

||||

<img src="https://bkimg.cdn.bcebos.com/pic/a08b87d6277f9e2f999f5e3e1c30e924b899f35a?x-bce-process=image/watermark,image_d2F0ZXIvYmFpa2U5Mg==,g_7,xp_5,yp_5/format,f_auto" width="400">

|

||||

|

||||

目前光功率计缺少将数据直接输出的功能,需要人工读数。这一项工作单调重复,如果可以使用机器替代人工,将节约大量成本。针对上述问题,希望通过摄像头拍照->智能读数的方式高效地完成此任务。

|

||||

|

||||

为实现智能读数,通常会采取文本检测+文本识别的方案:

|

||||

|

||||

第一步,使用文本检测模型定位出光功率计中的数字部分;

|

||||

|

||||

第二步,使用文本识别模型获得准确的数字和单位信息。

|

||||

|

||||

本项目主要介绍如何完成第二步文本识别部分,包括:真实评估集的建立、训练数据的合成、基于 PP-OCRv3 和 SVTR_Tiny 两个模型进行训练,以及评估和推理。

|

||||

|

||||

本项目难点如下:

|

||||

|

||||

- 光功率计数码管字符数据较少,难以获取。

|

||||

- 数码管中小数点占像素较少,容易漏识别。

|

||||

|

||||

针对以上问题, 本例选用 PP-OCRv3 和 SVTR_Tiny 两个高精度模型训练,同时提供了真实数据挖掘案例和数据合成案例。基于 PP-OCRv3 模型,在构建的真实评估集上精度从 52% 提升至 72%,SVTR_Tiny 模型精度可达到 78.9%。

|

||||

|

||||

aistudio项目链接: [光功率计数码管字符识别](https://aistudio.baidu.com/aistudio/projectdetail/4049044?contributionType=1)

|

||||

|

||||

## 2. PaddleOCR 快速使用

|

||||

|

||||

PaddleOCR 旨在打造一套丰富、领先、且实用的OCR工具库,助力开发者训练出更好的模型,并应用落地。

|

||||

|

||||

|

||||

|

||||

|

||||

官方提供了适用于通用场景的高精轻量模型,首先使用官方提供的 PP-OCRv3 模型预测图片,验证下当前模型在光功率计场景上的效果。

|

||||

|

||||

- 准备环境

|

||||

|

||||

```

|

||||

python3 -m pip install -U pip

|

||||

python3 -m pip install paddleocr

|

||||

```

|

||||

|

||||

|

||||

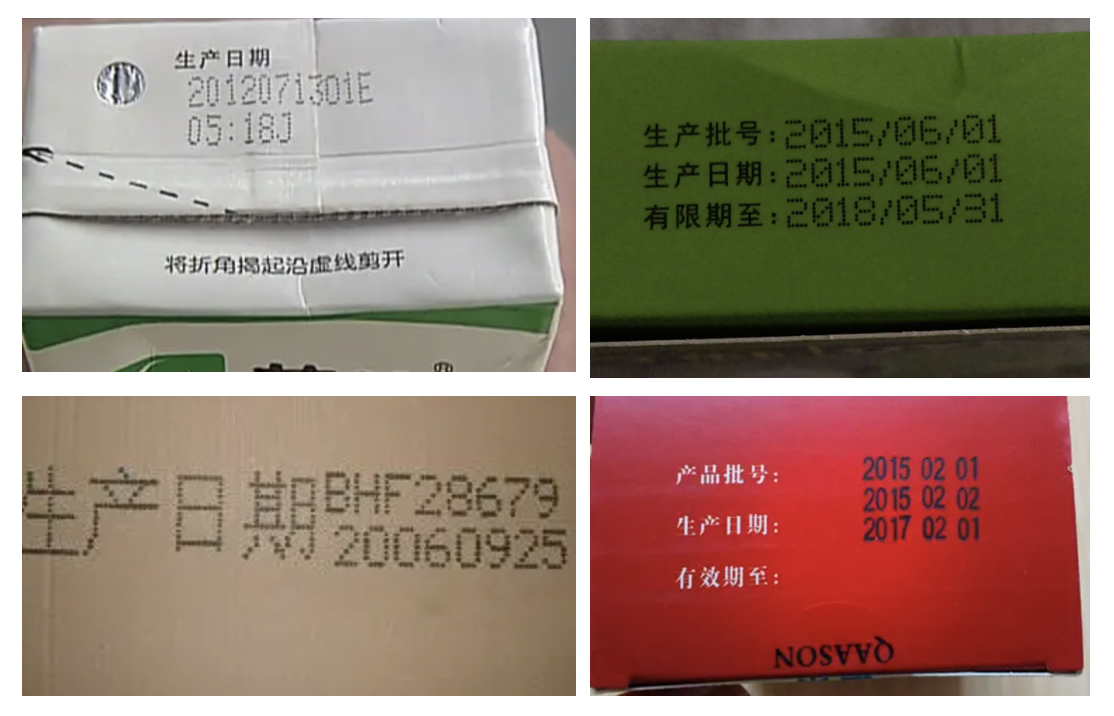

- 测试效果

|

||||

|

||||

测试图:

|

||||

|

||||

|

||||

|

||||

|

||||

```

|

||||

paddleocr --lang=ch --det=Fase --image_dir=data

|

||||

```

|

||||

|

||||

得到如下测试结果:

|

||||

|

||||

```

|

||||

('.7000', 0.6885431408882141)

|

||||

```

|

||||

|

||||

发现数字识别较准,然而对负号和小数点识别不准确。 由于PP-OCRv3的训练数据大多为通用场景数据,在特定的场景上效果可能不够好。因此需要基于场景数据进行微调。

|

||||

|

||||

下面就主要介绍如何在光功率计(数码管)场景上微调训练。

|

||||

|

||||

|

||||

## 3. 开始训练

|

||||

|

||||

### 3.1 数据准备

|

||||

|

||||

特定的工业场景往往很难获取开源的真实数据集,光功率计也是如此。在实际工业场景中,可以通过摄像头采集的方法收集大量真实数据,本例中重点介绍数据合成方法和真实数据挖掘方法,如何利用有限的数据优化模型精度。

|

||||

|

||||

数据集分为两个部分:合成数据,真实数据, 其中合成数据由 text_renderer 工具批量生成得到, 真实数据通过爬虫等方式在百度图片中搜索并使用 PPOCRLabel 标注得到。

|

||||

|

||||

|

||||

- 合成数据

|

||||

|

||||

本例中数据合成工具使用的是 [text_renderer](https://github.com/Sanster/text_renderer), 该工具可以合成用于文本识别训练的文本行数据:

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

```

|

||||

export https_proxy=http://172.19.57.45:3128

|

||||

git clone https://github.com/oh-my-ocr/text_renderer

|

||||

```

|

||||

|

||||

```

|

||||

import os

|

||||

python3 setup.py develop

|

||||

python3 -m pip install -r docker/requirements.txt

|

||||

python3 main.py \

|

||||

--config example_data/example.py \

|

||||

--dataset img \

|

||||

--num_processes 2 \

|

||||

--log_period 10

|

||||

```

|

||||

|

||||

给定字体和语料,就可以合成较为丰富样式的文本行数据。 光功率计识别场景,目标是正确识别数码管文本,因此需要收集部分数码管字体,训练语料,用于合成文本识别数据。

|

||||

|

||||

将收集好的语料存放在 example_data 路径下:

|

||||

|

||||

```

|

||||

ln -s ./fonts/DS* text_renderer/example_data/font/

|

||||

ln -s ./corpus/digital.txt text_renderer/example_data/text/

|

||||

```

|

||||

|

||||

修改 text_renderer/example_data/font_list/font_list.txt ,选择需要的字体开始合成:

|

||||

|

||||

```

|

||||

python3 main.py \

|

||||

--config example_data/digital_example.py \

|

||||

--dataset img \

|

||||

--num_processes 2 \

|

||||

--log_period 10

|

||||

```

|

||||

|

||||

合成图片会被存在目录 text_renderer/example_data/digital/chn_data 下

|

||||

|

||||

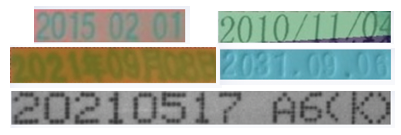

查看合成的数据样例:

|

||||

|

||||

|

||||

|

||||

|

||||

- 真实数据挖掘

|

||||

|

||||

模型训练需要使用真实数据作为评价指标,否则很容易过拟合到简单的合成数据中。没有开源数据的情况下,可以利用部分无标注数据+标注工具获得真实数据。

|

||||

|

||||

|

||||

1. 数据搜集

|

||||

|

||||

使用[爬虫工具](https://github.com/Joeclinton1/google-images-download.git)获得无标注数据

|

||||

|

||||

2. [PPOCRLabel](https://github.com/PaddlePaddle/PaddleOCR/tree/release/2.5/PPOCRLabel) 完成半自动标注

|

||||

|

||||

PPOCRLabel是一款适用于OCR领域的半自动化图形标注工具,内置PP-OCR模型对数据自动标注和重新识别。使用Python3和PyQT5编写,支持矩形框标注、表格标注、不规则文本标注、关键信息标注模式,导出格式可直接用于PaddleOCR检测和识别模型的训练。

|

||||

|

||||

|

||||

|

||||

|

||||

收集完数据后就可以进行分配了,验证集中一般都是真实数据,训练集中包含合成数据+真实数据。本例中标注了155张图片,其中训练集和验证集的数目为100和55。

|

||||

|

||||

|

||||

最终 `data` 文件夹应包含以下几部分:

|

||||

|

||||

```

|

||||

|-data

|

||||

|- synth_train.txt

|

||||

|- real_train.txt

|

||||

|- real_eval.txt

|

||||

|- synthetic_data

|

||||

|- word_001.png

|

||||

|- word_002.jpg

|

||||

|- word_003.jpg

|

||||

| ...

|

||||

|- real_data

|

||||

|- word_001.png

|

||||

|- word_002.jpg

|

||||

|- word_003.jpg

|

||||

| ...

|

||||

...

|

||||

```

|

||||

|

||||

### 3.2 模型选择

|

||||

|

||||

本案例提供了2种文本识别模型:PP-OCRv3 识别模型 和 SVTR_Tiny:

|

||||

|

||||

[PP-OCRv3 识别模型](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md):PP-OCRv3的识别模块是基于文本识别算法SVTR优化。SVTR不再采用RNN结构,通过引入Transformers结构更加有效地挖掘文本行图像的上下文信息,从而提升文本识别能力。并进行了一系列结构改进加速模型预测。

|

||||

|

||||

[SVTR_Tiny](https://arxiv.org/abs/2205.00159):SVTR提出了一种用于场景文本识别的单视觉模型,该模型在patch-wise image tokenization框架内,完全摒弃了序列建模,在精度具有竞争力的前提下,模型参数量更少,速度更快。

|

||||

|

||||

以上两个策略在自建中文数据集上的精度和速度对比如下:

|

||||

|

||||

| ID | 策略 | 模型大小 | 精度 | 预测耗时(CPU + MKLDNN)|

|

||||

|-----|-----|--------|----| --- |

|

||||

| 01 | PP-OCRv2 | 8M | 74.8% | 8.54ms |

|

||||

| 02 | SVTR_Tiny | 21M | 80.1% | 97ms |

|

||||

| 03 | SVTR_LCNet(h32) | 12M | 71.9% | 6.6ms |

|

||||

| 04 | SVTR_LCNet(h48) | 12M | 73.98% | 7.6ms |

|

||||

| 05 | + GTC | 12M | 75.8% | 7.6ms |

|

||||

| 06 | + TextConAug | 12M | 76.3% | 7.6ms |

|

||||

| 07 | + TextRotNet | 12M | 76.9% | 7.6ms |

|

||||

| 08 | + UDML | 12M | 78.4% | 7.6ms |

|

||||

| 09 | + UIM | 12M | 79.4% | 7.6ms |

|

||||

|

||||

|

||||

### 3.3 开始训练

|

||||

|

||||

首先下载 PaddleOCR 代码库

|

||||

|

||||

```

|

||||

git clone -b release/2.5 https://github.com/PaddlePaddle/PaddleOCR.git

|

||||

```

|

||||

|

||||

PaddleOCR提供了训练脚本、评估脚本和预测脚本,本节将以 PP-OCRv3 中文识别模型为例:

|

||||

|

||||

**Step1:下载预训练模型**

|

||||

|

||||

首先下载 pretrain model,您可以下载训练好的模型在自定义数据上进行finetune

|

||||

|

||||

```

|

||||

cd PaddleOCR/

|

||||

# 下载PP-OCRv3 中文预训练模型

|

||||

wget -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar

|

||||

# 解压模型参数

|

||||

cd pretrain_models

|

||||

tar -xf ch_PP-OCRv3_rec_train.tar && rm -rf ch_PP-OCRv3_rec_train.tar

|

||||

```

|

||||

|

||||

**Step2:自定义字典文件**

|

||||

|

||||

接下来需要提供一个字典({word_dict_name}.txt),使模型在训练时,可以将所有出现的字符映射为字典的索引。

|

||||

|

||||

因此字典需要包含所有希望被正确识别的字符,{word_dict_name}.txt需要写成如下格式,并以 `utf-8` 编码格式保存:

|

||||

|

||||

```

|

||||

0

|

||||

1

|

||||

2

|

||||

3

|

||||

4

|

||||

5

|

||||

6

|

||||

7

|

||||

8

|

||||

9

|

||||

-

|

||||

.

|

||||

```

|

||||

|

||||

word_dict.txt 每行有一个单字,将字符与数字索引映射在一起,“3.14” 将被映射成 [3, 11, 1, 4]

|

||||

|

||||

* 内置字典

|

||||

|

||||

PaddleOCR内置了一部分字典,可以按需使用。

|

||||

|

||||

`ppocr/utils/ppocr_keys_v1.txt` 是一个包含6623个字符的中文字典

|

||||

|

||||

`ppocr/utils/ic15_dict.txt` 是一个包含36个字符的英文字典

|

||||

|

||||

* 自定义字典

|

||||

|

||||

内置字典面向通用场景,具体的工业场景中,可能需要识别特殊字符,或者只需识别某几个字符,此时自定义字典会更提升模型精度。例如在光功率计场景中,需要识别数字和单位。

|

||||

|

||||

遍历真实数据标签中的字符,制作字典`digital_dict.txt`如下所示:

|

||||

|

||||

```

|

||||

-

|

||||

.

|

||||

0

|

||||

1

|

||||

2

|

||||

3

|

||||

4

|

||||

5

|

||||

6

|

||||

7

|

||||

8

|

||||

9

|

||||

B

|

||||

E

|

||||

F

|

||||

H

|

||||

L

|

||||

N

|

||||

T

|

||||

W

|

||||

d

|

||||

k

|

||||

m

|

||||

n

|

||||

o

|

||||

z

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

**Step3:修改配置文件**

|

||||

|

||||

为了更好的使用预训练模型,训练推荐使用[ch_PP-OCRv3_rec_distillation.yml](../../configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml)配置文件,并参考下列说明修改配置文件:

|

||||

|

||||

以 `ch_PP-OCRv3_rec_distillation.yml` 为例:

|

||||

```

|

||||

Global:

|

||||

...

|

||||

# 添加自定义字典,如修改字典请将路径指向新字典

|

||||

character_dict_path: ppocr/utils/dict/digital_dict.txt

|

||||

...

|

||||

# 识别空格

|

||||

use_space_char: True

|

||||

|

||||

|

||||

Optimizer:

|

||||

...

|

||||

# 添加学习率衰减策略

|

||||

lr:

|

||||

name: Cosine

|

||||

learning_rate: 0.001

|

||||

...

|

||||

|

||||

...

|

||||

|

||||

Train:

|

||||

dataset:

|

||||

# 数据集格式,支持LMDBDataSet以及SimpleDataSet

|

||||

name: SimpleDataSet

|

||||

# 数据集路径

|

||||

data_dir: ./data/

|

||||

# 训练集标签文件

|

||||

label_file_list:

|

||||

- ./train_data/digital_img/digital_train.txt #11w

|

||||

- ./train_data/digital_img/real_train.txt #100

|

||||

- ./train_data/digital_img/dbm_img/dbm.txt #3w

|

||||

ratio_list:

|

||||

- 0.3

|

||||

- 1.0

|

||||

- 1.0

|

||||

transforms:

|

||||

...

|

||||

- RecResizeImg:

|

||||

# 修改 image_shape 以适应长文本

|

||||

image_shape: [3, 48, 320]

|

||||

...

|

||||

loader:

|

||||

...

|

||||

# 单卡训练的batch_size

|

||||

batch_size_per_card: 256

|

||||

...

|

||||

|

||||

Eval:

|

||||

dataset:

|

||||

# 数据集格式,支持LMDBDataSet以及SimpleDataSet

|

||||

name: SimpleDataSet

|

||||

# 数据集路径

|

||||

data_dir: ./data

|

||||

# 验证集标签文件

|

||||

label_file_list:

|

||||

- ./train_data/digital_img/real_val.txt

|

||||

transforms:

|

||||

...

|

||||

- RecResizeImg:

|

||||

# 修改 image_shape 以适应长文本

|

||||

image_shape: [3, 48, 320]

|

||||

...

|

||||

loader:

|

||||

# 单卡验证的batch_size

|

||||

batch_size_per_card: 256

|

||||

...

|

||||

```

|

||||

**注意,训练/预测/评估时的配置文件请务必与训练一致。**

|

||||

|

||||

**Step4:启动训练**

|

||||

|

||||

*如果您安装的是cpu版本,请将配置文件中的 `use_gpu` 字段修改为false*

|

||||

|

||||

```

|

||||

# GPU训练 支持单卡,多卡训练

|

||||

# 训练数码管数据 训练日志会自动保存为 "{save_model_dir}" 下的train.log

|

||||

|

||||

#单卡训练(训练周期长,不建议)

|

||||

python3 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=./pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy

|

||||

|

||||

#多卡训练,通过--gpus参数指定卡号

|

||||

python3 -m paddle.distributed.launch --gpus '0,1,2,3' tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=./pretrain_models/en_PP-OCRv3_rec_train/best_accuracy

|

||||

```

|

||||

|

||||

|

||||

PaddleOCR支持训练和评估交替进行, 可以在 `configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml` 中修改 `eval_batch_step` 设置评估频率,默认每500个iter评估一次。评估过程中默认将最佳acc模型,保存为 `output/ch_PP-OCRv3_rec_distill/best_accuracy` 。

|

||||

|

||||

如果验证集很大,测试将会比较耗时,建议减少评估次数,或训练完再进行评估。

|

||||

|

||||

### SVTR_Tiny 训练

|

||||

|

||||

SVTR_Tiny 训练步骤与上面一致,SVTR支持的配置和模型训练权重可以参考[算法介绍文档](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/algorithm_rec_svtr.md)

|

||||

|

||||

**Step1:下载预训练模型**

|

||||

|

||||

```

|

||||

# 下载 SVTR_Tiny 中文识别预训练模型和配置文件

|

||||

wget https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/rec_svtr_tiny_none_ctc_ch_train.tar

|

||||

# 解压模型参数

|

||||

tar -xf rec_svtr_tiny_none_ctc_ch_train.tar && rm -rf rec_svtr_tiny_none_ctc_ch_train.tar

|

||||

```

|

||||

**Step2:自定义字典文件**

|

||||

|

||||

字典依然使用自定义的 digital_dict.txt

|

||||

|

||||

**Step3:修改配置文件**

|

||||

|

||||

配置文件中对应修改字典路径和数据路径

|

||||

|

||||

**Step4:启动训练**

|

||||

|

||||

```

|

||||

## 单卡训练

|

||||

python tools/train.py -c rec_svtr_tiny_none_ctc_ch_train/rec_svtr_tiny_6local_6global_stn_ch.yml \

|

||||

-o Global.pretrained_model=./rec_svtr_tiny_none_ctc_ch_train/best_accuracy

|

||||

```

|

||||

|

||||

### 3.4 验证效果

|

||||

|

||||

如需获取已训练模型,请扫码填写问卷,加入PaddleOCR官方交流群获取全部OCR垂类模型下载链接、《动手学OCR》电子书等全套OCR学习资料🎁

|

||||

<div align="left">

|

||||

<img src="https://ai-studio-static-online.cdn.bcebos.com/dd721099bd50478f9d5fb13d8dd00fad69c22d6848244fd3a1d3980d7fefc63e" width = "150" height = "150" />

|

||||

</div>

|

||||

将下载或训练完成的模型放置在对应目录下即可完成模型推理

|

||||

|

||||

* 指标评估

|

||||

|

||||

训练中模型参数默认保存在`Global.save_model_dir`目录下。在评估指标时,需要设置`Global.checkpoints`指向保存的参数文件。评估数据集可以通过 `configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml` 修改Eval中的 `label_file_path` 设置。

|

||||

|

||||

```

|

||||

# GPU 评估, Global.checkpoints 为待测权重

|

||||

python3 -m paddle.distributed.launch --gpus '0' tools/eval.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.checkpoints={path/to/weights}/best_accuracy

|

||||

```

|

||||

|

||||

* 测试识别效果

|

||||

|

||||

使用 PaddleOCR 训练好的模型,可以通过以下脚本进行快速预测。

|

||||

|

||||

默认预测图片存储在 `infer_img` 里,通过 `-o Global.checkpoints` 加载训练好的参数文件:

|

||||

|

||||

根据配置文件中设置的 `save_model_dir` 和 `save_epoch_step` 字段,会有以下几种参数被保存下来:

|

||||

|

||||

```

|

||||

output/rec/

|

||||

├── best_accuracy.pdopt

|

||||

├── best_accuracy.pdparams

|

||||

├── best_accuracy.states

|

||||

├── config.yml

|

||||

├── iter_epoch_3.pdopt

|

||||

├── iter_epoch_3.pdparams

|

||||

├── iter_epoch_3.states

|

||||