67 KiB

comments

| comments |

|---|

| true |

General OCR Pipeline Usage Guide

1. OCR Pipeline Introduction

OCR (Optical Character Recognition) is a technology that converts text in images into editable text. It is widely used in document digitization, information extraction, and data processing. OCR can recognize printed text, handwritten text, and even certain types of fonts and symbols.

The General OCR Pipeline is designed to solve text recognition tasks by extracting text information from images and outputting it in text format. This pipeline integrates the industry-renowned PP-OCRv3 and PP-OCRv4 end-to-end OCR systems, supporting recognition for over 80 languages. Additionally, it includes functionalities for image orientation correction and distortion correction. Based on this pipeline, millisecond-level accurate text prediction can be achieved on CPUs, covering various scenarios such as general, manufacturing, finance, and transportation. The pipeline also offers flexible service-oriented deployment options, supporting calls in multiple programming languages across various hardware platforms. Furthermore, it provides secondary development capabilities, allowing you to fine-tune models on your own datasets, with trained models seamlessly integrable.

The General OCR Pipeline consists of the following 5 modules. Each module can be independently trained and inferred, and includes multiple models. For detailed information, click the corresponding module to view its documentation.

- Document Image Orientation Classification Module (Optional)

- Text Image Unwarping Module (Optional)

- Text Line Orientation Classification Module (Optional)

- Text Detection Module

- Text Recognition Module

In this pipeline, you can select models based on the benchmark test data provided below.

Document Image Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Acc (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-LCNet_x1_0_doc_ori | Inference Model/Training Model | 99.06 | 2.31 / 0.43 | 3.37 / 1.27 | 7 | Document image classification model based on PP-LCNet_x1_0, with four categories: 0°, 90°, 180°, and 270°. |

Text Image Unwar'p Module (Optional):

| Model | Model Download Link | CER | Model Size (MB) | Description |

|---|---|---|---|---|

| UVDoc | Inference Model/Training Model | 0.179 | 30.3 | High-precision Text Image Unwarping model. |

Text Detection Module:

| Model | Model Download Link | Detection Hmean (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-OCRv5_server_det | Inference Model/Training Model | 83.8 | 89.55 / 70.19 | 371.65 / 371.65 | 84.3 | PP-OCRv5 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |

| PP-OCRv5_mobile_det | Inference Model/Training Model | 79.0 | 8.79 / 3.13 | 51.00 / 28.58 | 4.7 | PP-OCRv5 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |

| PP-OCRv4_server_det | Inference Model/Training Model | 69.2 | 83.34 / 80.91 | 442.58 / 442.58 | 109 | PP-OCRv4 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |

| PP-OCRv4_mobile_det | Inference Model/Training Model | 63.8 | 8.79 / 3.13 | 51.00 / 28.58 | 4.7 | PP-OCRv4 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |

Text Recognition Module:

| Model | Download Links | Avg Accuracy(%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-OCRv5_server_rec | Inference Model/Training Model | 86.38 | - | - | 205 | PP-OCRv5_server_rec is a next-generation text recognition model designed to efficiently and accurately support Simplified Chinese, Traditional Chinese, English, and Japanese, as well as complex scenarios like handwriting, vertical text, pinyin, and rare characters. It balances recognition performance with inference speed and robustness, providing reliable support for document understanding across diverse scenarios. |

| PP-OCRv5_mobile_rec | Inference Model/Training Model | 81.29 | - | - | 128 | PP-OCRv5_mobile_rec is a next-generation lightweight text recognition model optimized for efficiency and accuracy across Simplified Chinese, Traditional Chinese, English, and Japanese, including complex scenarios like handwriting and vertical text. It delivers robust performance while maintaining fast inference speeds. |

| PP-OCRv4_server_rec_doc | Inference Model/Training Model | 86.58 | 6.65 / 2.38 | 32.92 / 32.92 | 181 | PP-OCRv4_server_rec_doc is trained on a hybrid dataset of Chinese document data and PP-OCR training data, enhancing recognition for Traditional Chinese, Japanese, and special characters. It supports 15,000+ characters and improves both document-specific and general text recognition. |

| PP-OCRv4_mobile_rec | Inference Model/Training Model | 83.28 | 4.82 / 1.20 | 16.74 / 4.64 | 88 | PP-OCRv4's lightweight recognition model, optimized for fast inference on edge devices and various hardware platforms. |

| PP-OCRv4_server_rec | Inference Model/Training Model | 85.19 | 6.58 / 2.43 | 33.17 / 33.17 | 151 | PP-OCRv4's server-side model, delivering high accuracy for deployment on various servers. |

| en_PP-OCRv4_mobile_rec | Inference Model/Training Model | 70.39 | 4.81 / 0.75 | 16.10 / 5.31 | 66 | An ultra-lightweight English recognition model based on PP-OCRv4, supporting English and numeric characters. |

❗ The above table highlights 6 core models from the text recognition module, which includes 10 full models in total, covering multiple multilingual recognition models. For the complete list:

👉 Full Model Details

- PP-OCRv5 Multi-Scene Models

| Model | Download Links | Chinese Accuracy(%) | English Accuracy(%) | Traditional Chinese Accuracy(%) | Japanese Accuracy(%) | GPU Inference Time (ms) [Standard / High-Performance] |

CPU Inference Time (ms) [Standard / High-Performance] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|---|---|---|

| PP-OCRv5_server_rec | Inference Model/Training Model | 86.38 | 64.70 | 93.29 | 60.35 | - | - | 205 | PP-OCRv5_server_rec is a next-generation text recognition model supporting Simplified Chinese, Traditional Chinese, English, and Japanese, including complex scenarios like handwriting and vertical text. |

| PP-OCRv5_mobile_rec | Inference Model/Training Model | 81.29 | 66.00 | 83.55 | 54.65 | - | - | 128 | PP-OCRv5_mobile_rec is a lightweight version optimized for efficiency and accuracy across multiple languages and scenarios. |

- Chinese Recognition Models

| Model | Download Links | Accuracy(%) | GPU Inference Time (ms) [Standard / High-Performance] |

CPU Inference Time (ms) [Standard / High-Performance] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-OCRv4_server_rec_doc | Inference Model/Training Model | 86.58 | 6.65 / 2.38 | 32.92 / 32.92 | 181 | Enhanced for document text recognition, supporting 15,000+ characters including Traditional Chinese and Japanese. |

| PP-OCRv4_mobile_rec | Inference Model/Training Model | 83.28 | 4.82 / 1.20 | 16.74 / 4.64 | 88 | Lightweight model optimized for edge devices. |

| PP-OCRv4_server_rec | Inference Model/Training Model | 85.19 | 6.58 / 2.43 | 33.17 / 33.17 | 151 | High-accuracy server-side model. |

| PP-OCRv3_mobile_rec | Inference Model/Training Model | 75.43 | 5.87 / 1.19 | 9.07 / 4.28 | 138 | Lightweight PP-OCRv3 model for edge devices. |

| Model | Download Links | Accuracy(%) | GPU Inference Time (ms) [Standard / High-Performance] |

CPU Inference Time (ms) [Standard / High-Performance] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| ch_SVTRv2_rec | Inference Model/Training Model | 68.81 | 8.08 / 2.74 | 50.17 / 42.50 | 126 | SVTRv2, developed by FVL's OpenOCR team, won first prize in the PaddleOCR Algorithm Challenge, improving end-to-end recognition accuracy by 6% over PP-OCRv4. |

| Model | Download Links | Accuracy(%) | GPU Inference Time (ms) [Standard / High-Performance] |

CPU Inference Time (ms) [Standard / High-Performance] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| ch_RepSVTR_rec | Inference Model/Training Model | 65.07 | 5.93 / 1.62 | 20.73 / 7.32 | 70 | RepSVTR, a mobile-optimized version of SVTRv2, won first prize in the PaddleOCR Challenge, improving accuracy by 2.5% over PP-OCRv4 with comparable speed. |

- English Recognition Models

| Model | Download Links | Accuracy(%) | GPU Inference Time (ms) [Standard / High-Performance] |

CPU Inference Time (ms) [Standard / High-Performance] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| en_PP-OCRv4_mobile_rec | Inference Model/Training Model | 70.39 | 4.81 / 0.75 | 16.10 / 5.31 | 66 | Ultra-lightweight English recognition model supporting English and numeric characters. |

| en_PP-OCRv3_mobile_rec | Inference Model/Training Model | 70.69 | 5.44 / 0.75 | 8.65 / 5.57 | 85 | PP-OCRv3-based English recognition model. |

- Multilingual Recognition Models

| Model | Download Link | Recognition Avg Accuracy(%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

Model Size (M) | Description |

|---|---|---|---|---|---|---|

| korean_PP-OCRv3_mobile_rec | Inference Model/Training Model | 60.21 | 5.40 / 0.97 | 9.11 / 4.05 | 114 M | Ultra-lightweight Korean recognition model based on PP-OCRv3, supporting Korean and numeric characters |

| japan_PP-OCRv3_mobile_rec | Inference Model/Training Model | 45.69 | 5.70 / 1.02 | 8.48 / 4.07 | 120 M | Ultra-lightweight Japanese recognition model based on PP-OCRv3, supporting Japanese and numeric characters |

| chinese_cht_PP-OCRv3_mobile_rec | Inference Model/Training Model | 82.06 | 5.90 / 1.28 | 9.28 / 4.34 | 152 M | Ultra-lightweight Traditional Chinese recognition model based on PP-OCRv3, supporting Traditional Chinese and numeric characters |

| te_PP-OCRv3_mobile_rec | Inference Model/Training Model | 95.88 | 5.42 / 0.82 | 8.10 / 6.91 | 85 M | Ultra-lightweight Telugu recognition model based on PP-OCRv3, supporting Telugu and numeric characters |

| ka_PP-OCRv3_mobile_rec | Inference Model/Training Model | 96.96 | 5.25 / 0.79 | 9.09 / 3.86 | 85 M | Ultra-lightweight Kannada recognition model based on PP-OCRv3, supporting Kannada and numeric characters |

| ta_PP-OCRv3_mobile_rec | Inference Model/Training Model | 76.83 | 5.23 / 0.75 | 10.13 / 4.30 | 85 M | Ultra-lightweight Tamil recognition model based on PP-OCRv3, supporting Tamil and numeric characters |

| latin_PP-OCRv3_mobile_rec | Inference Model/Training Model | 76.93 | 5.20 / 0.79 | 8.83 / 7.15 | 85 M | Ultra-lightweight Latin recognition model based on PP-OCRv3, supporting Latin and numeric characters |

| arabic_PP-OCRv3_mobile_rec | Inference Model/Training Model | 73.55 | 5.35 / 0.79 | 8.80 / 4.56 | 85 M | Ultra-lightweight Arabic script recognition model based on PP-OCRv3, supporting Arabic script and numeric characters |

| cyrillic_PP-OCRv3_mobile_rec | Inference Model/Training Model | 94.28 | 5.23 / 0.76 | 8.89 / 3.88 | 85 M | Ultra-lightweight Cyrillic script recognition model based on PP-OCRv3, supporting Cyrillic script and numeric characters |

| devanagari_PP-OCRv3_mobile_rec | Inference Model/Training Model | 96.44 | 5.22 / 0.79 | 8.56 / 4.06 | 85 M | Ultra-lightweight Devanagari script recognition model based on PP-OCRv3, supporting Devanagari script and numeric characters |

Test Environment Details:

- Performance Test Environment

- Test Datasets:

- Document Image Orientation Classification Model: PaddleX in-house dataset covering ID cards and documents, with 1,000 images.

- Text Image Correction Model: DocUNet.

- Text Detection Model: PaddleOCR in-house Chinese dataset covering street views, web images, documents, and handwriting, with 500 images for detection.

- Chinese Recognition Model: PaddleOCR in-house Chinese dataset covering street views, web images, documents, and handwriting, with 11,000 images for recognition.

- ch_SVTRv2_rec: PaddleOCR Algorithm Challenge - Task 1: OCR End-to-End Recognition A-set evaluation data.

- ch_RepSVTR_rec: PaddleOCR Algorithm Challenge - Task 1: OCR End-to-End Recognition B-set evaluation data.

- English Recognition Model: PaddleX in-house English dataset.

- Multilingual Recognition Model: PaddleX in-house multilingual dataset.

- Text Line Orientation Classification Model: PaddleX in-house dataset covering ID cards and documents, with 1,000 images.

- Hardware Configuration:

- GPU: NVIDIA Tesla T4

- CPU: Intel Xeon Gold 6271C @ 2.60GHz

- Other Environment: Ubuntu 20.04 / cuDNN 8.6 / TensorRT 8.5.2.2

- Test Datasets:

- Inference Mode Description

| Mode | GPU Configuration | CPU Configuration | Acceleration Techniques |

|---|---|---|---|

| Standard Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |

| High-Performance Mode | Optimal combination of precision types and acceleration strategies | FP32 Precision / 8 Threads | Optimal backend selection (Paddle/OpenVINO/TRT, etc.) |

If you prioritize model accuracy, choose models with higher accuracy; if inference speed is critical, select faster models; if model size matters, opt for smaller models.

2. Quick Start

Before using the general OCR pipeline locally, ensure you have installed the wheel package by following the Installation Guide. Once installed, you can experience OCR via the command line or Python integration.

2.1 Command Line

Run a single command to quickly test the OCR pipeline:

# Default: Uses PP-OCRv5 model

paddleocr ocr -i https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_ocr_002.png \

--use_doc_orientation_classify False \

--use_doc_unwarping False \

--use_textline_orientation False \

--save_path ./output \

--device gpu:0

# Use PP-OCRv4 model by --ocr_version PP-OCRv4

paddleocr ocr -i ./general_ocr_002.png --ocr_version PP-OCRv4

More command-line parameters available. Click to expand for details.

| Parameter | Description | Type | Default |

|---|---|---|---|

input |

Input data (required). Supports:

|

Python Var|str|list |

|

save_path |

Path to save inference results. If None, results are not saved locally. |

str |

|

doc_orientation_classify_model_name |

Name of the document orientation classification model. If None, the default pipeline model is used. |

str |

None |

doc_orientation_classify_model_dir |

Directory path of the document orientation classification model. If None, the official model is downloaded. |

str |

None |

doc_unwarping_model_name |

Name of the text image correction model. If None, the default pipeline model is used. |

str |

None |

doc_unwarping_model_dir |

Directory path of the text image correction model. If None, the official model is downloaded. |

str |

None |

text_detection_model_name |

Name of the text detection model. If None, the default pipeline model is used. |

str |

None |

text_detection_model_dir |

Directory path of the text detection model. If None, the official model is downloaded. |

str |

None |

text_line_orientation_model_name |

Name of the text line orientation model. If None, the default pipeline model is used. |

str |

None |

text_line_orientation_model_dir |

Directory path of the text line orientation model. If None, the official model is downloaded. |

str |

None |

text_line_orientation_batch_size |

Batch size for the text line orientation model. If None, defaults to 1. |

int |

None |

text_recognition_model_name |

Name of the text recognition model. If None, the default pipeline model is used. |

str |

None |

text_recognition_model_dir |

Directory path of the text recognition model. If None, the official model is downloaded. |

str |

None |

text_recognition_batch_size |

Batch size for the text recognition model. If None, defaults to 1. |

int |

None |

use_doc_orientation_classify |

Whether to enable document orientation classification. If None, defaults to pipeline initialization value (True). |

bool |

None |

use_doc_unwarping |

Whether to enable text image correction. If None, defaults to pipeline initialization value (True). |

bool |

None |

use_textline_orientation |

Whether to enable text line orientation classification. If None, defaults to pipeline initialization value (True). |

bool |

None |

text_det_limit_side_len |

Maximum side length limit for text detection.

|

int |

None |

text_det_limit_type |

Side length limit type for text detection.

|

str |

None |

text_det_thresh |

Pixel threshold for text detection. Pixels with scores > this threshold are considered text.

|

float |

None |

text_det_box_thresh |

Box threshold for text detection. Detected regions with average scores > this threshold are retained.

|

float |

None |

text_det_unclip_ratio |

Expansion ratio for text detection. Larger values expand text regions more.

|

float |

None |

text_det_input_shape |

Input shape for text detection. | tuple |

None |

text_rec_score_thresh |

Score threshold for text recognition. Results with scores > this threshold are retained.

|

float |

None |

text_rec_input_shape |

Input shape for text recognition. | tuple |

None |

lang |

Specifies the OCR model language.

|

str |

None |

ocr_version |

OCR model version.

|

str |

None |

device |

Device for inference. Supports:

|

str |

None |

enable_hpi |

Whether to enable high-performance inference. | bool |

False |

use_tensorrt |

Whether to use TensorRT for acceleration. | bool |

False |

min_subgraph_size |

Minimum subgraph size for model optimization. | int |

3 |

precision |

Computation precision (e.g., fp32, fp16). |

str |

fp32 |

enable_mkldnn |

Whether to enable MKL-DNN acceleration. If None, enabled by default. |

bool |

None |

cpu_threads |

Number of CPU threads for inference. | int |

8 |

paddlex_config |

Path to PaddleX pipeline configuration file. | str |

None |

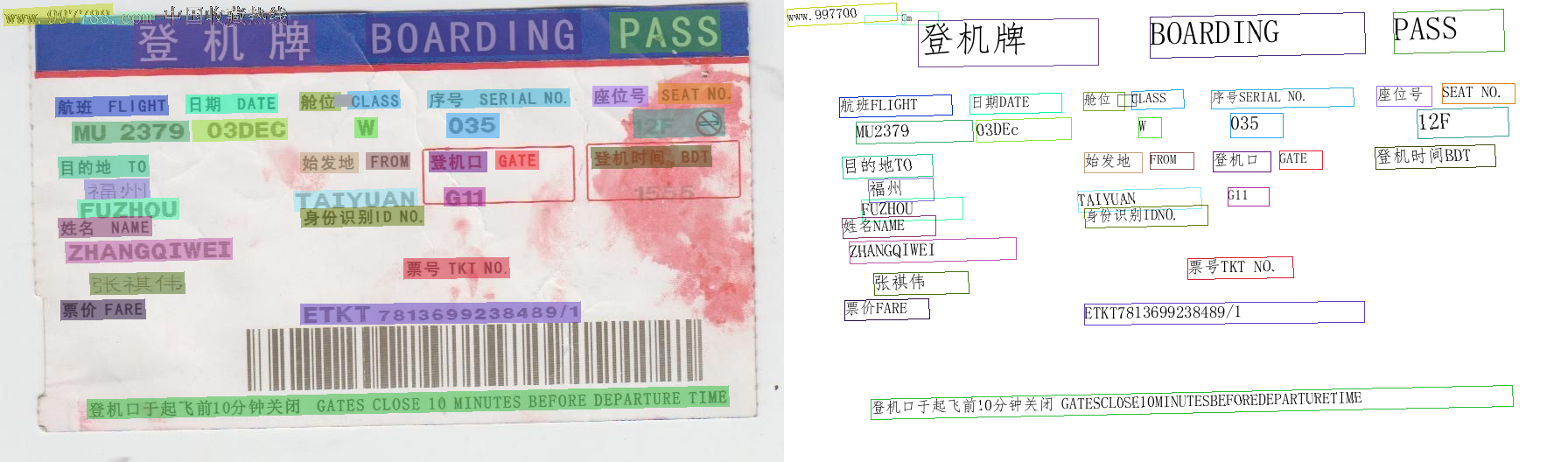

Results are printed to the terminal:

{'res': {'input_path': './general_ocr_002.png', 'page_index': None, 'model_settings': {'use_doc_preprocessor': True, 'use_textline_orientation': False}, 'doc_preprocessor_res': {'input_path': None, 'page_index': None, 'model_settings': {'use_doc_orientation_classify': False, 'use_doc_unwarping': False}, 'angle': -1}, 'dt_polys': array([[[ 3, 10],

...,

[ 4, 30]],

...,

[[ 99, 456],

...,

[ 99, 479]]], dtype=int16), 'text_det_params': {'limit_side_len': 736, 'limit_type': 'min', 'thresh': 0.3, 'max_side_limit': 4000, 'box_thresh': 0.6, 'unclip_ratio': 1.5}, 'text_type': 'general', 'textline_orientation_angles': array([-1, ..., -1]), 'text_rec_score_thresh': 0.0, 'rec_texts': ['www.997700', '', 'Cm', '登机牌', 'BOARDING', 'PASS', 'CLASS', '序号SERIAL NO.', '座位号', 'SEAT NO.', '航班FLIGHT', '日期DATE', '舱位', '', 'W', '035', '12F', 'MU2379', '03DEc', '始发地', 'FROM', '登机口', 'GATE', '登机时间BDT', '目的地TO', '福州', 'TAIYUAN', 'G11', 'FUZHOU', '身份识别IDNO.', '姓名NAME', 'ZHANGQIWEI', '票号TKT NO.', '张祺伟', '票价FARE', 'ETKT7813699238489/1', '登机口于起飞前10分钟关闭 GATESCL0SE10MINUTESBEFOREDEPARTURETIME'], 'rec_scores': array([0.67634439, ..., 0.97416091]), 'rec_polys': array([[[ 3, 10],

...,

[ 4, 30]],

...,

[[ 99, 456],

...,

[ 99, 479]]], dtype=int16), 'rec_boxes': array([[ 3, ..., 30],

...,

[ 99, ..., 479]], dtype=int16)}}

If save_path is specified, the visualization results will be saved under save_path. The visualization output is shown below:

2.2 Python Script Integration

The command-line method is for quick testing. For project integration, you can achieve OCR inference with just a few lines of code:

from paddleocr import PaddleOCR

ocr = PaddleOCR(

use_doc_orientation_classify=False, # Disables document orientation classification model via this parameter

use_doc_unwarping=False, # Disables text image rectification model via this parameter

use_textline_orientation=False, # Disables text line orientation classification model via this parameter

)

# ocr = PaddleOCR(lang="en") # Uses English model by specifying language parameter

# ocr = PaddleOCR(ocr_version="PP-OCRv4") # Uses other PP-OCR versions via version parameter

# ocr = PaddleOCR(device="gpu") # Enables GPU acceleration for model inference via device parameter

result = ocr.predict("./general_ocr_002.png")

for res in result:

res.print()

res.save_to_img("output")

res.save_to_json("output")

The Python script above performs the following steps:

(1) Initialize the OCR pipeline with PaddleOCR(). Parameter details:

| Parameter | Description | Type | Default |

|---|---|---|---|

doc_orientation_classify_model_name |

Name of the document orientation model. If None, uses the default pipeline model. |

str |

None |

doc_orientation_classify_model_dir |

Directory path of the document orientation model. If None, downloads the official model. |

str |

None |

doc_unwarping_model_name |

Name of the text image correction model. If None, uses the default pipeline model. |

str |

None |

doc_unwarping_model_dir |

Directory path of the text image correction model. If None, downloads the official model. |

str |

None |

text_detection_model_name |

Name of the text detection model. If None, uses the default pipeline model. |

str |

None |

text_detection_model_dir |

Directory path of the text detection model. If None, downloads the official model. |

str |

None |

text_line_orientation_model_name |

Name of the text line orientation model. If None, uses the default pipeline model. |

str |

None |

text_line_orientation_model_dir |

Directory path of the text line orientation model. If None, downloads the official model. |

str |

None |

text_line_orientation_batch_size |

Batch size for the text line orientation model. If None, defaults to 1. |

int |

None |

text_recognition_model_name |

Name of the text recognition model. If None, uses the default pipeline model. |

str |

None |

text_recognition_model_dir |

Directory path of the text recognition model. If None, downloads the official model. |

str |

None |

text_recognition_batch_size |

Batch size for the text recognition model. If None, defaults to 1. |

int |

None |

use_doc_orientation_classify |

Whether to enable document orientation classification. If None, defaults to pipeline initialization (True). |

bool |

None |

use_doc_unwarping |

Whether to enable text image correction. If None, defaults to pipeline initialization (True). |

bool |

None |

use_textline_orientation |

Whether to enable text line orientation classification. If None, defaults to pipeline initialization (True). |

bool |

None |

text_det_limit_side_len |

Maximum side length limit for text detection.

|

int |

None |

text_det_limit_type |

Side length limit type for text detection.

|

str |

None |

text_det_thresh |

Pixel threshold for text detection. Pixels with scores > this threshold are considered text.

|

float |

None |

text_det_box_thresh |

Box threshold for text detection. Detected regions with average scores > this threshold are retained.

|

float |

None |

text_det_unclip_ratio |

Expansion ratio for text detection. Larger values expand text regions more.

|

float |

None |

text_det_input_shape |

Input shape for text detection. | tuple |

None |

text_rec_score_thresh |

Score threshold for text recognition. Results with scores > this threshold are retained.

|

float |

None |

text_rec_input_shape |

Input shape for text recognition. | tuple |

None |

lang |

Specifies the OCR model language.

|

str |

None |

ocr_version |

OCR model version.

|

str |

None |

device |

Device for inference. Supports:

|

str |

None |

enable_hpi |

Whether to enable high-performance inference. | bool |

False |

use_tensorrt |

Whether to use TensorRT for acceleration. | bool |

False |

min_subgraph_size |

Minimum subgraph size for model optimization. | int |

3 |

precision |

Computation precision (e.g., fp32, fp16). |

str |

fp32 |

enable_mkldnn |

Whether to enable MKL-DNN acceleration. If None, enabled by default. |

bool |

None |

cpu_threads |

Number of CPU threads for inference. | int |

8 |

paddlex_config |

Path to PaddleX pipeline configuration file. | str |

None |

(2) Call the predict() method for inference. Alternatively, predict_iter() returns a generator for memory-efficient batch processing. Parameters:

| Parameter | Description | Type | Default |

|---|---|---|---|

input |

Input data (required). Supports:

|

Python Var|str|list |

|

device |

Same as initialization. | str |

None |

use_doc_orientation_classify |

Whether to enable document orientation classification during inference. | bool |

None |

use_doc_unwarping |

Whether to enable text image correction during inference. | bool |

None |

use_textline_orientation |

Whether to enable text line orientation classification during inference. | bool |

None |

text_det_limit_side_len |

Same as initialization. | int |

None |

text_det_limit_type |

Same as initialization. | str |

None |

text_det_thresh |

Same as initialization. | float |

None |

text_det_box_thresh |

Same as initialization. | float |

None |

text_det_unclip_ratio |

Same as initialization. | float |

None |

text_rec_score_thresh |

Same as initialization. | float |

None |

(3) Processing prediction results: Each sample's prediction result is a corresponding Result object, supporting printing, saving as images, and saving as json files:

| Method | Description | Parameter | Type | Explanation | Default |

|---|---|---|---|---|---|

print() |

Print results to terminal | format_json |

bool |

Whether to format output with JSON indentation |

True |

indent |

int |

Indentation level for prettifying JSON output (only when format_json=True) |

4 | ||

ensure_ascii |

bool |

Whether to escape non-ASCII characters to Unicode (only when format_json=True) |

False |

||

save_to_json() |

Save results as JSON file | save_path |

str |

Output file path (uses input filename when directory specified) | None |

indent |

int |

Indentation level for prettifying JSON output (only when format_json=True) |

4 | ||

ensure_ascii |

bool |

Whether to escape non-ASCII characters (only when format_json=True) |

False |

||

save_to_img() |

Save results as image file | save_path |

str |

Output path (supports directory or file path) | None |

-

The

print()method outputs results to terminal with the following structure:-

input_path:(str)Input image path -

page_index:(Union[int, None])PDF page number (if input is PDF), otherwiseNone -

model_settings:(Dict[str, bool])Pipeline configurationuse_doc_preprocessor:(bool)Whether document preprocessing is enableduse_textline_orientation:(bool)Whether text line orientation classification is enabled

-

doc_preprocessor_res:(Dict[str, Union[str, Dict[str, bool], int]])Document preprocessing results (only whenuse_doc_preprocessor=True)input_path:(Union[str, None])Preprocessor input path (Nonefornumpy.ndarrayinput)model_settings:(Dict)Preprocessor configurationuse_doc_orientation_classify:(bool)Whether document orientation classification is enableduse_doc_unwarping:(bool)Whether text image correction is enabled

angle:(int)Document orientation prediction (0-3 for 0°,90°,180°,270°; -1 if disabled)

-

dt_polys:(List[numpy.ndarray])Text detection polygons (4 vertices per box, shape=(4,2), dtype=int16) -

dt_scores:(List[float])Text detection confidence scores -

text_det_params:(Dict[str, Dict[str, int, float]])Text detection parameterslimit_side_len:(int)Image side length limitlimit_type:(str)Length limit handling methodthresh:(float)Text pixel classification thresholdbox_thresh:(float)Detection box confidence thresholdunclip_ratio:(float)Text region expansion ratiotext_type:(str)Fixed as "general"

-

textline_orientation_angles:(List[int])Text line orientation predictions (actual angles when enabled, [-1,-1,-1] when disabled) -

text_rec_score_thresh:(float)Text recognition score threshold -

rec_texts:(List[str])Recognized texts (filtered bytext_rec_score_thresh) -

rec_scores:(List[float])Recognition confidence scores (filtered) -

rec_polys:(List[numpy.ndarray])Filtered detection polygons (same format asdt_polys) -

rec_boxes:(numpy.ndarray)Rectangular bounding boxes (shape=(n,4), dtype=int16) with [x_min, y_min, x_max, y_max] coordinates

-

-

save_to_json()saves results to specifiedsave_path:- Directory: saves as

save_path/{your_img_basename}_res.json - File: saves directly to specified path

- Note: Converts

numpy.arrayto lists since JSON doesn't support numpy arrays

- Directory: saves as

-

save_to_img()saves visualization results:- Directory: saves as

save_path/{your_img_basename}_ocr_res_img.{your_img_extension} - File: saves directly (not recommended for multiple images to avoid overwriting)

- Directory: saves as

- Additionally, results with visualizations and predictions can be obtained through the following attributes:

| Attribute | Description |

|---|---|

json |

Retrieves prediction results in json format |

img |

Retrieves visualized images in dict format |

- The

jsonattribute returns prediction results as a dict, with content identical to what's saved by thesave_to_json()method. - The

imgattribute returns prediction results as a dictionary containing twoImage.Imageobjects under keysocr_res_img(OCR result visualization) andpreprocessed_img(preprocessing visualization). If the image preprocessing submodule isn't used, onlyocr_res_imgwill be present.

3. Development Integration/Deployment

If the general OCR pipeline meets your requirements for inference speed and accuracy, you can proceed directly with development integration/deployment.

If you need to apply the general OCR pipeline directly in your Python project, you can refer to the sample code in 2.2 Python Script Integration.

Additionally, PaddleOCR provides two other deployment methods, detailed as follows:

🚀 High-Performance Inference: In real-world production environments, many applications have stringent performance requirements (especially for response speed) to ensure system efficiency and smooth user experience. To address this, PaddleOCR offers high-performance inference capabilities, which deeply optimize model inference and pre/post-processing to achieve significant end-to-end speed improvements. For detailed high-performance inference workflows, refer to the High-Performance Inference Guide.

☁️ Service Deployment: Service deployment is a common form of deployment in production environments. By encapsulating inference functionality as a service, clients can access these services via network requests to obtain inference results. For detailed pipeline service deployment workflows, refer to the Service Deployment Guide.

Below are the API reference for basic service deployment and examples of multi-language service calls:

API Reference

For the main operations provided by the service:

- The HTTP request method is POST.

- Both the request body and response body are JSON data (JSON objects).

- When the request is processed successfully, the response status code is

200, and the response body has the following attributes:

| Name | Type | Description |

|---|---|---|

logId |

string |

UUID of the request. |

errorCode |

integer |

Error code. Fixed as 0. |

errorMsg |

string |

Error message. Fixed as "Success". |

result |

object |

Operation result. |

- When the request fails, the response body has the following attributes:

| Name | Type | Description |

|---|---|---|

logId |

string |

UUID of the request. |

errorCode |

integer |

Error code. Same as the response status code. |

errorMsg |

string |

Error message. |

The main operations provided by the service are as follows:

infer

Obtain OCR results for an image.

POST /ocr

- The request body has the following attributes:

| Name | Type | Description | Required |

|---|---|---|---|

file |

string |

A server-accessible URL to an image or PDF file, or the Base64-encoded content of such a file. By default, for PDF files with more than 10 pages, only the first 10 pages are processed. To remove the page limit, add the following configuration to the pipeline config file: |

Yes |

fileType |

integer | null |

File type. 0 for PDF, 1 for image. If omitted, the type is inferred from the URL. |

No |

useDocOrientationClassify |

boolean | null |

Refer to the use_doc_orientation_classify parameter in the pipeline object's predict method. |

No |

useDocUnwarping |

boolean | null |

Refer to the use_doc_unwarping parameter in the pipeline object's predict method. |

No |

useTextlineOrientation |

boolean | null |

Refer to the use_textline_orientation parameter in the pipeline object's predict method. |

No |

textDetLimitSideLen |

integer | null |

Refer to the text_det_limit_side_len parameter in the pipeline object's predict method. |

No |

textDetLimitType |

string | null |

Refer to the text_det_limit_type parameter in the pipeline object's predict method. |

No |

textDetThresh |

number | null |

Refer to the text_det_thresh parameter in the pipeline object's predict method. |

No |

textDetBoxThresh |

number | null |

Refer to the text_det_box_thresh parameter in the pipeline object's predict method. |

No |

textDetUnclipRatio |

number | null |

Refer to the text_det_unclip_ratio parameter in the pipeline object's predict method. |

No |

textRecScoreThresh |

number | null |

Refer to the text_rec_score_thresh parameter in the pipeline object's predict method. |

No |

- When the request is successful, the

resultin the response body has the following attributes:

| Name | Type | Description |

|---|---|---|

ocrResults |

object |

OCR results. The array length is 1 (for image input) or the number of processed document pages (for PDF input). For PDF input, each element represents the result for a corresponding page. |

dataInfo |

object |

Input data information. |

Each element in ocrResults is an object with the following attributes:

| Name | Type | Description |

|---|---|---|

prunedResult |

object |

A simplified version of the res field in the JSON output of the pipeline object's predict method, excluding input_path and page_index. |

ocrImage |

string | null |

OCR result image with detected text regions highlighted. JPEG format, Base64-encoded. |

docPreprocessingImage |

string | null |

Visualization of preprocessing results. JPEG format, Base64-encoded. |

inputImage |

string | null |

Input image. JPEG format, Base64-encoded. |

Multi-Language Service Call Examples

Python

import base64

import requests

API_URL = "http://localhost:8080/ocr"

file_path = "./demo.jpg"

with open(file_path, "rb") as file:

file_bytes = file.read()

file_data = base64.b64encode(file_bytes).decode("ascii")

payload = {"file": file_data, "fileType": 1}

response = requests.post(API_URL, json=payload)

assert response.status_code == 200

result = response.json()["result"]

for i, res in enumerate(result["ocrResults"]):

print(res["prunedResult"])

ocr_img_path = f"ocr_{i}.jpg"

with open(ocr_img_path, "wb") as f:

f.write(base64.b64decode(res["ocrImage"]))

print(f"Output image saved at {ocr_img_path}")

4. Custom Development

The general OCR pipeline consists of multiple modules. If the pipeline's performance does not meet expectations, the issue may stem from any of these modules. You can analyze poorly recognized images to identify the problematic module and refer to the corresponding fine-tuning tutorials in the table below for adjustments.

| Scenario | Module to Fine-Tune | Fine-Tuning Reference |

|---|---|---|

| Inaccurate whole-image rotation correction | Document orientation classification module | Link |

| Inaccurate image distortion correction | Text image unwarping module | Fine-tuning not supported |

| Inaccurate textline rotation correction | Textline orientation classification module | Link |

| Text detection misses | Text detection module | Link |

| Incorrect text recognition | Text recognition module | Link |