# How to use prebuilt package on Windows10

- [How to use prebuilt package on Windows10](#how-to-use-prebuilt-package-on-windows10)
  - [Prerequisite](#prerequisite)
    - [ONNX Runtime](#onnx-runtime)
    - [TensorRT](#tensorrt)
  - [Model Convert](#model-convert)
    - [ONNX Runtime Example](#onnx-runtime-example)
    - [TensorRT Example](#tensorrt-example)
  - [Model Inference](#model-inference)
    - [Backend Inference](#backend-inference)
      - [ONNXRuntime](#onnxruntime)
      - [TensorRT](#tensorrt-1)
    - [Python SDK](#python-sdk)
      - [ONNXRuntime](#onnxruntime-1)
      - [TensorRT](#tensorrt-2)
    - [C SDK](#c-sdk)
      - [ONNXRuntime](#onnxruntime-2)
      - [TensorRT](#tensorrt-3)
  - [Troubleshooting](#troubleshooting)

______________________________________________________________________

This tutorial takes `mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1.zip` and `mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0.zip` as examples to show how to use the prebuilt packages.

The directory structure of the prebuilt package is as follows. The `dist` folder contains the model converter, and the `sdk` folder contains everything related to model inference.

```
.
|-- dist
`-- sdk
    |-- bin
    |-- example
    |-- include
    |-- lib
    `-- python
```
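
Before installing anything, it can help to confirm that the archive was extracted completely. The following is a minimal sketch, not part of mmdeploy: the helper name `missing_entries` is hypothetical, and the expected sub-folders are simply copied from the tree above.

```python
from pathlib import Path

# Sub-folders listed in the directory tree of the prebuilt package.
EXPECTED_DIRS = (
    "dist",
    "sdk/bin",
    "sdk/example",
    "sdk/include",
    "sdk/lib",
    "sdk/python",
)


def missing_entries(package_root):
    """Return the expected sub-folders that are absent under package_root."""
    root = Path(package_root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]
```

Calling `missing_entries(r".\mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1")` should return an empty list when the layout matches the tree.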

## Prerequisite

To use the prebuilt package, you need to install some third-party dependencies.

1. Follow the [get_started](../get_started.md) documentation to create a virtual Python environment and install pytorch, torchvision and mmcv-full. To use the C interface of the SDK, you also need to install [vs2019+](https://visualstudio.microsoft.com/) and [OpenCV](https://github.com/opencv/opencv/releases).

   :point_right: It is recommended to use `pip` instead of `conda` to install pytorch and torchvision.

2. Clone the mmdeploy repository

   ```bash
   git clone https://github.com/open-mmlab/mmdeploy.git
   ```

   :point_right: The main purpose here is to use the configs, so there is no need to compile `mmdeploy`.

3. Install mmclassification

   ```bash
   git clone https://github.com/open-mmlab/mmclassification.git
   cd mmclassification
   pip install -e .
   # return to the working directory used in the rest of this tutorial
   cd ..
   ```

4. Prepare a PyTorch model as our example

   Download the checkpoint [resnet18_8xb32_in1k_20210831-fbbb1da6.pth](https://download.openmmlab.com/mmclassification/v0/resnet/resnet18_8xb32_in1k_20210831-fbbb1da6.pth). The corresponding config of the model is [resnet18_8xb32_in1k.py](https://github.com/open-mmlab/mmclassification/blob/master/configs/resnet/resnet18_8xb32_in1k.py).

After the above work is done, the structure of the current working directory should be:

```
.
|-- mmclassification
|-- mmdeploy
`-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth
```
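
The checkpoint download in step 4 can also be scripted. The sketch below uses only the Python standard library and the URL given above; the function name `fetch_checkpoint` and its skip-if-present behaviour are illustrative choices, not something the tutorial prescribes.

```python
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlretrieve

# URL of the example checkpoint referenced in step 4.
CHECKPOINT_URL = (
    "https://download.openmmlab.com/mmclassification/v0/resnet/"
    "resnet18_8xb32_in1k_20210831-fbbb1da6.pth"
)


def fetch_checkpoint(url=CHECKPOINT_URL, dest_dir="."):
    """Download url into dest_dir unless a file with that name already exists."""
    target = Path(dest_dir) / Path(urlparse(url).path).name
    if not target.exists():
        target.parent.mkdir(parents=True, exist_ok=True)
        urlretrieve(url, str(target))
    return target
```

Running `fetch_checkpoint()` from the working directory should leave the `.pth` file next to the `mmclassification` and `mmdeploy` folders, as in the tree above.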

|

||

|

||

### ONNX Runtime

|

||

|

||

In order to use `ONNX Runtime` backend, you should also do the following steps.

|

||

|

||

5. Install `mmdeploy` (Model Converter) and `mmdeploy_python` (SDK Python API).

|

||

|

||

```bash

|

||

# download mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1.zip

|

||

pip install .\mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1\dist\mmdeploy-0.8.0-py38-none-win_amd64.whl

|

||

pip install .\mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1\sdk\python\mmdeploy_python-0.8.0-cp38-none-win_amd64.whl

|

||

```

|

||

|

||

:point_right: If you have installed it before, please uninstall it first.

|

||

|

||

6. Install onnxruntime package

|

||

|

||

```

|

||

pip install onnxruntime==1.8.1

|

||

```

|

||

|

||

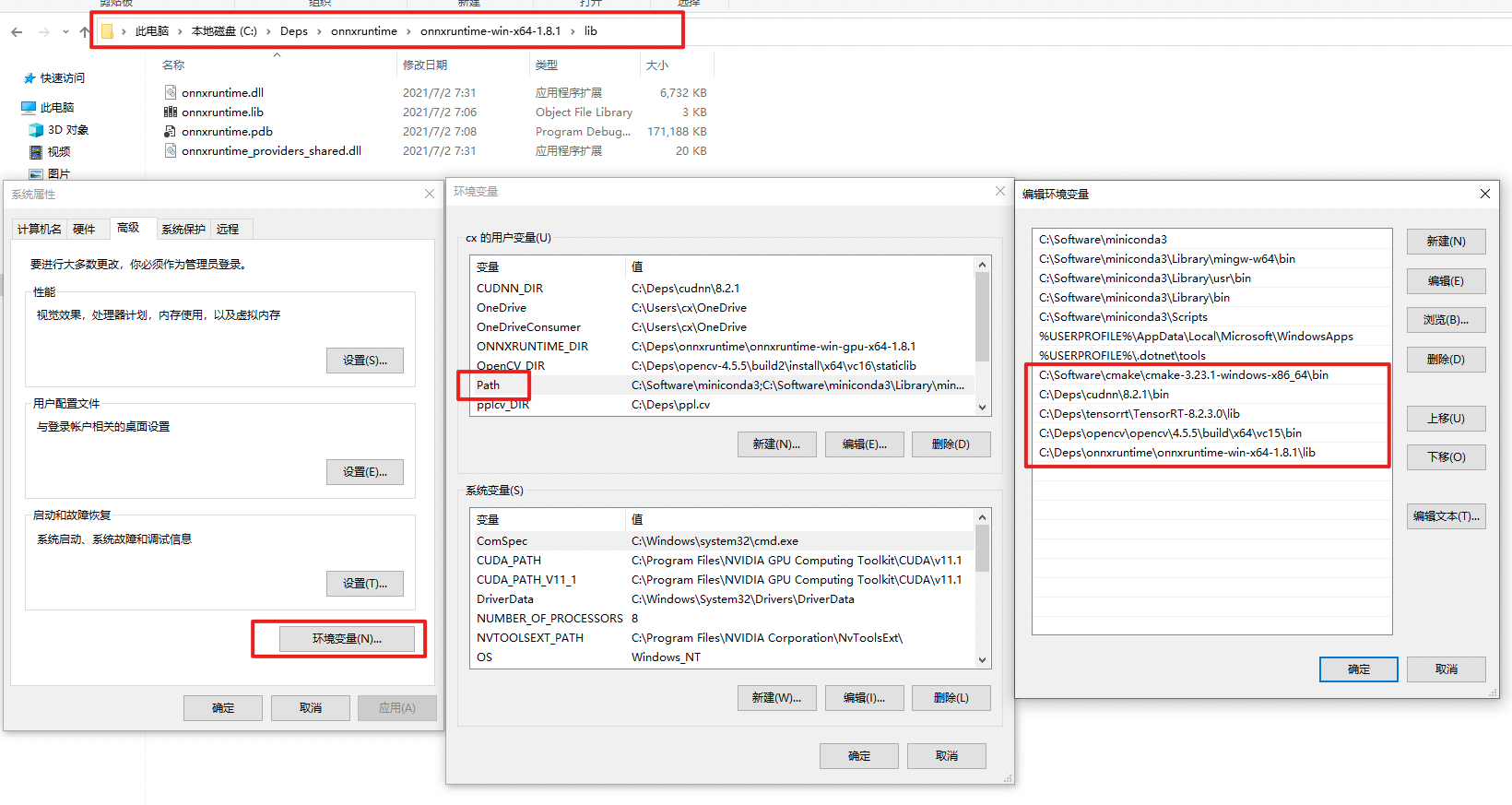

7. Download [`onnxruntime`](https://github.com/microsoft/onnxruntime/releases/tag/v1.8.1), and add environment variable.

|

||

|

||

As shown in the figure, add the lib directory of onnxruntime to the `PATH`.

|

||

|

||

|

||

:exclamation: Restart powershell to make the environment variables setting take effect. You can check whether the settings are in effect by `echo $env:PATH`.
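
As a complement to `echo $env:PATH`, the lookup can be done programmatically. The following is a small sketch under two assumptions: the helper `dirs_on_path_with` is hypothetical, and the file name `onnxruntime.dll` used in the usage note is assumed to be the library shipped in the release's lib directory.

```python
import os
from pathlib import Path


def dirs_on_path_with(filename, path_value=None):
    """Return the PATH entries that contain a file called filename."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    hits = []
    for entry in path_value.split(os.pathsep):
        # Skip empty entries produced by trailing or doubled separators.
        if entry and (Path(entry) / filename).is_file():
            hits.append(entry)
    return hits
```

In a fresh powershell session, `dirs_on_path_with("onnxruntime.dll")` returning an empty list would suggest the `PATH` change has not taken effect; more than one entry would suggest several copies are visible.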

|

||

|

||

### TensorRT

|

||

|

||

In order to use `TensorRT` backend, you should also do the following steps.

|

||

|

||

5. Install `mmdeploy` (Model Converter) and `mmdeploy_python` (SDK Python API).

|

||

|

||

```bash

|

||

# download mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0.zip

|

||

pip install .\mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0\dist\mmdeploy-0.8.0-py38-none-win_amd64.whl

|

||

pip install .\mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0\sdk\python\mmdeploy_python-0.8.0-cp38-none-win_amd64.whl

|

||

```

|

||

|

||

:point_right: If you have installed it before, please uninstall it first.

|

||

|

||

6. Install TensorRT related package and set environment variables

|

||

|

||

- CUDA Toolkit 11.1

|

||

- TensorRT 8.2.3.0

|

||

- cuDNN 8.2.1.0

|

||

|

||

Add the runtime libraries of TensorRT and cuDNN to the `PATH`. You can refer to the path setting of onnxruntime. Don't forget to install python package of TensorRT.

|

||

|

||

:exclamation: Restart powershell to make the environment variables setting take effect. You can check whether the settings are in effect by echo `$env:PATH`.

:exclamation: It is recommended to add only one version of the TensorRT/cuDNN runtime libraries to the `PATH`. It is better not to copy the runtime libraries of TensorRT/cuDNN to the cuda directory in `C:\`.

7. Install pycuda by `pip install pycuda`
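
Before converting a model, it can help to confirm that the Python packages from the steps above are visible to the interpreter. This is a minimal sketch using only the standard library; the helper `missing_modules` is hypothetical, and the module names in the example call are assumptions based on the package names used in this guide.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed module names; adjust them to match your installation.
    print(missing_modules(["mmdeploy", "mmdeploy_python", "tensorrt", "pycuda"]))
```

An empty list means every module was found. This only checks that the modules can be located, not that their native libraries load, so a `PATH` problem can still surface at import time.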

## Model Convert

### ONNX Runtime Example

The following describes how to use the prebuilt package to convert a model, starting from the previously downloaded pth.

After preparation work, the structure of the current working directory should be:

```
.
|-- mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1
|-- mmclassification
|-- mmdeploy
`-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth
```

Model conversion can be performed as follows:

```python
from mmdeploy.apis import torch2onnx
from mmdeploy.backend.sdk.export_info import export2SDK

img = 'mmclassification/demo/demo.JPEG'
work_dir = 'work_dir/onnx/resnet'
save_file = 'end2end.onnx'
deploy_cfg = 'mmdeploy/configs/mmcls/classification_onnxruntime_dynamic.py'
model_cfg = 'mmclassification/configs/resnet/resnet18_8xb32_in1k.py'
model_checkpoint = 'resnet18_8xb32_in1k_20210831-fbbb1da6.pth'
device = 'cpu'

# 1. convert model to onnx
torch2onnx(img, work_dir, save_file, deploy_cfg, model_cfg,
           model_checkpoint, device)

# 2. extract pipeline info for sdk use (dump-info)
export2SDK(deploy_cfg, model_cfg, work_dir, pth=model_checkpoint)
```

The structure of the converted model directory:

```bash
.\work_dir\
`-- onnx
    `-- resnet
        |-- deploy.json
        |-- detail.json
        |-- end2end.onnx
        `-- pipeline.json
```
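
The directory listing above can be checked programmatically after conversion. The following is a minimal sketch; the helper `missing_files` is hypothetical, and the expected file names are taken from the listing.

```python
from pathlib import Path

# File names taken from the directory listing in this guide.
EXPECTED = ("deploy.json", "detail.json", "end2end.onnx", "pipeline.json")


def missing_files(model_dir, expected=EXPECTED):
    """Return the expected file names that are absent from model_dir."""
    root = Path(model_dir)
    return [name for name in expected if not (root / name).is_file()]
```

For example, `missing_files('work_dir/onnx/resnet')` returns an empty list when all four files are present. For the TensorRT output, `end2end.engine` can be added to the `expected` tuple.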

### TensorRT Example

The following describes how to use the prebuilt package to convert a model, starting from the previously downloaded pth.

After installing the mmdeploy-tensorrt prebuilt package, the structure of the current working directory should be:

```
.
|-- mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0
|-- mmclassification
|-- mmdeploy
`-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth
```

Model conversion can be performed as follows:

```python
from mmdeploy.apis import torch2onnx
from mmdeploy.apis.tensorrt import onnx2tensorrt
from mmdeploy.backend.sdk.export_info import export2SDK
import os

img = 'mmclassification/demo/demo.JPEG'
work_dir = 'work_dir/trt/resnet'
save_file = 'end2end.onnx'
deploy_cfg = 'mmdeploy/configs/mmcls/classification_tensorrt_static-224x224.py'
model_cfg = 'mmclassification/configs/resnet/resnet18_8xb32_in1k.py'
model_checkpoint = 'resnet18_8xb32_in1k_20210831-fbbb1da6.pth'
device = 'cpu'

# 1. convert model to IR(onnx)
torch2onnx(img, work_dir, save_file, deploy_cfg, model_cfg,
           model_checkpoint, device)

# 2. convert IR to tensorrt
onnx_model = os.path.join(work_dir, save_file)
save_file = 'end2end.engine'
model_id = 0
device = 'cuda'
onnx2tensorrt(work_dir, save_file, model_id, deploy_cfg, onnx_model, device)

# 3. extract pipeline info for sdk use (dump-info)
export2SDK(deploy_cfg, model_cfg, work_dir, pth=model_checkpoint)
```

The structure of the converted model directory:

```
.\work_dir\
`-- trt
    `-- resnet
        |-- deploy.json
        |-- detail.json
        |-- end2end.engine
        |-- end2end.onnx
        `-- pipeline.json
```

## Model Inference

You can obtain two model folders after model conversion.

```
.\work_dir\onnx\resnet
.\work_dir\trt\resnet
```

The structure of the current working directory:

```
.
|-- mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0
|-- mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1
|-- mmclassification
|-- mmdeploy
|-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth
`-- work_dir
```

### Backend Inference

:exclamation: Note that `inference_model` is not intended for deployment; it hides the differences between backend inference APIs (`TensorRT`, `ONNX Runtime`, etc.). Its main purpose is to check whether the converted model can run inference correctly.

#### ONNXRuntime

```python
from mmdeploy.apis import inference_model

model_cfg = 'mmclassification/configs/resnet/resnet18_8xb32_in1k.py'
deploy_cfg = 'mmdeploy/configs/mmcls/classification_onnxruntime_dynamic.py'
backend_files = ['work_dir/onnx/resnet/end2end.onnx']
img = 'mmclassification/demo/demo.JPEG'
device = 'cpu'
result = inference_model(model_cfg, deploy_cfg, backend_files, img, device)
```

#### TensorRT

```python
from mmdeploy.apis import inference_model

model_cfg = 'mmclassification/configs/resnet/resnet18_8xb32_in1k.py'
deploy_cfg = 'mmdeploy/configs/mmcls/classification_tensorrt_static-224x224.py'
backend_files = ['work_dir/trt/resnet/end2end.engine']
img = 'mmclassification/demo/demo.JPEG'
device = 'cuda'
result = inference_model(model_cfg, deploy_cfg, backend_files, img, device)
```

### Python SDK

The following describes how to use the SDK's Python API for inference.

#### ONNXRuntime

```bash
python .\mmdeploy\demo\python\image_classification.py cpu .\work_dir\onnx\resnet\ .\mmclassification\demo\demo.JPEG
```

#### TensorRT

```bash
python .\mmdeploy\demo\python\image_classification.py cuda .\work_dir\trt\resnet\ .\mmclassification\demo\demo.JPEG
```
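
Both demo commands share the same shape: script, device, model directory, image. To run them back to back, a small wrapper can build the argument lists. This is a minimal sketch under the directory layout shown earlier; `demo_command` and `run_all` are hypothetical helpers, not part of the SDK.

```python
import subprocess
import sys
from pathlib import Path

# Paths follow the working-directory layout described in this guide.
DEMO = Path("mmdeploy") / "demo" / "python" / "image_classification.py"
IMAGE = Path("mmclassification") / "demo" / "demo.JPEG"
RUNS = [("cpu", "work_dir/onnx/resnet"), ("cuda", "work_dir/trt/resnet")]


def demo_command(device, model_dir, image=IMAGE, script=DEMO):
    """Build the argument list: python <script> <device> <model_dir> <image>."""
    return [sys.executable, str(script), device, str(model_dir), str(image)]


def run_all(runs=RUNS):
    """Run the demo once per (device, model_dir) pair, stopping on failure."""
    for device, model_dir in runs:
        subprocess.run(demo_command(device, model_dir), check=True)
```

Calling `run_all()` from the working directory runs the ONNX Runtime demo first and then the TensorRT one. Using `sys.executable` keeps the demo in the same Python environment where `mmdeploy_python` was installed.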

### C SDK

The following describes how to use the SDK's C API for inference.

#### ONNXRuntime

1. Build examples

In the `mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1\sdk\example` directory:

```
# Path should be modified according to the actual location
mkdir build
cd build
cmake ..\cpp -A x64 -T v142 `
    -DOpenCV_DIR=C:\Deps\opencv\build\x64\vc15\lib `
    -DMMDeploy_DIR=C:\workspace\mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1\sdk\lib\cmake\MMDeploy `
    -DONNXRUNTIME_DIR=C:\Deps\onnxruntime\onnxruntime-win-gpu-x64-1.8.1

cmake --build . --config Release
```

2. Add environment variables or copy the runtime libraries to the directory containing the exe

:point_right: The goal is to let the exe find the required DLLs.

If you choose to add environment variables, add the runtime library path of `mmdeploy` (`mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1\sdk\bin`) to the `PATH`.

If you choose to copy the dynamic libraries, copy the DLLs from the bin directory into the directory containing the newly built exe (build/Release).
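
The copy option can be scripted so that it is easy to repeat after a rebuild. The following is a minimal sketch using only the standard library; `copy_dlls` is a hypothetical helper, and the two directories are whatever `sdk\bin` and `build\Release` resolve to on your machine.

```python
import shutil
from pathlib import Path


def copy_dlls(bin_dir, exe_dir):
    """Copy every *.dll in bin_dir into exe_dir and return the copied names."""
    exe_dir = Path(exe_dir)
    exe_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for dll in sorted(Path(bin_dir).glob("*.dll")):
        # copy2 overwrites an existing file and keeps timestamps.
        shutil.copy2(dll, exe_dir / dll.name)
        copied.append(dll.name)
    return copied
```

Copying keeps the exe self-contained, at the cost of needing to re-copy whenever the prebuilt package is updated; adding `sdk\bin` to `PATH` avoids that.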

3. Inference

It is recommended to use `CMD` here.

In the `mmdeploy-0.8.0-windows-amd64-onnxruntime1.8.1\sdk\example\build\Release` directory:

```
.\image_classification.exe cpu C:\workspace\work_dir\onnx\resnet\ C:\workspace\mmclassification\demo\demo.JPEG
```

#### TensorRT

1. Build examples

In the `mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0\sdk\example` directory:

```
# Path should be modified according to the actual location
mkdir build
cd build
cmake ..\cpp -A x64 -T v142 `
    -DOpenCV_DIR=C:\Deps\opencv\build\x64\vc15\lib `
    -DMMDeploy_DIR=C:\workspace\mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0\sdk\lib\cmake\MMDeploy `
    -DTENSORRT_DIR=C:\Deps\tensorrt\TensorRT-8.2.3.0 `
    -DCUDNN_DIR=C:\Deps\cudnn\8.2.1
cmake --build . --config Release
```

2. Add environment variables or copy the runtime libraries to the directory containing the exe

:point_right: The goal is to let the exe find the required DLLs.

If you choose to add environment variables, add the runtime library path of `mmdeploy` (`mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0\sdk\bin`) to the `PATH`.

If you choose to copy the dynamic libraries, copy the DLLs from the bin directory into the directory containing the newly built exe (build/Release).

3. Inference

It is recommended to use `CMD` here.

In the `mmdeploy-0.8.0-windows-amd64-cuda11.1-tensorrt8.2.3.0\sdk\example\build\Release` directory:

```
.\image_classification.exe cuda C:\workspace\work_dir\trt\resnet C:\workspace\mmclassification\demo\demo.JPEG
```

## Troubleshooting

If you encounter problems, please refer to the [FAQ](../faq.md).