Compare commits

45 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 390ba2fbb2 | |
| | d620552c2c | |
| | 41fa84a9a9 | |
| | 698782f920 | |
| | e60ab1dde3 | |
| | 8ec837814e | |
| | a4475f5eea | |
| | a8c74c346d | |
| | 9124ebf7a2 | |
| | 2e0ab7a922 | |
| | fc59364d64 | |
| | 4183cf0829 | |
| | cc3b74b5e8 | |
| | c9b59962d6 | |
| | 5e736b143b | |
| | 85c83ba616 | |
| | d1f1aabf81 | |
| | 66fb81f7b3 | |
| | acbc5e46dc | |
| | 9ecced821b | |
| | 39ed23fae8 | |
| | e258c84824 | |
| | 2c4516c622 | |
| | 447d3bba2c | |
| | 2fe0ecec3d | |
| | c423d0c1da | |
| | 9b98405672 | |
| | 4df682ba2d | |
| | ba5eed8409 | |
| | f79111ecc0 | |
| | b5f2d5860d | |
| | 02f80e8bdd | |
| | cd298e3086 | |
| | 396cac19cd | |
| | 3d8a611eec | |
| | 109cd44c7e | |
| | b51bf60964 | |
| | 4a50213c69 | |
| | e4600a6993 | |
| | 369f15e27a | |
| | 1398e4200e | |
| | 8e6fb12b1f | |
| | 671f3bcdf4 | |
| | efcd364124 | |
| | 504fa4f5cb | |

@@ -1,6 +1,8 @@
name: deploy

on: push
on:
- push
- workflow_dispatch

concurrency:
group: ${{ github.workflow }}-${{ github.ref }}

@@ -9,13 +11,14 @@ concurrency:
jobs:
build-n-publish:
runs-on: ubuntu-latest
if: startsWith(github.event.ref, 'refs/tags')
if: |
startsWith(github.event.ref, 'refs/tags') || github.event_name == 'workflow_dispatch'
steps:
- uses: actions/checkout@v2
- name: Set up Python 3.7
uses: actions/setup-python@v2
- uses: actions/checkout@v4
- name: Set up Python 3.10.13
uses: actions/setup-python@v4
with:
python-version: 3.7
python-version: 3.10.13
- name: Install wheel
run: pip install wheel
- name: Build MMEngine

@@ -27,13 +30,14 @@ jobs:

build-n-publish-lite:
runs-on: ubuntu-latest
if: startsWith(github.event.ref, 'refs/tags')
if: |
startsWith(github.event.ref, 'refs/tags') || github.event_name == 'workflow_dispatch'
steps:
- uses: actions/checkout@v2
- name: Set up Python 3.7
uses: actions/setup-python@v2
- uses: actions/checkout@v4
- name: Set up Python 3.10.13
uses: actions/setup-python@v4
with:
python-version: 3.7
python-version: 3.10.13
- name: Install wheel
run: pip install wheel
- name: Build MMEngine-lite
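The new `if:` condition lets both publish jobs run for a pushed tag or for a manual `workflow_dispatch` run. The sketch below models that condition in plain Python; it is an illustration, not GitHub's expression evaluator, and the function name `should_publish` is hypothetical. It lower-cases both values because GitHub Actions documents `startsWith` and string comparisons as case-insensitive.

```python
def should_publish(event_name: str, ref: str) -> bool:
    """Approximate the publish jobs' `if:` condition (sketch only).

    Mirrors: startsWith(github.event.ref, 'refs/tags')
             || github.event_name == 'workflow_dispatch'
    """
    # startsWith() in GitHub expressions ignores case, so compare lower-cased.
    is_tag_push = ref.lower().startswith("refs/tags")
    # String equality in GitHub expressions also ignores case.
    is_manual_run = event_name.lower() == "workflow_dispatch"
    return is_tag_push or is_manual_run


if __name__ == "__main__":
    print(should_publish("push", "refs/tags/v0.10.6"))              # tag push
    print(should_publish("push", "refs/heads/main"))                # branch push
    print(should_publish("workflow_dispatch", "refs/heads/main"))   # manual run
```

With the old condition, a manual run from a branch would have been skipped; the added `|| github.event_name == 'workflow_dispatch'` clause is what makes the last case publish.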

@@ -11,10 +11,10 @@ jobs:
runs-on: ubuntu-latest
steps:
- uses: actions/checkout@v2
- name: Set up Python 3.7
- name: Set up Python 3.10.15
uses: actions/setup-python@v2
with:
python-version: 3.7
python-version: '3.10.15'
- name: Install pre-commit hook
run: |
pip install pre-commit

@@ -1,5 +1,9 @@
name: pr_stage_test

env:
ACTIONS_ALLOW_USE_UNSECURE_NODE_VERSION: true

on:
pull_request:
paths-ignore:

@@ -21,152 +25,114 @@ concurrency:
jobs:
build_cpu:
runs-on: ubuntu-22.04
defaults:
run:
shell: bash -l {0}
strategy:
matrix:
python-version: [3.7]
include:
- torch: 1.8.1
torchvision: 0.9.1
python-version: ['3.9']
torch: ['2.0.0']
steps:
- uses: actions/checkout@v3
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
- name: Check out repo
uses: actions/checkout@v3
- name: Setup conda env
uses: conda-incubator/setup-miniconda@v2
with:
auto-update-conda: true
miniconda-version: "latest"
use-only-tar-bz2: true
activate-environment: test
python-version: ${{ matrix.python-version }}
- name: Upgrade pip
run: python -m pip install pip --upgrade
- name: Upgrade wheel
run: python -m pip install wheel --upgrade
- name: Install PyTorch
run: pip install torch==${{matrix.torch}}+cpu torchvision==${{matrix.torchvision}}+cpu -f https://download.pytorch.org/whl/cpu/torch_stable.html
- name: Build MMEngine from source
run: pip install -e . -v
- name: Install unit tests dependencies
- name: Update pip
run: |
pip install -r requirements/tests.txt
pip install openmim
mim install mmcv
- name: Run unittests and generate coverage report
python -m pip install --upgrade pip wheel
- name: Install dependencies
run: |
coverage run --branch --source mmengine -m pytest tests/ --ignore tests/test_dist
coverage xml
coverage report -m
# Upload coverage report for python3.7 && pytorch1.8.1 cpu
- name: Upload coverage to Codecov
apt-get update && apt-get install -y ffmpeg libsm6 libxext6 git ninja-build libglib2.0-0 libsm6 libxrender-dev libxext6
python -m pip install torch==${{matrix.torch}}
python -m pip install -e . -v
python -m pip install -r requirements/tests.txt
python -m pip install openmim
mim install mmcv coverage
- name: Run unit tests with coverage
run: coverage run --branch --source mmengine -m pytest tests/ --ignore tests/test_dist
- name: Upload Coverage to Codecov
uses: codecov/codecov-action@v3
with:
file: ./coverage.xml
flags: unittests
env_vars: OS,PYTHON
name: codecov-umbrella
fail_ci_if_error: false

build_cu102:
build_gpu:
runs-on: ubuntu-22.04
container:
image: pytorch/pytorch:1.8.1-cuda10.2-cudnn7-devel
defaults:
run:
shell: bash -l {0}
env:
MKL_THREADING_LAYER: GNU
strategy:
matrix:
python-version: [3.7]
python-version: ['3.9','3.10']
torch: ['2.0.0','2.3.1','2.5.1']
cuda: ['cu118']
steps:
- uses: actions/checkout@v3
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
- name: Check out repo
uses: actions/checkout@v3
- name: Setup conda env
uses: conda-incubator/setup-miniconda@v2
with:
auto-update-conda: true
miniconda-version: "latest"
use-only-tar-bz2: true
activate-environment: test
python-version: ${{ matrix.python-version }}
- name: Upgrade pip
run: pip install pip --upgrade
- name: Fetch GPG keys
- name: Update pip
run: |
apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/3bf863cc.pub
apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1804/x86_64/7fa2af80.pub
- name: Install system dependencies
run: apt-get update && apt-get install -y ffmpeg libsm6 libxext6 git ninja-build libglib2.0-0 libsm6 libxrender-dev libxext6
- name: Build MMEngine from source
run: pip install -e . -v
- name: Install unit tests dependencies
python -m pip install --upgrade pip wheel
- name: Install dependencies
run: |
pip install -r requirements/tests.txt
pip install openmim
mim install mmcv
- name: Run unittests and generate coverage report
run: |
coverage run --branch --source mmengine -m pytest tests/ --ignore tests/test_dist
coverage xml
coverage report -m
apt-get update && apt-get install -y ffmpeg libsm6 libxext6 git ninja-build libglib2.0-0 libsm6 libxrender-dev libxext6
python -m pip install torch==${{matrix.torch}} --index-url https://download.pytorch.org/whl/${{matrix.cuda}}
python -m pip install -e . -v
python -m pip install -r requirements/tests.txt
python -m pip install openmim
mim install mmcv coverage
- name: Run unit tests with coverage
run: coverage run --branch --source mmengine -m pytest tests/ --ignore tests/test_dist
- name: Upload Coverage to Codecov
uses: codecov/codecov-action@v3

build_cu117:
runs-on: ubuntu-22.04
container:
image: pytorch/pytorch:2.0.0-cuda11.7-cudnn8-devel
strategy:
matrix:
python-version: [3.9]
steps:
- uses: actions/checkout@v3
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
with:
python-version: ${{ matrix.python-version }}
- name: Upgrade pip
run: pip install pip --upgrade
- name: Fetch GPG keys
run: |
apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/3bf863cc.pub
apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1804/x86_64/7fa2af80.pub
- name: Install system dependencies
run: apt-get update && apt-get install -y git ffmpeg libturbojpeg
- name: Build MMEngine from source
run: pip install -e . -v
- name: Install unit tests dependencies
run: |
pip install -r requirements/tests.txt
pip install openmim
mim install mmcv
# Distributed related unit test may randomly error in PyTorch 1.13.0
- name: Run unittests and generate coverage report
run: |
coverage run --branch --source mmengine -m pytest tests/ --ignore tests/test_dist/
coverage xml
coverage report -m

build_windows:
runs-on: windows-2022
strategy:
matrix:
python-version: [3.7]
platform: [cpu, cu111]
torch: [1.8.1]
torchvision: [0.9.1]
include:
- python-version: 3.8
platform: cu118
torch: 2.1.0
torchvision: 0.16.0
steps:
- uses: actions/checkout@v3
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
with:
python-version: ${{ matrix.python-version }}
- name: Upgrade pip
# Windows CI could fail If we call `pip install pip --upgrade` directly.
run: python -m pip install pip --upgrade
- name: Install PyTorch
run: pip install torch==${{matrix.torch}}+${{matrix.platform}} torchvision==${{matrix.torchvision}}+${{matrix.platform}} -f https://download.pytorch.org/whl/${{matrix.platform}}/torch_stable.html
- name: Build MMEngine from source
run: pip install -e . -v
- name: Install unit tests dependencies
run: |
pip install -r requirements/tests.txt
pip install openmim
mim install mmcv
- name: Run CPU unittests
run: pytest tests/ --ignore tests/test_dist
if: ${{ matrix.platform == 'cpu' }}
- name: Run GPU unittests
# Skip testing distributed related unit tests since the memory of windows CI is limited
run: pytest tests/ --ignore tests/test_dist --ignore tests/test_optim/test_optimizer/test_optimizer_wrapper.py --ignore tests/test_model/test_wrappers/test_model_wrapper.py --ignore tests/test_hooks/test_sync_buffers_hook.py
if: ${{ matrix.platform == 'cu111' }} || ${{ matrix.platform == 'cu118' }}
# build_windows:
# runs-on: windows-2022
# strategy:
# matrix:
# python-version: [3.9]
# platform: [cpu, cu111]
# torch: [1.8.1]
# torchvision: [0.9.1]
# include:
# - python-version: 3.8
# platform: cu118
# torch: 2.1.0
# torchvision: 0.16.0
# steps:
# - uses: actions/checkout@v3
# - name: Set up Python ${{ matrix.python-version }}
# uses: actions/setup-python@v4
# with:
# python-version: ${{ matrix.python-version }}
# - name: Upgrade pip
# # Windows CI could fail If we call `pip install pip --upgrade` directly.
# run: python -m pip install pip wheel --upgrade
# - name: Install PyTorch
# run: pip install torch==${{matrix.torch}}+${{matrix.platform}} torchvision==${{matrix.torchvision}}+${{matrix.platform}} -f https://download.pytorch.org/whl/${{matrix.platform}}/torch_stable.html
# - name: Build MMEngine from source
# run: pip install -e . -v
# - name: Install unit tests dependencies
# run: |
# pip install -r requirements/tests.txt
# pip install openmim
# mim install mmcv
# - name: Run CPU unittests
# run: pytest tests/ --ignore tests/test_dist
# if: ${{ matrix.platform == 'cpu' }}
# - name: Run GPU unittests
# # Skip testing distributed related unit tests since the memory of windows CI is limited
# run: pytest tests/ --ignore tests/test_dist --ignore tests/test_optim/test_optimizer/test_optimizer_wrapper.py --ignore tests/test_model/test_wrappers/test_model_wrapper.py --ignore tests/test_hooks/test_sync_buffers_hook.py
# if: ${{ matrix.platform == 'cu111' }} || ${{ matrix.platform == 'cu118' }}
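The new `build_gpu` matrix lists two Python versions, three PyTorch versions and one CUDA tag. With no `include`/`exclude` entries, GitHub Actions expands such a matrix into the Cartesian product, so this should yield 2 × 3 × 1 = 6 jobs, each installing PyTorch from the CUDA-specific index URL. The sketch below reproduces that expansion with `itertools.product`; the helper names `expand_matrix` and `torch_install_cmd` are hypothetical, and `include`/`exclude` handling is deliberately not modelled.

```python
from itertools import product

# Axis values copied from the new build_gpu matrix.
MATRIX = {
    "python-version": ["3.9", "3.10"],
    "torch": ["2.0.0", "2.3.1", "2.5.1"],
    "cuda": ["cu118"],
}


def expand_matrix(matrix):
    """Return one dict per job: the Cartesian product of all matrix axes."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[k] for k in keys))]


def torch_install_cmd(job):
    """Render the workflow's PyTorch install line for a single matrix job."""
    return (
        f"python -m pip install torch=={job['torch']} "
        f"--index-url https://download.pytorch.org/whl/{job['cuda']}"
    )


if __name__ == "__main__":
    jobs = expand_matrix(MATRIX)
    print(len(jobs))  # 6
    for job in jobs:
        print(job["python-version"], torch_install_cmd(job))
```

Adding another CUDA tag to the `cuda` axis would double the job count, which is worth keeping in mind for CI cost.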

10 .owners.yml

@@ -1,10 +0,0 @@
assign:
strategy:
# random
daily-shift-based
scedule:
'*/1 * * * *'
assignees:
- zhouzaida
- HAOCHENYE
- C1rN09

@@ -1,7 +1,11 @@
exclude: ^tests/data/
repos:
- repo: https://gitee.com/openmmlab/mirrors-flake8
rev: 5.0.4
- repo: https://github.com/pre-commit/pre-commit
rev: v4.0.0
hooks:
- id: validate_manifest
- repo: https://github.com/PyCQA/flake8
rev: 7.1.1
hooks:
- id: flake8
- repo: https://gitee.com/openmmlab/mirrors-isort

@@ -13,7 +17,7 @@ repos:
hooks:
- id: yapf
- repo: https://gitee.com/openmmlab/mirrors-pre-commit-hooks
rev: v4.3.0
rev: v5.0.0
hooks:
- id: trailing-whitespace
- id: check-yaml

@@ -55,7 +59,7 @@ repos:
args: ["mmengine", "tests"]
- id: remove-improper-eol-in-cn-docs
- repo: https://gitee.com/openmmlab/mirrors-mypy
rev: v0.812
rev: v1.2.0
hooks:
- id: mypy
exclude: |-

@@ -63,3 +67,4 @@ repos:
^examples
| ^docs
)
additional_dependencies: ["types-setuptools", "types-requests", "types-PyYAML"]

@@ -1,7 +1,11 @@
exclude: ^tests/data/
repos:
- repo: https://github.com/pre-commit/pre-commit
rev: v4.0.0
hooks:
- id: validate_manifest
- repo: https://github.com/PyCQA/flake8
rev: 5.0.4
rev: 7.1.1
hooks:
- id: flake8
- repo: https://github.com/PyCQA/isort

@@ -13,7 +17,7 @@ repos:
hooks:
- id: yapf
- repo: https://github.com/pre-commit/pre-commit-hooks
rev: v4.3.0
rev: v5.0.0
hooks:
- id: trailing-whitespace
- id: check-yaml

@@ -34,12 +38,8 @@ repos:
- mdformat-openmmlab
- mdformat_frontmatter
- linkify-it-py
- repo: https://github.com/codespell-project/codespell
rev: v2.2.1
hooks:
- id: codespell
- repo: https://github.com/myint/docformatter
rev: v1.3.1
rev: 06907d0
hooks:
- id: docformatter
args: ["--in-place", "--wrap-descriptions", "79"]

@@ -55,7 +55,7 @@ repos:
args: ["mmengine", "tests"]
- id: remove-improper-eol-in-cn-docs
- repo: https://github.com/pre-commit/mirrors-mypy
rev: v0.812
rev: v1.2.0
hooks:
- id: mypy
exclude: |-

@@ -63,3 +63,4 @@ repos:
^examples
| ^docs
)
additional_dependencies: ["types-setuptools", "types-requests", "types-PyYAML"]

84 CODEOWNERS

@@ -1,84 +0,0 @@
# IMPORTANT:
# This file is ONLY used to subscribe for notifications for PRs
# related to a specific file path, and each line is a file pattern followed by
# one or more owners.

# Order is important; the last matching pattern takes the most
# precedence.

# These owners will be the default owners for everything in
# the repo. Unless a later match takes precedence,
# @global-owner1 and @global-owner2 will be requested for
# review when someone opens a pull request.
* @zhouzaida @HAOCHENYE

# Docs
/docs/ @C1rN09
*.rst @zhouzaida @HAOCHENYE

# mmengine file
# config
/mmengine/config/ @HAOCHENYE

# dataset
/mmengine/dataset/ @HAOCHENYE

# device
/mmengine/device/ @zhouzaida

# dist
/mmengine/dist/ @zhouzaida @C1rN09

# evaluator
/mmengine/evaluator/ @RangiLyu @C1rN09

# fileio
/mmengine/fileio/ @zhouzaida

# hooks
/mmengine/hooks/ @zhouzaida @HAOCHENYE
/mmengine/hooks/ema_hook.py @RangiLyu

# hub
/mmengine/hub/ @HAOCHENYE @zhouzaida

# logging
/mmengine/logging/ @HAOCHENYE

# model
/mmengine/model/ @HAOCHENYE @C1rN09
/mmengine/model/averaged_model.py @RangiLyu
/mmengine/model/wrappers/fully_sharded_distributed.py @C1rN09

# optim
/mmengine/optim/ @HAOCHENYE
/mmengine/optim/scheduler/ @RangiLyu

# registry
/mmengine/registry/ @C1rN09 @HAOCHENYE

# runner
/mmengine/runner/ @zhouzaida @RangiLyu @HAOCHENYE
/mmengine/runner/amp.py @HAOCHENYE
/mmengine/runner/log_processor.py @HAOCHENYE
/mmengine/runner/checkpoint.py @zhouzaida @C1rN09
/mmengine/runner/priority.py @zhouzaida
/mmengine/runner/utils.py @zhouzaida @HAOCHENYE

# structure
/mmengine/structures/ @Harold-lkk @HAOCHENYE

# testing
/mmengine/testing/ @zhouzaida

# utils
/mmengine/utils/ @HAOCHENYE @zhouzaida

# visualization
/mmengine/visualization/ @Harold-lkk @HAOCHENYE

# version
/mmengine/__version__.py @zhouzaida

# unit test
/tests/ @zhouzaida @HAOCHENYE
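The deleted CODEOWNERS file states that the last matching pattern takes precedence. The sketch below illustrates that last-match-wins rule on a few of its entries. The matcher `pattern_matches` and the helper `owners_for` are hypothetical and deliberately simplified: they handle only `*`, root-anchored paths, directory patterns ending in `/`, and slash-free globs such as `*.rst`, not GitHub's full gitignore-style syntax.

```python
from fnmatch import fnmatchcase

# A subset of the deleted CODEOWNERS rules, kept in file order.
RULES = [
    ("*", ["@zhouzaida", "@HAOCHENYE"]),
    ("/docs/", ["@C1rN09"]),
    ("*.rst", ["@zhouzaida", "@HAOCHENYE"]),
    ("/mmengine/hooks/", ["@zhouzaida", "@HAOCHENYE"]),
    ("/mmengine/hooks/ema_hook.py", ["@RangiLyu"]),
]


def pattern_matches(pattern, path):
    """Simplified matcher; `path` is repo-relative with no leading slash."""
    if pattern == "*":
        return True
    if pattern.startswith("/"):
        anchored = pattern[1:]
        if anchored.endswith("/"):
            # Directory pattern: matches every file underneath it.
            return path.startswith(anchored)
        return path == anchored
    # Slash-free glob: compare against the file name at any depth.
    return fnmatchcase(path.rsplit("/", 1)[-1], pattern)


def owners_for(path, rules=RULES):
    """Return the owners of the LAST matching rule (later rules win)."""
    owners = []
    for pattern, rule_owners in rules:
        if pattern_matches(pattern, path):
            owners = rule_owners
    return owners


if __name__ == "__main__":
    print(owners_for("mmengine/hooks/ema_hook.py"))
    print(owners_for("docs/en/index.rst"))
    print(owners_for("docs/en/conf.py"))
    print(owners_for("setup.py"))
```

Note how `docs/en/index.rst` matches both `/docs/` and `*.rst`; because `*.rst` appears later in the file, its owners win.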

70 README.md

@@ -19,13 +19,14 @@
<div> </div>

[](https://pypi.org/project/mmengine/)
[](#installation)
[](https://pypi.org/project/mmengine)
[](https://github.com/open-mmlab/mmengine/blob/main/LICENSE)
[](https://github.com/open-mmlab/mmengine/issues)
[](https://github.com/open-mmlab/mmengine/issues)

[Introduction](#introduction) |
[Installation](#installation) |
[Get Started](#get-started) |
[📘Documentation](https://mmengine.readthedocs.io/en/latest/) |
[🛠️Installation](https://mmengine.readthedocs.io/en/latest/get_started/installation.html) |
[🤔Reporting Issues](https://github.com/open-mmlab/mmengine/issues/new/choose)

</div>

@@ -58,58 +59,53 @@ English | [简体中文](README_zh-CN.md)

## What's New

v0.10.1 was released on 2023-11-22.
v0.10.6 was released on 2025-01-13.

Highlights:

- Support installing mmengine-lite with no dependency on opencv. Refer to the [Installation](https://mmengine.readthedocs.io/en/latest/get_started/installation.html#install-mmengine) for more details.
- Support custom `artifact_location` in MLflowVisBackend [#1505](#1505)
- Enable `exclude_frozen_parameters` for `DeepSpeedEngine._zero3_consolidated_16bit_state_dict` [#1517](#1517)

- Support training with [ColossalAI](https://colossalai.org/). Refer to the [Training Large Models](https://mmengine.readthedocs.io/en/latest/common_usage/large_model_training.html#colossalai) for more detailed usages.

- Support gradient checkpointing. Refer to the [Save Memory on GPU](https://mmengine.readthedocs.io/en/latest/common_usage/save_gpu_memory.html#gradient-checkpointing) for more details.

- Supports multiple visualization backends, including `NeptuneVisBackend`, `DVCLiveVisBackend` and `AimVisBackend`. Refer to [Visualization Backends](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html) for more details.

Read [Changelog](./docs/en/notes/changelog.md#v0101-22112023) for more details.

## Table of Contents

- [Introduction](#introduction)
- [Installation](#installation)
- [Get Started](#get-started)
- [Learn More](#learn-more)
- [Contributing](#contributing)
- [Citation](#citation)
- [License](#license)
- [Ecosystem](#ecosystem)
- [Projects in OpenMMLab](#projects-in-openmmlab)
Read [Changelog](./docs/en/notes/changelog.md#v0104-2342024) for more details.

## Introduction

MMEngine is a foundational library for training deep learning models based on PyTorch. It provides a solid engineering foundation and frees developers from writing redundant codes on workflows. It serves as the training engine of all OpenMMLab codebases, which support hundreds of algorithms in various research areas. Moreover, MMEngine is also generic to be applied to non-OpenMMLab projects.
MMEngine is a foundational library for training deep learning models based on PyTorch. It serves as the training engine of all OpenMMLab codebases, which support hundreds of algorithms in various research areas. Moreover, MMEngine is also generic to be applied to non-OpenMMLab projects. Its highlights are as follows:

Major features:
**Integrate mainstream large-scale model training frameworks**

1. **A universal and powerful runner**:
- [ColossalAI](https://mmengine.readthedocs.io/en/latest/common_usage/large_model_training.html#colossalai)
- [DeepSpeed](https://mmengine.readthedocs.io/en/latest/common_usage/large_model_training.html#deepspeed)
- [FSDP](https://mmengine.readthedocs.io/en/latest/common_usage/large_model_training.html#fullyshardeddataparallel-fsdp)

- Supports training different tasks with a small amount of code, e.g., ImageNet can be trained with only 80 lines of code (400 lines of the original PyTorch example).
- Easily compatible with models from popular algorithm libraries such as TIMM, TorchVision, and Detectron2.
**Supports a variety of training strategies**

2. **Open architecture with unified interfaces**:
- [Mixed Precision Training](https://mmengine.readthedocs.io/en/latest/common_usage/speed_up_training.html#mixed-precision-training)
- [Gradient Accumulation](https://mmengine.readthedocs.io/en/latest/common_usage/save_gpu_memory.html#gradient-accumulation)
- [Gradient Checkpointing](https://mmengine.readthedocs.io/en/latest/common_usage/save_gpu_memory.html#gradient-checkpointing)

- Handles different algorithm tasks with unified APIs, e.g., implement a method and apply it to all compatible models.
- Provides a unified abstraction for upper-level algorithm libraries, which supports various back-end devices such as Nvidia CUDA, Mac MPS, AMD, MLU, and more for model training.
**Provides a user-friendly configuration system**

3. **Customizable training process**:
- [Pure Python-style configuration files, easy to navigate](https://mmengine.readthedocs.io/en/latest/advanced_tutorials/config.html#a-pure-python-style-configuration-file-beta)
- [Plain-text-style configuration files, supporting JSON and YAML](https://mmengine.readthedocs.io/en/latest/advanced_tutorials/config.html)

- Defines the training process just like playing with Legos.
- Provides rich components and strategies.
- Complete controls on the training process with different levels of APIs.
**Covers mainstream training monitoring platforms**

- [TensorBoard](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#tensorboard) | [WandB](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#wandb) | [MLflow](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#mlflow-wip)
- [ClearML](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#clearml) | [Neptune](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#neptune) | [DVCLive](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#dvclive) | [Aim](https://mmengine.readthedocs.io/en/latest/common_usage/visualize_training_log.html#aim)

## Installation

<details>
<summary>Supported PyTorch Versions</summary>

| MMEngine | PyTorch | Python |
| ------------------ | ------------ | -------------- |
| main | >=1.6 \<=2.1 | >=3.8, \<=3.11 |
| >=0.9.0, \<=0.10.4 | >=1.6 \<=2.1 | >=3.8, \<=3.11 |

</details>

Before installing MMEngine, please ensure that PyTorch has been successfully installed following the [official guide](https://pytorch.org/get-started/locally/).

Install MMEngine

@@ -19,13 +19,14 @@
<div> </div>

[](https://pypi.org/project/mmengine/)
[](#安装)
[](https://pypi.org/project/mmengine)
[](https://github.com/open-mmlab/mmengine/blob/main/LICENSE)
[](https://github.com/open-mmlab/mmengine/issues)
[](https://github.com/open-mmlab/mmengine/issues)

[📘使用文档](https://mmengine.readthedocs.io/zh_CN/latest/) |
[🛠️安装教程](https://mmengine.readthedocs.io/zh_CN/latest/get_started/installation.html) |
[简介](#简介) |
[安装](#安装) |
[快速上手](#快速上手) |
[📘用户文档](https://mmengine.readthedocs.io/zh_CN/latest/) |
[🤔报告问题](https://github.com/open-mmlab/mmengine/issues/new/choose)

</div>

@@ -58,59 +59,58 @@

## 最近进展

最新版本 v0.10.1 在 2023.11.22 发布。
最新版本 v0.10.5 在 2024.9.11 发布。

亮点:
版本亮点:

- 支持安装不依赖于 opencv 的 mmengine-lite 版本。可阅读[安装文档](https://mmengine.readthedocs.io/zh-cn/latest/get_started/installation.html#mmengine)了解用法。
- 支持在 MLFlowVisBackend 中自定义 `artifact_location` [#1505](#1505)
- 支持在 `DeepSpeedEngine._zero3_consolidated_16bit_state_dict` 使用 `exclude_frozen_parameters` [#1517](#1517)

- 支持使用 [ColossalAI](https://colossalai.org/) 进行训练。可阅读[大模型训练](https://mmengine.readthedocs.io/zh_CN/latest/common_usage/large_model_training.html#colossalai)了解用法。

- 支持梯度检查点。详见[用法](https://mmengine.readthedocs.io/zh_CN/latest/common_usage/save_gpu_memory.html#id3)。

- 支持多种可视化后端,包括`NeptuneVisBackend`、`DVCLiveVisBackend` 和 `AimVisBackend`。可阅读[可视化后端](https://mmengine.readthedocs.io/zh_CN/latest/common_usage/visualize_training_log.html)了解用法。

如果想了解更多版本更新细节和历史信息,请阅读[更新日志](./docs/en/notes/changelog.md#v0101-22112023)

## 目录

- [简介](#简介)
- [安装](#安装)
- [快速上手](#快速上手)
- [了解更多](#了解更多)
- [贡献指南](#贡献指南)
- [引用](#引用)
- [开源许可证](#开源许可证)
- [生态项目](#生态项目)
- [OpenMMLab 的其他项目](#openmmlab-的其他项目)
- [欢迎加入 OpenMMLab 社区](#欢迎加入-openmmlab-社区)
如果想了解更多版本更新细节和历史信息,请阅读[更新日志](./docs/en/notes/changelog.md#v0104-2342024)。

## 简介

MMEngine 是一个基于 PyTorch 实现的,用于训练深度学习模型的基础库。它为开发人员提供了坚实的工程基础,以此避免在工作流上编写冗余代码。作为 OpenMMLab 所有代码库的训练引擎,其在不同研究领域支持了上百个算法。此外,MMEngine 也可以用于非 OpenMMLab 项目中。
MMEngine 是一个基于 PyTorch 实现的,用于训练深度学习模型的基础库。它作为 OpenMMLab 所有代码库的训练引擎,其在不同研究领域支持了上百个算法。此外,MMEngine 也可以用于非 OpenMMLab 项目中。它的亮点如下:

主要特性:
**集成主流的大模型训练框架**

1. **通用且强大的执行器**:
- [ColossalAI](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/large_model_training.html#colossalai)
- [DeepSpeed](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/large_model_training.html#deepspeed)
- [FSDP](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/large_model_training.html#fullyshardeddataparallel-fsdp)

- 支持用少量代码训练不同的任务,例如仅使用 80 行代码就可以训练 ImageNet(原始 PyTorch 示例需要 400 行)。
- 轻松兼容流行的算法库(如 TIMM、TorchVision 和 Detectron2)中的模型。
**支持丰富的训练策略**

2. **接口统一的开放架构**:
- [混合精度训练(Mixed Precision Training)](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/speed_up_training.html#id3)
- [梯度累积(Gradient Accumulation)](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/save_gpu_memory.html#id2)
- [梯度检查点(Gradient Checkpointing)](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/save_gpu_memory.html#id3)

- 使用统一的接口处理不同的算法任务,例如,实现一个方法并应用于所有的兼容性模型。
- 上下游的对接更加统一便捷,在为上层算法库提供统一抽象的同时,支持多种后端设备。目前 MMEngine 支持 Nvidia CUDA、Mac MPS、AMD、MLU 等设备进行模型训练。
**提供易用的配置系统**

3. **可定制的训练流程**:
- [纯 Python 风格的配置文件,易于跳转](https://mmengine.readthedocs.io/zh-cn/latest/advanced_tutorials/config.html#python-beta)
- [纯文本风格的配置文件,支持 JSON 和 YAML](https://mmengine.readthedocs.io/zh-cn/latest/advanced_tutorials/config.html#id1)

- 定义了“乐高”式的训练流程。
- 提供了丰富的组件和策略。
- 使用不同等级的 API 控制训练过程。
**覆盖主流的训练监测平台**

- [TensorBoard](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#tensorboard) | [WandB](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#wandb) | [MLflow](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#mlflow-wip)
- [ClearML](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#clearml) | [Neptune](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#neptune) | [DVCLive](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#dvclive) | [Aim](https://mmengine.readthedocs.io/zh-cn/latest/common_usage/visualize_training_log.html#aim)

**兼容主流的训练芯片**

- 英伟达 CUDA | 苹果 MPS
- 华为 Ascend | 寒武纪 MLU | 摩尔线程 MUSA

## 安装

<details>
<summary>支持的 PyTorch 版本</summary>

| MMEngine | PyTorch | Python |
| ------------------ | ------------ | -------------- |
| main | >=1.6 \<=2.1 | >=3.8, \<=3.11 |
| >=0.9.0, \<=0.10.4 | >=1.6 \<=2.1 | >=3.8, \<=3.11 |

</details>

在安装 MMEngine 之前,请确保 PyTorch 已成功安装在环境中,可以参考 [PyTorch 官方安装文档](https://pytorch.org/get-started/locally/)。

安装 MMEngine

@ -3,6 +3,9 @@ version: 2

formats:
  - epub

sphinx:
  configuration: docs/en/conf.py

# Set the version of Python and other tools you might need
build:
  os: ubuntu-22.04


@ -1008,7 +1008,7 @@ In this section, we use MMDetection to demonstrate how to migrate the abstract d

### 1. Simplify the module interface

A detector's external interface can be significantly simplified and unified. When training single-stage detection and segmentation algorithms in MMDet 2.x, `SingleStageDetector` requires `img`, `img_metas`, `gt_bboxes`, `gt_labels`, and `gt_bboxes_ignore` as inputs, whereas `SingleStageInstanceSegmentor` additionally requires `gt_masks`. This makes the training interfaces inconsistent and limits flexibility.
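
To illustrate the direction of this refactor, the sketch below packs all per-image annotations into one data-sample object, so that a detector and an instance segmentor can expose the same `loss(inputs, data_samples)` signature. `ToyDataSample`, `ToyDetector`, and `ToyInstanceSegmentor` are hypothetical stand-ins written for this example, not MMDetection classes, and the returned "losses" are placeholder numbers rather than real loss values.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class ToyDataSample:
    """Hypothetical container bundling all annotations of one image."""
    img_meta: Dict[str, Any] = field(default_factory=dict)
    gt_bboxes: List[List[float]] = field(default_factory=list)
    gt_labels: List[int] = field(default_factory=list)
    gt_masks: Optional[List[Any]] = None  # only segmentation models read this


class ToyDetector:
    def loss(self, inputs: List[Any], data_samples: List[ToyDataSample]) -> Dict[str, float]:
        # A detector only reads boxes (and labels) from each data sample.
        # Placeholder "loss": the number of boxes, not a real loss value.
        num_boxes = sum(len(s.gt_bboxes) for s in data_samples)
        return {'loss_bbox': float(num_boxes)}


class ToyInstanceSegmentor(ToyDetector):
    def loss(self, inputs: List[Any], data_samples: List[ToyDataSample]) -> Dict[str, float]:
        # Same signature as the detector; masks travel inside the data samples.
        losses = super().loss(inputs, data_samples)
        num_masks = sum(len(s.gt_masks or []) for s in data_samples)
        losses['loss_mask'] = float(num_masks)  # placeholder as well
        return losses


samples = [ToyDataSample(gt_bboxes=[[0.0, 0.0, 10.0, 10.0]], gt_labels=[1], gt_masks=['mask0'])]
for model in (ToyDetector(), ToyInstanceSegmentor()):
    # The same call works for both models.
    print(type(model).__name__, model.loss(['img0'], samples))
# ToyDetector {'loss_bbox': 1.0}
# ToyInstanceSegmentor {'loss_bbox': 1.0, 'loss_mask': 1.0}
```

Adding a new annotation type then means adding a field to the data sample rather than changing every model's method signature.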


```python
class SingleStageDetector(BaseDetector):


@ -4,7 +4,7 @@ This document provides some third-party optimizers supported by MMEngine, which

## D-Adaptation

[D-Adaptation](https://github.com/facebookresearch/dadaptation) provides the `DAdaptAdaGrad`, `DAdaptAdam`, and `DAdaptSGD` optimizers.

```{note}
If you use the optimizers provided by D-Adaptation, you need to upgrade MMEngine to `0.6.0` or later.


@ -35,7 +35,7 @@ runner.train()

## Lion-Pytorch

[lion-pytorch](https://github.com/lucidrains/lion-pytorch) provides the `Lion` optimizer.

```{note}
If you use the optimizer provided by Lion-Pytorch, you need to upgrade MMEngine to `0.6.0` or later.


@ -93,7 +93,7 @@ runner.train()

## bitsandbytes

[bitsandbytes](https://github.com/TimDettmers/bitsandbytes) provides the `AdamW8bit`, `Adam8bit`, `Adagrad8bit`, `PagedAdam8bit`, `PagedAdamW8bit`, `LAMB8bit`, `LARS8bit`, `RMSprop8bit`, `Lion8bit`, `PagedLion8bit`, and `SGD8bit` optimizers.

```{note}
If you use the optimizers provided by bitsandbytes, you need to upgrade MMEngine to `0.9.0` or later.


@ -124,7 +124,7 @@ runner.train()

## transformers

[transformers](https://github.com/huggingface/transformers) provides the `Adafactor` optimizer.

```{note}
If you use the optimizer provided by transformers, you need to upgrade MMEngine to `0.9.0` or later.


@ -30,7 +30,7 @@ train_dataloader = dict(

        type=dataset_type,
        data_prefix='data/cifar10',
        test_mode=False,
        indices=5000,  # with indices=5000, each epoch only iterates over the first 5000 samples
        pipeline=train_pipeline),
    sampler=dict(type='DefaultSampler', shuffle=True),
)


@ -26,7 +26,7 @@ On the first machine:

```bash
python -m torch.distributed.launch \
    --nnodes 2 \
    --node_rank 0 \
    --master_addr 127.0.0.1 \
    --master_port 29500 \


@ -38,9 +38,9 @@ On the second machine:

```bash
python -m torch.distributed.launch \
    --nnodes 2 \
    --node_rank 1 \
    --master_addr "ip_of_the_first_machine" \
    --master_port 29500 \
    --nproc_per_node=8 \
    examples/distributed_training.py --launcher pytorch


@ -87,10 +87,10 @@ OpenMMLab requires the `inferencer(img)` to output a `dict` containing two field

When performing inference, the following steps are typically executed:

1. preprocess: Input data preprocessing, including data reading, data preprocessing, data format conversion, etc.
2. forward: Execute `model.forward`.
3. visualize: Visualization of predicted results.
4. postprocess: Post-processing of predicted results, including result format conversion, exporting predicted results, etc.

To make the inferencer easier to use, we do not want users to configure parameters for each step separately. Instead, users only pass parameters to the `__call__` interface and can complete inference without being aware of the steps above.

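The flow above can be sketched with a hypothetical, dependency-free `ToyInferencer`; it is not MMEngine's `BaseInferencer`, only an illustration under that assumption. `__call__` wraps a single input into a list, routes each keyword argument to the step whose `*_kwargs` set names it, runs `preprocess` as a generator of batches, and returns a dict with `predictions` and `visualization` keys. The step bodies are placeholders (a multiplication stands in for `model.forward`).

```python
from typing import Any, Dict, Iterator, List, Optional


class ToyInferencer:
    """Hypothetical inferencer: preprocess -> forward -> visualize -> postprocess."""

    # Names of the __call__ keyword arguments routed to each step.
    preprocess_kwargs = {'batch_size'}
    forward_kwargs = {'scale'}
    visualize_kwargs = {'show'}
    postprocess_kwargs = {'return_datasample'}

    def _dispatch_kwargs(self, **kwargs: Any) -> List[Dict[str, Any]]:
        groups = (self.preprocess_kwargs, self.forward_kwargs,
                  self.visualize_kwargs, self.postprocess_kwargs)
        unknown = set(kwargs) - set().union(*groups)
        if unknown:
            raise ValueError(f'Unknown arguments: {sorted(unknown)}')
        return [{k: v for k, v in kwargs.items() if k in group} for group in groups]

    def preprocess(self, inputs: List[Any], batch_size: int = 1) -> Iterator[List[Any]]:
        # A generator: yields the inputs in chunks of `batch_size`.
        for start in range(0, len(inputs), batch_size):
            yield inputs[start:start + batch_size]

    def forward(self, batch: List[float], scale: float = 1.0) -> List[float]:
        # Placeholder for `model.forward`: scale every input value.
        return [scale * x for x in batch]

    def visualize(self, inputs: List[Any], preds: List[float],
                  show: bool = False) -> Optional[List[str]]:
        # Placeholder visualization: one text rendering per sample, or None.
        if not show:
            return None
        return [f'{x} -> {p}' for x, p in zip(inputs, preds)]

    def postprocess(self, preds: List[float], visualization: Optional[List[str]],
                    return_datasample: bool = False) -> Dict[str, Any]:
        # Unless return_datasample is True, convert each prediction to a dict.
        predictions = preds if return_datasample else [{'pred': p} for p in preds]
        return {'predictions': predictions, 'visualization': visualization}

    def __call__(self, inputs: Any, **kwargs: Any) -> Dict[str, Any]:
        if not isinstance(inputs, list):
            inputs = [inputs]  # a single input is wrapped into a list
        pre_kw, fwd_kw, vis_kw, post_kw = self._dispatch_kwargs(**kwargs)
        preds: List[float] = []
        for batch in self.preprocess(inputs, **pre_kw):
            preds.extend(self.forward(batch, **fwd_kw))
        visualization = self.visualize(inputs, preds, **vis_kw)
        return self.postprocess(preds, visualization, **post_kw)


inferencer = ToyInferencer()
result = inferencer([1.0, 2.0, 3.0], batch_size=2, scale=10.0)
print(result)
# {'predictions': [{'pred': 10.0}, {'pred': 20.0}, {'pred': 30.0}], 'visualization': None}
```

The user passes `batch_size`, `scale`, and the other options in a single call; the dispatch step is what keeps the four-stage pipeline hidden from them.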

@ -173,8 +173,8 @@ Initializes and returns the `visualizer` required by the inferencer, which is eq

Input arguments:

- inputs: Input data, passed into `__call__`, usually a list of image paths or image data.
- batch_size: Batch size, passed in by the user when calling `__call__`.
- Other parameters: Passed in by the user and specified in `preprocess_kwargs`.

Return:


@ -187,7 +187,7 @@ The `preprocess` function is a generator function by default, which applies the

Input arguments:

- inputs: The batch data processed by the `preprocess` function.
- Other parameters: Passed in by the user and specified in `forward_kwargs`.

Return:


@ -204,9 +204,9 @@ This is an abstract method that must be implemented by the subclass.

Input arguments:

- inputs: The input data, i.e., the raw data before preprocessing.
- preds: Predicted results of the model.
- show: Whether to visualize.
- Other parameters: Passed in by the user and specified in `visualize_kwargs`.

Return:


@ -221,12 +221,12 @@ This is an abstract method that must be implemented by the subclass.

Input arguments:

- preds: The predicted results of the model, as a `list`. Each element in the list is the prediction result for a single data item. In the OpenMMLab series of algorithm libraries, each element is of type `BaseDataElement`.
- visualization: Visualization results.
- return_datasample: Whether to return the data samples as they are. If set to `False`, the returned results are converted to `dict`.
- Other parameters: Passed in by the user and specified in `postprocess_kwargs`.

Return:

- The returned value is a dictionary containing both the visualization and prediction results. OpenMMLab requires the returned dictionary to have two keys: `predictions` and `visualization`.


@ -234,9 +234,9 @@ Return:

Input arguments:

- inputs: The input data, usually a list of image paths or image data. Each element in `inputs` can also be another type of data, as long as it can be processed by the `pipeline` returned by [init_pipeline](#_init_pipeline). When `inputs` contains only one item, it does not have to be a `list`; `__call__` wraps it into a list internally for further processing.
- return_datasample: Whether to return the results as data samples. If `False`, they are converted to `dict`.
- batch_size: Batch size for inference, which is passed on to the `preprocess` function.
- Other parameters: Additional parameters passed to the `preprocess`, `forward`, `visualize`, and `postprocess` methods.

Return:


@ -74,11 +74,11 @@ history_buffer.min()

# 1, the global minimum

history_buffer.max(2)
# 3, the maximum in [2, 3]
history_buffer.max()
# 3, the global maximum
history_buffer.mean(2)
# 2.5, the mean value in [2, 3], (2 + 3) / (1 + 1)
history_buffer.mean()
# 2, the global mean, (1 + 2 + 3) / (1 + 1 + 1)
history_buffer = HistoryBuffer([1, 2, 3], [2, 2, 2])  # Case where counts are not 1


@ -431,7 +431,7 @@ In the case of multiple processes in multiple nodes without storage, logs are or

```text
# without shared storage
# node 0:
work_dir/20230228_141908
├── 20230306_183634_${hostname}_device0_rank0.log
├── 20230306_183634_${hostname}_device1_rank1.log


@ -442,7 +442,7 @@ work_dir/20230228_141908

├── 20230306_183634_${hostname}_device6_rank6.log
├── 20230306_183634_${hostname}_device7_rank7.log

# node 7:
work_dir/20230228_141908
├── 20230306_183634_${hostname}_device0_rank56.log
├── 20230306_183634_${hostname}_device1_rank57.log


@ -1,6 +1,6 @@

# 15 minutes to get started with MMEngine

In this tutorial, we use training a ResNet-50 model on the CIFAR-10 dataset as an example. We will build a complete and configurable pipeline for both training and validation in only 80 lines of code with `MMEngine`.
The whole process includes the following steps:

- [15 minutes to get started with MMEngine](#15-minutes-to-get-started-with-mmengine)


@ -1,30 +1,29 @@

|

|||

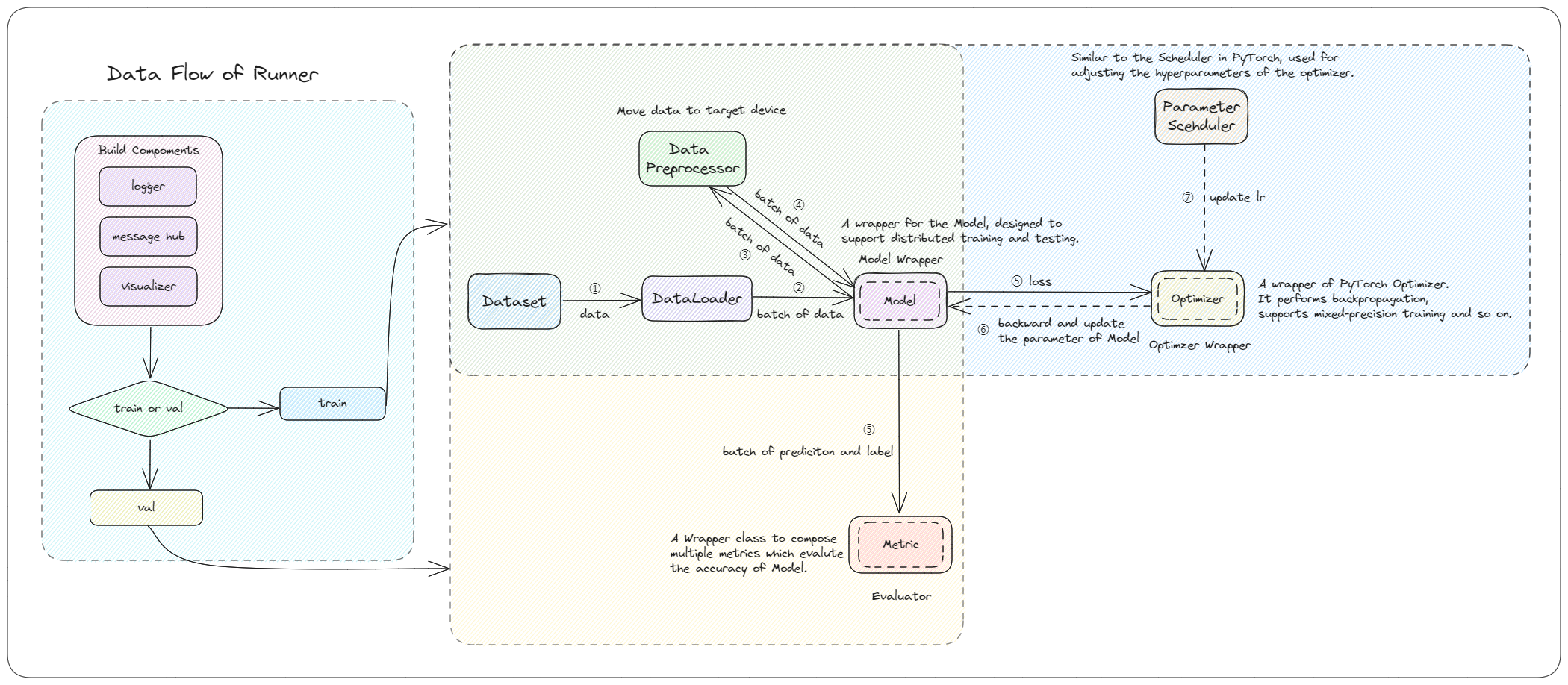

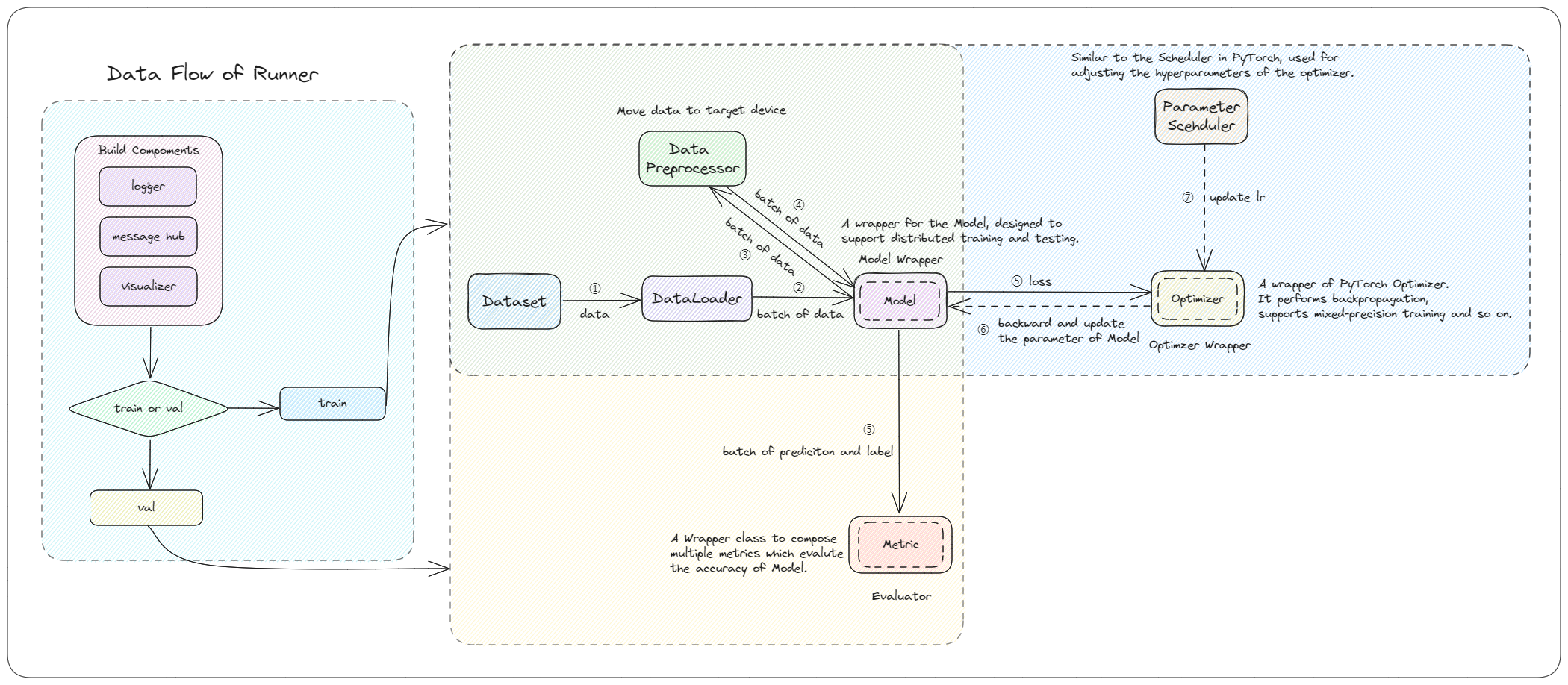

# Introduction

|

||||

|

||||

MMEngine is a foundational library for training deep learning models based on

|

||||

PyTorch. It supports running on Linux, Windows, and macOS. It has the

|

||||

following three features:

|

||||

PyTorch. It supports running on Linux, Windows, and macOS. Its highlights are as follows:

|

||||

|

||||

1. **Universal and powerful executor**:

|

||||

**Integrate mainstream large-scale model training frameworks**

|

||||

|

||||

- Supports training different tasks with minimal code, such as training

|

||||

ImageNet with just 80 lines of code (original PyTorch examples require

|

||||

400 lines).

|

||||

- Easily compatible with models from popular algorithm libraries like TIMM,

|

||||

TorchVision, and Detectron2.

|

||||

- [ColossalAI](../common_usage/large_model_training.md#colossalai)

|

||||

- [DeepSpeed](../common_usage/large_model_training.md#deepspeed)

|

||||

- [FSDP](../common_usage/large_model_training.md#fullyshardeddataparallel-fsdp)

|

||||

|

||||

2. **Open architecture with unified interfaces**:

|

||||

**Supports a variety of training strategies**

|

||||

|

||||

- Handles different tasks with a unified API: you can implement a method

|

||||

once and apply it to all compatible models.

|

||||

- Supports various backend devices through a simple, high-level

|

||||

abstraction. Currently, MMEngine supports model training on Nvidia CUDA,

|

||||

Mac MPS, AMD, MLU, and other devices.

|

||||

- [Mixed Precision Training](../common_usage/speed_up_training.md#mixed-precision-training)

|

||||

- [Gradient Accumulation](../common_usage/save_gpu_memory.md#gradient-accumulation)

|

||||

- [Gradient Checkpointing](../common_usage/save_gpu_memory.md#gradient-checkpointing)

|

||||

|

||||

3. **Customizable training process**:

|

||||

**Provides a user-friendly configuration system**

|

||||

|

||||

- Defines a highly modular training engine with "Lego"-like composability.

|

||||

- Offers a rich set of components and strategies.

|

||||

- Total control over the training process with different levels of APIs.

|

||||

- [Pure Python-style configuration files, easy to navigate](../advanced_tutorials/config.md#a-pure-python-style-configuration-file-beta)

|

||||

- [Plain-text-style configuration files, supporting JSON and YAML](../advanced_tutorials/config.html)

|

||||

|

||||

**Covers mainstream training monitoring platforms**

|

||||

|

||||

- [TensorBoard](../common_usage/visualize_training_log.md#tensorboard) | [WandB](../common_usage/visualize_training_log.md#wandb) | [MLflow](../common_usage/visualize_training_log.md#mlflow-wip)

|

||||

- [ClearML](../common_usage/visualize_training_log.md#clearml) | [Neptune](../common_usage/visualize_training_log.md#neptune) | [DVCLive](../common_usage/visualize_training_log.md#dvclive) | [Aim](../common_usage/visualize_training_log.md#aim)

|

||||

|

||||

## Architecture

|

||||

|

||||

|

|

|

|||

|

|

@ -156,7 +156,7 @@ This tutorial compares the difference in function, mount point, usage and implem

<tr>
<td>after each iteration</td>
<td>after_train_iter</td>
<td>after_train_iter, with additional args: batch_idx, data_batch, and outputs</td>
</tr>
<tr>
<td rowspan="6">Validation related</td>


@ -187,7 +187,7 @@ This tutorial compares the difference in function, mount point, usage and implem

<tr>
<td>after each iteration</td>
<td>after_val_iter</td>
<td>after_val_iter, with additional args: batch_idx, data_batch and outputs</td>
</tr>
<tr>
<td rowspan="6">Test related</td>


@ -218,7 +218,7 @@ This tutorial compares the difference in function, mount point, usage and implem

<tr>
<td>after each iteration</td>
<td>None</td>
<td>after_test_iter, with additional args: batch_idx, data_batch and outputs</td>
</tr>
</tbody>
</table>


@ -393,7 +393,7 @@ MMCV will wrap the model with distributed wrapper before building the runner, wh

cfg = dict(model_wrapper_cfg=dict(type='MMSeparateDistributedDataParallel'))
runner = Runner(
    model=model,
    ...,  # other configurations
    launcher='pytorch',
    cfg=cfg)
```


@ -1,5 +1,68 @@

# Changelog of v0.x

## v0.10.5 (11/9/2024)

- Fix `_is_builtin_module`. by [@HAOCHENYE](https://github.com/HAOCHENYE) in https://github.com/open-mmlab/mmengine/pull/1571

## v0.10.4 (23/4/2024)

### New Features & Enhancements

- Support custom `artifact_location` in MLflowVisBackend. by [@daavoo](https://github.com/daavoo) in https://github.com/open-mmlab/mmengine/pull/1505
- Add the supported pytorch versions in README by [@zhouzaida](https://github.com/zhouzaida) in https://github.com/open-mmlab/mmengine/pull/1512
- Perform evaluation upon training completion by [@LZHgrla](https://github.com/LZHgrla) in https://github.com/open-mmlab/mmengine/pull/1529
- Enable `exclude_frozen_parameters` for `DeepSpeedEngine._zero3_consolidated_16bit_state_dict` by [@LZHgrla](https://github.com/LZHgrla) in https://github.com/open-mmlab/mmengine/pull/1517

### Bug Fixes

- Fix warning capture by [@fanqiNO1](https://github.com/fanqiNO1) in https://github.com/open-mmlab/mmengine/pull/1494
- Remove codeowners file by [@zhouzaida](https://github.com/zhouzaida) in https://github.com/open-mmlab/mmengine/pull/1496
- Fix config of readthedocs by [@zhouzaida](https://github.com/zhouzaida) in https://github.com/open-mmlab/mmengine/pull/1511
- Delete frozen parameters when using `paramwise_cfg` by [@LZHgrla](https://github.com/LZHgrla) in https://github.com/open-mmlab/mmengine/pull/1441

### Docs

- Refine mmengine intro by [@zhouzaida](https://github.com/zhouzaida) in https://github.com/open-mmlab/mmengine/pull/1479
- Fix typo by [@zhouzaida](https://github.com/zhouzaida) in https://github.com/open-mmlab/mmengine/pull/1481
- Fix typos and remove fullwidth unicode chars by [@evdcush](https://github.com/evdcush) in https://github.com/open-mmlab/mmengine/pull/1488
- Fix docstring of Config by [@MambaWong](https://github.com/MambaWong) in https://github.com/open-mmlab/mmengine/pull/1506
- Fix typo by [@hiramf](https://github.com/hiramf) in https://github.com/open-mmlab/mmengine/pull/1532

## v0.10.3 (24/1/2024)

### New Features & Enhancements

- Add the support for musa device support by [@hanhaowen-mt](https://github.com/hanhaowen-mt) in https://github.com/open-mmlab/mmengine/pull/1453
- Support `save_optimizer=False` for DeepSpeed by [@LZHgrla](https://github.com/LZHgrla) in https://github.com/open-mmlab/mmengine/pull/1474
- Update visualizer.py by [@Anm-pinellia](https://github.com/Anm-pinellia) in https://github.com/open-mmlab/mmengine/pull/1476

### Bug Fixes

- Fix `Config.to_dict` by [@HAOCHENYE](https://github.com/HAOCHENYE) in https://github.com/open-mmlab/mmengine/pull/1465
- Fix the resume of iteration by [@LZHgrla](https://github.com/LZHgrla) in https://github.com/open-mmlab/mmengine/pull/1471
- Fix `dist.collect_results` to keep all ranks' elements by [@LZHgrla](https://github.com/LZHgrla) in https://github.com/open-mmlab/mmengine/pull/1469

### Docs

- Add the usage of ProfilerHook by [@zhouzaida](https://github.com/zhouzaida) in https://github.com/open-mmlab/mmengine/pull/1466
- Fix the nnodes in the doc of ddp training by [@XiwuChen](https://github.com/XiwuChen) in https://github.com/open-mmlab/mmengine/pull/1462

## v0.10.2 (26/12/2023)

### New Features & Enhancements

- Support multi-node distributed training with NPU backend by [@shun001](https://github.com/shun001) in https://github.com/open-mmlab/mmengine/pull/1459
- Use `ImportError` to cover `ModuleNotFoundError` by [@del-zhenwu](https://github.com/del-zhenwu) in https://github.com/open-mmlab/mmengine/pull/1438

### Bug Fixes

- Fix bug in `load_model_state_dict` of `BaseStrategy` by [@SCZwangxiao](https://github.com/SCZwangxiao) in https://github.com/open-mmlab/mmengine/pull/1447
- Fix placement policy in ColossalAIStrategy by [@fanqiNO1](https://github.com/fanqiNO1) in https://github.com/open-mmlab/mmengine/pull/1440

### Contributors

A total of 4 developers contributed to this release. Thanks [@shun001](https://github.com/shun001), [@del-zhenwu](https://github.com/del-zhenwu), [@SCZwangxiao](https://github.com/SCZwangxiao), [@fanqiNO1](https://github.com/fanqiNO1)

## v0.10.1 (22/11/2023)

### Bug Fixes


@ -652,7 +715,7 @@ A total of 16 developers contributed to this release. Thanks [@BayMaxBHL](https:

### Bug Fixes

- Fix error calculation of `eta_min` in `CosineRestartParamScheduler` by [@Z-Fran](https://github.com/Z-Fran) in https://github.com/open-mmlab/mmengine/pull/639
- Fix `BaseDataPreprocessor.cast_data` could not handle string data by [@HAOCHENYE](https://github.com/HAOCHENYE) in https://github.com/open-mmlab/mmengine/pull/602
- Make `autocast` compatible with mps by [@HAOCHENYE](https://github.com/HAOCHENYE) in https://github.com/open-mmlab/mmengine/pull/587
- Fix error format of log message by [@HAOCHENYE](https://github.com/HAOCHENYE) in https://github.com/open-mmlab/mmengine/pull/508
- Fix error implementation of `is_model_wrapper` by [@HAOCHENYE](https://github.com/HAOCHENYE) in https://github.com/open-mmlab/mmengine/pull/640


@ -31,11 +31,12 @@ Each hook has a corresponding priority. At each mount point, hooks with higher p

**custom hooks**

| Name | Function | Priority |
| :---------------------------------: | :----------------------------------------------------------------------: | :-----------: |
| [EMAHook](#emahook) | Applies Exponential Moving Average (EMA) to the model during training | NORMAL (50) |
| [EmptyCacheHook](#emptycachehook) | Releases all unoccupied cached GPU memory during training | NORMAL (50) |
| [SyncBuffersHook](#syncbuffershook) | Synchronizes model buffers at the end of each epoch | NORMAL (50) |
| [ProfilerHook](#profilerhook) | Analyzes the execution time and GPU memory usage of model operators | VERY_LOW (90) |
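
The ordering rule at a mount point can be sketched in a few lines, assuming, as the numbers in the table suggest, that a smaller value means a higher priority and that hooks with equal priority keep their registration order. `HookSpec` and `order_hooks` are hypothetical names for illustration, not MMEngine APIs.

```python
from typing import List, NamedTuple


class HookSpec(NamedTuple):
    """Hypothetical record pairing a hook name with its numeric priority."""
    name: str
    priority: int  # smaller value = higher priority, e.g. NORMAL=50, VERY_LOW=90


def order_hooks(hooks: List[HookSpec]) -> List[str]:
    """Return hook names in the order they would be called at a mount point.

    sorted() is stable, so hooks with equal priority keep their registration order.
    """
    return [hook.name for hook in sorted(hooks, key=lambda h: h.priority)]


registered = [
    HookSpec('ProfilerHook', 90),
    HookSpec('EMAHook', 50),
    HookSpec('EmptyCacheHook', 50),
    HookSpec('SyncBuffersHook', 50),
]
print(order_hooks(registered))
# ['EMAHook', 'EmptyCacheHook', 'SyncBuffersHook', 'ProfilerHook']
```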


```{note}
It is not recommended to modify the priority of the default hooks, as hooks with lower priority may depend on hooks with higher priority. For example, `CheckpointHook` needs to have a lower priority than `ParamSchedulerHook` so that the saved optimizer state is correct. Also, the priority of custom hooks defaults to `NORMAL (50)`.


@ -211,6 +212,20 @@ runner = Runner(custom_hooks=custom_hooks, ...)

runner.train()
```

### ProfilerHook

The [ProfilerHook](mmengine.hooks.ProfilerHook) is used to analyze the execution time and GPU memory usage of model operators.

```python
custom_hooks = [dict(type='ProfilerHook', on_trace_ready=dict(type='tb_trace'))]
runner = Runner(custom_hooks=custom_hooks, ...)
runner.train()
```

The profiling results will be saved in the `tf_tracing_logs` directory under `work_dirs/{timestamp}` and can be visualized with TensorBoard by running `tensorboard --logdir work_dirs/{timestamp}/tf_tracing_logs`.

For more information on using `ProfilerHook`, please refer to the [ProfilerHook](mmengine.hooks.ProfilerHook) API documentation.

## Customize Your Hooks

If the built-in hooks provided by MMEngine do not meet your needs, you can write your own hook by inheriting from the base [Hook](mmengine.hooks.Hook) class and overriding the corresponding mount point methods.

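A minimal, dependency-free sketch of this pattern: the base class provides no-op mount-point methods, and a custom hook overrides only the ones it needs, here `before_train` and `after_train_iter`, which receives `batch_idx`, `data_batch`, and `outputs`. `Hook`, `LossHistoryHook`, and `toy_train` are local, hypothetical stand-ins so the snippet runs without MMEngine installed. In MMEngine itself you would inherit from `mmengine.hooks.Hook` and, to reference the hook by `type` in `custom_hooks`, register it with `HOOKS.register_module()` from `mmengine.registry`.

```python
from typing import Any, Dict, List, Optional


class Hook:
    """Hypothetical stand-in for ``mmengine.hooks.Hook``: every mount point is a no-op."""

    def before_train(self, runner: Any) -> None:
        pass

    def after_train_iter(self, runner: Any, batch_idx: int,
                         data_batch: Optional[Any] = None,
                         outputs: Optional[Dict[str, float]] = None) -> None:
        pass


class LossHistoryHook(Hook):
    """Hypothetical custom hook that records the loss every ``interval`` iterations."""

    def __init__(self, interval: int = 1) -> None:
        self.interval = interval
        self.history: List[float] = []

    def before_train(self, runner: Any) -> None:
        # Start every training run with an empty history.
        self.history.clear()

    def after_train_iter(self, runner: Any, batch_idx: int,
                         data_batch: Optional[Any] = None,
                         outputs: Optional[Dict[str, float]] = None) -> None:
        # batch_idx is 0-based, so batch_idx + 1 is the number of finished iterations.
        if outputs is not None and (batch_idx + 1) % self.interval == 0:
            self.history.append(outputs['loss'])


def toy_train(hooks: List[Hook], losses: List[float]) -> None:
    """Hypothetical training loop that fires the two mount points used above."""
    for hook in hooks:
        hook.before_train(None)
    for batch_idx, loss in enumerate(losses):
        for hook in hooks:
            hook.after_train_iter(None, batch_idx, data_batch=None, outputs={'loss': loss})


hook = LossHistoryHook(interval=2)
toy_train([hook], [0.9, 0.8, 0.6, 0.5, 0.4])
print(hook.history)  # [0.8, 0.5]
```

Because every unused mount point is inherited as a no-op, the custom hook stays small and only touches the stages of training it cares about.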

@ -43,7 +43,7 @@ Usually, we should define a model to implement the body of the algorithm. In MME

With `BaseModel`, we only need to make our model inherit from it and implement the `forward` function to run the training, testing, and validation processes.

```{note}
BaseModel inherits from [BaseModule](../advanced_tutorials/initialize.md), which can be used to initialize the model parameters dynamically.
```

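As a rough, torch-free illustration of what implementing `forward` involves: MMEngine's `BaseModel.forward` distinguishes the modes `'loss'` (training, returns a dict of losses), `'predict'` (validation and testing, returns predictions), and `'tensor'` (raw outputs). `ToyLinearModel` below is a hypothetical stand-in that follows this convention with plain floats in place of tensors; a real model would inherit from `mmengine.model.BaseModel` and operate on tensors.

```python
from typing import Any, Dict, List, Optional, Union


class ToyLinearModel:
    """Hypothetical model following the ``forward(inputs, data_samples, mode)`` convention.

    Plain floats stand in for tensors so the sketch runs without PyTorch.
    """

    def __init__(self, weight: float = 2.0) -> None:
        self.weight = weight

    def forward(self, inputs: List[float],
                data_samples: Optional[List[float]] = None,
                mode: str = 'tensor') -> Union[Dict[str, float], List[Any]]:
        outputs = [self.weight * x for x in inputs]  # raw model outputs
        if mode == 'tensor':
            # Raw outputs without any post-processing.
            return outputs
        if mode == 'loss':
            # Training: return a dict of losses (mean squared error here).
            if data_samples is None:
                raise ValueError("mode='loss' requires ground-truth data_samples")
            squared = [(o - t) ** 2 for o, t in zip(outputs, data_samples)]
            return {'loss': sum(squared) / len(squared)}
        if mode == 'predict':
            # Validation/testing: return one post-processed result per sample.
            return [{'pred': o} for o in outputs]
        raise ValueError(f'Invalid mode: {mode!r}')


model = ToyLinearModel(weight=2.0)
print(model.forward([1.0, 2.0], [2.0, 5.0], mode='loss'))  # {'loss': 0.5}
print(model.forward([1.0, 2.0], mode='predict'))  # [{'pred': 2.0}, {'pred': 4.0}]
```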