mirror of https://github.com/open-mmlab/mmocr.git

Compare commits

15 Commits

| Author | SHA1 | Date |

|---|---|---|

|

|

966296f26a | |

|

|

2caab0a4e7 | |

|

|

b18a09b2f0 | |

|

|

9551af6e5a | |

|

|

1dcd6fa695 | |

|

|

6b3f6f5285 | |

|

|

0cd2878b04 | |

|

|

bbe8964f00 | |

|

|

a344280bcb | |

|

|

4eb3cc7de5 | |

|

|

e9a31ddd70 | |

|

|

1e696887b9 | |

|

|

231cff5da2 | |

|

|

8afc79f370 | |

|

|

9e713c63fe |

.circleci

.github/ISSUE_TEMPLATE

configs/textrecog/satrn

dataset_zoo/iiit5k

docs

en

zh_cn

mmocr

apis/inferencers

datasets/preparers

dumpers

obtainers

models/textdet/detectors

requirements

tools

dataset_converters/textrecog

visualizations

|

|

@ -129,6 +129,7 @@ workflows:

|

|||

ignore:

|

||||

- dev-1.x

|

||||

- 1.x

|

||||

- main

|

||||

pr_stage_test:

|

||||

when:

|

||||

not:

|

||||

|

|

@ -141,6 +142,7 @@ workflows:

|

|||

ignore:

|

||||

- dev-1.x

|

||||

- test-1.x

|

||||

- main

|

||||

- build_cpu:

|

||||

name: minimum_version_cpu

|

||||

torch: 1.6.0

|

||||

|

|

@ -191,3 +193,4 @@ workflows:

|

|||

branches:

|

||||

only:

|

||||

- dev-1.x

|

||||

- main

|

||||

|

|

|

|||

|

|

@ -1,6 +1,6 @@

|

|||

name: "🐞 Bug report"

|

||||

description: "Create a report to help us reproduce and fix the bug"

|

||||

labels: bug

|

||||

labels: kind/bug

|

||||

title: "[Bug] "

|

||||

|

||||

body:

|

||||

|

|

|

|||

|

|

@ -196,11 +196,11 @@ We hope the toolbox and benchmark could serve the growing research community by

|

|||

If you find this project useful in your research, please consider cite:

|

||||

|

||||

```bibtex

|

||||

@article{mmocr2021,

|

||||

@article{mmocr2022,

|

||||

title={MMOCR: A Comprehensive Toolbox for Text Detection, Recognition and Understanding},

|

||||

author={Kuang, Zhanghui and Sun, Hongbin and Li, Zhizhong and Yue, Xiaoyu and Lin, Tsui Hin and Chen, Jianyong and Wei, Huaqiang and Zhu, Yiqin and Gao, Tong and Zhang, Wenwei and Chen, Kai and Zhang, Wayne and Lin, Dahua},

|

||||

journal= {arXiv preprint arXiv:2108.06543},

|

||||

year={2021}

|

||||

author={MMOCR Developer Team},

|

||||

howpublished = {\url{https://github.com/open-mmlab/mmocr}},

|

||||

year={2022}

|

||||

}

|

||||

```

|

||||

|

||||

|

|

|

|||

|

|

@ -232,10 +232,10 @@ MMOCR 是一款由来自不同高校和企业的研发人员共同参与贡献

|

|||

|

||||

## 欢迎加入 OpenMMLab 社区

|

||||

|

||||

扫描下方的二维码可关注 OpenMMLab 团队的 [知乎官方账号](https://www.zhihu.com/people/openmmlab),加入 OpenMMLab 团队的 [官方交流 QQ 群](https://r.vansin.top/?r=join-qq),或通过添加微信“Open小喵Lab”加入官方交流微信群。

|

||||

扫描下方的二维码可关注 OpenMMLab 团队的 知乎官方账号,扫描下方微信二维码添加喵喵好友,进入 MMOCR 微信交流社群。【加好友申请格式:研究方向+地区+学校/公司+姓名】

|

||||

|

||||

<div align="center">

|

||||

<img src="https://raw.githubusercontent.com/open-mmlab/mmcv/master/docs/en/_static/zhihu_qrcode.jpg" height="400" /> <img src="https://cdn.vansin.top/OpenMMLab/q3.png" height="400" /> <img src="https://raw.githubusercontent.com/open-mmlab/mmcv/master/docs/en/_static/wechat_qrcode.jpg" height="400" />

|

||||

<img src="https://raw.githubusercontent.com/open-mmlab/mmcv/master/docs/en/_static/zhihu_qrcode.jpg" height="400" /> <img src="https://github.com/open-mmlab/mmocr/assets/62195058/bf1e53fe-df4f-4296-9e1b-61db8971985e" height="400" />

|

||||

</div>

|

||||

|

||||

我们会在 OpenMMLab 社区为大家

|

||||

|

|

|

|||

|

|

@ -12,6 +12,8 @@ _base_ = [

|

|||

'_base_satrn_shallow.py',

|

||||

]

|

||||

|

||||

train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=20, val_interval=1)

|

||||

|

||||

# dataset settings

|

||||

train_list = [_base_.mjsynth_textrecog_train, _base_.synthtext_textrecog_train]

|

||||

test_list = [

|

||||

|

|

|

|||

|

|

@ -17,7 +17,7 @@ train_preparer = dict(

|

|||

url='https://download.openmmlab.com/mmocr/data/mixture/IIIT5K/'

|

||||

'train_label.txt',

|

||||

save_name='iiit5k_train.txt',

|

||||

md5='f4731ce1eadc259532c2834266e5126d',

|

||||

md5='beee914aaf3ec5794622b843d743c5a6',

|

||||

content=['annotation'],

|

||||

mapping=[['iiit5k_train.txt', 'annotations/train.txt']])

|

||||

]),

|

||||

|

|

@ -47,7 +47,7 @@ test_preparer = dict(

|

|||

url='https://download.openmmlab.com/mmocr/data/mixture/IIIT5K/'

|

||||

'test_label.txt',

|

||||

save_name='iiit5k_test.txt',

|

||||

md5='82ecfa34a28d59284d1914dc906f5380',

|

||||

md5='117bcd9b4245f61fa57bfb37361674b3',

|

||||

content=['annotation'],

|

||||

mapping=[['iiit5k_test.txt', 'annotations/test.txt']])

|

||||

]),

|

||||

|

|

|

|||

File diff suppressed because it is too large

Load Diff

|

|

@ -127,24 +127,6 @@ html_theme_options = {

|

|||

},

|

||||

]

|

||||

},

|

||||

{

|

||||

'name':

|

||||

'Version',

|

||||

'children': [

|

||||

{

|

||||

'name': 'MMOCR 0.x',

|

||||

'url': 'https://mmocr.readthedocs.io/en/latest/',

|

||||

'description': 'Main branch'

|

||||

},

|

||||

{

|

||||

'name': 'MMOCR 1.x',

|

||||

'url': 'https://mmocr.readthedocs.io/en/dev-1.x/',

|

||||

'description': '1.x branch'

|

||||

},

|

||||

],

|

||||

'active':

|

||||

True,

|

||||

},

|

||||

],

|

||||

# Specify the language of shared menu

|

||||

'menu_lang':

|

||||

|

|

|

|||

|

|

@ -237,7 +237,8 @@ MMOCR has different version requirements on MMEngine, MMCV and MMDetection at ea

|

|||

|

||||

| MMOCR | MMEngine | MMCV | MMDetection |

|

||||

| -------------- | --------------------------- | -------------------------- | --------------------------- |

|

||||

| dev-1.x | 0.7.1 \<= mmengine \< 1.0.0 | 2.0.0rc4 \<= mmcv \< 2.1.0 | 3.0.0rc5 \<= mmdet \< 3.1.0 |

|

||||

| dev-1.x | 0.7.1 \<= mmengine \< 1.1.0 | 2.0.0rc4 \<= mmcv \< 2.1.0 | 3.0.0rc5 \<= mmdet \< 3.2.0 |

|

||||

| 1.0.1 | 0.7.1 \<= mmengine \< 1.1.0 | 2.0.0rc4 \<= mmcv \< 2.1.0 | 3.0.0rc5 \<= mmdet \< 3.2.0 |

|

||||

| 1.0.0 | 0.7.1 \<= mmengine \< 1.0.0 | 2.0.0rc4 \<= mmcv \< 2.1.0 | 3.0.0rc5 \<= mmdet \< 3.1.0 |

|

||||

| 1.0.0rc6 | 0.6.0 \<= mmengine \< 1.0.0 | 2.0.0rc4 \<= mmcv \< 2.1.0 | 3.0.0rc5 \<= mmdet \< 3.1.0 |

|

||||

| 1.0.0rc\[4-5\] | 0.1.0 \<= mmengine \< 1.0.0 | 2.0.0rc1 \<= mmcv \< 2.1.0 | 3.0.0rc0 \<= mmdet \< 3.1.0 |

|

||||

|

|

|

|||

|

|

@ -1,18 +1,17 @@

|

|||

# Dataset Preparer (Beta)

|

||||

|

||||

```{note}

|

||||

Dataset Preparer is still in beta version and might not be stable enough. You

|

||||

are welcome to try it out and report any issues to us.

|

||||

Dataset Preparer is still in beta version and might not be stable enough. You are welcome to try it out and report any issues to us.

|

||||

```

|

||||

|

||||

## One-click data preparation script

|

||||

|

||||

MMOCR provides a unified one-stop data preparation script `prepare_dataset.py`.

|

||||

|

||||

Only one line of command is needed to complete the data download, decompression, and format conversion.

|

||||

Only one line of command is needed to complete the data download, decompression, format conversion, and basic configure generation.

|

||||

|

||||

```bash

|

||||

python tools/dataset_converters/prepare_dataset.py [$DATASET_NAME] --task [$TASK] --nproc [$NPROC]

|

||||

python tools/dataset_converters/prepare_dataset.py [-h] [--nproc NPROC] [--task {textdet,textrecog,textspotting,kie}] [--splits SPLITS [SPLITS ...]] [--lmdb] [--overwrite-cfg] [--dataset-zoo-path DATASET_ZOO_PATH] datasets [datasets ...]

|

||||

```

|

||||

|

||||

| ARGS | Type | Description |

|

||||

|

|

@ -28,13 +27,13 @@ python tools/dataset_converters/prepare_dataset.py [$DATASET_NAME] --task [$TASK

|

|||

For example, the following command shows how to use the script to prepare the ICDAR2015 dataset for text detection task.

|

||||

|

||||

```bash

|

||||

python tools/dataset_converters/prepare_dataset.py icdar2015 --task textdet

|

||||

python tools/dataset_converters/prepare_dataset.py icdar2015 --task textdet --overwrite-cfg

|

||||

```

|

||||

|

||||

Also, the script supports preparing multiple datasets at the same time. For example, the following command shows how to prepare the ICDAR2015 and TotalText datasets for text recognition task.

|

||||

|

||||

```bash

|

||||

python tools/dataset_converters/prepare_dataset.py icdar2015 totaltext --task textrecog

|

||||

python tools/dataset_converters/prepare_dataset.py icdar2015 totaltext --task textrecog --overwrite-cfg

|

||||

```

|

||||

|

||||

To check the supported datasets of Dataset Preparer, please refer to [Dataset Zoo](./datasetzoo.md). Some of other datasets that need to be prepared manually are listed in [Text Detection](./det.md) and [Text Recognition](./recog.md).

|

||||

|

|

@ -81,30 +80,30 @@ For example, if we want to change the training set of `configs/textrecog/crnn/cr

|

|||

]

|

||||

```

|

||||

|

||||

### Configuration of Dataset Preparer

|

||||

## Design

|

||||

|

||||

Dataset preparer uses a modular design to enhance extensibility, which allows users to extend it to other public or private datasets easily. The configuration files of the dataset preparers are stored in the `dataset_zoo/`, where all the configs of currently supported datasets can be found here. The directory structure is as follows:

|

||||

There are many OCR datasets with different languages, annotation formats, and scenarios. There are generally two ways to use these datasets: to quickly understand the relevant information about the dataset, or to use it to train models. To meet these two usage scenarios, MMOCR provides dataset automatic preparation scripts. The dataset automatic preparation script uses modular design, which greatly enhances scalability, and allows users to easily configure other public or private datasets. The configuration files for the dataset automatic preparation script are uniformly stored in the `dataset_zoo/` directory. Users can find all the configuration files for the dataset preparation scripts officially supported by MMOCR in this directory. The directory structure of this folder is as follows:

|

||||

|

||||

```text

|

||||

dataset_zoo/

|

||||

├── icdar2015

|

||||

│ ├── metafile.yml

|

||||

│ ├── sample_anno.md

|

||||

│ ├── textdet.py

|

||||

│ ├── textrecog.py

|

||||

│ └── textspotting.py

|

||||

└── wildreceipt

|

||||

├── metafile.yml

|

||||

├── sample_anno.md

|

||||

├── kie.py

|

||||

├── textdet.py

|

||||

├── textrecog.py

|

||||

└── textspotting.py

|

||||

```

|

||||

|

||||

`metafile.yml` is the metafile of the dataset, which contains the basic information of the dataset, including the year of publication, the author of the paper, and other information such as license. The other files named by the task are the configuration files of the dataset preparer, which are used to configure the download, decompression, format conversion, etc. of the dataset. These configs are in Python format, and their usage is completely consistent with the configuration files in MMOCR repo. See [Configuration File Documentation](../config.md) for detailed usage.

|

||||

### Dataset-related Information

|

||||

|

||||

#### Metafile

|

||||

|

||||

Take the ICDAR2015 dataset as an example, the `metafile.yml` stores the basic information of the dataset:

|

||||

The relevant information of a dataset includes the annotation format, annotation examples, and basic statistical information of the dataset. Although this information can be found on the official website of each dataset, it is scattered across various websites, and users need to spend a lot of time to discover the basic information of the dataset. Therefore, MMOCR has designed some paradigms to help users quickly understand the basic information of the dataset. MMOCR divides the relevant information of the dataset into two parts. One part is the basic information of the dataset, including the year of publication, the authors of the paper, and copyright information, etc. The other part is the annotation information of the dataset, including the annotation format and annotation examples. MMOCR provides a paradigm for each part, and contributors can fill in the basic information of the dataset according to the paradigm. This way, users can quickly understand the basic information of the dataset. Based on the basic information of the dataset, MMOCR provides a `metafile.yml` file, which contains the basic information of the corresponding dataset, including the year of publication, the authors of the paper, and copyright information, etc. In this way, users can quickly understand the basic information of the dataset. This file is not mandatory during the dataset preparation process (so users can ignore it when adding their own private datasets), but to better understand the information of various public datasets, MMOCR recommends that users read the corresponding metafile information before using the dataset preparation script to understand whether the characteristics of the dataset meet the user's needs. MMOCR uses ICDAR2015 as an example, and its sample content is shown below:

|

||||

|

||||

```yaml

|

||||

Name: 'Incidental Scene Text IC15'

|

||||

|

|

@ -137,104 +136,640 @@ Data:

|

|||

Link: https://creativecommons.org/licenses/by/4.0/

|

||||

```

|

||||

|

||||

It is not mandatory to use the metafile in the dataset preparation process (so users can ignore this file when preparing private datasets), but in order to better understand the information of each public dataset, we recommend that users read the metafile before preparing the dataset, which will help to understand whether the datasets meet their needs.

|

||||

Specifically, MMOCR lists the meaning of each field in the following table:

|

||||

|

||||

```{warning}

|

||||

The following section is outdated as of MMOCR 1.0.0rc6.

|

||||

```

|

||||

| Field Name | Meaning |

|

||||

| :--------------- | :------------------------------------------------------------------------------------------------------- |

|

||||

| Name | The name of the dataset |

|

||||

| Paper.Title | The title of the paper for the dataset |

|

||||

| Paper.URL | The URL of the paper for the dataset |

|

||||

| Paper.Venue | The venue of the paper for the dataset |

|

||||

| Paper.Year | The year of publication for the paper |

|

||||

| Paper.BibTeX | The BibTeX citation of the paper for the dataset |

|

||||

| Data.Website | The official website of the dataset |

|

||||

| Data.Language | The supported languages of the dataset |

|

||||

| Data.Scene | The supported scenes of the dataset, such as `Natural Scene`, `Document`, `Handwritten`, etc. |

|

||||

| Data.Granularity | The supported granularities of the dataset, such as `Character`, `Word`, `Line`, etc. |

|

||||

| Data.Tasks | The supported tasks of the dataset, such as `textdet`, `textrecog`, `textspotting`, `kie`, etc. |

|

||||

| Data.License | License information for the dataset. Use `N/A` if no license exists. |

|

||||

| Data.Format | File format of the annotation files, such as `.txt`, `.xml`, `.json`, etc. |

|

||||

| Data.Keywords | Keywords describing the characteristics of the dataset, such as `Horizontal`, `Vertical`, `Curved`, etc. |

|

||||

|

||||

#### Config of Dataset Preparer

|

||||

For the annotation information of the dataset, MMOCR provides a `sample_anno.md` file, which users can use as a template to fill in the annotation information of the dataset, so that users can quickly understand the annotation information of the dataset. MMOCR uses ICDAR2015 as an example, and the sample content is as follows:

|

||||

|

||||

Next, we will introduce the conventional fields and usage of the dataset preparer configuration files.

|

||||

````markdown

|

||||

**Text Detection**

|

||||

|

||||

In the configuration files, there are two fields `data_root` and `cache_path`, which are used to store the converted dataset and the temporary files such as the archived files downloaded during the data preparation process.

|

||||

```text

|

||||

# x1,y1,x2,y2,x3,y3,x4,y4,trans

|

||||

|

||||

377,117,463,117,465,130,378,130,Genaxis Theatre

|

||||

493,115,519,115,519,131,493,131,[06]

|

||||

374,155,409,155,409,170,374,170,###

|

||||

````

|

||||

|

||||

`sample_anno.md` provides annotation information for different tasks of the dataset, including the format of the annotation files (text corresponds to `txt` files, and the format of the annotation files can also be found in `meta.yml`), and examples of the annotations.

|

||||

|

||||

With the information in these two files, users can quickly understand the basic information of the dataset. Additionally, MMOCR has summarized the basic information of all datasets, and users can view the basic information of all datasets in the [Overview](.overview.md).

|

||||

|

||||

### Dataset Usage

|

||||

|

||||

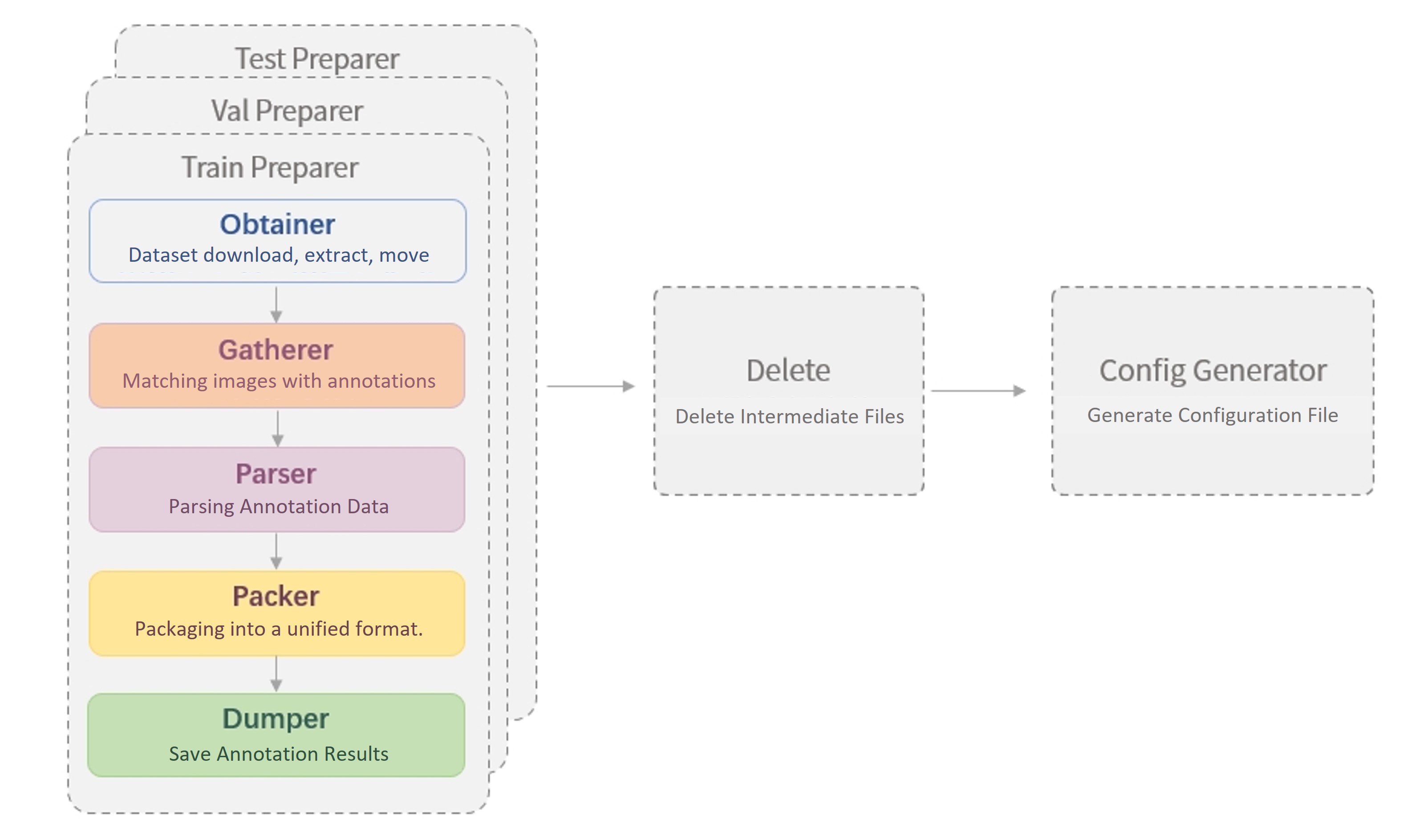

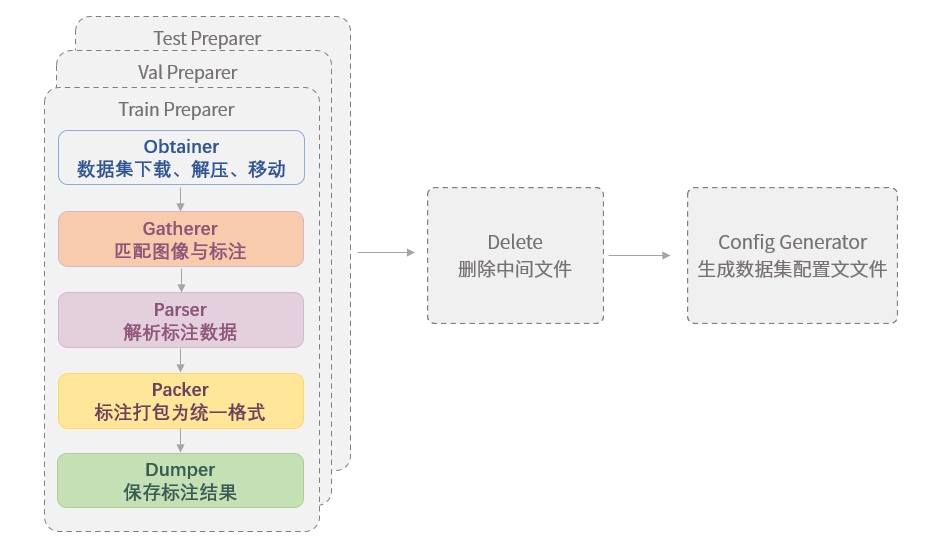

After decades of development, the OCR field has seen a series of related datasets emerge, often providing text annotation files in various styles, making it necessary for users to perform format conversion when using these datasets. Therefore, to facilitate dataset preparation for users, we have designed the Dataset Preparer to help users quickly prepare datasets in the format supported by MMOCR. For details, please refer to the [Dataset Format](../../basic_concepts/datasets.md) document. The following figure shows a typical workflow for running the Dataset Preparer.

|

||||

|

||||

|

||||

|

||||

The figure shows that when running the Dataset Preparer, the following operations will be performed in sequence:

|

||||

|

||||

1. For the training set, validation set, and test set, the preparers will perform:

|

||||

1. [Dataset download, extraction, and movement (Obtainer)](#Dataset-download-extraction-and-movement-obtainer)

|

||||

2. [Matching annotations with images (Gatherer)](#dataset-collection-gatherer)

|

||||

3. [Parsing original annotations (Parser)](#dataset-parsing-parser)

|

||||

4. [Packing annotations into a unified format (Packer)](#dataset-conversion-packer)

|

||||

5. [Saving annotations (Dumper)](#annotation-saving-dumper)

|

||||

2. Delete files (Delete)

|

||||

3. Generate the configuration file for the data set (Config Generator).

|

||||

|

||||

To handle various types of datasets, MMOCR has designed each component as a plug-and-play module, and allows users to configure the dataset preparation process through configuration files located in `dataset_zoo/`. These configuration files are in Python format and can be used in the same way as other configuration files in MMOCR, as described in the [Configuration File documentation](../config.md).

|

||||

|

||||

In `dataset_zoo/`, each dataset has its own folder, and the configuration files are named after the task to distinguish different configurations under different tasks. Taking the text detection part of ICDAR2015 as an example, the sample configuration file `dataset_zoo/icdar2015/textdet.py` is shown below:

|

||||

|

||||

```python

|

||||

data_root = './data/icdar2015'

|

||||

cache_path = './data/cache'

|

||||

data_root = 'data/icdar2015'

|

||||

cache_path = 'data/cache'

|

||||

train_preparer = dict(

|

||||

obtainer=dict(

|

||||

type='NaiveDataObtainer',

|

||||

cache_path=cache_path,

|

||||

files=[

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/ch4_training_images.zip',

|

||||

save_name='ic15_textdet_train_img.zip',

|

||||

md5='c51cbace155dcc4d98c8dd19d378f30d',

|

||||

content=['image'],

|

||||

mapping=[['ic15_textdet_train_img', 'textdet_imgs/train']]),

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/'

|

||||

'ch4_training_localization_transcription_gt.zip',

|

||||

save_name='ic15_textdet_train_gt.zip',

|

||||

md5='3bfaf1988960909014f7987d2343060b',

|

||||

content=['annotation'],

|

||||

mapping=[['ic15_textdet_train_gt', 'annotations/train']]),

|

||||

]),

|

||||

gatherer=dict(

|

||||

type='PairGatherer',

|

||||

img_suffixes=['.jpg', '.JPG'],

|

||||

rule=[r'img_(\d+)\.([jJ][pP][gG])', r'gt_img_\1.txt']),

|

||||

parser=dict(type='ICDARTxtTextDetAnnParser', encoding='utf-8-sig'),

|

||||

packer=dict(type='TextDetPacker'),

|

||||

dumper=dict(type='JsonDumper'),

|

||||

)

|

||||

|

||||

test_preparer = dict(

|

||||

obtainer=dict(

|

||||

type='NaiveDataObtainer',

|

||||

cache_path=cache_path,

|

||||

files=[

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/ch4_test_images.zip',

|

||||

save_name='ic15_textdet_test_img.zip',

|

||||

md5='97e4c1ddcf074ffcc75feff2b63c35dd',

|

||||

content=['image'],

|

||||

mapping=[['ic15_textdet_test_img', 'textdet_imgs/test']]),

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/'

|

||||

'Challenge4_Test_Task4_GT.zip',

|

||||

save_name='ic15_textdet_test_gt.zip',

|

||||

md5='8bce173b06d164b98c357b0eb96ef430',

|

||||

content=['annotation'],

|

||||

mapping=[['ic15_textdet_test_gt', 'annotations/test']]),

|

||||

]),

|

||||

gatherer=dict(

|

||||

type='PairGatherer',

|

||||

img_suffixes=['.jpg', '.JPG'],

|

||||

rule=[r'img_(\d+)\.([jJ][pP][gG])', r'gt_img_\1.txt']),

|

||||

parser=dict(type='ICDARTxtTextDetAnnParser', encoding='utf-8-sig'),

|

||||

packer=dict(type='TextDetPacker'),

|

||||

dumper=dict(type='JsonDumper'),

|

||||

)

|

||||

|

||||

delete = ['annotations', 'ic15_textdet_test_img', 'ic15_textdet_train_img']

|

||||

config_generator = dict(type='TextDetConfigGenerator')

|

||||

```

|

||||

|

||||

Data preparation usually contains two steps: "raw data preparation" and "format conversion and saving". Therefore, we use the `data_obtainer` and `data_converter` to configure the behavior of these two steps. In some cases, users can also ignore `data_converter` to only download and decompress the raw data, without performing format conversion and saving. Or, for the local stored dataset, use ignore `data_obtainer` to only perform format conversion and saving.

|

||||

#### Dataset download extraction and movement (Obtainer)

|

||||

|

||||

Take the text detection task of the ICDAR2015 dataset (`dataset_zoo/icdar2015/textdet.py`) as an example:

|

||||

The `obtainer` module in Dataset Preparer is responsible for downloading, extracting, and moving the dataset. Currently, MMOCR only provides the `NaiveDataObtainer`. Generally speaking, the built-in `NaiveDataObtainer` is sufficient for downloading most datasets that can be accessed through direct links, and supports operations such as extraction, moving files, and renaming. However, MMOCR currently does not support automatically downloading datasets stored in resources that require login, such as Baidu or Google Drive. Here is a brief introduction to the `NaiveDataObtainer`.

|

||||

|

||||

| Field Name | Meaning |

|

||||

| ---------- | -------------------------------------------------------------------------------------------- |

|

||||

| cache_path | Dataset cache path, used to store the compressed files downloaded during dataset preparation |

|

||||

| data_root | Root directory where the dataset is stored |

|

||||

| files | Dataset file list, used to describe the download information of the dataset |

|

||||

|

||||

The `files` field is a list, and each element in the list is a dictionary used to describe the download information of a dataset file. The table below shows the meaning of each field:

|

||||

|

||||

| Field Name | Meaning |

|

||||

| ------------------ | ------------------------------------------------------------------------------------------------------------------------------------------ |

|

||||

| url | Download link for the dataset file |

|

||||

| save_name | Name used to save the dataset file |

|

||||

| md5 (optional) | MD5 hash of the dataset file, used to check if the downloaded file is complete |

|

||||

| split (optional) | Dataset split the file belongs to, such as `train`, `test`, etc., this field can be omitted |

|

||||

| content (optional) | Content of the dataset file, such as `image`, `annotation`, etc., this field can be omitted |

|

||||

| mapping (optional) | Decompression mapping of the dataset file, used to specify the storage location of the file after decompression, this field can be omitted |

|

||||

|

||||

The Dataset Preparer follows the following conventions:

|

||||

|

||||

- Images of different types of datasets are moved to the corresponding category `{taskname}_imgs/{split}/` folder, such as `textdet_imgs/train/`.

|

||||

- For a annotation file containing annotation information for all images, the annotations are moved to `annotations/{split}.*` file, such as `annotations/train.json`.

|

||||

- For a annotation file containing annotation information for one image, all annotation files are moved to `annotations/{split}/` folder, such as `annotations/train/`.

|

||||

- For some other special cases, such as all training, testing, and validation images are in one folder, the images can be moved to a self-set folder, such as `{taskname}_imgs/imgs/`, and the image storage location should be specified in the subsequent `gatherer` module.

|

||||

|

||||

An example configuration is as follows:

|

||||

|

||||

```python

|

||||

data_obtainer = dict(

|

||||

type='NaiveDataObtainer',

|

||||

cache_path=cache_path,

|

||||

data_root=data_root,

|

||||

files=[

|

||||

obtainer=dict(

|

||||

type='NaiveDataObtainer',

|

||||

cache_path=cache_path,

|

||||

files=[

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/ch4_training_images.zip',

|

||||

save_name='ic15_textdet_train_img.zip',

|

||||

md5='c51cbace155dcc4d98c8dd19d378f30d',

|

||||

content=['image'],

|

||||

mapping=[['ic15_textdet_train_img', 'textdet_imgs/train']]),

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/'

|

||||

'ch4_training_localization_transcription_gt.zip',

|

||||

save_name='ic15_textdet_train_gt.zip',

|

||||

md5='3bfaf1988960909014f7987d2343060b',

|

||||

content=['annotation'],

|

||||

mapping=[['ic15_textdet_train_gt', 'annotations/train']]),

|

||||

]),

|

||||

```

|

||||

|

||||

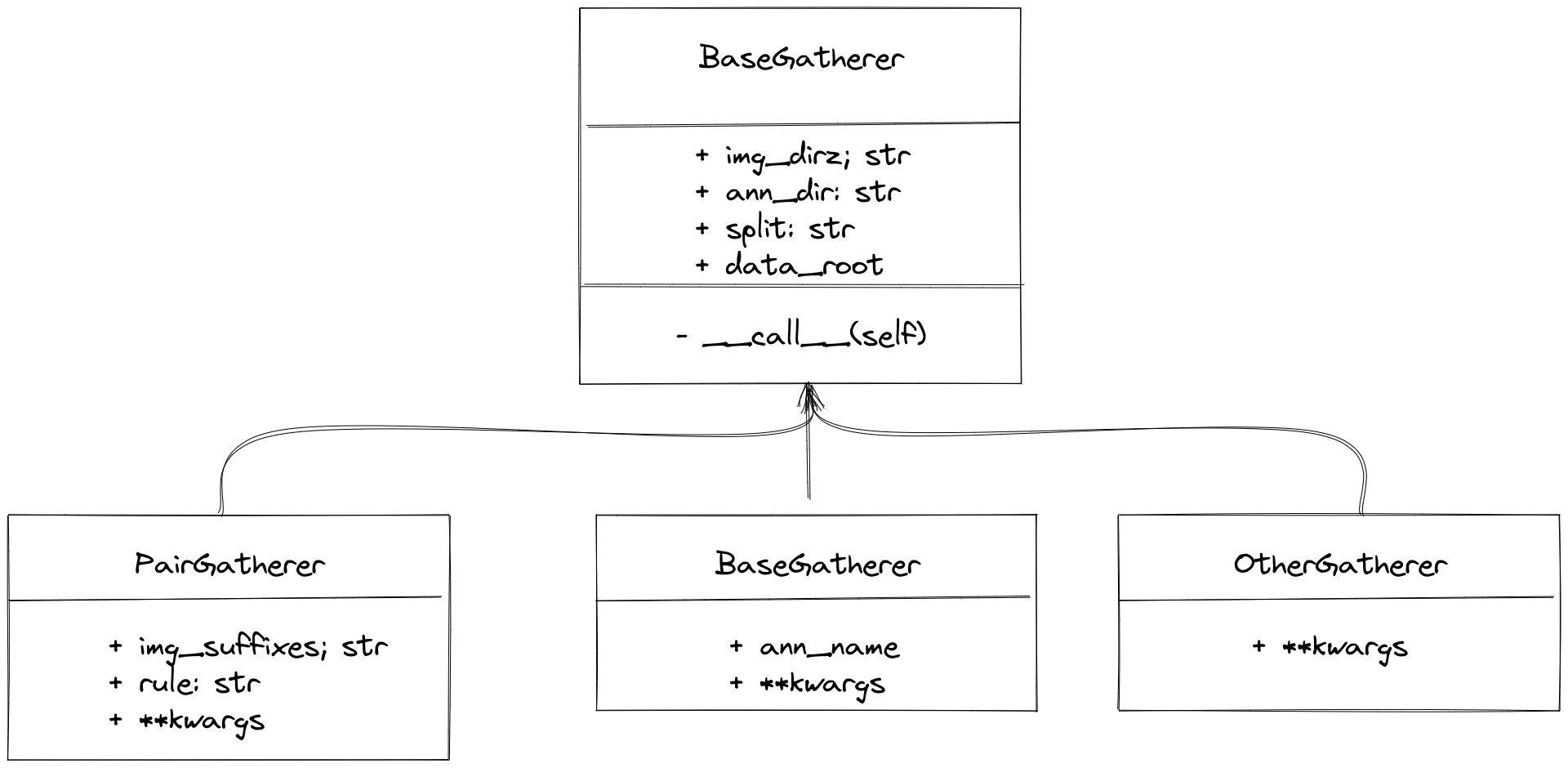

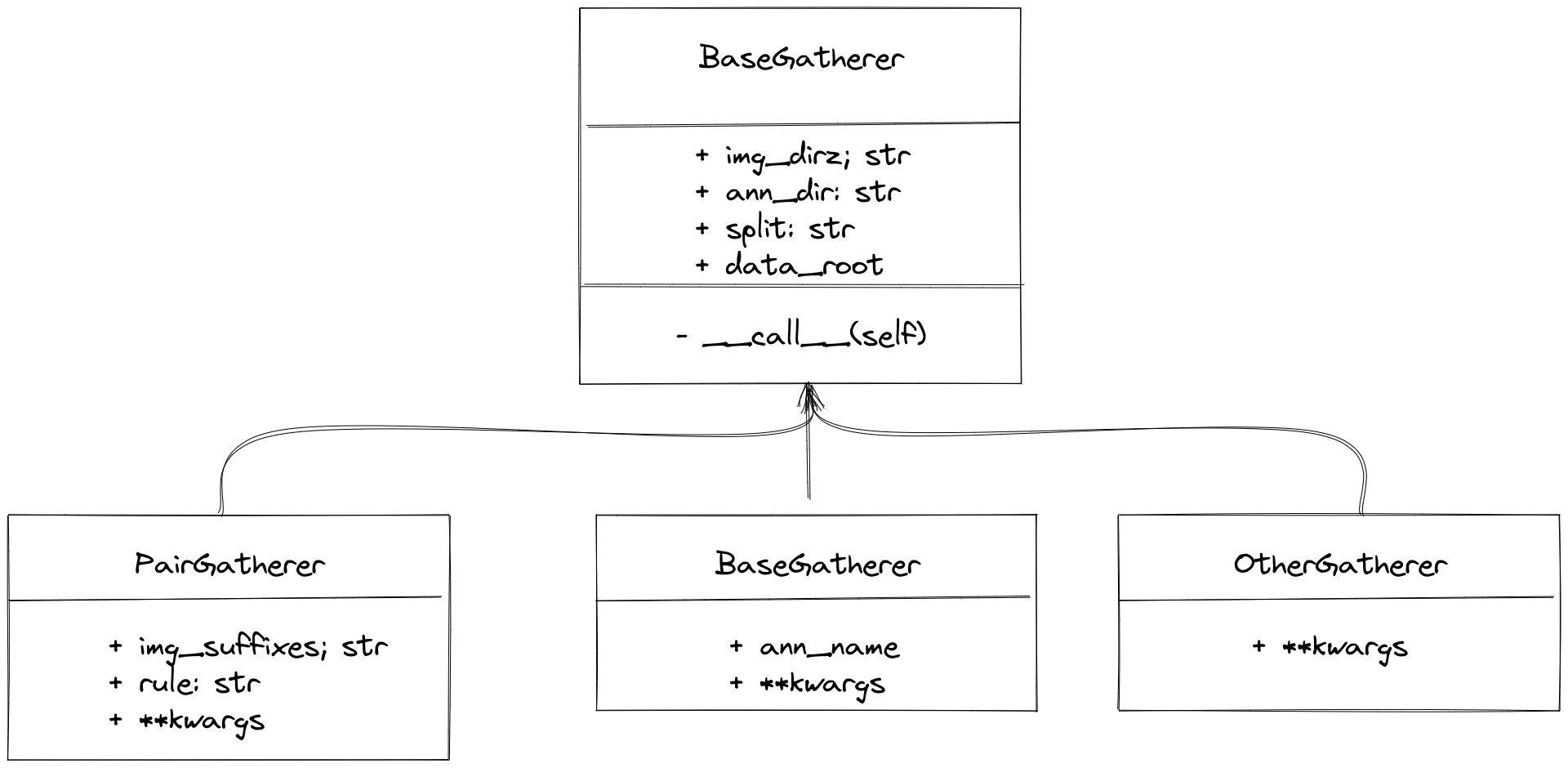

#### Dataset collection (Gatherer)

|

||||

|

||||

The `gatherer` module traverses the files in the dataset directory, matches image files with their corresponding annotation files, and organizes a file list for the `parser` module to read. Therefore, it is necessary to know the matching rules between image files and annotation files in the current dataset. There are two commonly used annotation storage formats for OCR datasets: one is multiple annotation files corresponding to multiple images, and the other is a single annotation file corresponding to multiple images, for example:

|

||||

|

||||

```text

|

||||

Many-to-Many

|

||||

├── {taskname}_imgs/{split}/img_img_1.jpg

|

||||

├── annotations/{split}/gt_img_1.txt

|

||||

├── {taskname}_imgs/{split}/img_2.jpg

|

||||

├── annotations/{split}/gt_img_2.txt

|

||||

├── {taskname}_imgs/{split}/img_3.JPG

|

||||

├── annotations/{split}/gt_img_3.txt

|

||||

|

||||

One-to-Many

|

||||

├── {taskname}/{split}/img_1.jpg

|

||||

├── {taskname}/{split}/img_2.jpg

|

||||

├── {taskname}/{split}/img_3.JPG

|

||||

├── annotations/gt.txt

|

||||

```

|

||||

|

||||

Specific design is as follows:

|

||||

|

||||

|

||||

|

||||

MMOCR has built-in `PairGatherer` and `MonoGatherer` to handle the two common cases mentioned above. `PairGatherer` is used for many-to-many situations, while `MonoGatherer` is used for one-to-many situations.

|

||||

|

||||

```{note}

|

||||

To simplify processing, the gatherer assumes that the dataset's images and annotations are stored separately in `{taskname}_imgs/{split}/` and `annotations/`, respectively. In particular, for many-to-many situations, the annotation file needs to be placed in `annotations/{split}`.

|

||||

```

|

||||

|

||||

- In the many-to-many case, `PairGatherer` needs to find the image files and corresponding annotation files according to a certain naming convention. First, the suffix of the image needs to be specified by the `img_suffixes` parameter, as in the example above `img_suffixes=[.jpg,.JPG]`. In addition, a pair of [regular expressions](https://docs.python.org/3/library/re.html) `rule` is used to specify the correspondence between the image and annotation files. For example, `rule=[r'img_(\d+)\.([jJ][pP][gG])',r'gt_img_\1.txt']`. The first regular expression is used to match the image file name, `\d+` is used to match the image sequence number, and `([jJ][pP][gG])` is used to match the image suffix. The second regular expression is used to match the annotation file name, where `\1` associates the matched image sequence number with the annotation file sequence number. An example configuration is:

|

||||

|

||||

```python

|

||||

gatherer=dict(

|

||||

type='PairGatherer',

|

||||

img_suffixes=['.jpg', '.JPG'],

|

||||

rule=[r'img_(\d+)\.([jJ][pP][gG])', r'gt_img_\1.txt']),

|

||||

```

|

||||

|

||||

For the case of one-to-many, it is usually simple, and the user only needs to specify the annotation file name. For example, for the training set configuration:

|

||||

|

||||

```python

|

||||

gatherer=dict(type='MonoGatherer', ann_name='train.txt'),

|

||||

```

|

||||

|

||||

MMOCR has also made conventions on the return value of `Gatherer`. `Gatherer` returns a tuple with two elements. The first element is a list of image paths (including all image paths) or the folder containing all images. The second element is a list of annotation file paths (including all annotation file paths) or the path of the annotation file (the annotation file contains all image annotation information). Specifically, the return value of `PairGatherer` is (list of image paths, list of annotation file paths), as shown below:

|

||||

|

||||

```python

|

||||

(['{taskname}_imgs/{split}/img_1.jpg', '{taskname}_imgs/{split}/img_2.jpg', '{taskname}_imgs/{split}/img_3.JPG'],

|

||||

['annotations/{split}/gt_img_1.txt', 'annotations/{split}/gt_img_2.txt', 'annotations/{split}/gt_img_3.txt'])

|

||||

```

|

||||

|

||||

`MonoGatherer` returns a tuple containing the path to the image directory and the path to the annotation file, as follows:

|

||||

|

||||

```python

|

||||

('{taskname}/{split}', 'annotations/gt.txt')

|

||||

```

|

||||

|

||||

#### Dataset parsing (Parser)

|

||||

|

||||

`Parser` is mainly used to parse the original annotation files. Since the original annotation formats vary greatly, MMOCR provides `BaseParser` as a base class, which users can inherit to implement their own `Parser`. In `BaseParser`, MMOCR has designed two interfaces: `parse_files` and `parse_file`, where the annotation parsing is conventionally carried out. For the two different input situations of `Gatherer` (many-to-many, one-to-many), the implementations of these two interfaces should be different.

|

||||

|

||||

- `BaseParser` by default handles the many-to-many situation. Among them, `parse_files` distributes the data in parallel to multiple `parse_file` processes, and each `parse_file` parses the annotation of a single image separately.

|

||||

- For the one-to-many situation, the user needs to override `parse_files` to implement loading the annotation and returning standardized results.

|

||||

|

||||

The interface of `BaseParser` is defined as follows:

|

||||

|

||||

```python

|

||||

class BaseParser:

|

||||

def __call__(self, img_paths, ann_paths):

|

||||

return self.parse_files(img_paths, ann_paths)

|

||||

|

||||

def parse_files(self, img_paths: Union[List[str], str],

|

||||

ann_paths: Union[List[str], str]) -> List[Tuple]:

|

||||

samples = track_parallel_progress_multi_args(

|

||||

self.parse_file, (img_paths, ann_paths), nproc=self.nproc)

|

||||

return samples

|

||||

|

||||

@abstractmethod

|

||||

def parse_file(self, img_path: str, ann_path: str) -> Tuple:

|

||||

|

||||

raise NotImplementedError

|

||||

```

|

||||

|

||||

In order to ensure the uniformity of subsequent modules, MMOCR has made conventions for the return values of `parse_files` and `parse_file`. The return value of `parse_file` is a tuple, the first element of which is the image path, and the second element is the annotation information. The annotation information is a list, each element of which is a dictionary with the fields `poly`, `text`, and `ignore`, as shown below:

|

||||

|

||||

```python

|

||||

# An example of returned values:

|

||||

(

|

||||

'imgs/train/xxx.jpg',

|

||||

[

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/ch4_training_images.zip',

|

||||

save_name='ic15_textdet_train_img.zip',

|

||||

md5='c51cbace155dcc4d98c8dd19d378f30d',

|

||||

split=['train'],

|

||||

content=['image'],

|

||||

mapping=[['ic15_textdet_train_img', 'imgs/train']]),

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/ch4_test_images.zip',

|

||||

save_name='ic15_textdet_test_img.zip',

|

||||

md5='97e4c1ddcf074ffcc75feff2b63c35dd',

|

||||

split=['test'],

|

||||

content=['image'],

|

||||

mapping=[['ic15_textdet_test_img', 'imgs/test']]),

|

||||

poly=[0, 1, 1, 1, 1, 0, 0, 0],

|

||||

text='hello',

|

||||

ignore=False),

|

||||

...

|

||||

]

|

||||

)

|

||||

```

|

||||

|

||||

The output of `parse_files` is a list, and each element in the list is the return value of `parse_file`. An example is:

|

||||

|

||||

```python

|

||||

[

|

||||

(

|

||||

'imgs/train/xxx.jpg',

|

||||

[

|

||||

dict(

|

||||

poly=[0, 1, 1, 1, 1, 0, 0, 0],

|

||||

text='hello',

|

||||

ignore=False),

|

||||

...

|

||||

]

|

||||

),

|

||||

...

|

||||

]

|

||||

```

|

||||

|

||||

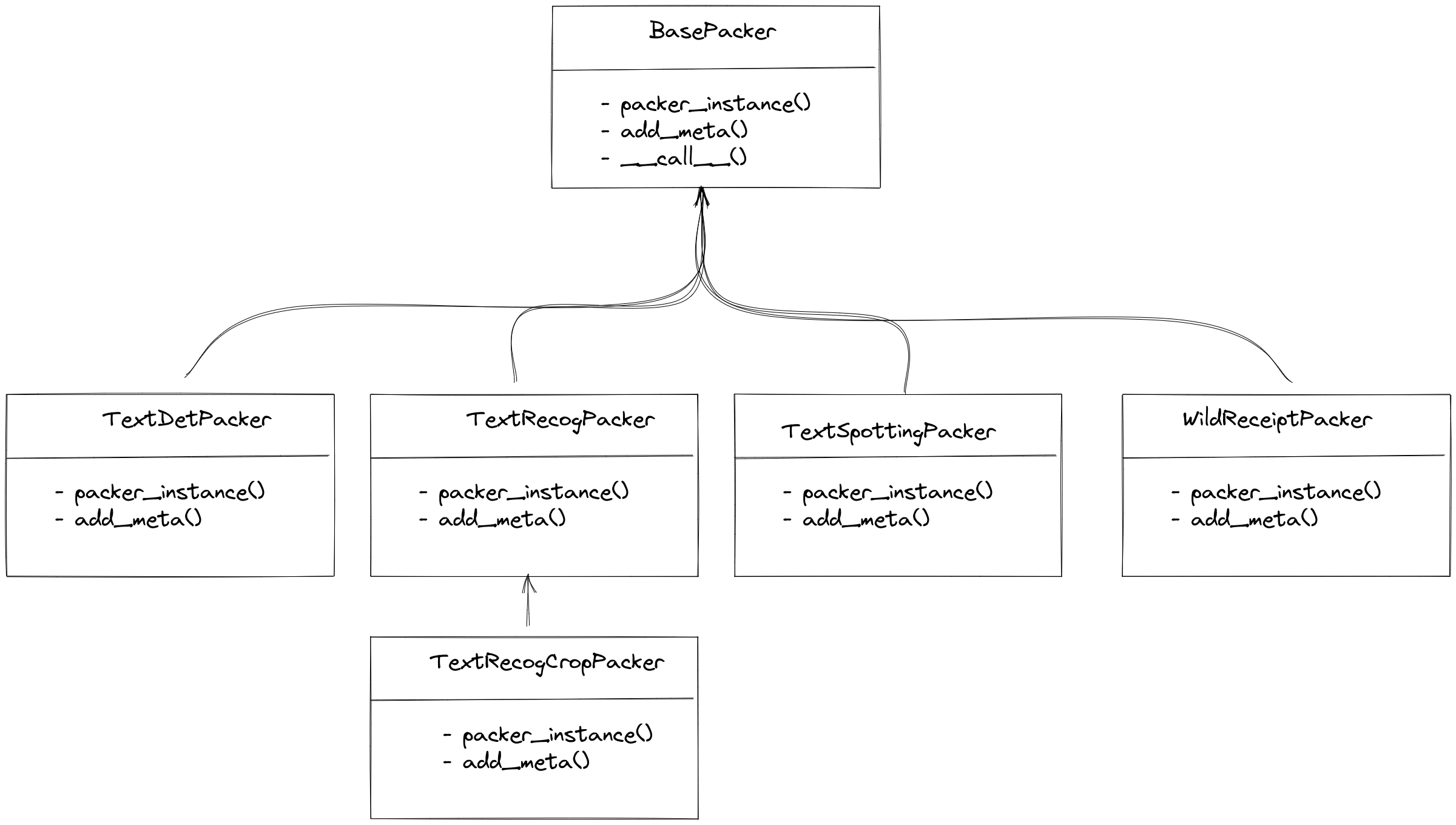

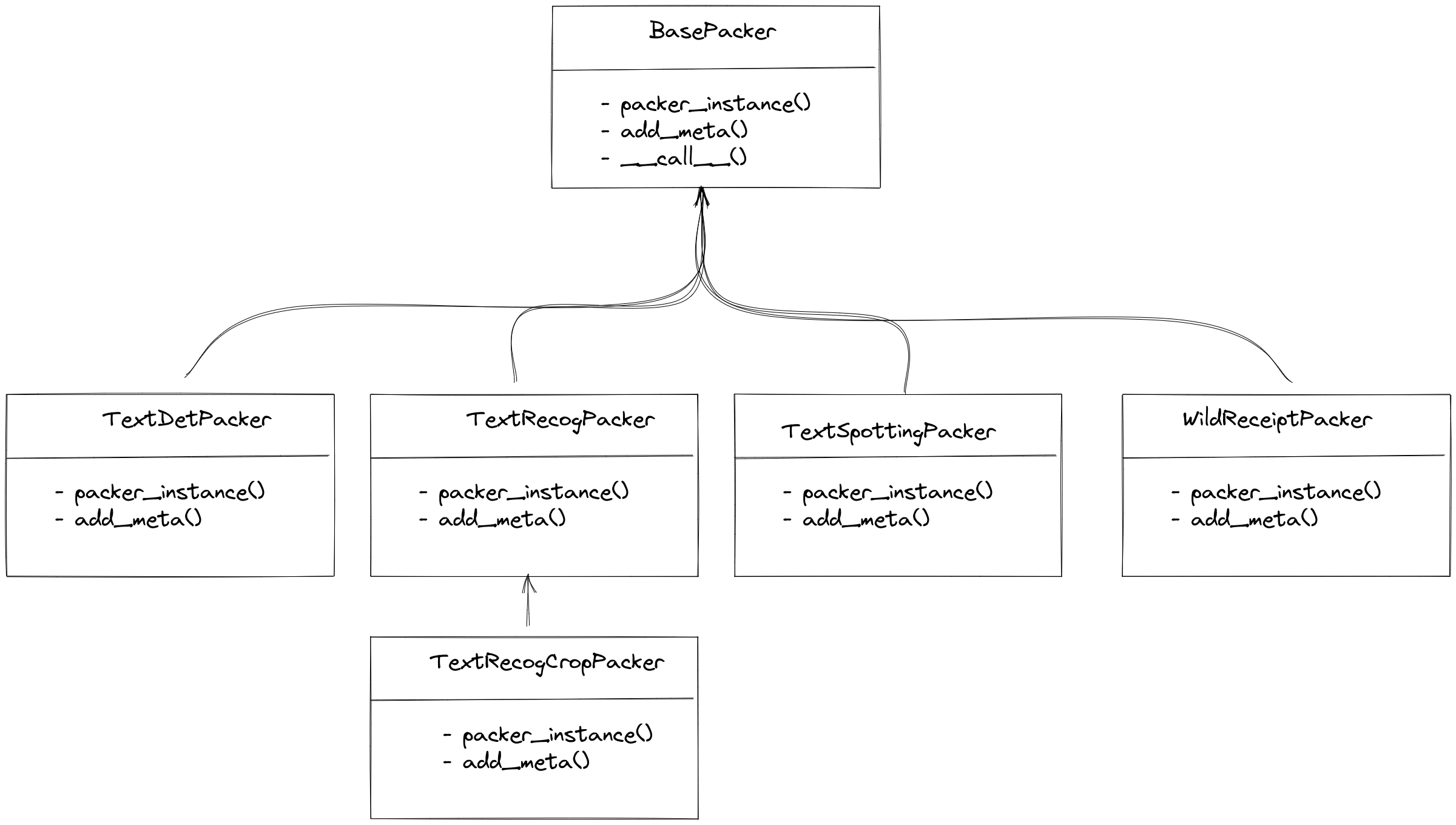

#### Dataset Conversion (Packer)

|

||||

|

||||

`Packer` is mainly used to convert data into a unified annotation format, because the input data is the output of parsers and the format has been fixed. Therefore, the packer only needs to convert the input format into a unified annotation format for each task. Currently, MMOCR supports tasks such as text detection, text recognition, end-to-end OCR, and key information extraction, and MMOCR has a corresponding packer for each task, as shown below:

|

||||

|

||||

|

||||

|

||||

For text detection, end-to-end OCR, and key information extraction, MMOCR has a unique corresponding `Packer`. However, for text recognition, MMOCR provides two `Packer` options: `TextRecogPacker` and `TextRecogCropPacker`, due to the existence of two types of datasets:

|

||||

|

||||

- Each image is a recognition sample, and the annotation information returned by the `parser` is only a `dict(text='xxx')`. In this case, `TextRecogPacker` can be used.

|

||||

- The dataset does not crop text from the image, and it essentially contains end-to-end OCR annotations that include the position information of the text and the corresponding text information. `TextRecogCropPacker` will crop the text from the image and then convert it into the unified format for text recognition.

|

||||

|

||||

#### Annotation Saving (Dumper)

|

||||

|

||||

The `dumper` module is used to determine what format the data should be saved in. Currently, MMOCR supports `JsonDumper`, `WildreceiptOpensetDumper`, and `TextRecogLMDBDumper`. They are used to save data in the standard MMOCR JSON format, the Wildreceipt format, and the LMDB format commonly used in the academic community for text recognition, respectively.

|

||||

|

||||

#### Delete files (Delete)

|

||||

|

||||

When processing a dataset, temporary files that are not needed may be generated. Here, a list of such files or folders can be passed in, which will be deleted when the conversion is finished.

|

||||

|

||||

#### Generate the configuration file for the dataset (ConfigGenerator)

|

||||

|

||||

In order to automatically generate basic configuration files after preparing the dataset, MMOCR has implemented `TextDetConfigGenerator`, `TextRecogConfigGenerator`, and `TextSpottingConfigGenerator` for each task. The main parameters supported by these generators are as follows:

|

||||

|

||||

| Field Name | Meaning |

|

||||

| ----------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| data_root | Root directory where the dataset is stored. |

|

||||

| train_anns | Path to the training set annotations in the configuration file. If not specified, it defaults to `[dict(ann_file='{taskname}_train.json', dataset_postfix='']`. |

|

||||

| val_anns | Path to the validation set annotations in the configuration file. If not specified, it defaults to an empty string. |

|

||||

| test_anns | Path to the test set annotations in the configuration file. If not specified, it defaults to `[dict(ann_file='{taskname}_test.json', dataset_postfix='']`. |

|

||||

| config_path | Path to the directory where the configuration files for the algorithm are stored. The configuration generator will write the default configuration to `{config_path}/{taskname}/_base_/datasets/{dataset_name}.py`. If not specified, it defaults to `configs/`. |

|

||||

|

||||

After preparing all the files for the dataset, the configuration generator will automatically generate the basic configuration files required to call the dataset. Below is a minimal example of a `TextDetConfigGenerator` configuration:

|

||||

|

||||

```python

|

||||

config_generator = dict(type='TextDetConfigGenerator')

|

||||

```

|

||||

|

||||

The generated file will be placed by default under `configs/{task}/_base_/datasets/`. In this example, the basic configuration file for the ICDAR 2015 dataset will be generated at `configs/textdet/_base_/datasets/icdar2015.py`.

|

||||

|

||||

```python

|

||||

icdar2015_textdet_data_root = 'data/icdar2015'

|

||||

|

||||

icdar2015_textdet_train = dict(

|

||||

type='OCRDataset',

|

||||

data_root=icdar2015_textdet_data_root,

|

||||

ann_file='textdet_train.json',

|

||||

filter_cfg=dict(filter_empty_gt=True, min_size=32),

|

||||

pipeline=None)

|

||||

|

||||

icdar2015_textdet_test = dict(

|

||||

type='OCRDataset',

|

||||

data_root=icdar2015_textdet_data_root,

|

||||

ann_file='textdet_test.json',

|

||||

test_mode=True,

|

||||

pipeline=None)

|

||||

```

|

||||

|

||||

If the dataset is special and there are several variants of the annotations, the configuration generator also supports generating variables pointing to each variant in the base configuration. However, this requires users to differentiate them by using different `dataset_postfix` when setting up. For example, the ICDAR 2015 text recognition dataset has two annotation versions for the test set, the original version and the 1811 version, which can be specified in `test_anns` as follows:

|

||||

|

||||

```python

|

||||

config_generator = dict(

|

||||

type='TextRecogConfigGenerator',

|

||||

test_anns=[

|

||||

dict(ann_file='textrecog_test.json'),

|

||||

dict(dataset_postfix='857', ann_file='textrecog_test_857.json')

|

||||

])

|

||||

```

|

||||

|

||||

The default type of `data_obtainer` is `NaiveDataObtainer`, which mainly downloads and decompresses the original files to the specified directory. Here, we configure the URL, save name, MD5 value, etc. of the original dataset files through the `files` parameter. The `mapping` parameter is used to specify the path where the data is decompressed or moved. In addition, the two optional parameters `split` and `content` respectively indicate the content type stored in the compressed file and the corresponding dataset.

|

||||

The configuration generator will generate the following configurations:

|

||||

|

||||

```python

|

||||

data_converter = dict(

|

||||

type='TextDetDataConverter',

|

||||

splits=['train', 'test'],

|

||||

data_root=data_root,

|

||||

gatherer=dict(

|

||||

type='pair_gather',

|

||||

suffixes=['.jpg', '.JPG'],

|

||||

rule=[r'img_(\d+)\.([jJ][pP][gG])', r'gt_img_\1.txt']),

|

||||

parser=dict(type='ICDARTxtTextDetAnnParser'),

|

||||

icdar2015_textrecog_data_root = 'data/icdar2015'

|

||||

|

||||

icdar2015_textrecog_train = dict(

|

||||

type='OCRDataset',

|

||||

data_root=icdar2015_textrecog_data_root,

|

||||

ann_file='textrecog_train.json',

|

||||

pipeline=None)

|

||||

|

||||

icdar2015_textrecog_test = dict(

|

||||

type='OCRDataset',

|

||||

data_root=icdar2015_textrecog_data_root,

|

||||

ann_file='textrecog_test.json',

|

||||

test_mode=True,

|

||||

pipeline=None)

|

||||

|

||||

icdar2015_1811_textrecog_test = dict(

|

||||

type='OCRDataset',

|

||||

data_root=icdar2015_textrecog_data_root,

|

||||

ann_file='textrecog_test_1811.json',

|

||||

test_mode=True,

|

||||

pipeline=None)

|

||||

```

|

||||

|

||||

With this file, MMOCR can directly import this dataset into the `dataloader` from the model configuration file (the following sample is excerpted from [`configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py`](/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py)):

|

||||

|

||||

```python

|

||||

_base_ = [

|

||||

'../_base_/datasets/icdar2015.py',

|

||||

# ...

|

||||

]

|

||||

|

||||

# dataset settings

|

||||

icdar2015_textdet_train = _base_.icdar2015_textdet_train

|

||||

icdar2015_textdet_test = _base_.icdar2015_textdet_test

|

||||

# ...

|

||||

|

||||

train_dataloader = dict(

|

||||

dataset=icdar2015_textdet_train)

|

||||

|

||||

val_dataloader = dict(

|

||||

dataset=icdar2015_textdet_test)

|

||||

|

||||

test_dataloader = val_dataloader

|

||||

```

|

||||

|

||||

```{note}

|

||||

By default, the configuration generator does not overwrite existing base configuration files unless the user manually specifies `overwrite-cfg` when running the script.

|

||||

```

|

||||

|

||||

## Adding a new dataset to Dataset Preparer

|

||||

|

||||

### Adding Public Datasets

|

||||

|

||||

MMOCR has already supported many [commonly used public datasets](./datasetzoo.md). If the dataset you want to use has not been supported yet and you are willing to [contribute to the MMOCR](../../notes/contribution_guide.md) open-source community, you can follow the steps below to add a new dataset.

|

||||

|

||||

In the following example, we will show you how to add the **ICDAR2013** dataset step by step.

|

||||

|

||||

#### Adding `metafile.yml`

|

||||

|

||||

First, make sure that the dataset you want to add does not already exist in `dataset_zoo/`. Then, create a new folder named after the dataset you want to add, such as `icdar2013/` (usually, use lowercase alphanumeric characters without symbols to name the dataset). In the `icdar2013/` folder, create a `metafile.yml` file and fill in the basic information of the dataset according to the following template:

|

||||

|

||||

```yaml

|

||||

Name: 'Incidental Scene Text IC13'

|

||||

Paper:

|

||||

Title: ICDAR 2013 Robust Reading Competition

|

||||

URL: https://www.imlab.jp/publication_data/1352/icdar_competition_report.pdf

|

||||

Venue: ICDAR

|

||||

Year: '2013'

|

||||

BibTeX: '@inproceedings{karatzas2013icdar,

|

||||

title={ICDAR 2013 robust reading competition},

|

||||

author={Karatzas, Dimosthenis and Shafait, Faisal and Uchida, Seiichi and Iwamura, Masakazu and i Bigorda, Lluis Gomez and Mestre, Sergi Robles and Mas, Joan and Mota, David Fernandez and Almazan, Jon Almazan and De Las Heras, Lluis Pere},

|

||||

booktitle={2013 12th international conference on document analysis and recognition},

|

||||

pages={1484--1493},

|

||||

year={2013},

|

||||

organization={IEEE}}'

|

||||

Data:

|

||||

Website: https://rrc.cvc.uab.es/?ch=2

|

||||

Language:

|

||||

- English

|

||||

Scene:

|

||||

- Natural Scene

|

||||

Granularity:

|

||||

- Word

|

||||

Tasks:

|

||||

- textdet

|

||||

- textrecog

|

||||

- textspotting

|

||||

License:

|

||||

Type: N/A

|

||||

Link: N/A

|

||||

Format: .txt

|

||||

Keywords:

|

||||

- Horizontal

|

||||

```

|

||||

|

||||

#### Add Annotation Examples

|

||||

|

||||

Finally, you can add an annotation example file `sample_anno.md` under the `dataset_zoo/icdar2013/` directory to help the documentation script add annotation examples when generating documentation. The annotation example file is a Markdown file that typically contains the raw data format of a single sample. For example, the following code block shows a sample data file for the ICDAR2013 dataset:

|

||||

|

||||

````markdown

|

||||

**Text Detection**

|

||||

|

||||

```text

|

||||

# train split

|

||||

# x1 y1 x2 y2 "transcript"

|

||||

|

||||

158 128 411 181 "Footpath"

|

||||

443 128 501 169 "To"

|

||||

64 200 363 243 "Colchester"

|

||||

|

||||

# test split

|

||||

# x1, y1, x2, y2, "transcript"

|

||||

|

||||

38, 43, 920, 215, "Tiredness"

|

||||

275, 264, 665, 450, "kills"

|

||||

0, 699, 77, 830, "A"

|

||||

````

|

||||

|

||||

#### Add configuration files for corresponding tasks

|

||||

|

||||

In the `dataset_zoo/icdar2013` directory, add a `.py` configuration file named after the task. For example, `textdet.py`, `textrecog.py`, `textspotting.py`, `kie.py`, etc. The configuration template is shown below:

|

||||

|

||||

```python

|

||||

data_root = ''

|

||||

data_cache = 'data/cache'

|

||||

train_prepare = dict(

|

||||

obtainer=dict(

|

||||

type='NaiveObtainer',

|

||||

data_cache=data_cache,

|

||||

files=[

|

||||

dict(

|

||||

url='xx',

|

||||

md5='',

|

||||

save_name='xxx',

|

||||

mapping=list())

|

||||

]),

|

||||

gatherer=dict(type='xxxGatherer', **kwargs),

|

||||

parser=dict(type='xxxParser', **kwargs),

|

||||

packer=dict(type='TextxxxPacker'), # Packer for the task

|

||||

dumper=dict(type='JsonDumper'),

|

||||

delete=['annotations', 'ic15_textdet_test_img', 'ic15_textdet_train_img'])

|

||||

)

|

||||

test_prepare = dict(

|

||||

obtainer=dict(

|

||||

type='NaiveObtainer',

|

||||

data_cache=data_cache,

|

||||

files=[

|

||||

dict(

|

||||

url='xx',

|

||||

md5='',

|

||||

save_name='xxx',

|

||||

mapping=list())

|

||||

]),

|

||||

gatherer=dict(type='xxxGatherer', **kwargs),

|

||||

parser=dict(type='xxxParser', **kwargs),

|

||||

packer=dict(type='TextxxxPacker'), # Packer for the task

|

||||

dumper=dict(type='JsonDumper'),

|

||||

)

|

||||

```

|

||||

|

||||

```{warning}

|

||||

This section is outdated and not yet synchronized with its Chinese version, please switch the language for the latest information.

|

||||

```

|

||||

Taking the file detection task as an example, let's introduce the specific content of the configuration file. In general, users do not need to implement new `obtainer`, `gatherer`, `packer`, or `dumper`, but usually need to implement a new `parser` according to the annotation format of the dataset.

|

||||

|

||||

`data_converter` is responsible for loading and converting the original to the format supported by MMOCR. We provide a number of built-in data converters for different tasks, such as `TextDetDataConverter`, `TextRecogDataConverter`, `TextSpottingDataConverter`, and `WildReceiptConverter` (Since we only support WildReceipt dataset for KIE task at present, we only provide this converter for now).

|

||||

Regarding the configuration of `obtainer`, we will not go into detail here, and you can refer to [Data set download, extraction, and movement (Obtainer)](#Dataset-download-extraction-and-movement-obtainer).

|

||||

|

||||

Take the text detection task as an example, `TextDetDataConverter` mainly completes the following work:

|

||||

|

||||

- Collect and match the images and original annotation files, such as the image `img_1.jpg` and the annotation `gt_img_1.txt`

|

||||

- Load and parse the original annotations to obtain necessary information such as the bounding box and text

|

||||

- Convert the parsed data to the format supported by MMOCR

|

||||

- Dump the converted data to the specified path and format

|

||||

|

||||

The above steps can be configured separately through `gatherer`, `parser`, `dumper`.

|

||||

|

||||

Specifically, the `gatherer` is used to collect and match the images and annotations in the original dataset. Typically, there are two relations between images and annotations, one is many-to-many, the other is many-to-one.

|

||||

For the `gatherer`, by observing the obtained ICDAR2013 dataset files, we found that each image has a corresponding `.txt` format annotation file:

|

||||

|

||||

```text

|

||||

many-to-many

|

||||

├── img_1.jpg

|

||||

├── gt_img_1.txt

|

||||

├── img_2.jpg

|

||||

├── gt_img_2.txt

|

||||

├── img_3.JPG

|

||||

├── gt_img_3.txt

|

||||

|

||||

one-to-many

|

||||

├── img_1.jpg

|

||||

├── img_2.jpg

|

||||

├── img_3.JPG

|

||||

├── gt.txt

|

||||

data_root

|

||||

├── textdet_imgs/train/

|

||||

│ ├── img_1.jpg

|

||||

│ ├── img_2.jpg

|

||||

│ └── ...

|

||||

├── annotations/train/

|

||||

│ ├── gt_img_1.txt

|

||||

│ ├── gt_img_2.txt

|

||||

│ └── ...

|

||||

```

|

||||

|

||||

Therefore, we provide two built-in gatherers, `pair_gather` and `mono_gather`, to handle the two cases. `pair_gather` is used for the case of many-to-many, and `mono_gather` is used for the case of one-to-many. `pair_gather` needs to specify the `suffixes` parameter to indicate the suffix of the image, such as `suffixes=[.jpg,.JPG]` in the above example. In addition, we need to specify the corresponding relationship between the image and the annotation file through the regular expression, such as `rule=[r'img_(\d+)\.([jJ][pP][gG])',r'gt_img_\1.txt']` in the above example. Where `\d+` is used to match the serial number of the image, `([jJ][pP][gG])` is used to match the suffix of the image, and `\_1` matches the serial number of the image and the serial number of the annotation file.

|

||||

Moreover, the name of each annotation file corresponds to the image: `gt_img_1.txt` corresponds to `img_1.jpg`, and so on. Therefore, `PairGatherer` can be used to match them.

|

||||

|

||||

When the image and annotation file are matched, the original annotations will be parsed. Since the annotation format is usually varied from dataset to dataset, the parsers are usually dataset related. Then, the parser will pack the required data into the MMOCR format.

|

||||

```python

|

||||

gatherer=dict(

|

||||

type='PairGatherer',

|

||||

img_suffixes=['.jpg'],

|

||||

rule=[r'(\w+)\.jpg', r'gt_\1.txt'])

|

||||

```

|

||||

|

||||

Finally, we can specify the dumpers to decide the data format. Currently, we support `JsonDumper`, `WildreceiptOpensetDumper`, and `TextRecogLMDBDumper`. They are used to save the data in the standard MMOCR Json format, Wildreceipt format, and the LMDB format commonly used in academia in the field of text recognition, respectively.

|

||||

The first regular expression in the rule is used to match the image file name, and the second regular expression is used to match the annotation file name. Here, `(\w+)` is used to match the image file name, and `gt_\1.txt` is used to match the annotation file name, where `\1` represents the content matched by the first regular expression. That is, it replaces `img_xx.jpg` with `gt_img_xx.txt`.

|

||||

|

||||

Next, you need to implement a `parser` to parse the original annotation files into a standard format. Usually, before adding a new dataset, users can browse the [details page](./datasetzoo.md) of the supported datasets and check if there is a dataset with the same format. If there is, you can use the parser of that dataset directly. Otherwise, you need to implement a new format parser.

|

||||

|

||||

Data format parsers are stored in the `mmocr/datasets/preparers/parsers` directory. All parsers need to inherit from `BaseParser` and implement the `parse_file` or `parse_files` method. For more information, please refer to [Parsing original annotations (Parser)](#dataset-parsing-parser).

|

||||

|

||||

By observing the annotation files of the ICDAR2013 dataset:

|

||||

|

||||

```text

|

||||

158 128 411 181 "Footpath"

|

||||

443 128 501 169 "To"

|

||||

64 200 363 243 "Colchester"

|

||||

542, 710, 938, 841, "break"

|

||||

87, 884, 457, 1021, "could"

|

||||

517, 919, 831, 1024, "save"

|

||||

```

|

||||

|

||||

We found that the built-in `ICDARTxtTextDetAnnParser` already meets the requirements, so we can directly use this parser and configure it in the `preparer`.

|

||||

|

||||

```python

|

||||

parser=dict(

|

||||

type='ICDARTxtTextDetAnnParser',

|

||||

remove_strs=[',', '"'],

|

||||

encoding='utf-8',

|

||||

format='x1 y1 x2 y2 trans',

|

||||

separator=' ',

|

||||

mode='xyxy')

|

||||

```

|

||||

|

||||

In the configuration for the `ICDARTxtTextDetAnnParser`, `remove_strs=[',', '"']` is specified to remove extra quotes and commas in the annotation files. In the `format` section, `x1 y1 x2 y2 trans` indicates that each line in the annotation file contains four coordinates and a text content separated by spaces (`separator`=' '). Also, `mode` is set to `xyxy`, which means that the coordinates in the annotation file are the coordinates of the top-left and bottom-right corners, so that `ICDARTxtTextDetAnnParser` can parse the annotations into a unified format.

|

||||

|

||||

For the `packer`, taking the file detection task as an example, its `packer` is `TextDetPacker`, and its configuration is as follows:

|

||||

|

||||

```python

|

||||

packer=dict(type='TextDetPacker')

|

||||

```

|

||||

|

||||

Finally, specify the `dumper`, which is generally saved in json format. Its configuration is as follows:

|

||||

|

||||

```python

|

||||

dumper=dict(type='JsonDumper')

|

||||

```

|

||||

|

||||

After the above configuration, the configuration file for the ICDAR2013 training set is as follows:

|

||||

|

||||

```python

|

||||

train_preparer = dict(

|

||||

obtainer=dict(

|

||||

type='NaiveDataObtainer',

|

||||

cache_path=cache_path,

|

||||

files=[

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/'

|

||||

'Challenge2_Training_Task12_Images.zip',

|

||||

save_name='ic13_textdet_train_img.zip',

|

||||

md5='a443b9649fda4229c9bc52751bad08fb',

|

||||

content=['image'],

|

||||

mapping=[['ic13_textdet_train_img', 'textdet_imgs/train']]),

|

||||

dict(

|

||||

url='https://rrc.cvc.uab.es/downloads/'

|

||||

'Challenge2_Training_Task1_GT.zip',

|

||||

save_name='ic13_textdet_train_gt.zip',

|

||||

md5='f3a425284a66cd67f455d389c972cce4',

|

||||

content=['annotation'],

|

||||

mapping=[['ic13_textdet_train_gt', 'annotations/train']]),

|

||||

]),

|

||||

gatherer=dict(

|

||||

type='PairGatherer',

|

||||

img_suffixes=['.jpg'],

|

||||

rule=[r'(\w+)\.jpg', r'gt_\1.txt']),

|

||||

parser=dict(

|

||||

type='ICDARTxtTextDetAnnParser',

|

||||

remove_strs=[',', '"'],

|

||||

format='x1 y1 x2 y2 trans',

|

||||

separator=' ',

|

||||

mode='xyxy'),

|

||||

packer=dict(type='TextDetPacker'),

|

||||

dumper=dict(type='JsonDumper'),

|

||||

)

|

||||

```

|

||||

|

||||

To automatically generate the basic configuration after the dataset is prepared, you also need to configure the corresponding task's `config_generator`.

|

||||

|

||||

In this example, since it is a text detection task, you only need to set the generator to `TextDetConfigGenerator`.

|

||||

|

||||

```python

|

||||

config_generator = dict(type='TextDetConfigGenerator')

|

||||

```

|

||||

|

||||

### Use DataPreparer to prepare customized dataset

|

||||

|

||||

|

|

|

|||

|

|

@ -1,10 +1,10 @@

|

|||

# Useful Tools

|

||||

|

||||

## Analysis Tools

|

||||

## Visualization Tools

|

||||

|

||||

### Dataset Visualization Tool

|

||||

|

||||

MMOCR provides a dataset visualization tool `tools/analysis_tools/browse_datasets.py` to help users troubleshoot possible dataset-related problems. You just need to specify the path to the training config (usually stored in `configs/textdet/dbnet/xxx.py`) or the dataset config (usually stored in `configs/textdet/_base_/datasets/xxx.py`), and the tool will automatically plots the transformed (or original) images and labels.

|

||||

MMOCR provides a dataset visualization tool `tools/visualizations/browse_datasets.py` to help users troubleshoot possible dataset-related problems. You just need to specify the path to the training config (usually stored in `configs/textdet/dbnet/xxx.py`) or the dataset config (usually stored in `configs/textdet/_base_/datasets/xxx.py`), and the tool will automatically plots the transformed (or original) images and labels.

|

||||

|

||||

#### Usage

|

||||

|

||||

|

|

@ -25,11 +25,11 @@ python tools/visualizations/browse_dataset.py \

|

|||

| config | str | (required) Path to the config. |

|

||||

| -o, --output-dir | str | If GUI is not available, specifying an output path to save the visualization results. |

|

||||

| -p, --phase | str | Phase of dataset to visualize. Use "train", "test" or "val" if you just want to visualize the default split. It's also possible to be a dataset variable name, which might be useful when a dataset split has multiple variants in the config. |

|

||||

| -m, --mode | `original`, `transformed`, `pipeline` | Display mode: display original pictures or transformed pictures or comparison pictures. `original` only visualizes the original dataset & annotations; `transformed` shows the resulting images processed through all the transforms; `pipeline` shows all the intermediate images. Defaults to "transformed". |

|

||||

| -m, --mode | `original`, `transformed`, `pipeline` | Display mode: display original pictures or transformed pictures or comparison pictures.`original` only visualizes the original dataset & annotations; `transformed` shows the resulting images processed through all the transforms; `pipeline` shows all the intermediate images. Defaults to "transformed". |

|

||||

| -t, --task | `auto`, `textdet`, `textrecog` | Specify the task type of the dataset. If `auto`, the task type will be inferred from the config. If the script is unable to infer the task type, you need to specify it manually. Defaults to `auto`. |

|

||||

| -n, --show-number | int | The number of samples to visualized. If not specified, display all images in the dataset. |

|

||||

| -i, --show-interval | float | Interval of visualization (s), defaults to 2. |

|

||||

| --cfg-options | float | Override configs. [Example](./config.md#command-line-modification) |

|

||||

| --cfg-options | float | Override configs.[Example](./config.md#command-line-modification) |

|

||||

|

||||

#### Examples

|

||||

|

||||

|

|

@ -37,7 +37,7 @@ The following example demonstrates how to use the tool to visualize the training

|

|||

|

||||

```Bash

|

||||

# Example: Visualizing the training data used by dbnet_r50dcn_v2_fpnc_1200e_icadr2015 model

|

||||

python tools/analysis_tools/browse_dataset.py configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

|

||||

python tools/visualizations/browse_dataset.py configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

|

||||

```

|

||||

|

||||

By default, the visualization mode is "transformed", and you will see the images & annotations being transformed by the pipeline:

|

||||

|

|

@ -49,7 +49,7 @@ By default, the visualization mode is "transformed", and you will see the images

|

|||

If you just want to visualize the original dataset, simply set the mode to "original":

|

||||

|

||||

```Bash

|

||||

python tools/analysis_tools/browse_dataset.py configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py -m original

|

||||

python tools/visualizations/browse_dataset.py configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py -m original

|

||||

```

|

||||

|

||||

<div align=center><img src="https://user-images.githubusercontent.com/22607038/206646570-382d0f26-908a-4ab4-b1a7-5cc31fa70c5f.jpg" style=" width: auto; height: 40%; "></div>

|

||||

|

|

@ -57,7 +57,7 @@ python tools/analysis_tools/browse_dataset.py configs/textdet/dbnet/dbnet_resnet

|

|||

Or, to visualize the entire pipeline:

|

||||

|

||||

```Bash

|

||||

python tools/analysis_tools/browse_dataset.py configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py -m pipeline

|

||||

python tools/visualizations/browse_dataset.py configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py -m pipeline

|

||||

```

|

||||

|

||||

<div align=center><img src="https://user-images.githubusercontent.com/22607038/206637571-287640c0-1f55-453f-a2fc-9f9734b9593f.jpg" style=" width: auto; height: 40%; "></div>

|

||||

|

|

@ -65,7 +65,7 @@ python tools/analysis_tools/browse_dataset.py configs/textdet/dbnet/dbnet_resnet

|

|||

In addition, users can also visualize the original images and their corresponding labels of the dataset by specifying the path to the dataset config file, for example:

|

||||

|

||||

```Bash

|

||||

python tools/analysis_tools/browse_dataset.py configs/textrecog/_base_/datasets/icdar2015.py

|

||||

python tools/visualizations/browse_dataset.py configs/textrecog/_base_/datasets/icdar2015.py

|

||||

```

|

||||

|

||||

Some datasets might have multiple variants. For example, the test split of `icdar2015` textrecog dataset has two variants, which the [base dataset config](/configs/textrecog/_base_/datasets/icdar2015.py) defines as follows:

|

||||

|

|

@ -85,11 +85,58 @@ icdar2015_1811_textrecog_test = dict(

|

|||

In this case, you can specify the variant name to visualize the corresponding dataset:

|

||||

|

||||

```Bash

|

||||

python tools/analysis_tools/browse_dataset.py configs/textrecog/_base_/datasets/icdar2015.py -p icdar2015_1811_textrecog_test

|

||||

python tools/visualizations/browse_dataset.py configs/textrecog/_base_/datasets/icdar2015.py -p icdar2015_1811_textrecog_test

|

||||

```

|

||||

|

||||

Based on this tool, users can easily verify if the annotation of a custom dataset is correct.

|

||||

|

||||

### Hyper-parameter Scheduler Visualization

|

||||

|

||||

This tool aims to help the user to check the hyper-parameter scheduler of the optimizer (without training), which support the "learning rate" or "momentum"

|

||||

|

||||

#### Introduce the scheduler visualization tool

|

||||

|

||||

```bash

|

||||

python tools/visualizations/vis_scheduler.py \

|

||||

${CONFIG_FILE} \

|

||||

[-p, --parameter ${PARAMETER_NAME}] \

|

||||

[-d, --dataset-size ${DATASET_SIZE}] \

|

||||

[-n, --ngpus ${NUM_GPUs}] \

|

||||