
MMPretrain Gradio Demo

This is a Gradio demo for the inference tasks supported by MMPretrain.

Currently supported tasks:

- Image Classification
- Image-To-Image Retrieval
- Text-To-Image Retrieval (requires multi-modality support)
- Image Caption (requires multi-modality support)
- Visual Question Answering (requires multi-modality support)
- Visual Grounding (requires multi-modality support)

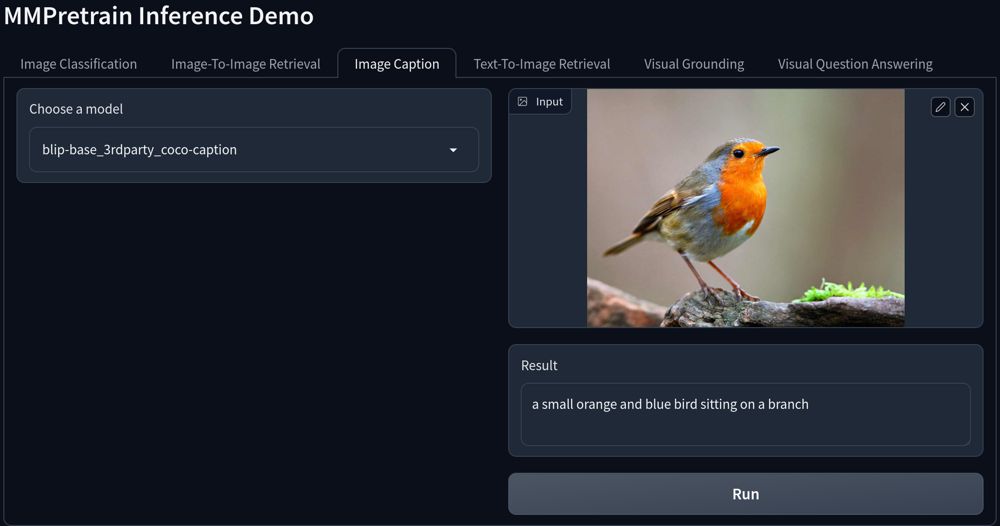

Preview

Requirements

To run the demo, first install MMPretrain. To enable the multi-modality tasks, install it with the extra multi-modality dependencies:

```shell
# At the MMPretrain root folder
pip install -e ".[multimodal]"
```
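To confirm the install before launching, a small standard-library sketch like the one below reports which packages cannot be imported. The module list is an assumption: `transformers` is used here only as an example of a multi-modality dependency, so check the `multimodal` extra in your MMPretrain checkout for the actual list.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of top-level module `names` that are not installed."""
    # find_spec returns None when a top-level module cannot be found.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumption: "transformers" stands in for the multi-modality extras.
    missing = missing_modules(["mmpretrain", "transformers"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All checked packages are importable.")
```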

Then install Gradio (version 3.31.0 or later):

```shell
pip install "gradio>=3.31.0"
```
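Since the demo expects `gradio>=3.31.0`, the following sketch compares the installed version against that minimum using `importlib.metadata`. The version parsing is deliberately naive: it keeps only the leading digits of the first three parts, so `3.31.0rc1` counts as `3.31.0`. For strict PEP 440 comparisons, the `packaging` library's `Version` class is the usual choice.

```python
from importlib import metadata


def parse_version(text):
    """Turn '3.31.0' into (3, 31, 0), keeping only leading digits of each part."""
    parts = []
    for piece in text.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    # Pad short versions such as '4.0' to three components.
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def version_at_least(installed, required):
    """Compare numerically, so '3.9.0' is older than '3.31.0'."""
    return parse_version(installed) >= parse_version(required)


def gradio_is_recent_enough(required="3.31.0"):
    """Return False if gradio is missing or older than `required`."""
    try:
        installed = metadata.version("gradio")
    except metadata.PackageNotFoundError:
        return False
    return version_at_least(installed, required)


if __name__ == "__main__":
    print("gradio >= 3.31.0:", gradio_is_recent_enough())
```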

Start

Then start the Gradio server on the local machine:

```shell
# At the project folder
python launch.py
```

The demo starts a local server at http://127.0.0.1:7860, which you can open in your browser.
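If the page does not load, the sketch below (standard library only) checks whether anything is answering at the default address. It treats any HTTP response, including error statuses, as "up", and it bypasses configured proxies because the target is local. The function name and the timeout are illustrative choices, not part of the demo.

```python
import urllib.error
import urllib.request


def server_is_up(url="http://127.0.0.1:7860", timeout=2.0):
    """Return True if anything answers an HTTP GET at `url`."""
    # An empty ProxyHandler disables proxies taken from the environment,
    # so the request goes straight to the local machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, just with an error status (e.g. 404).
        return True
    except OSError:
        # Connection refused, timeout, unreachable host, etc.
        # (URLError is a subclass of OSError.)
        return False


if __name__ == "__main__":
    print("Demo reachable:", server_is_up())
```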

To share the demo with others, set `share=True` in `demo.launch()`.
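Rather than editing the call each time, one option is to expose sharing as a command-line switch. The sketch below is hypothetical: the `--share` and `--port` flags and `build_launch_kwargs` are not part of `launch.py`, and the placeholder `gr.Blocks` app stands in for the demo the script actually builds. Only `share` and `server_port` are real keyword arguments of Gradio's `launch()`.

```python
import argparse


def build_launch_kwargs(argv=None):
    """Parse hypothetical CLI flags into keyword arguments for demo.launch()."""
    parser = argparse.ArgumentParser(description="Launch the Gradio demo.")
    parser.add_argument("--share", action="store_true",
                        help="Create a public Gradio share link.")
    parser.add_argument("--port", type=int, default=7860,
                        help="Local port to serve on.")
    args = parser.parse_args(argv)
    return {"share": args.share, "server_port": args.port}


if __name__ == "__main__":
    # Imported here so the helper above needs only the standard library.
    import gradio as gr

    # Placeholder UI; in launch.py, `demo` is the app the script already builds.
    with gr.Blocks() as demo:
        gr.Markdown("MMPretrain Gradio Demo")
    demo.launch(**build_launch_kwargs())
```

With a change along these lines, `python launch.py --share` would request a public link while the default invocation stays local-only.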