Mirror of https://github.com/open-mmlab/mmrazor.git (synced 2025-06-03 15:02:54 +08:00)

Revert "[Enhancement] Add benchmark test script" (#263)

Revert "[Enhancement] Add benchmark test script (#262)"

This reverts commit f60cf9c469c1365cb8e1dd62aabb6e2937e1cffa.

parent f60cf9c469
commit 5105489d64

@ -124,7 +124,8 @@ jobs:
docker exec mmrazor pip install -e /mmdetection
docker exec mmrazor pip install -e /mmclassification
docker exec mmrazor pip install -e /mmsegmentation
docker exec mmrazor pip install -r requirements.txt
pip install -r requirements.txt
python -c 'import mmcv; print(mmcv.__version__)'
- run:
name: Build and install
command: |

@ -40,8 +40,6 @@ def parse_args():
        '--work-dir',
        default='work_dirs/benchmark_test',
        help='the dir to save metric')
    parser.add_argument(
        '--replace-ceph', action='store_true', help='load data from ceph')
    parser.add_argument(
        '--run', action='store_true', help='run script directly')
    parser.add_argument(

@ -68,70 +66,12 @@ def parse_args():
    return args


def replace_to_ceph(cfg):

    file_client_args = dict(
        backend='petrel',
        path_mapping=dict({
            './data/coco':
            's3://openmmlab/datasets/detection/coco',
            'data/coco':
            's3://openmmlab/datasets/detection/coco',
            './data/cityscapes':
            's3://openmmlab/datasets/segmentation/cityscapes',
            'data/cityscapes':
            's3://openmmlab/datasets/segmentation/cityscapes',
            './data/imagenet':
            's3://openmmlab/datasets/classification/imagenet',
            'data/imagenet':
            's3://openmmlab/datasets/classification/imagenet',
        }))

    def _process_pipeline(dataset, name):

        def replace_img(pipeline):
            if pipeline['type'] == 'LoadImageFromFile':
                pipeline['file_client_args'] = file_client_args

        def replace_ann(pipeline):
            if pipeline['type'] == 'LoadAnnotations' or pipeline[
                    'type'] == 'LoadPanopticAnnotations':
                pipeline['file_client_args'] = file_client_args

        if 'pipeline' in dataset:
            replace_img(dataset.pipeline[0])
            replace_ann(dataset.pipeline[1])
            if 'dataset' in dataset:
                # dataset wrapper
                replace_img(dataset.dataset.pipeline[0])
                replace_ann(dataset.dataset.pipeline[1])
        else:
            # dataset wrapper
            replace_img(dataset.dataset.pipeline[0])
            replace_ann(dataset.dataset.pipeline[1])

    def _process_evaluator(evaluator, name):
        if evaluator['type'] == 'CocoPanopticMetric':
            evaluator['file_client_args'] = file_client_args

    # half ceph
    _process_pipeline(cfg.train_dataloader.dataset, cfg.filename)
    _process_pipeline(cfg.val_dataloader.dataset, cfg.filename)
    _process_pipeline(cfg.test_dataloader.dataset, cfg.filename)
    _process_evaluator(cfg.val_evaluator, cfg.filename)
    _process_evaluator(cfg.test_evaluator, cfg.filename)


def create_test_job_batch(commands, model_info, args, port):

    fname = model_info.name

    cfg_path = Path(model_info.config)

    cfg = mmengine.Config.fromfile(cfg_path)

    if args.replace_ceph:
        replace_to_ceph(cfg)
    config = Path(model_info.config)
    # assert config.exists(), f'{fname}: {config} not found.'

    http_prefix = 'https://download.openmmlab.com/mmrazor/'
    if 's3://' in args.checkpoint_root:

@ -159,8 +99,6 @@ def create_test_job_batch(commands, model_info, args, port):
    job_name = f'{args.job_name}_{fname}'
    work_dir = Path(args.work_dir) / fname
    work_dir.mkdir(parents=True, exist_ok=True)
    test_cfg_path = work_dir / 'config.py'
    cfg.dump(test_cfg_path)

    if args.quotatype is not None:
        quota_cfg = f'#SBATCH --quotatype {args.quotatype}\n'

@ -172,7 +110,8 @@ def create_test_job_batch(commands, model_info, args, port):
    master_port = f'NASTER_PORT={port}'

    script_name = osp.join('tools', 'test.py')
    job_script = (f'#!/bin/bash\n'
    job_script = (
        f'#!/bin/bash\n'
        f'#SBATCH --output {work_dir}/job.%j.out\n'
        f'#SBATCH --partition={args.partition}\n'
        f'#SBATCH --job-name {job_name}\n'

@ -181,15 +120,14 @@ def create_test_job_batch(commands, model_info, args, port):
        f'#SBATCH --ntasks-per-node={args.gpus}\n'
        f'#SBATCH --ntasks={args.gpus}\n'
        f'#SBATCH --cpus-per-task=5\n\n'
        f'{master_port} {runner} -u {script_name} '
        f'{test_cfg_path} {checkpoint} '
        f'{master_port} {runner} -u {script_name} {config} {checkpoint} '
        f'--work-dir {work_dir} '
        f'--launcher={launcher}\n')

    with open(work_dir / 'job.sh', 'w') as f:
        f.write(job_script)

    commands.append(f'echo "{test_cfg_path}"')
    commands.append(f'echo "{config}"')
    if args.local:
        commands.append(f'bash {work_dir}/job.sh')
    else:

@ -238,7 +176,8 @@ def summary(args):
        expect_result = model_info.results[0].metrics
        summary_result = {
            'expect': expect_result,
            'actual': {k: v
            'actual':
            {METRIC_MAPPINGS[k]: v
             for k, v in latest_result.items()}
        }
        model_results[model_name] = summary_result

@ -10,6 +10,7 @@ An activation boundary for a neuron refers to a separating hyperplane that deter

|

||||

|

||||

<img width="1184" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187422794-d681ed58-293a-4d9e-9e5b-9937289136a7.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

### Classification

|

||||

|

||||

@ -8,6 +8,7 @@ Convolutional neural networks have been widely deployed in various application s

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

#### Classification

|

||||

|

||||

@ -10,6 +10,7 @@ Learning portable neural networks is very essential for computer vision for the

|

||||

|

||||

<img width="910" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187423163-b34896fc-8516-403b-acd7-4c0b8e43af5b.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

### Classification

|

||||

|

||||

@ -22,6 +22,7 @@ almost 10.4 times less parameters outperforms a larger, state-of-the-art teacher

|

||||

|

||||

<img width="743" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187423686-68719140-a978-4a19-a684-42b1d793d1fb.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

### Classification

|

||||

|

||||

@ -10,6 +10,7 @@ Knowledge distillation (KD) has been proven to be a simple and effective tool fo

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

### Segmentation

|

||||

|

||||

@ -10,6 +10,7 @@ Knowledge distillation, in which a student model is trained to mimic a teacher m

|

||||

|

||||

<img width="836" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187424617-6259a7fc-b610-40ae-92eb-f21450dcbaa1.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

### Detection

|

||||

|

||||

@ -11,6 +11,7 @@ Comprehensive experiments verify that our approach is flexible and effective. It

|

||||

|

||||

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

### Supernet pre-training on ImageNet

|

||||

|

||||

@ -11,6 +11,8 @@ Notably, by setting optimized channel numbers, our AutoSlim-MobileNet-v2 at 305M

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

### Supernet pre-training on ImageNet

|

||||

|

||||

@ -1,5 +1,4 @@

|

||||

# Algorithm

|

||||

|

||||

## Introduction

|

||||

|

||||

### What is algorithm in MMRazor

|

||||

@ -19,7 +18,7 @@ And in MMRazor, `algorithm` is a general item for these technologies. For exampl

|

||||

|

||||

### About base algorithm

|

||||

|

||||

In the directory of ``` models/algorith``ms ```, all model compression algorithms are divided into 4 subdirectories: nas / pruning / distill / quantization. These algorithms must inherit from `BaseAlgorithm`, whose definition is as below.

|

||||

In the directory of `models/algorith``ms`, all model compression algorithms are divided into 4 subdirectories: nas / pruning / distill / quantization. These algorithms must inherit from `BaseAlgorithm`, whose definition is as below.

|

||||

|

||||

```Python

|

||||

from typing import Dict, List, Optional, Tuple, Union

|

||||

@ -91,6 +90,8 @@ batch inputs preprocess (see more information in `BaseDataPreprocessor` class of

|

||||

|

||||

`BaseAlgorithm`'s forward is just a wrapper of `BaseModel`'s forward. Sub-classes inherited from BaseAlgorithm only need to override the `loss` method, which implements the logic to calculate loss, thus various algorithms can be trained in the runner.
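The "override only `loss`" pattern described here can be sketched in plain Python. This is a minimal illustration, not MMRazor's actual `BaseAlgorithm`: the classes `ToyArchitecture`, `ToyBaseAlgorithm` and `ToyExtraLoss`, and the loss names, are hypothetical, and only the `mode`-based dispatch is modeled.

```python
class ToyArchitecture:
    """Hypothetical stand-in for the wrapped model (the architecture)."""

    def loss(self, inputs, data_samples=None):
        # A made-up loss: the mean of the inputs.
        return {'loss_cls': sum(inputs) / len(inputs)}

    def predict(self, inputs, data_samples=None):
        return [x > 0 for x in inputs]


class ToyBaseAlgorithm:
    """Hypothetical base class: forward only dispatches on ``mode``."""

    def __init__(self, architecture):
        self.architecture = architecture

    def forward(self, inputs, data_samples=None, mode='loss'):
        if mode == 'loss':
            return self.loss(inputs, data_samples)
        if mode == 'predict':
            return self.architecture.predict(inputs, data_samples)
        raise ValueError(f'Invalid mode: {mode}')

    def loss(self, inputs, data_samples=None):
        # Default behaviour: delegate to the architecture.
        return self.architecture.loss(inputs, data_samples)


class ToyExtraLoss(ToyBaseAlgorithm):
    """A subclass customizes training by overriding ``loss`` only."""

    def loss(self, inputs, data_samples=None):
        losses = super().loss(inputs, data_samples)
        losses['loss_extra'] = 0.5 * losses['loss_cls']
        return losses


algo = ToyExtraLoss(ToyArchitecture())
losses = algo.forward([1.0, 3.0], mode='loss')
```

Because `forward` is untouched in the subclass, whatever calls `forward(..., mode='loss')` keeps working with any algorithm that follows this layout.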

|

||||

|

||||

|

||||

|

||||

## How to use existing algorithms in MMRazor

|

||||

|

||||

1. Configure your architecture that will be slimmed

|

||||

@ -107,7 +108,7 @@ architecture = _base_.model

|

||||

|

||||

- Use your customized model as below, which is an example of defining a VGG model as our architecture.

|

||||

|

||||

> How to customize architectures can refer to our tutorial: [Customize Architectures](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_3_customize_architectures.html).

|

||||

> How to customize architectures can refer to our tutorial: [Customize Architectures](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_3_customize_architectures.html#).

|

||||

|

||||

```Python

|

||||

default_scope='mmcls'

|

||||

@ -228,7 +229,7 @@ class XXX(BaseAlgorithm):

|

||||

|

||||

4. Import the class

|

||||

|

||||

You can add the following line to ``` mmrazor/models/algorithms/``{subdirectory}/``__init__.py ```

|

||||

You can add the following line to `mmrazor/models/algorithms/``{subdirectory}/``__init__.py`

|

||||

|

||||

```CoffeeScript

|

||||

from .xxx import XXX

|

||||

@ -254,7 +255,7 @@ Please refer to our tutorials about how to customize different algorithms for mo

|

||||

|

||||

1. NAS

|

||||

|

||||

[Customize NAS algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_4_customize_nas_algorithms.html)

|

||||

[Customize NAS algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_4_customize_nas_algorithms.html#)

|

||||

|

||||

2. Pruning

|

||||

|

||||

|

||||

@ -1,12 +1,11 @@

|

||||

# Apply existing algorithms to new tasks

|

||||

|

||||

Here we show how to apply existing algorithms to other tasks with an example of [SPOS ](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/nas/mmcls/spos)& [DetNAS](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/nas/mmdet/detnas).

|

||||

|

||||

> SPOS: Single Path One-Shot NAS for classification

|

||||

>

|

||||

> DetNAS: Single Path One-Shot NAS for detection

|

||||

|

||||

**You just need to configure the existing algorithms in your config only by replacing** **the architecture of** **mmcls** **with** **mmdet**\*\*'s\*\*

|

||||

**You just need to configure the existing algorithms in your config only by replacing** **the architecture of** **mmcls** **with** **mmdet****'s**

|

||||

|

||||

You can implement a new algorithm by inheriting from the existing algorithm quickly if the new task's specificity leads to the failure of applying directly.

|

||||

|

||||

|

||||

@ -1,5 +1,4 @@

|

||||

# Customize Architectures

|

||||

|

||||

Different from other tasks, architectures in MMRazor may consist of some special model components, such as **searchable backbones, connectors, dynamic ops**. In MMRazor, you can not only develop some common model components like other codebases of OpenMMLab, but also develop some special model components. Here is how to develop searchable model components and common model components.

|

||||

|

||||

> Please refer to these documents as follows if you want to know about **connectors** and **dynamic ops**.

|

||||

|

||||

@ -1,5 +1,4 @@

|

||||

# Customize mixed algorithms

|

||||

|

||||

Here we show how to customize mixed algorithms with our algorithm components. We take [AutoSlim ](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/pruning/mmcls/autoslim)as an example.

|

||||

|

||||

> **Why is AutoSlim a mixed algorithm?**

|

||||

|

||||

@ -1,8 +1,7 @@

|

||||

# Delivery

|

||||

|

||||

## Introduction of Delivery

|

||||

|

||||

`Delivery` is a mechanism used in **knowledge distillation**\*\*,\*\* which is to **align the intermediate results** between the teacher model and the student model by delivering and rewriting these intermediate results between them. As shown in the figure below, deliveries can be used to:

|

||||

`Delivery` is a mechanism used in **knowledge distillation****,** which is to **align the intermediate results** between the teacher model and the student model by delivering and rewriting these intermediate results between them. As shown in the figure below, deliveries can be used to:

|

||||

|

||||

- **Deliver the output of a layer of the teacher model directly to a layer of the student model.** In some knowledge distillation algorithms, we may need to deliver the output of a layer of the teacher model to the student model directly. For example, in [LAD](https://arxiv.org/abs/2108.10520) algorithm, the student model needs to obtain the label assignment of the teacher model directly.

|

||||

- **Align the inputs of the teacher model and the student model.** For example, in the MMClassification framework, some widely used data augmentations such as [mixup](https://arxiv.org/abs/1710.09412) and [CutMix](https://arxiv.org/abs/1905.04899) are not implemented in Data Pipelines but in `forward_train`, and due to the randomness of these data augmentation methods, it may lead to a gap between the input of the teacher model and the student model.

|

||||

@ -13,23 +12,26 @@ In general, the delivery mechanism allows us to deliver intermediate results bet

|

||||

|

||||

## Usage of Delivery

|

||||

|

||||

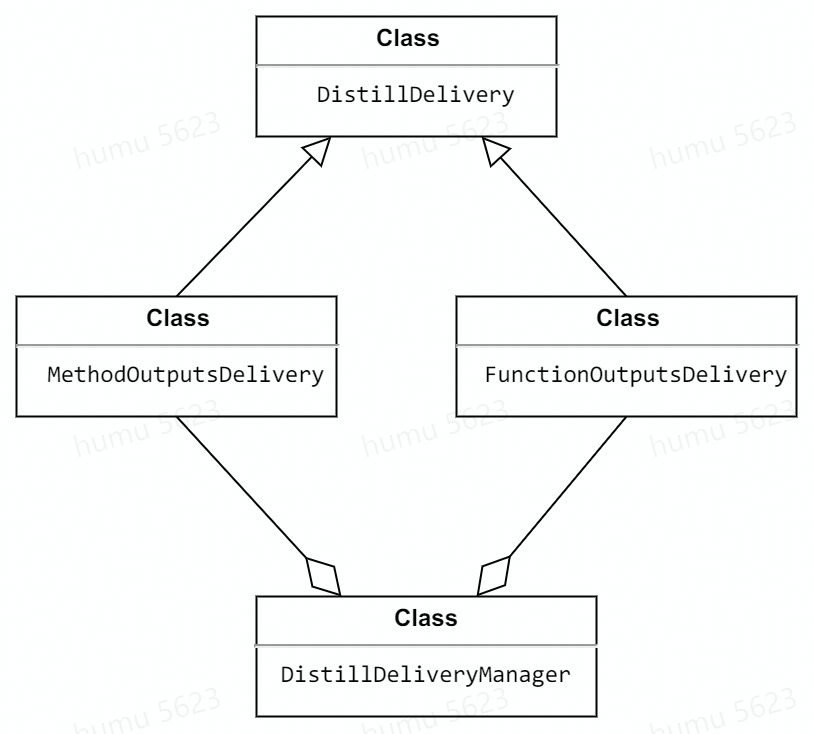

Currently, we support two deliveries: ``` FunctionOutputs``Delivery ``` and ``` MethodOutputs``Delivery ```, both of which inherit from `DistillDiliver`. And these deliveries can be managed by ``` Distill``Delivery``Manager ``` or just be used on their own.

|

||||

Currently, we support two deliveries: `FunctionOutputs``Delivery` and `MethodOutputs``Delivery`, both of which inherit from `DistillDiliver`. And these deliveries can be managed by `Distill``Delivery``Manager` or just be used on their own.

|

||||

|

||||

Their relationship is shown below.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### FunctionOutputsDelivery

|

||||

|

||||

``` FunctionOutputs``Delivery ``` is used to align the **function's** intermediate results between the teacher model and the student model.

|

||||

`FunctionOutputs``Delivery` is used to align the **function's** intermediate results between the teacher model and the student model.

|

||||

|

||||

> When initializing ``` FunctionOutputs``Delivery ```, you need to pass `func_path` argument, which requires extra attention. For example,

|

||||

> `anchor_inside_flags` is a function in mmdetection to check whether the

|

||||

> anchors are inside the border. This function is in

|

||||

> `mmdet/core/anchor/utils.py` and used in

|

||||

> `mmdet/models/dense_heads/anchor_head`. Then the `func_path` should be

|

||||

> `mmdet.models.dense_heads.anchor_head.anchor_inside_flags` but not

|

||||

> `mmdet.core.anchor.utils.anchor_inside_flags`.

|

||||

> When initializing `FunctionOutputs``Delivery`, you need to pass `func_path` argument, which requires extra attention. For example,

|

||||

`anchor_inside_flags` is a function in mmdetection to check whether the

|

||||

anchors are inside the border. This function is in

|

||||

`mmdet/core/anchor/utils.py` and used in

|

||||

`mmdet/models/dense_heads/anchor_head`. Then the `func_path` should be

|

||||

`mmdet.models.dense_heads.anchor_head.anchor_inside_flags` but not

|

||||

`mmdet.core.anchor.utils.anchor_inside_flags`.
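Why the path of the *using* module matters can be shown with a small, self-contained sketch. It assumes the delivery works by rebinding the function name at the given import path (a common monkey-patching approach; the internals are not shown in this diff). The modules `toy_utils` and `toy_head` are hypothetical stand-ins for `mmdet.core.anchor.utils` and `mmdet.models.dense_heads.anchor_head`.

```python
import sys
import types

# Module that defines the function.
toy_utils = types.ModuleType('toy_utils')
exec('def inside_flags():\n    return "original"', toy_utils.__dict__)
sys.modules['toy_utils'] = toy_utils

# Module that imports the function by name and uses it.
toy_head = types.ModuleType('toy_head')
exec(
    'from toy_utils import inside_flags\n'
    'def call():\n'
    '    return inside_flags()',
    toy_head.__dict__)
sys.modules['toy_head'] = toy_head


def replacement():
    return 'patched'


# Rebinding the name where the function is defined has no effect on the
# module that already imported it by name.
toy_utils.inside_flags = replacement
result_after_patching_definition = toy_head.call()

# Rebinding the name where the function is used does take effect.
toy_head.inside_flags = replacement
result_after_patching_usage = toy_head.call()
```

`from ... import name` copies the binding into the importing module, so the name to replace is the one in the importing module's namespace.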

|

||||

|

||||

#### Case 1: Delivery single function's output from the teacher to the student.

|

||||

|

||||

@ -94,9 +96,11 @@ Out:

|

||||

True

|

||||

```

|

||||

|

||||

|

||||

|

||||

### MethodOutputsDelivery

|

||||

|

||||

``` MethodOutputs``Delivery ``` is used to align the **method's** intermediate results between the teacher model and the student model.

|

||||

`MethodOutputs``Delivery` is used to align the **method's** intermediate results between the teacher model and the student model.

|

||||

|

||||

#### Case: **Align the inputs of the teacher model and the student model**

|

||||

|

||||

@ -160,11 +164,13 @@ True

|

||||

|

||||

The randomness is eliminated by using `MethodOutputsDelivery`.
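The record-then-replay idea behind this result can be sketched without MMRazor. The class `ToyOutputsDelivery` below is hypothetical and much simpler than the real `MethodOutputsDelivery`; it only assumes that outputs are recorded while `override_data` is `False` and replayed while it is `True`.

```python
import random
from collections import deque


class ToyOutputsDelivery:
    """Hypothetical sketch of a method-output delivery."""

    def __init__(self, obj, method_name, max_keep_data=1):
        self._obj = obj
        self._name = method_name
        self._origin = getattr(obj, method_name)  # bound method
        self._queue = deque(maxlen=max_keep_data)
        self.override_data = False

    def __enter__(self):
        def wrapper(*args, **kwargs):
            if self.override_data:
                # Student pass: replay what the teacher produced.
                return self._queue.popleft()
            # Teacher pass: call the real method and record its output.
            output = self._origin(*args, **kwargs)
            self._queue.append(output)
            return output

        # Shadow the method on the instance while the context is active.
        setattr(self._obj, self._name, wrapper)
        return self

    def __exit__(self, exc_type, exc, tb):
        # Remove the shadowing attribute so the class method is used again.
        delattr(self._obj, self._name)
        return False


class Toy:
    def random_int(self):
        return random.randint(0, 1000000)


toy = Toy()
delivery = ToyOutputsDelivery(toy, 'random_int')

delivery.override_data = False  # teacher pass: record
with delivery:
    teacher_out = toy.random_int()

delivery.override_data = True  # student pass: replay
with delivery:
    student_out = toy.random_int()
```

The student receives exactly the value the teacher saw, regardless of the random seed.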

|

||||

|

||||

|

||||

|

||||

### 2.3 DistillDeliveryManager

|

||||

|

||||

``` Distill``Delivery``Manager ``` is actually a context manager, used to manage delivers. When entering the ``` Distill``Delivery``Manager ```, all delivers managed will be started.

|

||||

`Distill``Delivery``Manager` is actually a context manager, used to manage delivers. When entering the `Distill``Delivery``Manager`, all delivers managed will be started.

|

||||

|

||||

With the help of ``` Distill``Delivery``Manager ```, we are able to manage several different DistillDeliveries with as little code as possible, thereby reducing the possibility of errors.

|

||||

With the help of `Distill``Delivery``Manager`, we are able to manage several different DistillDeliveries with as little code as possible, thereby reducing the possibility of errors.
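As a rough illustration of a manager that starts every delivery on entry, here is a sketch built on `contextlib.ExitStack`. `ToyDelivery` and `ToyDeliveryManager` are hypothetical names, not the MMRazor classes, and the sketch models only the enter/exit behaviour.

```python
from contextlib import ExitStack


class ToyDelivery:
    """Hypothetical delivery that only tracks whether it is active."""

    def __init__(self, name):
        self.name = name
        self.active = False

    def __enter__(self):
        self.active = True
        return self

    def __exit__(self, exc_type, exc, tb):
        self.active = False
        return False


class ToyDeliveryManager:
    """Hypothetical manager: entering it enters every managed delivery."""

    def __init__(self, deliveries):
        self.deliveries = dict(deliveries)
        self._stack = None

    def __enter__(self):
        self._stack = ExitStack()
        for delivery in self.deliveries.values():
            self._stack.enter_context(delivery)
        return self

    def __exit__(self, exc_type, exc, tb):
        # Exits the deliveries in reverse order of entry.
        self._stack.close()
        return False


manager = ToyDeliveryManager({
    'flags': ToyDelivery('flags'),
    'mixup': ToyDelivery('mixup'),
})

with manager:
    active_inside = [d.active for d in manager.deliveries.values()]
active_after = [d.active for d in manager.deliveries.values()]
```

A single `with manager:` replaces one nested `with` statement per delivery, which is where the reduction in boilerplate comes from.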

|

||||

|

||||

#### Case: Manager deliveries with DistillDeliveryManager

|

||||

|

||||

@ -206,8 +212,8 @@ True

|

||||

|

||||

## Reference

|

||||

|

||||

\[1\] Zhang, Hongyi, et al. "mixup: Beyond empirical risk minimization." *arXiv* abs/1710.09412 (2017).

|

||||

[1] Zhang, Hongyi, et al. "mixup: Beyond empirical risk minimization." *arXiv* abs/1710.09412 (2017).

|

||||

|

||||

\[2\] Yun, Sangdoo, et al. "Cutmix: Regularization strategy to train strong classifiers with localizable features." *ICCV* (2019).

|

||||

[2] Yun, Sangdoo, et al. "Cutmix: Regularization strategy to train strong classifiers with localizable features." *ICCV* (2019).

|

||||

|

||||

\[3\] Nguyen, Chuong H., et al. "Improving object detection by label assignment distillation." *WACV* (2022).

|

||||

[3] Nguyen, Chuong H., et al. "Improving object detection by label assignment distillation." *WACV* (2022).

|

||||

@ -1,5 +1,4 @@

|

||||

# Mutable

|

||||

|

||||

## Introduction

|

||||

|

||||

### What is Mutable

|

||||

@ -10,6 +9,7 @@ To understand it better, we take the mutable module as an example to explain as

|

||||

|

||||

|

||||

|

||||

|

||||

As shown in the figure above, `Mutable` is a container that holds some candidate operations, thus it can sample candidates to constitute the subnet. `Supernet` usually consists of multiple `Mutable`, therefore, `Supernet` will be searchable with the help of `Mutable`. And all candidate operations in `Mutable` constitute the search space of `SuperNet`.
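The container idea can be sketched in a few lines of plain Python. `ToyMutable` is a hypothetical class, not one of MMRazor's mutables; it assumes that a mutable holds named candidate operations and forwards through the currently chosen one.

```python
import random


class ToyMutable:
    """Hypothetical container of candidate operations."""

    def __init__(self, candidates):
        self.candidates = dict(candidates)
        self.current_choice = None

    @property
    def choices(self):
        return list(self.candidates)

    def sample_choice(self, rng=random):
        return rng.choice(self.choices)

    def forward(self, x):
        return self.candidates[self.current_choice](x)


# A toy "supernet" made of two mutables.
stem = ToyMutable({'double': lambda x: 2 * x, 'inc': lambda x: x + 1})
head = ToyMutable({'square': lambda x: x * x, 'neg': lambda x: -x})
supernet = [stem, head]

# The search space is the product of the candidates of all mutables.
search_space_size = 1
for mutable in supernet:
    search_space_size *= len(mutable.choices)

# Sampling one candidate per mutable yields a subnet.
for mutable in supernet:
    mutable.current_choice = mutable.sample_choice()

# Fix the choices explicitly to get a reproducible subnet.
stem.current_choice = 'double'
head.current_choice = 'square'


def run_subnet(x):
    for mutable in supernet:
        x = mutable.forward(x)
    return x


out = run_subnet(3)
```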

|

||||

|

||||

> If you want to know more about the relationship between Mutable and Mutator, please refer to [Mutator 用户文档](https://aicarrier.feishu.cn/docx/doxcnmcie75HcbqkfBGaEoemBKg)

|

||||

@ -49,7 +49,6 @@ As shown in the figure above.

|

||||

- **White blocks** stand the basic classes, which include `BaseMutable` and `DerivedMethodMixin`. `BaseMutable` is the base class for all mutables, which defines required properties and abstracmethods. `DerivedMethodMixin` is a mixin class to provide mutable parameters with some useful methods to derive mutable.

|

||||

|

||||

- **Gray blocks** stand different types of base mutables.

|

||||

|

||||

> Because there are correlations between channels of some layers, we divide mutable parameters into `MutableChannel` and `MutableValue`, so you can also think `MutableChannel` is a special `MutableValue`.

|

||||

|

||||

For supporting module and parameters mutable, we provide `MutableModule`, `MutableChannel` and `MutableValue` these base classes to implement required basic functions. And we also add `OneshotMutableModule` and `DiffMutableModule` two types based on `MutableModule` to meet different types of algorithms' requirements.

|

||||

@ -221,7 +220,7 @@ Let's use `OneShotMutableOP` as an example for customizing mutable.

|

||||

|

||||

First, you need to determine which type mutable to implement. Thus, you can implement your mutable faster by inheriting from correlative base mutable.

|

||||

|

||||

Then create a new file ``` mmrazor/models/mutables/mutable_module/``one_shot_mutable_module ```, class `OneShotMutableOP` inherits from `OneShotMutableModule`.

|

||||

Then create a new file `mmrazor/models/mutables/mutable_module/``one_shot_mutable_module`, class `OneShotMutableOP` inherits from `OneShotMutableModule`.

|

||||

|

||||

```Python

|

||||

# Copyright (c) OpenMMLab. All rights reserved.

|

||||

|

||||

@ -1,5 +1,6 @@

|

||||

# Mutator

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

### What is Mutator

|

||||

@ -10,6 +11,7 @@

|

||||

|

||||

|

||||

|

||||

|

||||

In a word, Mutator is the manager of Mutable. Each different type of mutable is commonly managed by their one correlative mutator, respectively.

|

||||

|

||||

As shown in the figure, Mutable is a component of supernet, therefore Mutator can implement some functions about subnet from supernet by handling Mutable.

|

||||

@ -20,6 +22,7 @@ In MMRazor, we have implemented some mutators, their relationship is as below.

|

||||

|

||||

|

||||

|

||||

|

||||

`BaseMutator`: Base class for all mutators. It has appointed some abstract methods supported by all mutators.

|

||||

|

||||

`ModuleMuator`/ `ChannelMutator`: Two different types mutators are for handling mutable module and mutable channel respectively.

|

||||

@ -52,11 +55,9 @@ If existing mutators do not meet your needs, you can also customize your needed

|

||||

All mutators need to implement at least two of the following interfaces

|

||||

|

||||

- `prepare_from_supernet()`

|

||||

|

||||

- Make some necessary preparations according to the given supernet. These preparations may include, but are not limited to, grouping the search space, and initializing mutator with the parameters needed for itself.

|

||||

|

||||

- `search_groups`

|

||||

|

||||

- Group of search space.

|

||||

|

||||

- Note that **search groups** and **search space** are two different concepts. The latter defines what choices can be used for searching. The former groups the search space, and searchable blocks that are grouped into the same group will share the same search space and the same sample result.
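The difference between search space and search groups can be illustrated with a small sketch. It assumes, hypothetically, that mutables are grouped by a shared alias; the helpers `build_search_groups` and `sample_choices` are illustrative and not part of MMRazor.

```python
import random
from collections import defaultdict


def build_search_groups(mutables):
    """Group (name, alias, choices) triples; no alias means its own group."""
    by_key = defaultdict(list)
    for name, alias, choices in mutables:
        key = alias if alias is not None else name
        by_key[key].append((name, choices))
    return {gid: members for gid, members in enumerate(by_key.values())}


def sample_choices(search_groups, rng):
    """Sample once per group; every member gets the same result."""
    result = {}
    for members in search_groups.values():
        shared_space = members[0][1]  # members share one search space
        choice = rng.choice(shared_space)
        for name, _ in members:
            result[name] = choice
    return result


mutables = [
    ('op1', 'block_a', ['conv3', 'conv5']),
    ('op2', 'block_a', ['conv3', 'conv5']),
    ('op3', None, ['conv3', 'conv5', 'conv7']),
]

search_groups = build_search_groups(mutables)
sampled = sample_choices(search_groups, random.Random(0))
```

Here the search space is the list of choices per mutable, while the search groups decide which mutables are sampled together.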

|

||||

@ -97,7 +98,6 @@ class OneShotModuleMutator(ModuleMutator):

|

||||

```

|

||||

|

||||

### 2. Implement abstract methods

|

||||

|

||||

2.1. Rewrite the `mutable_class_type` property

|

||||

|

||||

```Python

|

||||

@ -119,7 +119,7 @@ As the `prepare_from_supernet()` method and the `search_groups` property are alr

|

||||

|

||||

If you need to implement them by yourself, you can refer to these as follows.

|

||||

|

||||

2.3. **Understand** **`search_groups`\*\*\*\*(optional)**

|

||||

2.3. **Understand** **`search_groups`****(optional)**

|

||||

|

||||

Let's take an example to see what default `search_groups` do.

|

||||

|

||||

|

||||

@ -1,5 +1,6 @@

|

||||

# Recorder

|

||||

|

||||

|

||||

## Introduction of Recorder

|

||||

|

||||

`Recorder` is a context manager used to record various intermediate results during the model forward. It can help `Delivery` finish data delivering by recording source data in some distillation algorithms. And it can also be used to obtain some specific data for visual analysis or other functions you want.
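A recorder of this kind can be sketched as a context manager that wraps a function while it is active. `ToyFunctionOutputsRecorder` and `toy_module` are hypothetical; the real recorders take an import path string and have a richer interface.

```python
import random
import types

# A throwaway module holding the function whose outputs we want to record.
toy_module = types.ModuleType('toy_module')


def toy_func():
    return random.randint(0, 1000000)


toy_module.toy_func = toy_func


class ToyFunctionOutputsRecorder:
    """Hypothetical recorder: stores outputs produced inside the context."""

    def __init__(self, module, func_name):
        self._module = module
        self._name = func_name
        self._origin = None
        self.data_buffer = []

    def __enter__(self):
        self._origin = getattr(self._module, self._name)

        def wrapper(*args, **kwargs):
            output = self._origin(*args, **kwargs)
            self.data_buffer.append(output)
            return output

        setattr(self._module, self._name, wrapper)
        return self

    def __exit__(self, exc_type, exc, tb):
        # Restore the original function so nothing is recorded afterwards.
        setattr(self._module, self._name, self._origin)
        return False


recorder = ToyFunctionOutputsRecorder(toy_module, 'toy_func')
with recorder:
    first = toy_module.toy_func()
    second = toy_module.toy_func()
outside = toy_module.toy_func()  # not recorded
```

The recorded values in `data_buffer` are the "source data" that a delivery, a distillation loss, or a visualization step could consume later.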

|

||||

@ -11,7 +12,6 @@ In general, `Recorder` will help us expand more functions in implementing algori

|

||||

## Usage of Recorder

|

||||

|

||||

Currently, we support five `Recorder`, as shown in the following table

|

||||

|

||||

| FunctionOutputsRecorder | Record output results of some functions |

|

||||

| ----------------------- | ------------------------------------------- |

|

||||

| MethodOutputsRecorder | Record output results of some methods |

|

||||

@ -25,17 +25,20 @@ Their relationship is shown below.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### FunctionOutputsRecorder

|

||||

|

||||

`FunctionOutputsRecorder` is used to record the output results of intermediate **function**.

|

||||

|

||||

> When instantiating `FunctionOutputsRecorder`, you need to pass `source` argument, which requires extra attention. For example,

|

||||

> `anchor_inside_flags` is a function in mmdetection to check whether the

|

||||

> anchors are inside the border. This function is in

|

||||

> `mmdet/core/anchor/utils.py` and used in

|

||||

> `mmdet/models/dense_heads/anchor_head`. Then the `source` argument should be

|

||||

> `mmdet.models.dense_heads.anchor_head.anchor_inside_flags` but not

|

||||

> `mmdet.core.anchor.utils.anchor_inside_flags`.

|

||||

`anchor_inside_flags` is a function in mmdetection to check whether the

|

||||

anchors are inside the border. This function is in

|

||||

`mmdet/core/anchor/utils.py` and used in

|

||||

`mmdet/models/dense_heads/anchor_head`. Then the `source` argument should be

|

||||

`mmdet.models.dense_heads.anchor_head.anchor_inside_flags` but not

|

||||

`mmdet.core.anchor.utils.anchor_inside_flags`.

|

||||

|

||||

#### Example

|

||||

|

||||

@ -49,7 +52,7 @@ from mmrazor.structures import FunctionOutputsRecorder

|

||||

def toy_func() -> int:

|

||||

return random.randint(0, 1000000)

|

||||

|

||||

# instantiate with specifying used path

|

||||

# instantiate with specifing used path

|

||||

r1 = FunctionOutputsRecorder('toy_module.toy_func')

|

||||

|

||||

# initialize is to make specified module can be recorded by

|

||||

@ -95,6 +98,8 @@ Out:

|

||||

119729

|

||||

```

|

||||

|

||||

|

||||

|

||||

### MethodOutputsRecorder

|

||||

|

||||

`MethodOutputsRecorder` is used to record the output results of intermediate **method**.

|

||||

@ -113,7 +118,7 @@ class Toy():

|

||||

|

||||

toy = Toy()

|

||||

|

||||

# instantiate with specifying used path

|

||||

# instantiate with specifing used path

|

||||

r1 = MethodOutputsRecorder('toy_module.Toy.toy_func')

|

||||

# initialize is to make specified module can be recorded by

|

||||

# registering customized forward hook.

|

||||

@ -186,7 +191,7 @@ class ToyModel(nn.Module):

|

||||

return self.conv2(x1 + x2)

|

||||

|

||||

model = ToyModel()

|

||||

# instantiate with specifying module name.

|

||||

# instantiate with specifing module name.

|

||||

r1 = ModuleOutputsRecorder('conv1')

|

||||

|

||||

# initialize is to make specified module can be recorded by

|

||||

@ -222,7 +227,7 @@ True

|

||||

|

||||

### ParameterRecorder

|

||||

|

||||

`ParameterRecorder` is used to record the intermediate parameter of ``` nn.``Module ```. Its usage is similar to `ModuleOutputsRecorder`'s and `ModuleInputsRecorder`'s, but it instantiates with parameter name instead of module name.

|

||||

`ParameterRecorder` is used to record the intermediate parameter of `nn.``Module`. Its usage is similar to `ModuleOutputsRecorder`'s and `ModuleInputsRecorder`'s, but it instantiates with parameter name instead of module name.

|

||||

|

||||

#### Example

|

||||

|

||||

@ -242,7 +247,7 @@ class ToyModel(nn.Module):

|

||||

return self.toy_conv(x)

|

||||

|

||||

model = ToyModel()

|

||||

# instantiate with specifying parameter name.

|

||||

# instantiate with specifing parameter name.

|

||||

r1 = ParameterRecorder('toy_conv.weight')

|

||||

# initialize is to make specified module can be recorded by

|

||||

# registering customized forward hook.

|

||||

|

||||

@ -1,5 +1,4 @@

|

||||

# Overview

|

||||

|

||||

## Why MMRazor

|

||||

|

||||

MMRazor is a model compression toolkit for model slimming, which includes 4 mainstream technologies:

|

||||

@ -25,15 +24,16 @@ Different algorithms, e.g., NAS, pruning and KD, can be incorporated in a plug-n

|

||||

|

||||

With better modular design, developers can implement new model compression algorithms with only a few codes, or even by simply modifying config files.

|

||||

|

||||

## Design and Implement

|

||||

|

||||

|

||||

## Design and Implement

|

||||

|

||||

|

||||

### Design

|

||||

|

||||

There are 3 layers (**Application** / **Algorithm** / **Component**) in overview design. MMRazor mainly includes both of **Component** and **Algorithm**, while **Application** consist of some OpenMMLab upstream repos, such as MMClassification, MMDetection, MMSegmentation and so on.

|

||||

|

||||

**Component** provides many useful functions for quickly implementing **Algorithm.** And thanks to OpenMMLab 's powerful and highly flexible config mode and registry mechanism\*\*, Algorithm\*\* can be conveniently applied to **Application.**

|

||||

**Component** provides many useful functions for quickly implementing **Algorithm.** And thanks to OpenMMLab 's powerful and highly flexible config mode and registry mechanism**, Algorithm** can be conveniently applied to **Application.**

|

||||

|

||||

How to apply our lightweight algorithms to some upstream tasks? Please refer to the below.

|

||||

|

||||

@ -45,7 +45,7 @@ In OpenMMLab, implementing vision tasks commonly includes 3 parts (model / datas

|

||||

|

||||

`Architecture` is similar to `model` of the upstream repos. You can chose to directly use the original `model` or customize the new `model` as your architecture according to different tasks. For example, you can directly use ResNet-34 and ResNet-18 of MMClassification to implement some KD algorithms, but in NAS, you may need to customize a searchable model.

|

||||

|

||||

``` Compone``n``ts ``` consist of various special functions for supporting different lightweight algorithms. They can be directly used in config because of registered into MMEngine. Thus, you can pick some components you need to quickly implement your algorithm. For example, you may need `mutator` / `mutable` / `searchle backbone` if you want to implement a NAS algorithm, and you can pick from `distill loss` / `recorder` / `delivery` / `connector` if you need a KD algorithm.

|

||||

`Compone``n``ts` consist of various special functions for supporting different lightweight algorithms. They can be directly used in config because of registered into MMEngine. Thus, you can pick some components you need to quickly implement your algorithm. For example, you may need `mutator` / `mutable` / `searchle backbone` if you want to implement a NAS algorithm, and you can pick from `distill loss` / `recorder` / `delivery` / `connector` if you need a KD algorithm.

|

||||

|

||||

Please refer to the next section for more details about **Implement**.

|

||||

|

||||

@ -87,7 +87,7 @@ We provide the following general tutorials according to some typical requirement

|

||||

|

||||

**Tutorial list**

|

||||

|

||||

- [Tutorial 1: Overview](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_1_overview.html)

|

||||

- [Tutorial 1: Overview](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_1_overview.html#)

|

||||

- [Tutorial 2: Learn about Configs](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_2_learn_about_configs.html)

|

||||

- [Toturial 3: Customize Architectures](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_3_customize_architectures.html)

|

||||

- [Toturial 4: Customize NAS algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_4_customize_nas_algorithms.html)

|

||||

@ -96,10 +96,13 @@ We provide the following general tutorials according to some typical requirement

|

||||

- [Tutorial 7: Customize mixed algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_7_customize_mixed_algorithms_with_out_algorithms_components.html)

|

||||

- [Tutorial 8: Apply existing algorithms to new tasks](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_8_apply_existing_algorithms_to_new_tasks.html)

|

||||

|

||||

|

||||

|

||||

## F&Q

|

||||

|

||||

If you encounter some trouble using MMRazor, you can find whether your question has existed in **F&Q(to add link)**. If not existed, welcome to open a [Github issue](https://github.com/open-mmlab/mmrazor/issues) for getting support, we will reply it as soon.

|

||||

|

||||

|

||||

## Get support and contribute back

|

||||

|

||||

MMRazor is maintained on the [MMRazor Github repository](https://github.com/open-mmlab/mmrazor). We collect feedback and new proposals/ideas on Github. You can:

|

||||

|

||||

@ -2,6 +2,7 @@

|

||||

|

||||

## Directory structure of configs in mmrazor

|

||||

|

||||

## More about config

|

||||

|

||||

|

||||

## More about config

|

||||

Please refer to config.md in mmengine.

|

||||
