[Docs] Add docs and update algo README (#259)

* docs v0.1 * update picture links in algo READMEpull/263/head

parent

f45e2bdca3

commit

ce22497b25

|

|

@ -8,7 +8,8 @@

|

|||

|

||||

An activation boundary for a neuron refers to a separating hyperplane that determines whether the neuron is activated or deactivated. It has been long considered in neural networks that the activations of neurons, rather than their exact output values, play the most important role in forming classification friendly partitions of the hidden feature space. However, as far as we know, this aspect of neural networks has not been considered in the literature of knowledge transfer. In this pa- per, we propose a knowledge transfer method via distillation of activation boundaries formed by hidden neurons. For the distillation, we propose an activation transfer loss that has the minimum value when the boundaries generated by the stu- dent coincide with those by the teacher. Since the activation transfer loss is not differentiable, we design a piecewise differentiable loss approximating the activation transfer loss. By the proposed method, the student learns a separating bound- ary between activation region and deactivation region formed by each neuron in the teacher. Through the experiments in various aspects of knowledge transfer, it is verified that the proposed method outperforms the current state-of-the-art [link](https://github.com/bhheo/AB_distillation)

|

||||

|

||||

|

||||

<img width="1184" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187422794-d681ed58-293a-4d9e-9e5b-9937289136a7.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -6,7 +6,8 @@ Convolutional neural networks have been widely deployed in various application s

|

|||

|

||||

## Pipeline

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,8 @@

|

|||

|

||||

Learning portable neural networks is very essential for computer vision for the purpose that pre-trained heavy deep models can be well applied on edge devices such as mobile phones and micro sensors. Most existing deep neural network compression and speed-up methods are very effective for training compact deep models, when we can directly access the training dataset. However, training data for the given deep network are often unavailable due to some practice problems (e.g. privacy, legal issue, and transmission), and the architecture of the given network are also unknown except some interfaces. To this end, we propose a novel framework for training efficient deep neural networks by exploiting generative adversarial networks (GANs). To be specific, the pre-trained teacher networks are regarded as a fixed discriminator and the generator is utilized for deviating training samples which can obtain the maximum response on the discriminator. Then, an efficient network with smaller model size and computational complexity is trained using the generated data and the teacher network, simultaneously. Efficient student networks learned using the pro- posed Data-Free Learning (DAFL) method achieve 92.22% and 74.47% accuracies using ResNet-18 without any training data on the CIFAR-10 and CIFAR-100 datasets, respectively. Meanwhile, our student network obtains an 80.56% accuracy on the CelebA benchmark.

|

||||

|

||||

|

||||

<img width="910" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187423163-b34896fc-8516-403b-acd7-4c0b8e43af5b.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@

|

|||

|

||||

Knowledge Distillation (KD) has made remarkable progress in the last few years and become a popular paradigm for model compression and knowledge transfer. However, almost all existing KD algorithms are data-driven, i.e., relying on a large amount of original training data or alternative data, which is usually unavailable in real-world scenarios. In this paper, we devote ourselves to this challenging problem and propose a novel adversarial distillation mechanism to craft a compact student model without any real-world data. We introduce a model discrepancy to quantificationally measure the difference between student and teacher models and construct an optimizable upper bound. In our work, the student and the teacher jointly act the role of the discriminator to reduce this discrepancy, when a generator adversarially produces some "hard samples" to enlarge it. Extensive experiments demonstrate that the proposed data-free method yields comparable performance to existing data-driven methods. More strikingly, our approach can be directly extended to semantic segmentation, which is more complicated than classification, and our approach achieves state-of-the-art results.

|

||||

|

||||

|

||||

<img width="1001" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187423332-30a5d409-6f83-45d7-9e11-e306f7ffec78.png">

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@

|

|||

|

||||

State-of-the-art distillation methods are mainly based on distilling deep features from intermediate layers, while the significance of logit distillation is greatly overlooked. To provide a novel viewpoint to study logit distillation, we reformulate the classical KD loss into two parts, i.e., target class knowledge distillation (TCKD) and non-target class knowledge distillation (NCKD). We empirically investigate and prove the effects of the two parts: TCKD transfers knowledge concerning the "difficulty" of training samples, while NCKD is the prominent reason why logit distillation works. More importantly, we reveal that the classical KD loss is a coupled formulation, which (1) suppresses the effectiveness of NCKD and (2) limits the flexibility to balance these two parts. To address these issues, we present Decoupled Knowledge Distillation (DKD), enabling TCKD and NCKD to play their roles more efficiently and flexibly. Compared with complex feature-based methods, our DKD achieves comparable or even better results and has better training efficiency on CIFAR-100, ImageNet, and MS-COCO datasets for image classification and object detection tasks. This paper proves the great potential of logit distillation, and we hope it will be helpful for future research. The code is available at https://github.com/megvii-research/mdistiller.

|

||||

|

||||

|

||||

<img width="921" alt="dkd" src="https://user-images.githubusercontent.com/88702197/187423438-c9eadb93-826f-471c-9553-bdae2e434541.png">

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -20,7 +20,8 @@ allows one to train deeper students that can generalize better or run faster, a

|

|||

controlled by the chosen student capacity. For example, on CIFAR-10, a deep student network with

|

||||

almost 10.4 times less parameters outperforms a larger, state-of-the-art teacher network.

|

||||

|

||||

|

||||

<img width="743" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187423686-68719140-a978-4a19-a684-42b1d793d1fb.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@

|

|||

|

||||

A very simple way to improve the performance of almost any machine learning algorithm is to train many different models on the same data and then to average their predictions. Unfortunately, making predictions using a whole ensemble of models is cumbersome and may be too computationally expensive to allow deployment to a large number of users, especially if the individual models are large neural nets. Caruana and his collaborators have shown that it is possible to compress the knowledge in an ensemble into a single model which is much easier to deploy and we develop this approach further using a different compression technique. We achieve some surprising results on MNIST and we show that we can significantly improve the acoustic model of a heavily used commercial system by distilling the knowledge in an ensemble of models into a single model. We also introduce a new type of ensemble composed of one or more full models and many specialist models which learn to distinguish fine-grained classes that the full models confuse. Unlike a mixture of experts, these specialist models can be trained rapidly and in parallel.

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,11 +8,11 @@ We investigate the design aspects of feature distillation methods achieving netw

|

|||

|

||||

### Feature-based Distillation

|

||||

|

||||

|

||||

|

||||

|

||||

### Margin ReLU

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -20,7 +20,7 @@ proposed method improves educated student models with a significant margin.

|

|||

In particular for metric learning, it allows students to outperform their

|

||||

teachers' performance, achieving the state of the arts on standard benchmark datasets.

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -21,7 +21,7 @@ empirically find that completely filtering out regularization samples also deter

|

|||

weighted soft labels to help the network adaptively handle the sample-wise biasvariance tradeoff. Experiments on standard evaluation benchmarks validate the

|

||||

effectiveness of our method.

|

||||

|

||||

|

||||

<img width="1032" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187424195-a3ea3d72-5ee7-4ffc-b562-65677076c18e.png">

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -10,11 +10,11 @@ Performing knowledge transfer from a large teacher network to a smaller student

|

|||

|

||||

## The teacher and student decision boundaries

|

||||

|

||||

|

||||

<img width="766" alt="distribution" src="https://user-images.githubusercontent.com/88702197/187424317-9f3c5547-a838-4858-b63e-608eee8165f5.png">

|

||||

|

||||

## Pseudo images sampled from the generator

|

||||

|

||||

|

||||

<img width="1176" alt="synthesis" src="https://user-images.githubusercontent.com/88702197/187424322-79be0b07-66b5-4775-8e23-6c2ddca0ad0f.png">

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,8 @@

|

|||

|

||||

Knowledge distillation (KD) has been proven to be a simple and effective tool for training compact models. Almost all KD variants for dense prediction tasks align the student and teacher networks' feature maps in the spatial domain, typically by minimizing point-wise and/or pair-wise discrepancy. Observing that in semantic segmentation, some layers' feature activations of each channel tend to encode saliency of scene categories (analogue to class activation mapping), we propose to align features channel-wise between the student and teacher networks. To this end, we first transform the feature map of each channel into a probability map using softmax normalization, and then minimize the Kullback-Leibler (KL) divergence of the corresponding channels of the two networks. By doing so, our method focuses on mimicking the soft distributions of channels between networks. In particular, the KL divergence enables learning to pay more attention to the most salient regions of the channel-wise maps, presumably corresponding to the most useful signals for semantic segmentation. Experiments demonstrate that our channel-wise distillation outperforms almost all existing spatial distillation methods for semantic segmentation considerably, and requires less computational cost during training. We consistently achieve superior performance on three benchmarks with various network structures.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,8 @@

|

|||

|

||||

Knowledge distillation, in which a student model is trained to mimic a teacher model, has been proved as an effective technique for model compression and model accuracy boosting. However, most knowledge distillation methods, designed for image classification, have failed on more challenging tasks, such as object detection. In this paper, we suggest that the failure of knowledge distillation on object detection is mainly caused by two reasons: (1) the imbalance between pixels of foreground and background and (2) lack of distillation on the relation between different pixels. Observing the above reasons, we propose attention-guided distillation and non-local distillation to address the two problems, respectively. Attention-guided distillation is proposed to find the crucial pixels of foreground objects with attention mechanism and then make the students take more effort to learn their features. Non-local distillation is proposed to enable students to learn not only the feature of an individual pixel but also the relation between different pixels captured by non-local modules. Experiments show that our methods achieve excellent AP improvements on both one-stage and two-stage, both anchor-based and anchor-free detectors. For example, Faster RCNN (ResNet101 backbone) with our distillation achieves 43.9 AP on COCO2017, which is 4.1 higher than the baseline.

|

||||

|

||||

|

||||

<img width="836" alt="pipeline" src="https://user-images.githubusercontent.com/88702197/187424617-6259a7fc-b610-40ae-92eb-f21450dcbaa1.png">

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@

|

|||

|

||||

Knowledge distillation (KD) has been proven to be a simple and effective tool for training compact models. Almost all KD variants for dense prediction tasks align the student and teacher networks' feature maps in the spatial domain, typically by minimizing point-wise and/or pair-wise discrepancy. Observing that in semantic segmentation, some layers' feature activations of each channel tend to encode saliency of scene categories (analogue to class activation mapping), we propose to align features channel-wise between the student and teacher networks. To this end, we first transform the feature map of each channel into a probability map using softmax normalization, and then minimize the Kullback-Leibler (KL) divergence of the corresponding channels of the two networks. By doing so, our method focuses on mimicking the soft distributions of channels between networks. In particular, the KL divergence enables learning to pay more attention to the most salient regions of the channel-wise maps, presumably corresponding to the most useful signals for semantic segmentation. Experiments demonstrate that our channel-wise distillation outperforms almost all existing spatial distillation methods for semantic segmentation considerably, and requires less computational cost during training. We consistently achieve superior performance on three benchmarks with various network structures.

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@

|

|||

|

||||

This paper addresses the scalability challenge of architecture search by formulating the task in a differentiable manner. Unlike conventional approaches of applying evolution or reinforcement learning over a discrete and non-differentiable search space, our method is based on the continuous relaxation of the architecture representation, allowing efficient search of the architecture using gradient descent. Extensive experiments on CIFAR-10, ImageNet, Penn Treebank and WikiText-2 show that our algorithm excels in discovering high-performance convolutional architectures for image classification and recurrent architectures for language modeling, while being orders of magnitude faster than state-of-the-art non-differentiable techniques. Our implementation has been made publicly available to facilitate further research on efficient architecture search algorithms.

|

||||

|

||||

|

||||

|

||||

|

||||

## Results and models

|

||||

|

||||

|

|

|

|||

|

|

@ -9,7 +9,8 @@

|

|||

We revisit the one-shot Neural Architecture Search (NAS) paradigm and analyze its advantages over existing NAS approaches. Existing one-shot method, however, is hard to train and not yet effective on large scale datasets like ImageNet. This work propose a Single Path One-Shot model to address the challenge in the training. Our central idea is to construct a simplified supernet, where all architectures are single paths so that weight co-adaption problem is alleviated. Training is performed by uniform path sampling. All architectures (and their weights) are trained fully and equally.

|

||||

Comprehensive experiments verify that our approach is flexible and effective. It is easy to train and fast to search. It effortlessly supports complex search spaces (e.g., building blocks, channel, mixed-precision quantization) and different search constraints (e.g., FLOPs, latency). It is thus convenient to use for various needs. It achieves start-of-the-art performance on the large dataset ImageNet.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@

|

|||

|

||||

Object detectors are usually equipped with backbone networks designed for image classification. It might be sub-optimal because of the gap between the tasks of image classification and object detection. In this work, we present DetNAS to use Neural Architecture Search (NAS) for the design of better backbones for object detection. It is non-trivial because detection training typically needs ImageNet pre-training while NAS systems require accuracies on the target detection task as supervisory signals. Based on the technique of one-shot supernet, which contains all possible networks in the search space, we propose a framework for backbone search on object detection. We train the supernet under the typical detector training schedule: ImageNet pre-training and detection fine-tuning. Then, the architecture search is performed on the trained supernet, using the detection task as the guidance. This framework makes NAS on backbones very efficient. In experiments, we show the effectiveness of DetNAS on various detectors, for instance, one-stage RetinaNet and the two-stage FPN. We empirically find that networks searched on object detection shows consistent superiority compared to those searched on ImageNet classification. The resulting architecture achieves superior performance than hand-crafted networks on COCO with much less FLOPs complexity.

|

||||

|

||||

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

|

|

|

|||

|

|

@ -9,7 +9,9 @@

|

|||

We study how to set channel numbers in a neural network to achieve better accuracy under constrained resources (e.g., FLOPs, latency, memory footprint or model size). A simple and one-shot solution, named AutoSlim, is presented. Instead of training many network samples and searching with reinforcement learning, we train a single slimmable network to approximate the network accuracy of different channel configurations. We then iteratively evaluate the trained slimmable model and greedily slim the layer with minimal accuracy drop. By this single pass, we can obtain the optimized channel configurations under different resource constraints. We present experiments with MobileNet v1, MobileNet v2, ResNet-50 and RL-searched MNasNet on ImageNet classification. We show significant improvements over their default channel configurations. We also achieve better accuracy than recent channel pruning methods and neural architecture search methods.

|

||||

Notably, by setting optimized channel numbers, our AutoSlim-MobileNet-v2 at 305M FLOPs achieves 74.2% top-1 accuracy, 2.4% better than default MobileNet-v2 (301M FLOPs), and even 0.2% better than RL-searched MNasNet (317M FLOPs). Our AutoSlim-ResNet-50 at 570M FLOPs, without depthwise convolutions, achieves 1.3% better accuracy than MobileNet-v1 (569M FLOPs).

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Introduction

|

||||

|

||||

|

|

|

|||

|

|

@ -1 +1,266 @@

|

|||

# Algorithm

|

||||

## Introduction

|

||||

|

||||

### What is algorithm in MMRazor

|

||||

|

||||

MMRazor is a model compression toolkit, which includes 4 mianstream technologies:

|

||||

|

||||

- Neural Architecture Search (NAS)

|

||||

- Pruning

|

||||

- Knowledge Distillation (KD)

|

||||

- Quantization (come soon)

|

||||

|

||||

And in MMRazor, `algorithm` is a general item for these technologies. For example, in NAS,

|

||||

|

||||

[SPOS](https://github.com/open-mmlab/mmrazor/blob/master/configs/nas/spos)[ ](https://arxiv.org/abs/1904.00420)is an `algorithm`, [CWD](https://github.com/open-mmlab/mmrazor/blob/master/configs/distill/cwd) is also an `algorithm` of knowledge distillation.

|

||||

|

||||

`algorithm` is the entrance of `mmrazor/models` . Its role in MMRazor is the same as both `classifier` in [MMClassification](https://github.com/open-mmlab/mmclassification) and `detector` in [MMDetection](https://github.com/open-mmlab/mmdetection).

|

||||

|

||||

### About base algorithm

|

||||

|

||||

In the directory of `models/algorith``ms`, all model compression algorithms are divided into 4 subdirectories: nas / pruning / distill / quantization. These algorithms must inherit from `BaseAlgorithm`, whose definition is as below.

|

||||

|

||||

```Python

|

||||

from typing import Dict, List, Optional, Tuple, Union

|

||||

from mmengine.model import BaseModel

|

||||

from mmrazor.registry import MODELS

|

||||

|

||||

LossResults = Dict[str, torch.Tensor]

|

||||

TensorResults = Union[Tuple[torch.Tensor], torch.Tensor]

|

||||

PredictResults = List[BaseDataElement]

|

||||

ForwardResults = Union[LossResults, TensorResults, PredictResults]

|

||||

|

||||

@MODELS.register_module()

|

||||

class BaseAlgorithm(BaseModel):

|

||||

|

||||

def __init__(self,

|

||||

architecture: Union[BaseModel, Dict],

|

||||

data_preprocessor: Optional[Union[Dict, nn.Module]] = None,

|

||||

init_cfg: Optional[Dict] = None):

|

||||

|

||||

......

|

||||

|

||||

super().__init__(data_preprocessor, init_cfg)

|

||||

self.architecture = architecture

|

||||

|

||||

def forward(self,

|

||||

batch_inputs: torch.Tensor,

|

||||

data_samples: Optional[List[BaseDataElement]] = None,

|

||||

mode: str = 'tensor') -> ForwardResults:

|

||||

|

||||

if mode == 'loss':

|

||||

return self.loss(batch_inputs, data_samples)

|

||||

elif mode == 'tensor':

|

||||

return self._forward(batch_inputs, data_samples)

|

||||

elif mode == 'predict':

|

||||

return self._predict(batch_inputs, data_samples)

|

||||

else:

|

||||

raise RuntimeError(f'Invalid mode "{mode}". '

|

||||

'Only supports loss, predict and tensor mode')

|

||||

|

||||

def loss(

|

||||

self,

|

||||

batch_inputs: torch.Tensor,

|

||||

data_samples: Optional[List[BaseDataElement]] = None,

|

||||

) -> LossResults:

|

||||

"""Calculate losses from a batch of inputs and data samples."""

|

||||

return self.architecture(batch_inputs, data_samples, mode='loss')

|

||||

|

||||

def _forward(

|

||||

self,

|

||||

batch_inputs: torch.Tensor,

|

||||

data_samples: Optional[List[BaseDataElement]] = None,

|

||||

) -> TensorResults:

|

||||

"""Network forward process."""

|

||||

return self.architecture(batch_inputs, data_samples, mode='tensor')

|

||||

|

||||

def _predict(

|

||||

self,

|

||||

batch_inputs: torch.Tensor,

|

||||

data_samples: Optional[List[BaseDataElement]] = None,

|

||||

) -> PredictResults:

|

||||

"""Predict results from a batch of inputs and data samples with post-

|

||||

processing."""

|

||||

return self.architecture(batch_inputs, data_samples, mode='predict')

|

||||

```

|

||||

|

||||

As you can see from above, `BaseAlgorithm` is inherited from `BaseModel` of MMEngine. `BaseModel` implements the basic functions of the algorithmic model, such as weights initialize,

|

||||

|

||||

batch inputs preprocess (see more information in `BaseDataPreprocessor` class of MMEngine), parse losses, and update model parameters. For more details of `BaseModel` , you can see docs for `BaseModel`.

|

||||

|

||||

`BaseAlgorithm`'s forward is just a wrapper of `BaseModel`'s forward. Sub-classes inherited from BaseAlgorithm only need to override the `loss` method, which implements the logic to calculate loss, thus various algorithms can be trained in the runner.

|

||||

|

||||

|

||||

|

||||

## How to use existing algorithms in MMRazor

|

||||

|

||||

1. Configure your architecture that will be slimmed

|

||||

|

||||

- Use the model config of other repos of OpenMMLab directly as below, which is an example of setting Faster-RCNN as our architecture.

|

||||

|

||||

```Python

|

||||

_base_ = [

|

||||

'mmdet::_base_/models/faster_rcnn_r50_fpn.py',

|

||||

]

|

||||

|

||||

architecture = _base_.model

|

||||

```

|

||||

|

||||

- Use your customized model as below, which is an example of defining a VGG model as our architecture.

|

||||

|

||||

> How to customize architectures can refer to our tutorial: [Customize Architectures](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_3_customize_architectures.html#).

|

||||

|

||||

```Python

|

||||

default_scope='mmcls'

|

||||

architecture = dict(

|

||||

type='ImageClassifier',

|

||||

backbone=dict(type='VGG', depth=11, num_classes=1000),

|

||||

neck=None,

|

||||

head=dict(

|

||||

type='ClsHead',

|

||||

loss=dict(type='CrossEntropyLoss', loss_weight=1.0),

|

||||

topk=(1, 5),

|

||||

))

|

||||

```

|

||||

|

||||

2. Apply the registered algorithm to your architecture.

|

||||

|

||||

> The arg name of `algorithm` in config is **model** rather than **algorithm** in order to get better supports of MMCV and MMEngine.

|

||||

|

||||

Maybe more args in model need to set according to the used algorithm.

|

||||

|

||||

```Python

|

||||

model = dict(

|

||||

type='BaseAlgorithm',

|

||||

architecture=architecture)

|

||||

```

|

||||

|

||||

> About the usage of `Config`, refer to [Config ‒ mmcv 1.5.3 documentation](https://mmcv.readthedocs.io/en/latest/understand_mmcv/config.html) please.

|

||||

|

||||

3. Apply some custom hooks or loops to your algorithm. (optional)

|

||||

|

||||

- Custom hooks

|

||||

|

||||

```Python

|

||||

custom_hooks = [

|

||||

dict(type='NaiveVisualizationHook', priority='LOWEST'),

|

||||

]

|

||||

```

|

||||

|

||||

- Custom loops

|

||||

|

||||

```Python

|

||||

_base_ = ['./spos_shufflenet_supernet_8xb128_in1k.py']

|

||||

|

||||

# To chose from ['train_cfg', 'val_cfg', 'test_cfg'] based on your loop type

|

||||

train_cfg = dict(

|

||||

_delete_=True,

|

||||

type='mmrazor.EvolutionSearchLoop',

|

||||

dataloader=_base_.val_dataloader,

|

||||

evaluator=_base_.val_evaluator)

|

||||

|

||||

val_cfg = dict()

|

||||

test_cfg = dict()

|

||||

```

|

||||

|

||||

## How to customize your algorithm

|

||||

|

||||

### Common pipeline

|

||||

|

||||

1. Register a new algorithm

|

||||

|

||||

Create a new file `mmrazor/models/algorithms/{subdirectory}/xxx.py`

|

||||

|

||||

```Python

|

||||

from mmrazor.models.algorithms import BaseAlgorithm

|

||||

from mmrazor.registry import MODELS

|

||||

|

||||

@MODELS.register_module()

|

||||

class XXX(BaseAlgorithm):

|

||||

def __init__(self, architecture):

|

||||

super().__init__(architecture)

|

||||

pass

|

||||

|

||||

def loss(self, batch_inputs):

|

||||

pass

|

||||

```

|

||||

|

||||

2. Rewrite its `loss` method.

|

||||

|

||||

```Python

|

||||

from mmrazor.models.algorithms import BaseAlgorithm

|

||||

from mmrazor.registry import MODELS

|

||||

|

||||

@MODELS.register_module()

|

||||

class XXX(BaseAlgorithm):

|

||||

def __init__(self, architecture):

|

||||

super().__init__(architecture)

|

||||

......

|

||||

|

||||

def loss(self, batch_inputs):

|

||||

......

|

||||

return LossResults

|

||||

```

|

||||

|

||||

3. Add the remaining functions of the algorithm

|

||||

|

||||

> This step is special because of the diversity of algorithms. Some functions of the algorithm may also be implemented in other files.

|

||||

|

||||

```Python

|

||||

from mmrazor.models.algorithms import BaseAlgorithm

|

||||

from mmrazor.registry import MODELS

|

||||

|

||||

@MODELS.register_module()

|

||||

class XXX(BaseAlgorithm):

|

||||

def __init__(self, architecture):

|

||||

super().__init__(architecture)

|

||||

......

|

||||

|

||||

def loss(self, batch_inputs):

|

||||

......

|

||||

return LossResults

|

||||

|

||||

def aaa(self):

|

||||

......

|

||||

|

||||

def bbb(self):

|

||||

......

|

||||

```

|

||||

|

||||

4. Import the class

|

||||

|

||||

You can add the following line to `mmrazor/models/algorithms/``{subdirectory}/``__init__.py`

|

||||

|

||||

```CoffeeScript

|

||||

from .xxx import XXX

|

||||

|

||||

__all__ = ['XXX']

|

||||

```

|

||||

|

||||

In addition, import XXX in `mmrazor/models/algorithms/__init__.py`

|

||||

|

||||

5. Use the algorithm in your config file.

|

||||

|

||||

Please refer to the previous section about how to use existing algorithms in MMRazor

|

||||

|

||||

```Python

|

||||

model = dict(

|

||||

type='XXX',

|

||||

architecture=architecture)

|

||||

```

|

||||

|

||||

### Pipelines for different algorithms

|

||||

|

||||

Please refer to our tutorials about how to customize different algorithms for more details as below.

|

||||

|

||||

1. NAS

|

||||

|

||||

[Customize NAS algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_4_customize_nas_algorithms.html#)

|

||||

|

||||

2. Pruning

|

||||

|

||||

[Customize Pruning algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_5_customize_pruning_algorithms.html)

|

||||

|

||||

3. Distill

|

||||

|

||||

[Customize KD algorithms](https://mmrazor.readthedocs.io/en/latest/tutorials/Tutorial_6_customize_kd_algorithms.html)

|

||||

|

|

@ -1 +1,82 @@

|

|||

# Apply existing algorithms to new tasks

|

||||

Here we show how to apply existing algorithms to other tasks with an example of [SPOS ](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/nas/mmcls/spos)& [DetNAS](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/nas/mmdet/detnas).

|

||||

|

||||

> SPOS: Single Path One-Shot NAS for classification

|

||||

>

|

||||

> DetNAS: Single Path One-Shot NAS for detection

|

||||

|

||||

**You just need to configure the existing algorithms in your config only by replacing** **the architecture of** **mmcls** **with** **mmdet****'s**

|

||||

|

||||

You can implement a new algorithm by inheriting from the existing algorithm quickly if the new task's specificity leads to the failure of applying directly.

|

||||

|

||||

SPOS config VS DetNAS config

|

||||

|

||||

- SPOS

|

||||

|

||||

```Python

|

||||

_base_ = [

|

||||

'mmrazor::_base_/settings/imagenet_bs1024_spos.py',

|

||||

'mmrazor::_base_/nas_backbones/spos_shufflenet_supernet.py',

|

||||

'mmcls::_base_/default_runtime.py',

|

||||

]

|

||||

|

||||

# model

|

||||

supernet = dict(

|

||||

type='ImageClassifier',

|

||||

data_preprocessor=_base_.preprocess_cfg,

|

||||

backbone=_base_.nas_backbone,

|

||||

neck=dict(type='GlobalAveragePooling'),

|

||||

head=dict(

|

||||

type='LinearClsHead',

|

||||

num_classes=1000,

|

||||

in_channels=1024,

|

||||

loss=dict(

|

||||

type='LabelSmoothLoss',

|

||||

num_classes=1000,

|

||||

label_smooth_val=0.1,

|

||||

mode='original',

|

||||

loss_weight=1.0),

|

||||

topk=(1, 5)))

|

||||

|

||||

model = dict(

|

||||

type='mmrazor.SPOS',

|

||||

architecture=supernet,

|

||||

mutator=dict(type='mmrazor.OneShotModuleMutator'))

|

||||

|

||||

find_unused_parameters = True

|

||||

```

|

||||

|

||||

- DetNAS

|

||||

|

||||

```Python

|

||||

_base_ = [

|

||||

'mmdet::_base_/models/faster_rcnn_r50_fpn.py',

|

||||

'mmdet::_base_/datasets/coco_detection.py',

|

||||

'mmdet::_base_/schedules/schedule_1x.py',

|

||||

'mmdet::_base_/default_runtime.py',

|

||||

'mmrazor::_base_/nas_backbones/spos_shufflenet_supernet.py'

|

||||

]

|

||||

|

||||

norm_cfg = dict(type='SyncBN', requires_grad=True)

|

||||

|

||||

supernet = _base_.model

|

||||

|

||||

supernet.backbone = _base_.nas_backbone

|

||||

supernet.backbone.norm_cfg = norm_cfg

|

||||

supernet.backbone.out_indices = (0, 1, 2, 3)

|

||||

supernet.backbone.with_last_layer = False

|

||||

|

||||

supernet.neck.norm_cfg = norm_cfg

|

||||

supernet.neck.in_channels = [64, 160, 320, 640]

|

||||

|

||||

supernet.roi_head.bbox_head.norm_cfg = norm_cfg

|

||||

supernet.roi_head.bbox_head.type = 'Shared4Conv1FCBBoxHead'

|

||||

|

||||

model = dict(

|

||||

_delete_=True,

|

||||

type='mmrazor.SPOS',

|

||||

architecture=supernet,

|

||||

mutator=dict(type='mmrazor.OneShotModuleMutator'))

|

||||

|

||||

find_unused_parameters = True

|

||||

```

|

||||

|

|

@ -1 +1,260 @@

|

|||

# Customize Architectures

|

||||

Different from other tasks, architectures in MMRazor may consist of some special model components, such as **searchable backbones, connectors, dynamic ops**. In MMRazor, you can not only develop some common model components like other codebases of OpenMMLab, but also develop some special model components. Here is how to develop searchable model components and common model components.

|

||||

|

||||

> Please refer to these documents as follows if you want to know about **connectors** and **dynamic ops**.

|

||||

>

|

||||

> [Connector 用户文档](https://aicarrier.feishu.cn/docx/doxcnvJG0VHZLqF82MkCHyr9B8b)

|

||||

>

|

||||

> [Dynamic op 用户文档](https://aicarrier.feishu.cn/docx/doxcnbp4n4HeDkJI1fHlWfVklke)

|

||||

|

||||

## Develop searchable model components

|

||||

|

||||

1. Define a new backbone

|

||||

|

||||

Create a new file `mmrazor/models/architectures/backbones/searchable_shufflenet_v2.py`, class `SearchableShuffleNetV2` inherits from `BaseBackBone` of mmcls, which is the codebase that you will use to build the model.

|

||||

|

||||

```Python

|

||||

# Copyright (c) OpenMMLab. All rights reserved.

|

||||

import copy

|

||||

from typing import Dict, List, Optional, Sequence, Tuple, Union

|

||||

|

||||

import torch.nn as nn

|

||||

from mmcls.models.backbones.base_backbone import BaseBackbone

|

||||

from mmcv.cnn import ConvModule, constant_init, normal_init

|

||||

from mmcv.runner import ModuleList, Sequential

|

||||

from torch import Tensor

|

||||

from torch.nn.modules.batchnorm import _BatchNorm

|

||||

|

||||

from mmrazor.registry import MODELS

|

||||

|

||||

@MODELS.register_module()

|

||||

class SearchableShuffleNetV2(BaseBackbone):

|

||||

|

||||

def __init__(self, ):

|

||||

pass

|

||||

|

||||

def _make_layer(self, out_channels, num_blocks, stage_idx):

|

||||

pass

|

||||

|

||||

def _freeze_stages(self):

|

||||

pass

|

||||

|

||||

def init_weights(self):

|

||||

pass

|

||||

|

||||

def forward(self, x):

|

||||

pass

|

||||

|

||||

def train(self, mode=True):

|

||||

pass

|

||||

```

|

||||

|

||||

2. Build the architecture of the new backbone based on `arch_setting`

|

||||

|

||||

```Python

|

||||

@MODELS.register_module()

|

||||

class SearchableShuffleNetV2(BaseBackbone):

|

||||

def __init__(self,

|

||||

arch_setting: List[List],

|

||||

stem_multiplier: int = 1,

|

||||

widen_factor: float = 1.0,

|

||||

out_indices: Sequence[int] = (4, ),

|

||||

frozen_stages: int = -1,

|

||||

with_last_layer: bool = True,

|

||||

conv_cfg: Optional[Dict] = None,

|

||||

norm_cfg: Dict = dict(type='BN'),

|

||||

act_cfg: Dict = dict(type='ReLU'),

|

||||

norm_eval: bool = False,

|

||||

with_cp: bool = False,

|

||||

init_cfg: Optional[Union[Dict, List[Dict]]] = None) -> None:

|

||||

layers_nums = 5 if with_last_layer else 4

|

||||

for index in out_indices:

|

||||

if index not in range(0, layers_nums):

|

||||

raise ValueError('the item in out_indices must in '

|

||||

f'range(0, 5). But received {index}')

|

||||

|

||||

self.frozen_stages = frozen_stages

|

||||

if frozen_stages not in range(-1, layers_nums):

|

||||

raise ValueError('frozen_stages must be in range(-1, 5). '

|

||||

f'But received {frozen_stages}')

|

||||

|

||||

super().__init__(init_cfg)

|

||||

|

||||

self.arch_setting = arch_setting

|

||||

self.widen_factor = widen_factor

|

||||

self.out_indices = out_indices

|

||||

self.conv_cfg = conv_cfg

|

||||

self.norm_cfg = norm_cfg

|

||||

self.act_cfg = act_cfg

|

||||

self.norm_eval = norm_eval

|

||||

self.with_cp = with_cp

|

||||

|

||||

last_channels = 1024

|

||||

self.in_channels = 16 * stem_multiplier

|

||||

|

||||

# build the first layer

|

||||

self.conv1 = ConvModule(

|

||||

in_channels=3,

|

||||

out_channels=self.in_channels,

|

||||

kernel_size=3,

|

||||

stride=2,

|

||||

padding=1,

|

||||

conv_cfg=conv_cfg,

|

||||

norm_cfg=norm_cfg,

|

||||

act_cfg=act_cfg)

|

||||

|

||||

# build the middle layers

|

||||

self.layers = ModuleList()

|

||||

for channel, num_blocks, mutable_cfg in arch_setting:

|

||||

out_channels = round(channel * widen_factor)

|

||||

layer = self._make_layer(out_channels, num_blocks,

|

||||

copy.deepcopy(mutable_cfg))

|

||||

self.layers.append(layer)

|

||||

|

||||

# build the last layer

|

||||

if with_last_layer:

|

||||

self.layers.append(

|

||||

ConvModule(

|

||||

in_channels=self.in_channels,

|

||||

out_channels=last_channels,

|

||||

kernel_size=1,

|

||||

conv_cfg=conv_cfg,

|

||||

norm_cfg=norm_cfg,

|

||||

act_cfg=act_cfg))

|

||||

```

|

||||

|

||||

3. Implement`_make_layer` with `mutable_cfg`

|

||||

|

||||

```Python

|

||||

@MODELS.register_module()

|

||||

class SearchableShuffleNetV2(BaseBackbone):

|

||||

|

||||

...

|

||||

|

||||

def _make_layer(self, out_channels: int, num_blocks: int,

|

||||

mutable_cfg: Dict) -> Sequential:

|

||||

"""Stack mutable blocks to build a layer for ShuffleNet V2.

|

||||

Note:

|

||||

Here we use ``module_kwargs`` to pass dynamic parameters such as

|

||||

``in_channels``, ``out_channels`` and ``stride``

|

||||

to build the mutable.

|

||||

Args:

|

||||

out_channels (int): out_channels of the block.

|

||||

num_blocks (int): number of blocks.

|

||||

mutable_cfg (dict): Config of mutable.

|

||||

Returns:

|

||||

mmcv.runner.Sequential: The layer made.

|

||||

"""

|

||||

layers = []

|

||||

for i in range(num_blocks):

|

||||

stride = 2 if i == 0 else 1

|

||||

|

||||

mutable_cfg.update(

|

||||

module_kwargs=dict(

|

||||

in_channels=self.in_channels,

|

||||

out_channels=out_channels,

|

||||

stride=stride))

|

||||

layers.append(MODELS.build(mutable_cfg))

|

||||

self.in_channels = out_channels

|

||||

|

||||

return Sequential(*layers)

|

||||

|

||||

...

|

||||

```

|

||||

|

||||

4. Implement other common methods

|

||||

|

||||

You can refer to the implementation of `ShuffleNetV2` in mmcls for finishing other common methods.

|

||||

|

||||

5. Import the module

|

||||

|

||||

You can either add the following line to `mmrazor/models/architectures/backbones/__init__.py`

|

||||

|

||||

```Python

|

||||

from .searchable_shufflenet_v2 import SearchableShuffleNetV2

|

||||

|

||||

__all__ = ['SearchableShuffleNetV2']

|

||||

```

|

||||

|

||||

or alternatively add

|

||||

|

||||

```Python

|

||||

custom_imports = dict(

|

||||

imports=['mmrazor.models.architectures.backbones.searchable_shufflenet_v2'],

|

||||

allow_failed_imports=False)

|

||||

```

|

||||

|

||||

to the config file to avoid modifying the original code.

|

||||

|

||||

6. Use the backbone in your config file

|

||||

|

||||

```Python

|

||||

architecture = dict(

|

||||

type=xxx,

|

||||

model=dict(

|

||||

...

|

||||

backbone=dict(

|

||||

type='mmrazor.SearchableShuffleNetV2',

|

||||

arg1=xxx,

|

||||

arg2=xxx),

|

||||

...

|

||||

```

|

||||

|

||||

## Develop common model components

|

||||

|

||||

Here we show how to add a new backbone with an example of `xxxNet`.

|

||||

|

||||

1. Define a new backbone

|

||||

|

||||

Create a new file `mmrazor/models/architectures/backbones/xxxnet.py`, then implement the class `xxxNet`.

|

||||

|

||||

```Python

|

||||

from mmengine.model import BaseModule

|

||||

from mmrazor.registry import MODELS

|

||||

|

||||

@MODELS.register_module()

|

||||

class xxxNet(BaseModule):

|

||||

|

||||

def __init__(self, arg1, arg2, init_cfg=None):

|

||||

super().__init__(init_cfg=init_cfg)

|

||||

pass

|

||||

|

||||

def forward(self, x):

|

||||

pass

|

||||

```

|

||||

|

||||

2. Import the module

|

||||

|

||||

You can either add the following line to `mmrazor/models/architectures/backbones/__init__.py`

|

||||

|

||||

```Python

|

||||

from .xxxnet import xxxNet

|

||||

|

||||

__all__ = ['xxxNet']

|

||||

```

|

||||

|

||||

or alternatively add

|

||||

|

||||

```Python

|

||||

custom_imports = dict(

|

||||

imports=['mmrazor.models.architectures.backbones.xxxnet'],

|

||||

allow_failed_imports=False)

|

||||

```

|

||||

|

||||

to the config file to avoid modifying the original code.

|

||||

|

||||

3. Use the backbone in your config file

|

||||

|

||||

```Python

|

||||

architecture = dict(

|

||||

type=xxx,

|

||||

model=dict(

|

||||

...

|

||||

backbone=dict(

|

||||

type='xxxNet',

|

||||

arg1=xxx,

|

||||

arg2=xxx),

|

||||

...

|

||||

```

|

||||

|

||||

How to add other model components is similar to backbone's. For more details, please refer to other codebases' docs.

|

||||

|

|

@ -1 +1,159 @@

|

|||

# Customize mixed algorithms

|

||||

Here we show how to customize mixed algorithms with our algorithm components. We take [AutoSlim ](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/pruning/mmcls/autoslim)as an example.

|

||||

|

||||

> **Why is AutoSlim a mixed algorithm?**

|

||||

>

|

||||

> In [AutoSlim](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/pruning/mmcls/autoslim), the sandwich rule and the inplace distillation will be introduced to enhance the training process, which is called as the slimmable training. The sandwich rule means that we train the model at smallest width, largest width and (n − 2) random widths, instead of n random widths. And the inplace distillation means that we use the predicted label of the model at the largest width as the training label for other widths, while for the largest width we use ground truth. So both the KD algorithm and the pruning algorithm are used in [AutoSlim](https://github.com/open-mmlab/mmrazor/tree/dev-1.x/configs/pruning/mmcls/autoslim).

|

||||

|

||||

1. Register a new algorithm

|

||||

|

||||

Create a new file `mmrazor/models/algorithms/nas/autoslim.py`, class `AutoSlim` inherits from class `BaseAlgorithm`. You need to build the KD algorithm component (distiller) and the pruning algorithm component (mutator) because AutoSlim is a mixed algorithm.

|

||||

|

||||

> You can also inherit from the existing algorithm instead of `BaseAlgorithm` if your algorithm is similar to the existing algorithm.

|

||||

|

||||

> You can choose existing algorithm components in MMRazor, such as `OneShotChannelMutator` and `ConfigurableDistiller` in AutoSlim.

|

||||

>

|

||||

> If these in MMRazor don't meet your needs, you can customize new algorithm components for your algorithm. Reference is as follows:

|

||||

>

|

||||

> [Tutorials: Customize KD algorithms](https://aicarrier.feishu.cn/docx/doxcnFWOTLQYJ8FIlUGsYrEjisd)

|

||||

>

|

||||

> [Tutorials: Customize Pruning algorithms](https://aicarrier.feishu.cn/docx/doxcnzXlPv0cDdmd0wNrq0SEqsh)

|

||||

>

|

||||

> [Tutorials: Customize KD algorithms](https://aicarrier.feishu.cn/docx/doxcnFWOTLQYJ8FIlUGsYrEjisd)

|

||||

|

||||

```Python

|

||||

# Copyright (c) OpenMMLab. All rights reserved.

|

||||

from typing import Dict, List, Optional, Union

|

||||

import torch

|

||||

from torch import nn

|

||||

|

||||

from mmrazor.models.distillers import ConfigurableDistiller

|

||||

from mmrazor.models.mutators import OneShotChannelMutator

|

||||

from mmrazor.registry import MODELS

|

||||

from ..base import BaseAlgorithm

|

||||

|

||||

VALID_MUTATOR_TYPE = Union[OneShotChannelMutator, Dict]

|

||||

VALID_DISTILLER_TYPE = Union[ConfigurableDistiller, Dict]

|

||||

|

||||

@MODELS.register_module()

|

||||

class AutoSlim(BaseAlgorithm):

|

||||

def __init__(self,

|

||||

mutator: VALID_MUTATOR_TYPE,

|

||||

distiller: VALID_DISTILLER_TYPE,

|

||||

architecture: Union[BaseModel, Dict],

|

||||

data_preprocessor: Optional[Union[Dict, nn.Module]] = None,

|

||||

init_cfg: Optional[Dict] = None,

|

||||

num_samples: int = 2) -> None:

|

||||

super().__init__(architecture, data_preprocessor, init_cfg)

|

||||

self.mutator = self._build_mutator(mutator)

|

||||

# `prepare_from_supernet` must be called before distiller initialized

|

||||

self.mutator.prepare_from_supernet(self.architecture)

|

||||

|

||||

self.distiller = self._build_distiller(distiller)

|

||||

self.distiller.prepare_from_teacher(self.architecture)

|

||||

self.distiller.prepare_from_student(self.architecture)

|

||||

|

||||

......

|

||||

|

||||

def _build_mutator(self,

|

||||

mutator: VALID_MUTATOR_TYPE) -> OneShotChannelMutator:

|

||||

"""build mutator."""

|

||||

if isinstance(mutator, dict):

|

||||

mutator = MODELS.build(mutator)

|

||||

if not isinstance(mutator, OneShotChannelMutator):

|

||||

raise TypeError('mutator should be a `dict` or '

|

||||

'`OneShotModuleMutator` instance, but got '

|

||||

f'{type(mutator)}')

|

||||

|

||||

return mutator

|

||||

|

||||

def _build_distiller(

|

||||

self, distiller: VALID_DISTILLER_TYPE) -> ConfigurableDistiller:

|

||||

if isinstance(distiller, dict):

|

||||

distiller = MODELS.build(distiller)

|

||||

if not isinstance(distiller, ConfigurableDistiller):

|

||||

raise TypeError('distiller should be a `dict` or '

|

||||

'`ConfigurableDistiller` instance, but got '

|

||||

f'{type(distiller)}')

|

||||

|

||||

return distiller

|

||||

```

|

||||

|

||||

2. Implement the core logic in `train_step`

|

||||

|

||||

In `train_step`, both the `mutator` and the `distiller` play an important role. For example, `sample_subnet`, `set_max_subnet` and `set_min_subnet` are supported by the `mutator`, and the function of`distill_step` is mainly implemented by the `distiller`.

|

||||

|

||||

```Python

|

||||

@MODELS.register_module()

|

||||

class AutoSlim(BaseAlgorithm):

|

||||

|

||||

......

|

||||

|

||||

def train_step(self, data: List[dict],

|

||||

optim_wrapper: OptimWrapper) -> Dict[str, torch.Tensor]:

|

||||

|

||||

def distill_step(

|

||||

batch_inputs: torch.Tensor, data_samples: List[BaseDataElement]

|

||||

) -> Dict[str, torch.Tensor]:

|

||||

......

|

||||

|

||||

......

|

||||

|

||||

batch_inputs, data_samples = self.data_preprocessor(data, True)

|

||||

|

||||

total_losses = dict()

|

||||

# update the max subnet loss.

|

||||

self.set_max_subnet()

|

||||

......

|

||||

total_losses.update(add_prefix(max_subnet_losses, 'max_subnet'))

|

||||

|

||||

# update the min subnet loss.

|

||||

self.set_min_subnet()

|

||||

min_subnet_losses = distill_step(batch_inputs, data_samples)

|

||||

total_losses.update(add_prefix(min_subnet_losses, 'min_subnet'))

|

||||

|

||||

# update the random subnet loss.

|

||||

for sample_idx in range(self.num_samples):

|

||||

self.set_subnet(self.sample_subnet())

|

||||

random_subnet_losses = distill_step(batch_inputs, data_samples)

|

||||

total_losses.update(

|

||||

add_prefix(random_subnet_losses,

|

||||

f'random_subnet_{sample_idx}'))

|

||||

|

||||

return total_losses

|

||||

```

|

||||

|

||||

3. Import the class

|

||||

|

||||

You can either add the following line to `mmrazor/models/algorithms/nas/__init__.py`

|

||||

|

||||

```Python

|

||||

from .autoslim import AutoSlim

|

||||

|

||||

__all__ = ['AutoSlim']

|

||||

```

|

||||

|

||||

or alternatively add

|

||||

|

||||

```Python

|

||||

custom_imports = dict(

|

||||

imports=['mmrazor.models.algorithms.nas.autoslim'],

|

||||

allow_failed_imports=False)

|

||||

```

|

||||

|

||||

to the config file to avoid modifying the original code.

|

||||

|

||||

4. Use the algorithm in your config file

|

||||

|

||||

```Python

|

||||

model= dict(

|

||||

type='mmrazor.AutoSlim',

|

||||

architecture=...,

|

||||

mutator=dict(

|

||||

type='OneShotChannelMutator',

|

||||

...),

|

||||

distiller=dict(

|

||||

type='ConfigurableDistiller',

|

||||

...),

|

||||

...)

|

||||

```

|

||||

|

|

@ -1 +1,219 @@

|

|||

# Delivery

|

||||

## Introduction of Delivery

|

||||

|

||||

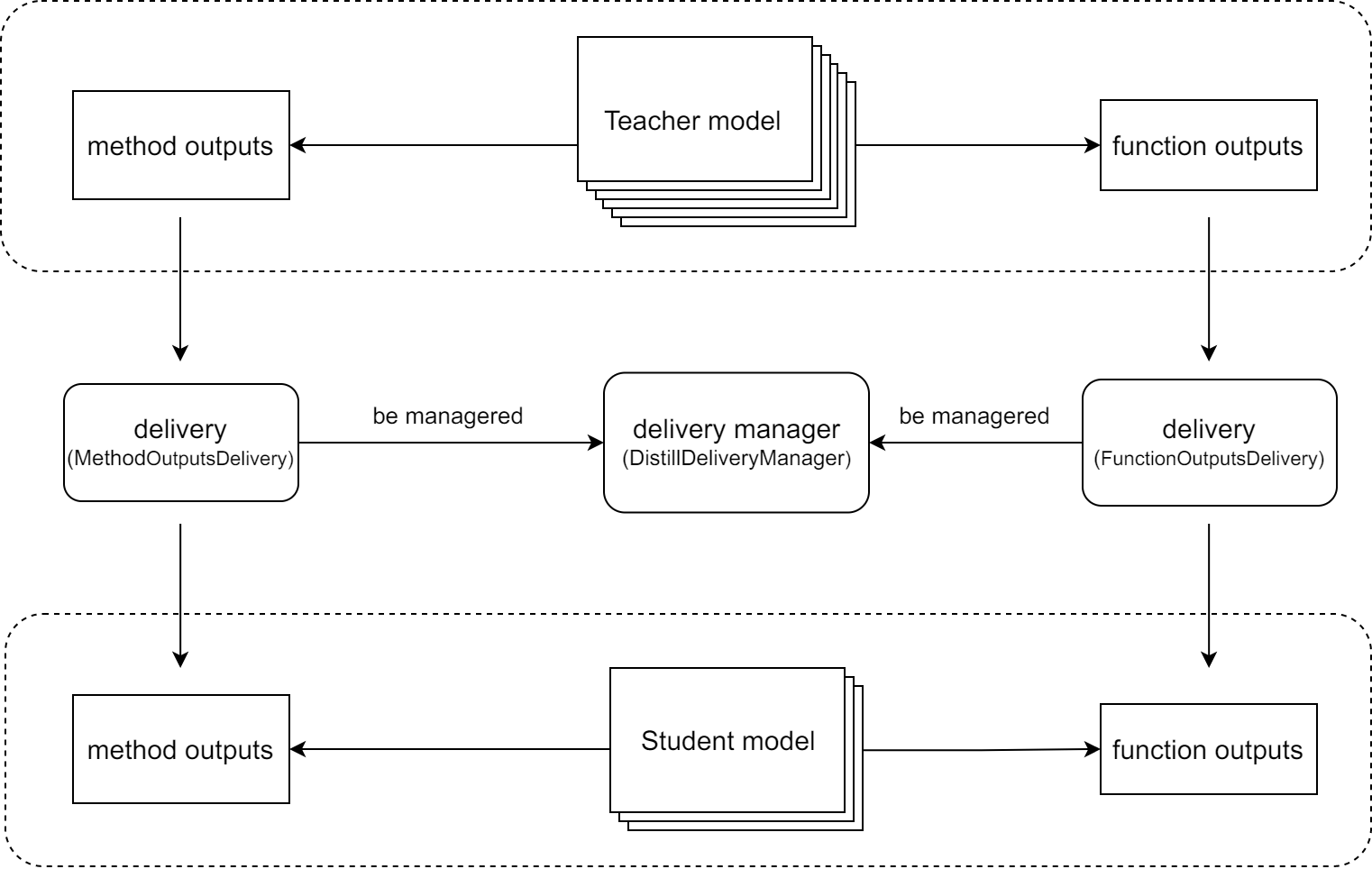

`Delivery` is a mechanism used in **knowledge distillation****,** which is to **align the intermediate results** between the teacher model and the student model by delivering and rewriting these intermediate results between them. As shown in the figure below, deliveries can be used to:

|

||||

|

||||

- **Deliver the output of a layer of the teacher model directly to a layer of the student model.** In some knowledge distillation algorithms, we may need to deliver the output of a layer of the teacher model to the student model directly. For example, in [LAD](https://arxiv.org/abs/2108.10520) algorithm, the student model needs to obtain the label assignment of the teacher model directly.

|

||||

- **Align the inputs of the teacher model and the student model.** For example, in the MMClassification framework, some widely used data augmentations such as [mixup](https://arxiv.org/abs/1710.09412) and [CutMix](https://arxiv.org/abs/1905.04899) are not implemented in Data Pipelines but in `forward_train`, and due to the randomness of these data augmentation methods, it may lead to a gap between the input of the teacher model and the student model.

|

||||

|

||||

|

||||

|

||||

In general, the delivery mechanism allows us to deliver intermediate results between the teacher model and the student model **without adding additional code**, which reduces the hard coding in the source code.

|

||||

|

||||

## Usage of Delivery

|

||||

|

||||

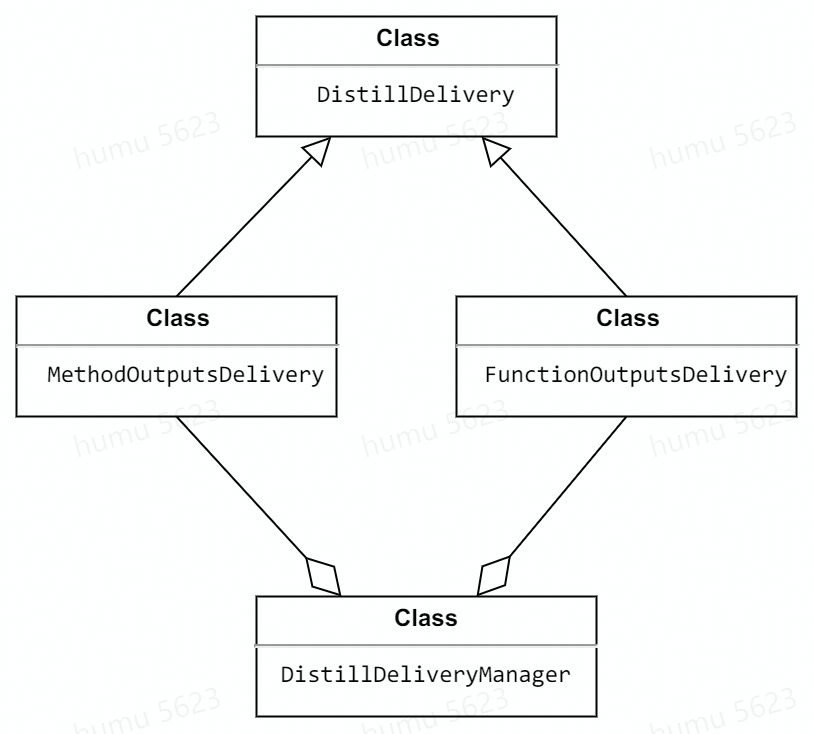

Currently, we support two deliveries: `FunctionOutputs``Delivery` and `MethodOutputs``Delivery`, both of which inherit from `DistillDiliver`. And these deliveries can be managed by `Distill``Delivery``Manager` or just be used on their own.

|

||||

|

||||

Their relationship is shown below.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### FunctionOutputsDelivery

|

||||

|

||||

`FunctionOutputs``Delivery` is used to align the **function's** intermediate results between the teacher model and the student model.

|

||||

|

||||

> When initializing `FunctionOutputs``Delivery`, you need to pass `func_path` argument, which requires extra attention. For example,

|

||||

`anchor_inside_flags` is a function in mmdetection to check whether the

|

||||

anchors are inside the border. This function is in

|

||||

`mmdet/core/anchor/utils.py` and used in

|

||||

`mmdet/models/dense_heads/anchor_head`. Then the `func_path` should be

|

||||

`mmdet.models.dense_heads.anchor_head.anchor_inside_flags` but not

|

||||

`mmdet.core.anchor.utils.anchor_inside_flags`.

|

||||

|

||||

#### Case 1: Delivery single function's output from the teacher to the student.

|

||||

|

||||

```Python

|

||||

import random

|

||||

from mmrazor.core import FunctionOutputsDelivery

|

||||

|

||||

def toy_func() -> int:

|

||||

return random.randint(0, 1000000)

|

||||

|

||||

delivery = FunctionOutputsDelivery(max_keep_data=1, func_path='toy_module.toy_func')

|

||||

|

||||

# override_data is False, which means that not override the data with

|

||||

# the recorded data. So it will get the original output of toy_func

|

||||

# in teacher model, and it is also recorded to be deliveried to the student.

|

||||

delivery.override_data = False

|

||||

with delivery:

|

||||

output_teacher = toy_module.toy_func()

|

||||

|

||||

# override_data is True, which means that override the data with

|

||||

# the recorded data, so it will get the output of toy_func

|

||||

# in teacher model rather than the student's.

|

||||

delivery.override_data = True

|

||||

with delivery:

|

||||

output_student = toy_module.toy_func()

|

||||

|

||||

print(output_teacher == output_student)

|

||||

```

|

||||

|

||||

Out:

|

||||

|

||||

```Python

|

||||

True

|

||||

```

|

||||

|

||||

#### Case 2: Delivery multi function's outputs from the teacher to the student.

|

||||

|

||||

If a function is executed more than once during the forward of the teacher model, all the outputs of this function will be used to override function outputs from the student model

|

||||

|

||||

> Delivery order is first-in first-out.

|

||||

|

||||

```Python

|

||||

delivery = FunctionOutputsDelivery(

|

||||

max_keep_data=2, func_path='toy_module.toy_func')

|

||||

|

||||

delivery.override_data = False

|

||||

with delivery:

|

||||

output1_teacher = toy_module.toy_func()

|

||||

output2_teacher = toy_module.toy_func()

|

||||

|

||||

delivery.override_data = True

|

||||

with delivery:

|

||||

output1_student = toy_module.toy_func()

|

||||

output2_student = toy_module.toy_func()

|

||||

|

||||

print(output1_teacher == output1_student and output2_teacher == output2_student)

|

||||

```

|

||||

|

||||

Out:

|

||||

|

||||

```Python

|

||||

True

|

||||

```

|

||||

|

||||

|

||||

|

||||

### MethodOutputsDelivery

|

||||

|

||||

`MethodOutputs``Delivery` is used to align the **method's** intermediate results between the teacher model and the student model.

|

||||

|

||||

#### Case: **Align the inputs of the teacher model and the student model**

|

||||

|

||||

Here we use mixup as an example to show how to align the inputs of the teacher model and the student model.

|

||||

|

||||

- Without Delivery

|

||||

|

||||

```Python

|

||||

# main.py

|

||||

from mmcls.models.utils import Augments

|

||||

from mmrazor.core import MethodOutputsDelivery

|

||||

|

||||

augments_cfg = dict(type='BatchMixup', alpha=1., num_classes=10, prob=1.0)

|

||||

augments = Augments(augments_cfg)

|

||||

|

||||

imgs = torch.randn(2, 3, 32, 32)

|

||||

label = torch.randint(0, 10, (2,))

|

||||

|

||||

imgs_teacher, label_teacher = augments(imgs, label)

|

||||

imgs_student, label_student = augments(imgs, label)

|

||||

|

||||

print(torch.equal(label_teacher, label_student))

|

||||

print(torch.equal(imgs_teacher, imgs_student))

|

||||

```

|

||||

|

||||

Out:

|

||||

|

||||

```Python

|

||||

False

|

||||

False

|

||||

from mmcls.models.utils import Augments

|

||||

from mmrazor.core import DistillDeliveryManager

|

||||

```

|

||||

|

||||

The results are different due to the randomness of mixup.

|

||||

|

||||

- With Delivery

|

||||

|

||||

```Python

|

||||

delivery = MethodOutputsDelivery(

|

||||

max_keep_data=1, method_path='mmcls.models.utils.Augments.__call__')

|

||||

|

||||

delivery.override_data = False

|

||||

with delivery:

|

||||

imgs_teacher, label_teacher = augments(imgs, label)

|

||||

|

||||

delivery.override_data = True

|

||||

with delivery:

|

||||

imgs_student, label_student = augments(imgs, label)

|

||||

|

||||

print(torch.equal(label_teacher, label_student))

|

||||

print(torch.equal(imgs_teacher, imgs_student))

|

||||

```

|

||||

|

||||

Out:

|

||||

|

||||

```Python

|

||||

True

|

||||

True

|

||||

```

|

||||

|

||||

The randomness is eliminated by using `MethodOutputsDelivery`.

|

||||

|

||||

|

||||

|

||||

### 2.3 DistillDeliveryManager

|

||||

|

||||

`Distill``Delivery``Manager` is actually a context manager, used to manage delivers. When entering the `Distill``Delivery``Manager`, all delivers managed will be started.

|

||||

|

||||

With the help of `Distill``Delivery``Manager`, we are able to manage several different DistillDeliveries with as little code as possible, thereby reducing the possibility of errors.

|

||||

|

||||

#### Case: Manager deliveries with DistillDeliveryManager

|

||||

|

||||

```Python

|

||||

from mmcls.models.utils import Augments

|

||||

from mmrazor.core import DistillDeliveryManager

|

||||

|

||||

augments_cfg = dict(type='BatchMixup', alpha=1., num_classes=10, prob=1.0)

|

||||

augments = Augments(augments_cfg)

|

||||

|

||||

distill_deliveries = [

|

||||

ConfigDict(type='MethodOutputs', max_keep_data=1,

|

||||

method_path='mmcls.models.utils.Augments.__call__')]

|

||||

|

||||

# instantiate DistillDeliveryManager

|

||||

manager = DistillDeliveryManager(distill_deliveries)

|

||||

|

||||

imgs = torch.randn(2, 3, 32, 32)

|

||||

label = torch.randint(0, 10, (2,))

|

||||

|

||||

manager.override_data = False

|

||||

with manager:

|

||||

imgs_teacher, label_teacher = augments(imgs, label)

|

||||

|

||||

manager.override_data = True

|

||||

with manager:

|

||||

imgs_student, label_student = augments(imgs, label)

|

||||

|

||||

print(torch.equal(label_teacher, label_student))

|

||||

print(torch.equal(imgs_teacher, imgs_student))

|

||||

```

|

||||

|

||||

Out:

|

||||

|

||||

```Python

|

||||

True

|

||||

True

|

||||

```

|

||||

|

||||

## Reference

|

||||

|

||||

[1] Zhang, Hongyi, et al. "mixup: Beyond empirical risk minimization." *arXiv* abs/1710.09412 (2017).

|

||||

|

||||

[2] Yun, Sangdoo, et al. "Cutmix: Regularization strategy to train strong classifiers with localizable features." *ICCV* (2019).

|

||||

|

||||

[3] Nguyen, Chuong H., et al. "Improving object detection by label assignment distillation." *WACV* (2022).

|

||||

|

|

@ -1 +1,394 @@

|

|||

# Mutable

|

||||

## Introduction

|

||||

|

||||

### What is Mutable

|

||||

|

||||

`Mutable` is one of basic function components in NAS algorithms and some pruning algorithms, which makes supernet searchable by providing optional modules or parameters.

|

||||

|

||||

To understand it better, we take the mutable module as an example to explain as follows.

|

||||

|

||||

|

||||

|

||||

|

||||

As shown in the figure above, `Mutable` is a container that holds some candidate operations, thus it can sample candidates to constitute the subnet. `Supernet` usually consists of multiple `Mutable`, therefore, `Supernet` will be searchable with the help of `Mutable`. And all candidate operations in `Mutable` constitute the search space of `SuperNet`.

|

||||

|

||||

> If you want to know more about the relationship between Mutable and Mutator, please refer to [Mutator 用户文档](https://aicarrier.feishu.cn/docx/doxcnmcie75HcbqkfBGaEoemBKg)

|

||||

|

||||

### Features

|

||||

|

||||

#### 1. Support module mutable

|

||||

|

||||

It is the common and basic function for NAS algorithms. We can use it to implement some classical one-shot NAS algorithms, such as [SPOS](https://arxiv.org/abs/1904.00420), [DetNAS ](https://arxiv.org/abs/1903.10979)and so on.

|

||||

|

||||

#### 2. Support parameter mutable

|

||||

|

||||

To implement more complicated and funny algorithms easier, we supported making some important parameters searchable, such as input channel, output channel, kernel size and so on.

|

||||

|

||||

What is more, we can implement **dynamic op** by using mutable parameters.

|

||||

|

||||

#### 3. Support deriving from mutable parameter

|

||||

|

||||

Because of the restriction of defined architecture, there may be correlations between some mutable parameters, **such as concat and expand ratio.**

|

||||

|

||||