Mirror of https://github.com/open-mmlab/mmselfsup.git, synced 2025-06-03 14:59:38 +08:00



Analysis tools

Count number of parameters

```shell
python tools/analysis_tools/count_parameters.py ${CONFIG_FILE}
```

An example:

```shell
python tools/analysis_tools/count_parameters.py configs/selfsup/mocov2/mocov2_resnet50_8xb32-coslr-200e_in1k.py
```
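Counting parameters amounts to summing the element count of every parameter tensor, optionally split by whether it is trainable. The stdlib-only sketch below shows that arithmetic. It makes one assumption: each parameter is described by a hypothetical `(shape, requires_grad)` pair, standing in for what `model.named_parameters()` would yield in PyTorch. The parameter names and shapes are made up, and this is not the script's own code.

```python
from math import prod

# Hypothetical parameter table: name -> (shape, requires_grad).
# In PyTorch this information would come from model.named_parameters().
ParamTable = dict[str, tuple[tuple[int, ...], bool]]


def count_parameters(params: ParamTable) -> tuple[int, int]:
    """Return (total, trainable) element counts over all parameters."""
    total = trainable = 0
    for shape, requires_grad in params.values():
        n = prod(shape)  # elements in this tensor; prod(()) == 1 for scalars
        total += n
        if requires_grad:
            trainable += n
    return total, trainable


example: ParamTable = {
    "backbone.conv1.weight": ((64, 3, 7, 7), True),
    "backbone.bn1.weight": ((64,), True),
    "backbone.bn1.bias": ((64,), True),
    "neck.fc.weight": ((128, 2048), False),  # a frozen layer
}
total, trainable = count_parameters(example)
print(f"Number of parameters: {total / 1e6:.2f} M ({trainable / 1e6:.2f} M trainable)")
```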

Publish a model

Before you publish a model, you may want to:

- Convert model weights to CPU tensors.

- Delete the optimizer states.

- Compute the hash of the checkpoint file and append the hash id to the filename.

```shell
python tools/model_converters/publish_model.py ${INPUT_FILENAME} ${OUTPUT_FILENAME}
```

An example:

```shell
python tools/model_converters/publish_model.py YOUR/PATH/epoch_100.pth YOUR/PATH/epoch_100_output.pth
```
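The last two publishing steps can be sketched as follows. This is a minimal sketch, assuming the checkpoint is a plain dict with an `optimizer` entry:

- `pickle` stands in for `torch.save` so that it runs without PyTorch.
- The CPU conversion step is omitted.
- Using an 8-character hash prefix in the filename is an assumption about the naming scheme.
- `strip_optimizer`, `sha256_prefix` and `publish` are hypothetical helpers, not functions from `publish_model.py`.

```python
import hashlib
import pickle
from pathlib import Path


def strip_optimizer(checkpoint: dict) -> dict:
    """Return a shallow copy of the checkpoint without optimizer state."""
    return {k: v for k, v in checkpoint.items() if k != "optimizer"}


def sha256_prefix(path: Path, length: int = 8) -> str:
    """Hex SHA-256 digest of a file, truncated to `length` characters."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):  # read in 1 MiB chunks
            digest.update(chunk)
    return digest.hexdigest()[:length]


def publish(checkpoint: dict, out_file: Path) -> Path:
    """Save a slimmed checkpoint, then rename it so the name includes its hash."""
    # pickle stands in for torch.save so that this sketch runs without PyTorch.
    out_file.write_bytes(pickle.dumps(strip_optimizer(checkpoint)))
    final_file = out_file.with_name(
        f"{out_file.stem}-{sha256_prefix(out_file)}{out_file.suffix}"
    )
    out_file.replace(final_file)  # rename, overwriting any stale file
    return final_file
```

With this sketch, an output path such as `YOUR/PATH/epoch_100_output.pth` would end up as `epoch_100_output-<8 hex chars>.pth`. The hash is computed after saving, so it identifies the exact bytes that are published.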

Reproducibility

If you want your results to be exactly reproducible, set `--cfg-options randomness.deterministic=True` when training the final model. Note that this switches off `torch.backends.cudnn.benchmark` and slows down training.
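The same switch can presumably also be set in the config file instead of on the command line. The fragment below is a sketch under two assumptions: that the config uses the MMEngine-style `randomness` field which the `--cfg-options` key points to, and that a fixed seed is wanted. The seed value is illustrative only.

```python
# Config-file counterpart of `--cfg-options randomness.deterministic=True`,
# assuming the MMEngine-style `randomness` field; the seed value is illustrative.
randomness = dict(
    seed=0,  # fix the random seed so that runs start from the same state
    deterministic=True,  # deterministic cuDNN kernels; cudnn.benchmark is turned off
)
```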

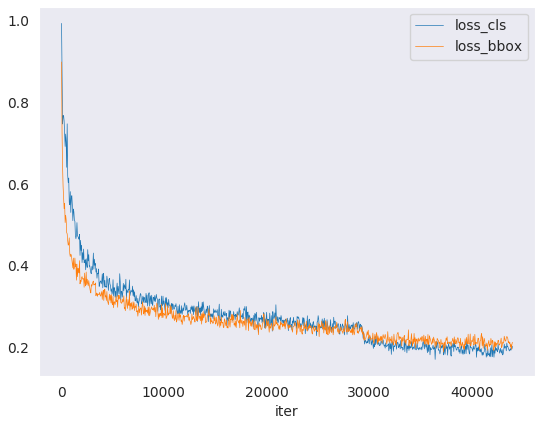

Log Analysis

`tools/analysis_tools/analyze_logs.py` plots loss/lr curves from a training log file. Run `pip install seaborn` first to install the dependency.

```shell
python tools/analysis_tools/analyze_logs.py plot_curve [--keys ${KEYS}] [--title ${TITLE}] [--legend ${LEGEND}] [--backend ${BACKEND}] [--style ${STYLE}] [--out ${OUT_FILE}]
```
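To illustrate the kind of data `plot_curve` works from, the sketch below extracts one series per requested key from a JSON-lines log. It assumes the following schema:

- Each line is a single JSON object with an `iter` field.
- Records whose optional `mode` field is not `train` are skipped.

The real log schema depends on the logger version. `load_curves` and the sample lines are hypothetical and are not taken from the script.

```python
import json
from collections import defaultdict


def load_curves(lines, keys):
    """Collect (iter, value) pairs for each requested key from a JSON-lines log.

    Assumed schema: one JSON object per line with an "iter" field; records
    that carry a "mode" other than "train" (e.g. validation) are skipped.
    """
    curves = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        record = json.loads(line)
        if record.get("mode", "train") != "train":
            continue
        for key in keys:
            if key in record:
                curves[key].append((record["iter"], record[key]))
    return dict(curves)


# Made-up log lines, for illustration only.
sample_log = [
    '{"mode": "train", "epoch": 1, "iter": 10, "lr": 0.03, "loss_dense": 8.1}',
    '{"mode": "train", "epoch": 1, "iter": 20, "lr": 0.03, "loss_dense": 7.6}',
    '{"mode": "val", "epoch": 1, "iter": 20, "accuracy": 12.5}',
]
curves = load_curves(sample_log, ["loss_dense", "lr"])
```

With matplotlib installed, a series could then be drawn with `plt.plot(*zip(*curves["loss_dense"]))`. For epoch-based logs where `iter` restarts every epoch, a running step count would be a better x-axis.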

Examples:

- Plot the `loss_dense` curve of a run.

  ```shell
  python tools/analysis_tools/analyze_logs.py plot_curve log.json --keys loss_dense --legend loss_dense
  ```

- Plot the `loss_dense` and `loss_single` curves of a run, and save the figure to a PDF.

  ```shell
  python tools/analysis_tools/analyze_logs.py plot_curve log.json --keys loss_dense loss_single --out losses.pdf
  ```

- Compare the loss of two runs in the same figure.

  ```shell
  python tools/analysis_tools/analyze_logs.py plot_curve log1.json log2.json --keys loss --legend run1 run2
  ```

- Compute the average training speed.

  ```shell
  python tools/analysis_tools/analyze_logs.py cal_train_time log.json [--include-outliers]
  ```

  The output looks like the following:

  ```
  -----Analyze train time of work_dirs/some_exp/20190611_192040.log.json-----
  slowest epoch 11, average time is 1.2024
  fastest epoch 1, average time is 1.1909
  time std over epochs is 0.0028
  average iter time: 1.1959 s/iter
  ```
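The timing summary can be reproduced roughly as follows. This sketch rests on these assumptions:

- Train records carry `epoch` and `time` (seconds per iteration).
- Unless `include_outliers` is set, the first logged time of each epoch is dropped as a warm-up outlier. This is our reading of what `--include-outliers` controls.

`cal_train_time` and `report` here are hypothetical stand-ins, not the script's code.

```python
import json
from collections import defaultdict
from statistics import mean, pstdev


def cal_train_time(lines, include_outliers=False):
    """Summarise per-iteration training time from a JSON-lines log.

    Assumed schema: train records carry "epoch" and "time" (seconds/iter).
    Unless include_outliers is True, the first logged time of each epoch is
    dropped because it tends to include warm-up overhead.
    """
    times = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        if record.get("mode", "train") == "train" and "time" in record:
            times[record["epoch"]].append(record["time"])
    if not include_outliers:
        # Drop each epoch's first entry; skip epochs left with nothing.
        times = {e: t[1:] for e, t in times.items() if len(t) > 1}
    if not times:
        raise ValueError("no train timing records found")

    epoch_avg = {e: mean(t) for e, t in times.items()}
    slowest = max(epoch_avg, key=epoch_avg.get)
    fastest = min(epoch_avg, key=epoch_avg.get)
    all_times = [x for t in times.values() for x in t]
    return {
        "slowest_epoch": slowest,
        "slowest_avg": epoch_avg[slowest],
        "fastest_epoch": fastest,
        "fastest_avg": epoch_avg[fastest],
        "std_over_epochs": pstdev(list(epoch_avg.values())),
        "avg_iter_time": mean(all_times),
    }


def report(summary):
    """Print the summary in the same layout as the sample output above."""
    print(f"slowest epoch {summary['slowest_epoch']}, average time is {summary['slowest_avg']:.4f}")
    print(f"fastest epoch {summary['fastest_epoch']}, average time is {summary['fastest_avg']:.4f}")
    print(f"time std over epochs is {summary['std_over_epochs']:.4f}")
    print(f"average iter time: {summary['avg_iter_time']:.4f} s/iter")
```

The standard deviation is taken over the per-epoch averages (population form), and the average iteration time is taken over every retained entry.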