-

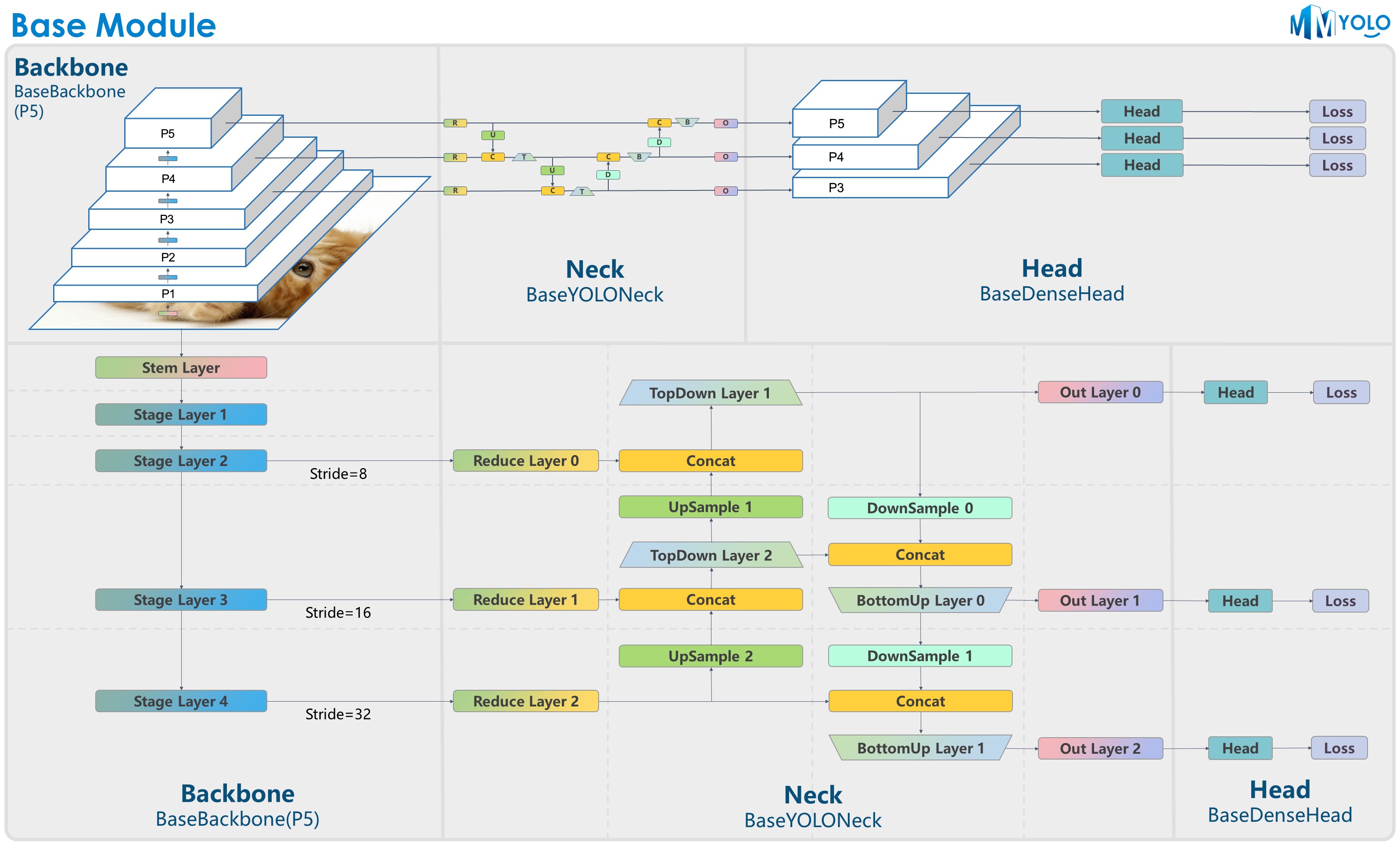

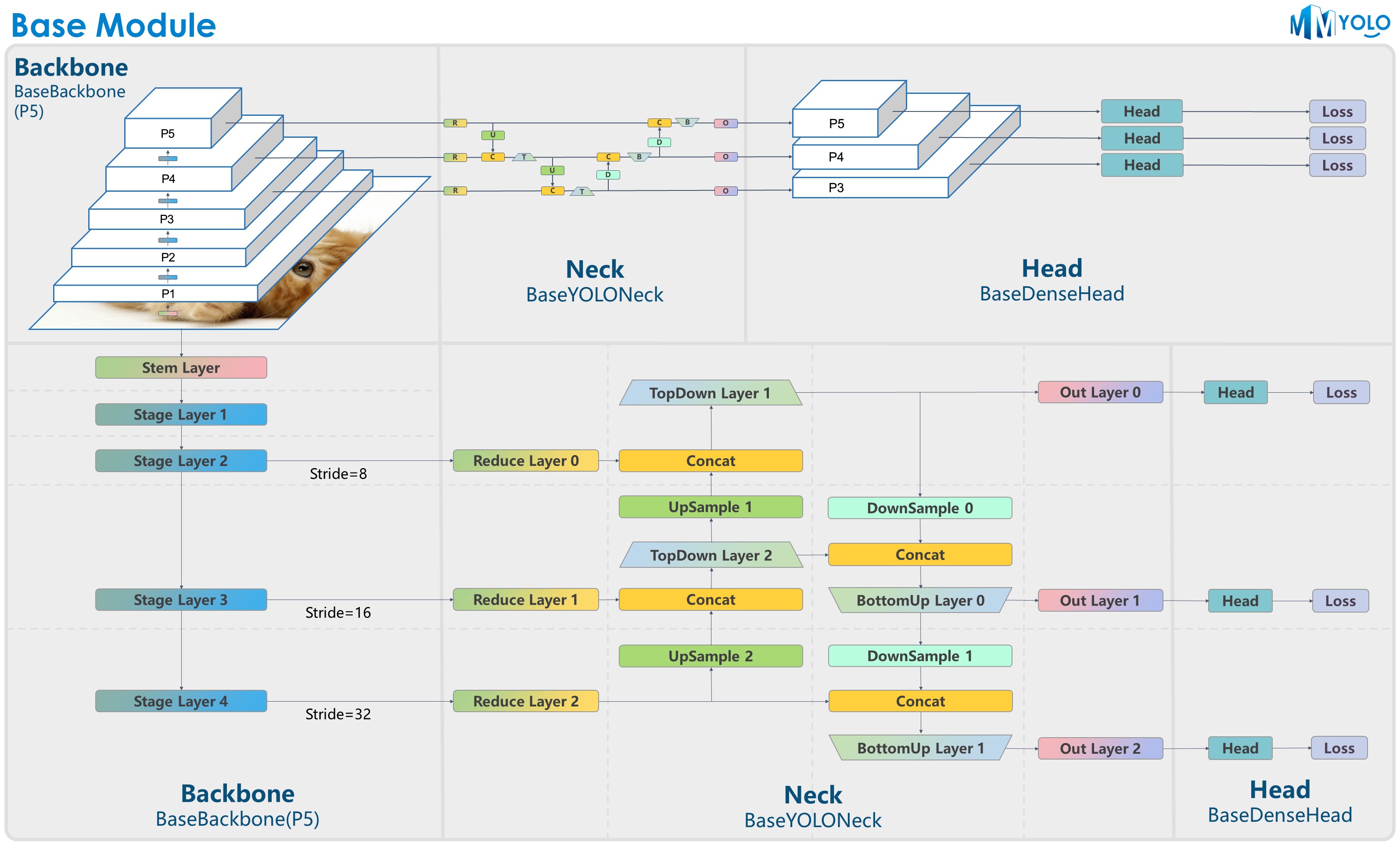

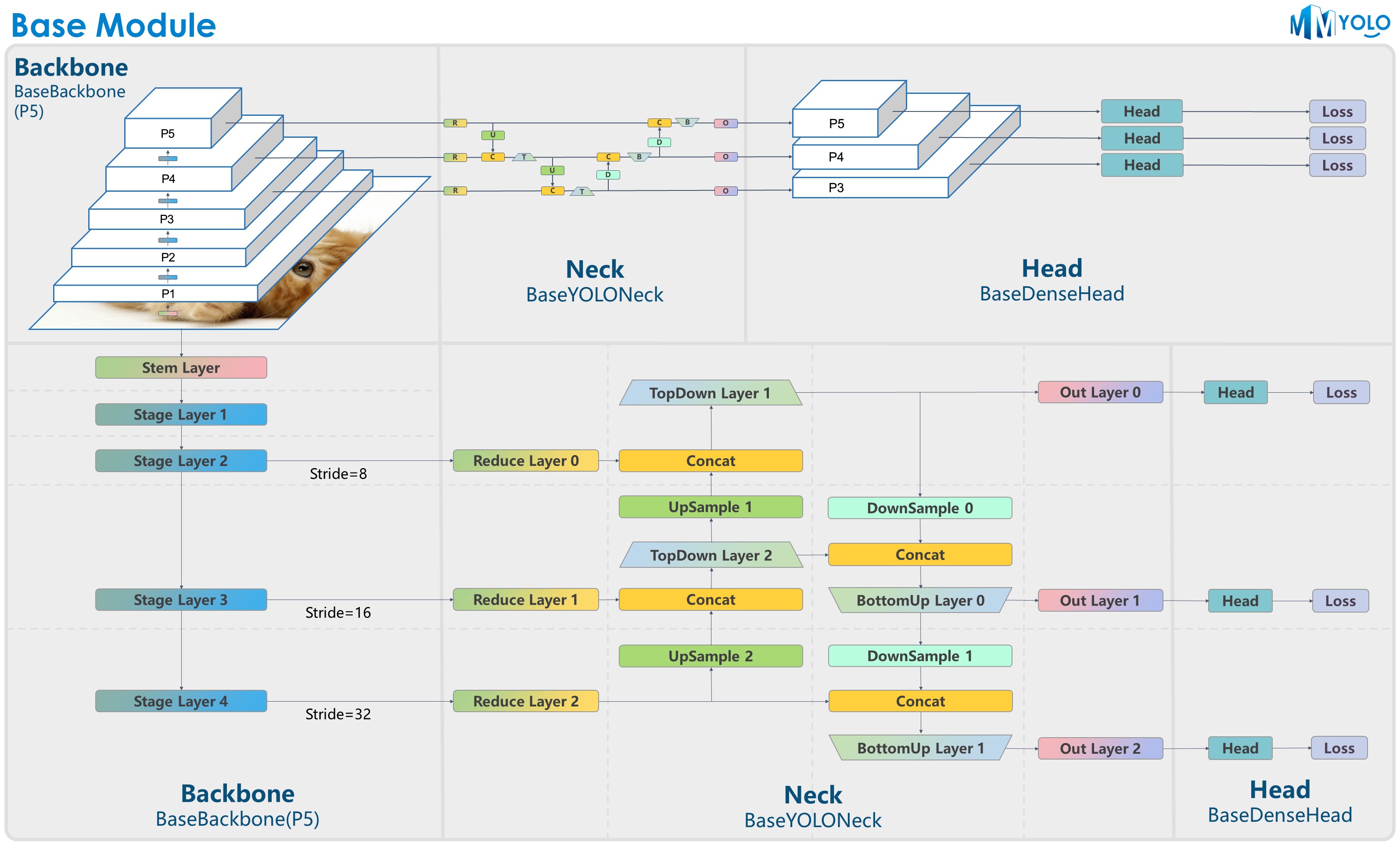

- The figure above is contributed by RangeKing@GitHub, thank you very much!

-And the figure of P6 model is in [model_design.md](docs/en/algorithm_descriptions/model_design.md).

+And the figure of P6 model is in [model_design.md](docs/en/recommended_topics/model_design.md).

## 🛠️ Installation [🔝](#-table-of-contents)

-MMYOLO relies on PyTorch, MMCV, MMEngine, and MMDetection. Below are quick steps for installation. Please refer to the [Install Guide](docs/en/get_started.md) for more detailed instructions.

+MMYOLO relies on PyTorch, MMCV, MMEngine, and MMDetection. Below are quick steps for installation. Please refer to the [Install Guide](docs/en/get_started/installation.md) for more detailed instructions.

```shell

conda create -n open-mmlab python=3.8 pytorch==1.10.1 torchvision==0.11.2 cudatoolkit=11.3 -c pytorch -y

@@ -160,38 +160,96 @@ The usage of MMYOLO is almost identical to MMDetection and all tutorials are str

For different parts from MMDetection, we have also prepared user guides and advanced guides, please read our [documentation](https://mmyolo.readthedocs.io/zenh_CN/latest/).

-- User Guides


The figure above is contributed by RangeKing@GitHub, thank you very much!

-The figure of the P6 model is in [model_design.md](docs/zh_cn/featured_topics/model_design.md).

+The figure of the P6 model is in [model_design.md](docs/zh_cn/recommended_topics/model_design.md).

@@ -197,16 +197,17 @@ The usage of MMYOLO is almost identical to MMDetection and all tutorials are universal, you also

-

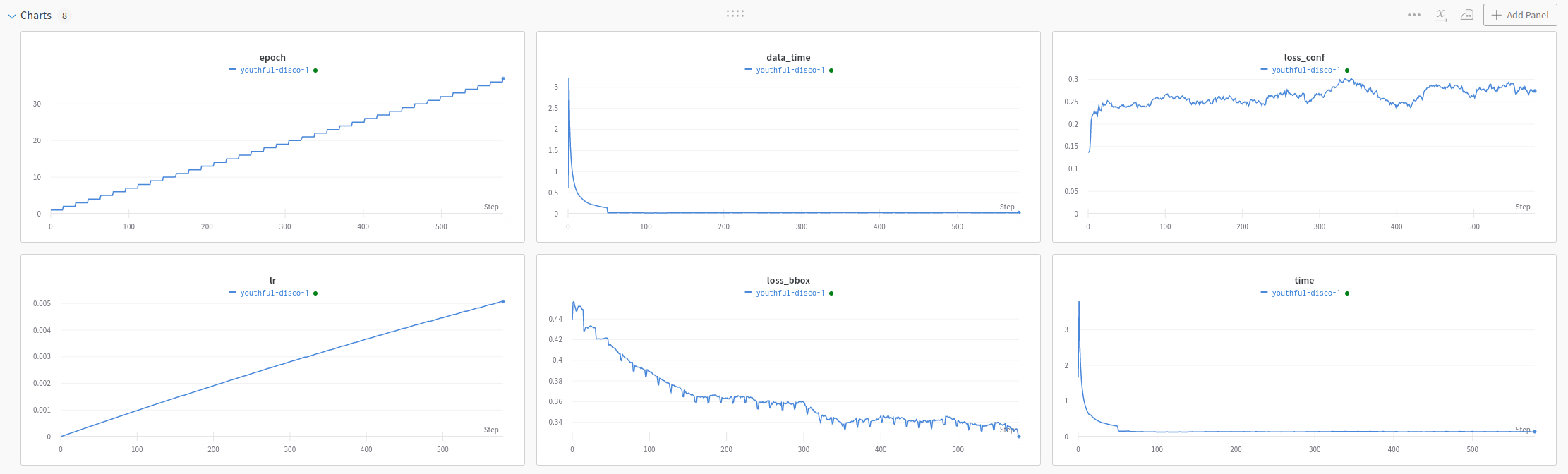

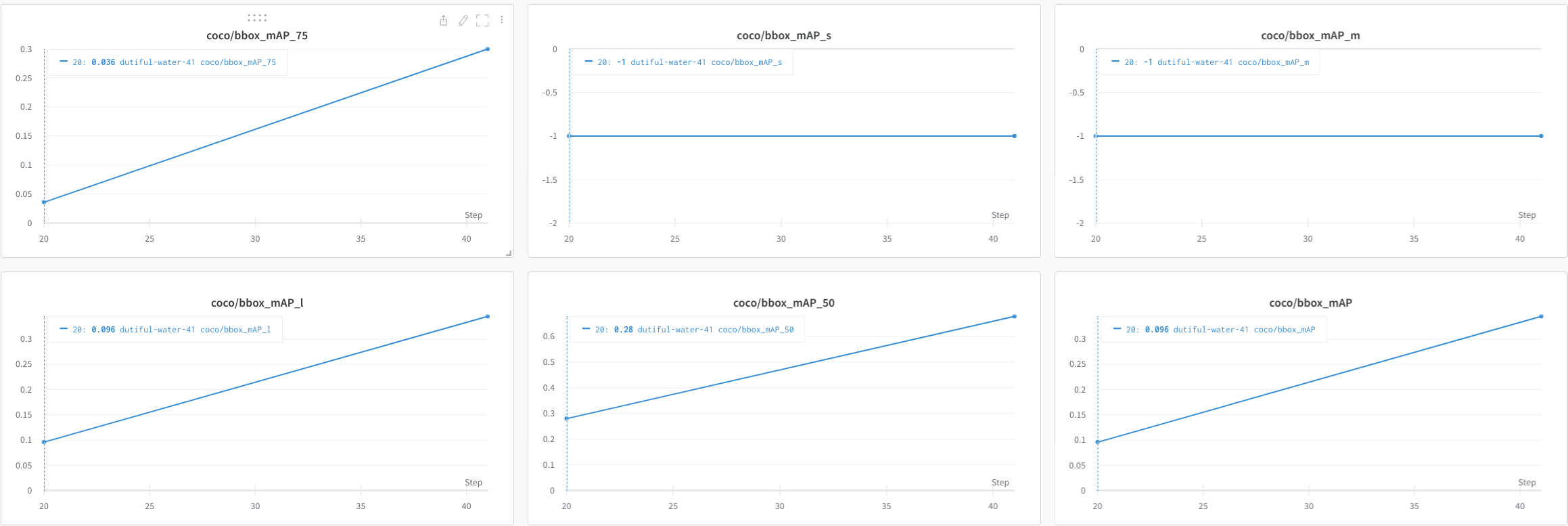

-- Plot the classification and regression loss of some run, and save the figure to a pdf.

-

- ```shell

- mim run mmdet analyze_logs plot_curve \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json \

- --keys loss_cls loss_bbox \

- --legend loss_cls loss_bbox \

- --out losses_yolov5_s.pdf

- ```

-

-


-- Compare the bbox mAP of two runs in the same figure.

-

- ```shell

- mim run mmdet analyze_logs plot_curve \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json \

- yolov5_n-v61_syncbn_fast_8xb16-300e_coco_20220919_090739.log.json \

- --keys bbox_mAP \

- --legend yolov5_s yolov5_n \

- --eval-interval 10 # Note that the evaluation interval must be the same as during training. Otherwise, it will raise an error.

- ```

-

-


-#### Compute the average training speed

-

-```shell

-mim run mmdet analyze_logs cal_train_time \

- ${LOG} \ # path of train log in json format

- [--include-outliers] # include the first value of every epoch when computing the average time

-```

-

-Examples:

-

-```shell

-mim run mmdet analyze_logs cal_train_time \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json

-```

-

-The output is expected to be like the following.

-

-```text

------Analyze train time of yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json-----

-slowest epoch 278, average time is 0.1705 s/iter

-fastest epoch 300, average time is 0.1510 s/iter

-time std over epochs is 0.0026

-average iter time: 0.1556 s/iter

-```

-

-### Print the whole config

-

-`print_config.py` in MMDetection prints the whole config verbatim, expanding all its imports. The command is as following.

-

-```shell

-mim run mmdet print_config \

- ${CONFIG} \ # path of the config file

- [--save-path] \ # save path of whole config, suffixed with .py, .json or .yml

- [--cfg-options ${OPTIONS [OPTIONS...]}] # override some settings in the used config

-```

-

-Examples:

-

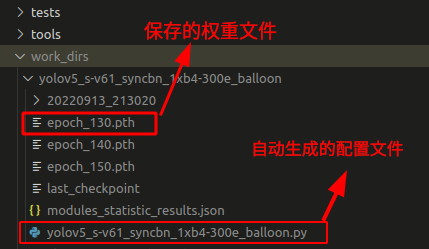

-```shell

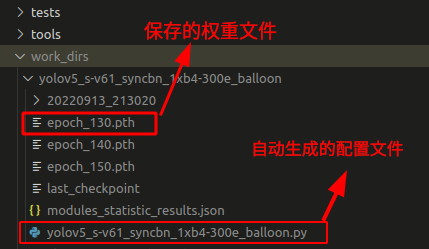

-mim run mmdet print_config \

- configs/yolov5/yolov5_s-v61_syncbn_fast_1xb4-300e_balloon.py \

- --save-path ./work_dirs/yolov5_s-v61_syncbn_fast_1xb4-300e_balloon.py

-```

-

-Running the above command will save the `yolov5_s-v61_syncbn_fast_1xb4-300e_balloon.py` config file with the inheritance relationship expanded to `yolov5_s-v61_syncbn_fast_1xb4-300e_balloon_whole.py` in the `./work_dirs` folder.

-

-## Set the random seed

-

-If you want to set the random seed during training, you can use the following command.

-

-```shell

-python ./tools/train.py \

- ${CONFIG} \ # path of the config file

- --cfg-options randomness.seed=2023 \ # set seed to 2023

- [randomness.diff_rank_seed=True] \ # set different seeds according to global rank

- [randomness.deterministic=True] # set the deterministic option for CUDNN backend

-# [] stands for optional parameters, when actually entering the command line, you do not need to enter []

-```

-

-`randomness` has three parameters that can be set, with the following meanings.

-

-- `randomness.seed=2023`, set the random seed to 2023.

-- `randomness.diff_rank_seed=True`, set different seeds according to global rank. Defaults to False.

-- `randomness.deterministic=True`, set the deterministic option for cuDNN backend, i.e., set `torch.backends.cudnn.deterministic` to True and `torch.backends.cudnn.benchmark` to False. Defaults to False. See https://pytorch.org/docs/stable/notes/randomness.html for more details.

-

-## Specify specific GPUs during training or inference

-

-If you have multiple GPUs, such as 8 GPUs, numbered `0, 1, 2, 3, 4, 5, 6, 7`, GPU 0 will be used by default for training or inference. If you want to specify other GPUs for training or inference, you can use the following commands:

-

-```shell

-CUDA_VISIBLE_DEVICES=5 python ./tools/train.py ${CONFIG} #train

-CUDA_VISIBLE_DEVICES=5 python ./tools/test.py ${CONFIG} ${CHECKPOINT_FILE} #test

-```

-

-If you set `CUDA_VISIBLE_DEVICES` to -1 or a number greater than the maximum GPU number, such as 8, the CPU will be used for training or inference.

-

-If you want to use several of these GPUs to train in parallel, you can use the following command:

-

-```shell

-CUDA_VISIBLE_DEVICES=0,1,2,3 ./tools/dist_train.sh ${CONFIG} ${GPU_NUM}

-```

-

-Here the `GPU_NUM` is 4. In addition, if multiple tasks are trained in parallel on one machine and each task requires multiple GPUs, the PORT of each task need to be set differently to avoid communication conflict, like the following commands:

-

-```shell

-CUDA_VISIBLE_DEVICES=0,1,2,3 PORT=29500 ./tools/dist_train.sh ${CONFIG} 4

-CUDA_VISIBLE_DEVICES=4,5,6,7 PORT=29501 ./tools/dist_train.sh ${CONFIG} 4

-```

diff --git a/docs/en/advanced_guides/index.rst b/docs/en/advanced_guides/index.rst

deleted file mode 100644

index bd72cd2e..00000000

--- a/docs/en/advanced_guides/index.rst

+++ /dev/null

@@ -1,25 +0,0 @@

-Data flow

-************************

-

-.. toctree::

- :maxdepth: 1

-

- data_flow.md

-

-

-How to

-************************

-

-.. toctree::

- :maxdepth: 1

-

- how_to.md

-

-

-Plugins

-************************

-

-.. toctree::

- :maxdepth: 1

-

- plugins.md

diff --git a/docs/en/common_usage/amp_training.md b/docs/en/common_usage/amp_training.md

new file mode 100644

index 00000000..3767114a

--- /dev/null

+++ b/docs/en/common_usage/amp_training.md

@@ -0,0 +1 @@

+# Automatic mixed precision (AMP) training

diff --git a/docs/en/common_usage/freeze_layers.md b/docs/en/common_usage/freeze_layers.md

new file mode 100644

index 00000000..4614f324

--- /dev/null

+++ b/docs/en/common_usage/freeze_layers.md

@@ -0,0 +1,28 @@

+# Freeze layers

+

+## Freeze the weight of backbone

+

+In MMYOLO, you can freeze some `stages` of the backbone network by setting the `frozen_stages` parameter, so that the parameters of these stages are not updated during training.
+Note that `frozen_stages = i` freezes all parameters from the initial `stage` through the `i`-th `stage`. The following example uses `YOLOv5`; the same logic applies to other algorithms.

+

+```python

+_base_ = './yolov5_s-v61_syncbn_8xb16-300e_coco.py'

+

+model = dict(
+    backbone=dict(
+        frozen_stages=1  # The parameters in the first stage and all stages before it are frozen
+    ))

+```

+

+## Freeze the weight of neck

+

+In addition, you can freeze the whole `neck` in MMYOLO by setting the `freeze_all` parameter. The following example uses `YOLOv5`; the same logic applies to other algorithms.

+

+```python

+_base_ = './yolov5_s-v61_syncbn_8xb16-300e_coco.py'

+

+model = dict(
+    neck=dict(
+        freeze_all=True  # If freeze_all=True, all parameters of the neck will be frozen
+    ))

+```
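
The indexing rule for `frozen_stages` can be sketched in plain Python. This only illustrates the rule stated above and is not MMYOLO's implementation: the layer names (`stem`, `stage1`, ...) and the helper `layers_to_freeze` are assumptions made for the example, and a negative value is assumed to mean that nothing is frozen.

```python
from typing import List


def layers_to_freeze(layer_names: List[str], frozen_stages: int) -> List[str]:
    """Return the layers frozen by `frozen_stages = i`.

    Hypothetical helper: index 0 is the initial stage, and every layer
    from index 0 up to and including index `i` is frozen.
    """
    if frozen_stages < 0:
        return []  # assumed convention: a negative value freezes nothing
    return layer_names[:frozen_stages + 1]


# Assumed layer order for a YOLOv5-style backbone.
layers = ['stem', 'stage1', 'stage2', 'stage3', 'stage4']
frozen = layers_to_freeze(layers, 1)
print(frozen)
```

Under these assumptions, `frozen_stages=1` selects the initial layer and the first stage, while the remaining stages stay trainable.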

diff --git a/docs/en/common_usage/mim_usage.md b/docs/en/common_usage/mim_usage.md

new file mode 100644

index 00000000..2752ea5f

--- /dev/null

+++ b/docs/en/common_usage/mim_usage.md

@@ -0,0 +1,89 @@

+# Use mim to run scripts from other OpenMMLab repositories

+

+```{note}

+1. Not all cross-library script calls are supported yet; the remaining ones are being fixed. More examples will be added to this document when the fix is complete.
+2. mAP plotting and average training speed calculation are fixed in the MMDetection dev-3.x branch, which currently must be installed from source for them to run successfully.

+```

+

+## Log Analysis

+

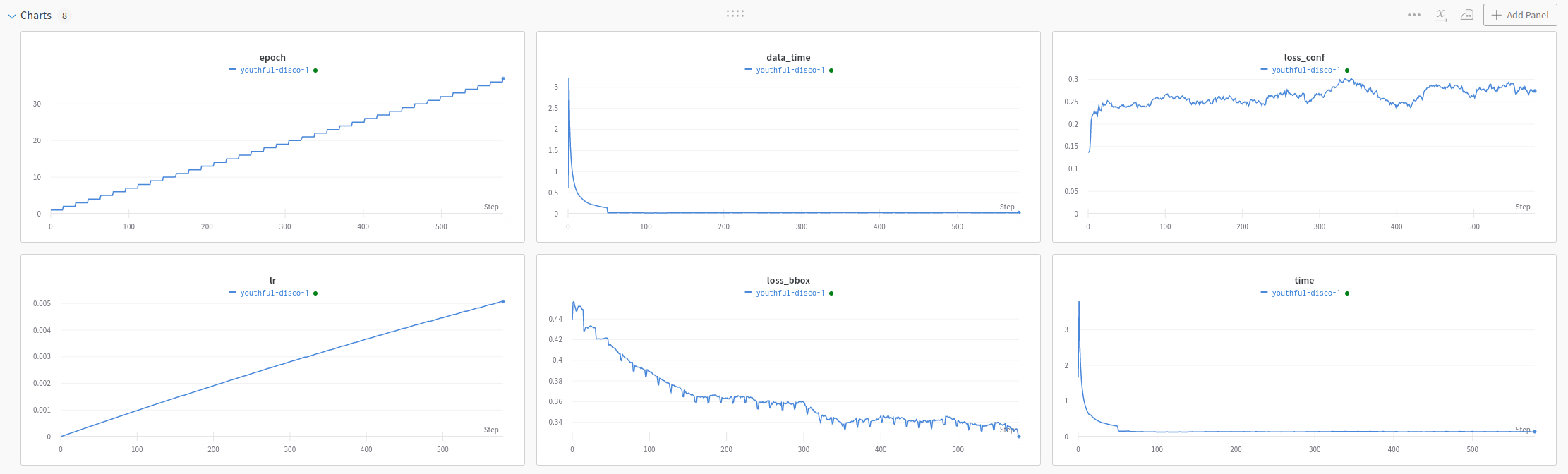

+### Curve plotting

+

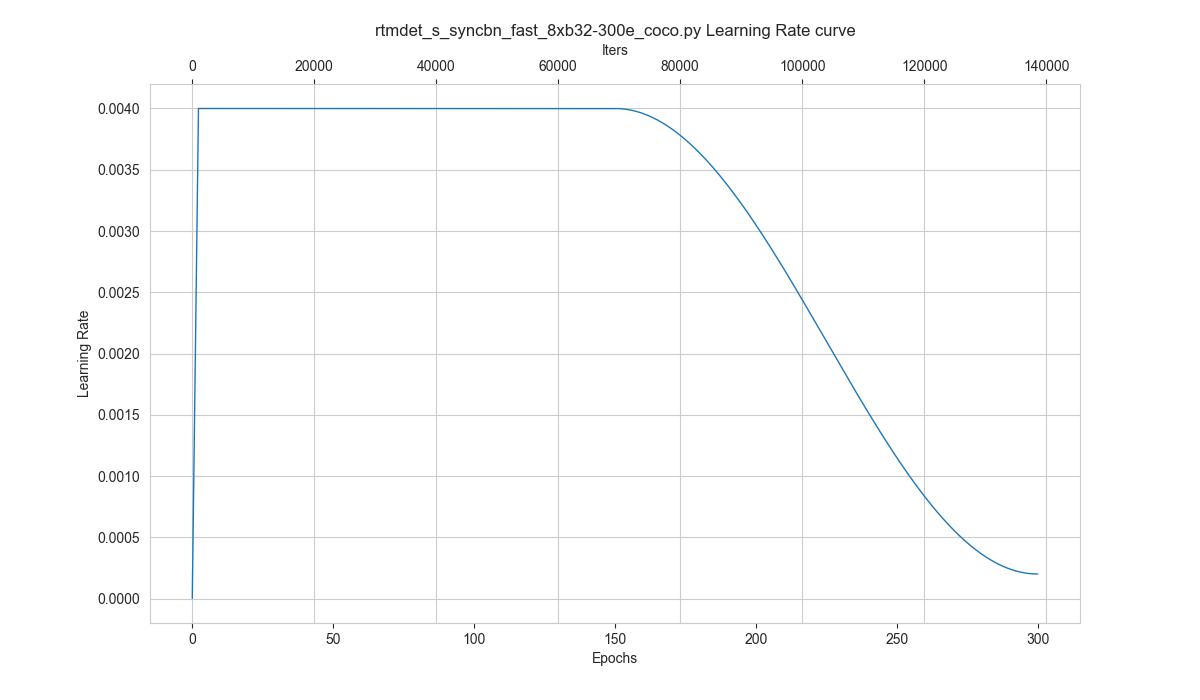

+`tools/analysis_tools/analyze_logs.py` plots loss/mAP curves given a training log file. Run `pip install seaborn` first to install the dependency.

+

+```shell

+mim run mmdet analyze_logs plot_curve \

+ ${LOG} \ # path of train log in json format

+ [--keys ${KEYS}] \ # the metric that you want to plot, default to 'bbox_mAP'

+ [--start-epoch ${START_EPOCH}] \ # the epoch that you want to start, default to 1

+ [--eval-interval ${EVALUATION_INTERVAL}] \ # the evaluation interval when training, default to 1

+ [--title ${TITLE}] \ # title of figure

+ [--legend ${LEGEND}] \ # legend of each plot, default to None

+ [--backend ${BACKEND}] \ # backend of plt, default to None

+ [--style ${STYLE}] \ # style of plt, default to 'dark'

+ [--out ${OUT_FILE}] # the path of output file

+# [] marks optional parameters; do not type the brackets when entering the command

+```

+

+Examples:

+

+- Plot the classification loss of some run.

+

+ ```shell

+ mim run mmdet analyze_logs plot_curve \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json \

+ --keys loss_cls \

+ --legend loss_cls

+ ```

+

+


+- Plot the classification and regression loss of some run, and save the figure to a pdf.

+

+ ```shell

+ mim run mmdet analyze_logs plot_curve \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json \

+ --keys loss_cls loss_bbox \

+ --legend loss_cls loss_bbox \

+ --out losses_yolov5_s.pdf

+ ```

+

+


+- Compare the bbox mAP of two runs in the same figure.

+

+ ```shell

+ mim run mmdet analyze_logs plot_curve \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json \

+ yolov5_n-v61_syncbn_fast_8xb16-300e_coco_20220919_090739.log.json \

+ --keys bbox_mAP \

+ --legend yolov5_s yolov5_n \

+ --eval-interval 10 # Note that the evaluation interval must be the same as during training. Otherwise, it will raise an error.

+ ```

+

+


+### Compute the average training speed

+

+```shell

+mim run mmdet analyze_logs cal_train_time \

+ ${LOG} \ # path of train log in json format

+ [--include-outliers] # include the first value of every epoch when computing the average time

+```

+

+Examples:

+

+```shell

+mim run mmdet analyze_logs cal_train_time \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json

+```

+

+The output should look like the following.

+

+```text

+-----Analyze train time of yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json-----

+slowest epoch 278, average time is 0.1705 s/iter

+fastest epoch 300, average time is 0.1510 s/iter

+time std over epochs is 0.0026

+average iter time: 0.1556 s/iter

+```
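
The statistics in this output can be approximated with a short Python sketch. It is not the MMDetection implementation: it assumes every log line is a JSON object with `epoch` and `time` keys, and the helper `average_iter_time` is hypothetical. As with `--include-outliers`, the first iteration of each epoch is dropped unless requested.

```python
import json
from statistics import mean
from typing import Dict, List


def average_iter_time(log_lines: List[str], include_outliers: bool = False) -> dict:
    """Summarize per-iteration times from JSON log lines.

    Assumes each line is a JSON object with `epoch` and `time` keys
    (an assumption about the log layout, not a documented schema).
    """
    per_epoch: Dict[int, List[float]] = {}
    for line in log_lines:
        record = json.loads(line)
        if 'epoch' not in record or 'time' not in record:
            continue  # skip records without timing information
        per_epoch.setdefault(record['epoch'], []).append(record['time'])

    epoch_means: Dict[int, float] = {}
    all_times: List[float] = []
    for epoch, times in per_epoch.items():
        # Drop the first iteration of each epoch unless outliers are requested.
        kept = times if include_outliers or len(times) == 1 else times[1:]
        epoch_means[epoch] = mean(kept)
        all_times.extend(kept)

    return {
        'slowest_epoch': max(epoch_means, key=epoch_means.get),
        'fastest_epoch': min(epoch_means, key=epoch_means.get),
        'average_iter_time': mean(all_times),
    }


# Synthetic log lines for the demo; real logs come from a training run.
demo_log = [json.dumps({'epoch': e, 'time': t})
            for e, t in [(1, 1.0), (1, 0.25), (1, 0.25), (2, 1.0), (2, 0.5), (2, 0.5)]]
summary = average_iter_time(demo_log)
print(summary)
```

In the synthetic log, the first iteration of each epoch is much slower than the rest, which is why excluding it changes the average noticeably.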

diff --git a/docs/en/common_usage/module_combination.md b/docs/en/common_usage/module_combination.md

new file mode 100644

index 00000000..3f9ffa4c

--- /dev/null

+++ b/docs/en/common_usage/module_combination.md

@@ -0,0 +1 @@

+# Module combination

diff --git a/docs/en/common_usage/multi_necks.md b/docs/en/common_usage/multi_necks.md

new file mode 100644

index 00000000..b6f2bc25

--- /dev/null

+++ b/docs/en/common_usage/multi_necks.md

@@ -0,0 +1,37 @@

+# Apply multiple Necks

+

+If you want to stack multiple Necks, you can directly set the Neck parameters in the config. MMYOLO supports concatenating multiple Necks in the form of `List`. You need to ensure that the output channel of the previous Neck matches the input channel of the next Neck. If you need to adjust the number of channels, you can insert the `mmdet.ChannelMapper` module to align the number of channels between multiple Necks. The specific configuration is as follows:

+

+```python

+_base_ = './yolov5_s-v61_syncbn_8xb16-300e_coco.py'

+

+deepen_factor = _base_.deepen_factor

+widen_factor = _base_.widen_factor

+model = dict(
+    type='YOLODetector',
+    neck=[
+        dict(
+            type='YOLOv5PAFPN',
+            deepen_factor=deepen_factor,
+            widen_factor=widen_factor,
+            in_channels=[256, 512, 1024],
+            # out_channels is scaled by widen_factor, so the actual output
+            # channels of YOLOv5PAFPN equal out_channels * widen_factor
+            out_channels=[256, 512, 1024],
+            num_csp_blocks=3,
+            norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),
+            act_cfg=dict(type='SiLU', inplace=True)),
+        dict(
+            type='mmdet.ChannelMapper',
+            in_channels=[128, 256, 512],
+            out_channels=128,
+        ),
+        dict(
+            type='mmdet.DyHead',
+            in_channels=128,
+            out_channels=256,
+            num_blocks=2,
+            # disable zero_init_offset to follow official implementation
+            zero_init_offset=False)
+    ],
+    # in_channels is scaled by widen_factor, so the YOLOv5HeadModule's
+    # in_channels * widen_factor must equal the last neck's out_channels
+    bbox_head=dict(head_module=dict(in_channels=[512, 512, 512]))
+)

+```
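
The channel bookkeeping in this config can be checked with a small Python sketch. It is an illustration only: `widen_factor = 0.5` is an assumed value for the YOLOv5-s base config, and `scaled_channels` is a hypothetical helper that rounds up to a multiple of 8, which may differ from the rounding MMYOLO applies.

```python
import math
from typing import List


def scaled_channels(channels: List[int], widen_factor: float, divisor: int = 8) -> List[int]:
    """Scale channel counts by widen_factor, rounded up to a multiple of divisor.

    Hypothetical helper; the rounding rule is an assumption.
    """
    return [math.ceil(c * widen_factor / divisor) * divisor for c in channels]


widen_factor = 0.5  # assumed value for the YOLOv5-s base config

# Values copied from the config above.
pafpn_out = scaled_channels([256, 512, 1024], widen_factor)
mapper_in, mapper_out = [128, 256, 512], 128
dyhead_in, dyhead_out = 128, 256
head_in = scaled_channels([512, 512, 512], widen_factor)

# Each neck's output must match the next module's input.
assert pafpn_out == mapper_in
assert mapper_out == dyhead_in
assert head_in == [dyhead_out] * 3
print('channel chain is consistent:', pafpn_out, '->', mapper_out, '->', dyhead_out)
```

If any of the assertions failed, the corresponding `in_channels` or `out_channels` entry in the config would need adjusting before training.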

diff --git a/docs/en/common_usage/output_predictions.md b/docs/en/common_usage/output_predictions.md

new file mode 100644

index 00000000..57192990

--- /dev/null

+++ b/docs/en/common_usage/output_predictions.md

@@ -0,0 +1,40 @@

+# Output prediction results

+

+If you want to save the prediction results as a specific file for offline evaluation, MMYOLO currently supports both json and pkl formats.

+

+```{note}

+The json file only saves `image_id`, `bbox`, `score` and `category_id`. The json file can be read using the json library.
+The pkl file holds more content than the json file, including information such as the file name and size of the predicted image. The pkl file can be read using the pickle library.

+```

+

+## Output into json file

+

+If you want to output the prediction results as a json file, the command is as follows.

+

+```shell

+python tools/test.py {path_to_config} {path_to_checkpoint} --json-prefix {json_prefix}

+```

+

+The argument after `--json-prefix` should be a filename prefix (no need to enter the `.json` suffix) and can also contain a path. For a concrete example:

+

+```shell

+python tools/test.py configs/yolov5/yolov5_s-v61_syncbn_8xb16-300e_coco.py yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth --json-prefix work_dirs/demo/json_demo

+```

+

+Running the above command will output the `json_demo.bbox.json` file in the `work_dirs/demo` folder.

+

+## Output into pkl file

+

+If you want to output the prediction results as a pkl file, the command is as follows.

+

+```shell

+python tools/test.py {path_to_config} {path_to_checkpoint} --out {path_to_output_file}

+```

+

+The argument after `--out` should be a full filename (**must be** with a `.pkl` or `.pickle` suffix) and can also contain a path. For a concrete example:

+

+```shell

+python tools/test.py configs/yolov5/yolov5_s-v61_syncbn_8xb16-300e_coco.py yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth --out work_dirs/demo/pkl_demo.pkl

+```

+

+Running the above command will output the `pkl_demo.pkl` file in the `work_dirs/demo` folder.
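
Reading the two output formats back for offline evaluation might look like the sketch below. The helper `load_predictions` is hypothetical, and the sample records are stand-ins that only use the four json fields named in the note above; the actual content of a pkl file produced by `tools/test.py` is richer and is not reproduced here.

```python
import json
import pickle
import tempfile
from pathlib import Path


def load_predictions(path: Path):
    """Load a prediction file, choosing the reader from the file suffix."""
    if path.suffix == '.json':
        with path.open('r', encoding='utf-8') as f:
            return json.load(f)
    if path.suffix in ('.pkl', '.pickle'):
        with path.open('rb') as f:
            return pickle.load(f)  # only unpickle files you trust
    raise ValueError(f'unsupported prediction file: {path}')


# Stand-in data for the demo; real files come from tools/test.py.
sample = [
    {'image_id': 1, 'bbox': [10.0, 20.0, 30.0, 40.0], 'score': 0.9, 'category_id': 0},
    {'image_id': 1, 'bbox': [5.0, 5.0, 8.0, 8.0], 'score': 0.2, 'category_id': 2},
]

with tempfile.TemporaryDirectory() as tmp:
    json_path = Path(tmp) / 'json_demo.bbox.json'
    pkl_path = Path(tmp) / 'pkl_demo.pkl'
    json_path.write_text(json.dumps(sample), encoding='utf-8')
    pkl_path.write_bytes(pickle.dumps(sample))

    json_preds = load_predictions(json_path)
    pkl_preds = load_predictions(pkl_path)

# Example of offline filtering: keep detections with a score of at least 0.5.
confident = [p for p in json_preds if p['score'] >= 0.5]
print(len(json_preds), len(confident))
```

Dispatching on the suffix mirrors the command-line convention above, where `--out` requires a `.pkl` or `.pickle` suffix and `--json-prefix` produces a `.json` file.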

diff --git a/docs/en/advanced_guides/plugins.md b/docs/en/common_usage/plugins.md

similarity index 100%

rename from docs/en/advanced_guides/plugins.md

rename to docs/en/common_usage/plugins.md

diff --git a/docs/en/common_usage/resume_training.md b/docs/en/common_usage/resume_training.md

new file mode 100644

index 00000000..d33f1d28

--- /dev/null

+++ b/docs/en/common_usage/resume_training.md

@@ -0,0 +1 @@

+# Resume training

diff --git a/docs/en/common_usage/set_random_seed.md b/docs/en/common_usage/set_random_seed.md

new file mode 100644

index 00000000..c45c165f

--- /dev/null

+++ b/docs/en/common_usage/set_random_seed.md

@@ -0,0 +1,18 @@

+# Set the random seed

+

+If you want to set the random seed during training, you can use the following command.

+

+```shell

+python ./tools/train.py \

+ ${CONFIG} \ # path of the config file

+ --cfg-options randomness.seed=2023 \ # set seed to 2023

+ [randomness.diff_rank_seed=True] \ # set different seeds according to global rank

+ [randomness.deterministic=True] # set the deterministic option for cuDNN backend
+# [] marks optional parameters; do not type the brackets when entering the command

+```

+

+`randomness` has three parameters that can be set, with the following meanings.

+

+- `randomness.seed=2023`, set the random seed to 2023.

+- `randomness.diff_rank_seed=True`, set different seeds according to global rank. Defaults to False.

+- `randomness.deterministic=True`, set the deterministic option for cuDNN backend, i.e., set `torch.backends.cudnn.deterministic` to True and `torch.backends.cudnn.benchmark` to False. Defaults to False. See https://pytorch.org/docs/stable/notes/randomness.html for more details.
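
The effect of `seed` and `diff_rank_seed` can be illustrated with Python's standard `random` module. This is a sketch under stated assumptions rather than the framework's code: the helper names are hypothetical, and offsetting the seed by the process rank is an assumed scheme for "different seeds according to global rank".

```python
import random
from typing import List


def effective_seed(seed: int, rank: int, diff_rank_seed: bool = False) -> int:
    """Seed used by one process; adding the rank is an assumed scheme."""
    return seed + rank if diff_rank_seed else seed


def sample_numbers(seed: int, count: int = 3) -> List[float]:
    """Draw a few numbers from an independent, seeded generator."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(count)]


# With diff_rank_seed=False, every rank draws the same sequence.
same = (sample_numbers(effective_seed(2023, rank=0))
        == sample_numbers(effective_seed(2023, rank=1)))

# With diff_rank_seed=True, ranks 0 and 1 use different seeds.
different = (sample_numbers(effective_seed(2023, 0, True))
             != sample_numbers(effective_seed(2023, 1, True)))
print(same, different)
```

A fixed seed makes each process reproducible from run to run; per-rank seeds additionally keep the processes from drawing identical random numbers, for example in data augmentation.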

diff --git a/docs/en/common_usage/set_syncbn.md b/docs/en/common_usage/set_syncbn.md

new file mode 100644

index 00000000..dba33be6

--- /dev/null

+++ b/docs/en/common_usage/set_syncbn.md

@@ -0,0 +1 @@

+# Enabling and disabling SyncBatchNorm

diff --git a/docs/en/common_usage/specify_device.md b/docs/en/common_usage/specify_device.md

new file mode 100644

index 00000000..72c8017e

--- /dev/null

+++ b/docs/en/common_usage/specify_device.md

@@ -0,0 +1,23 @@

+# Specify specific GPUs during training or inference

+

+If you have multiple GPUs, such as 8 GPUs, numbered `0, 1, 2, 3, 4, 5, 6, 7`, GPU 0 will be used by default for training or inference. If you want to specify other GPUs for training or inference, you can use the following commands:

+

+```shell

+CUDA_VISIBLE_DEVICES=5 python ./tools/train.py ${CONFIG} #train

+CUDA_VISIBLE_DEVICES=5 python ./tools/test.py ${CONFIG} ${CHECKPOINT_FILE} #test

+```

+

+If you set `CUDA_VISIBLE_DEVICES` to -1 or a number greater than the maximum GPU number, such as 8, the CPU will be used for training or inference.

+

+If you want to use several of these GPUs to train in parallel, you can use the following command:

+

+```shell

+CUDA_VISIBLE_DEVICES=0,1,2,3 ./tools/dist_train.sh ${CONFIG} ${GPU_NUM}

+```

+

+Here `GPU_NUM` is 4. In addition, if multiple tasks are trained in parallel on one machine and each task requires multiple GPUs, the `PORT` of each task needs to be set differently to avoid communication conflicts, as in the following commands:

+

+```shell

+CUDA_VISIBLE_DEVICES=0,1,2,3 PORT=29500 ./tools/dist_train.sh ${CONFIG} 4

+CUDA_VISIBLE_DEVICES=4,5,6,7 PORT=29501 ./tools/dist_train.sh ${CONFIG} 4

+```
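
How a `CUDA_VISIBLE_DEVICES` value selects GPUs can be modelled in a few lines of Python. The sketch is a simplification: the helper `visible_gpus` is hypothetical, it handles integer indices only (not device UUIDs), and it assumes that parsing stops at the first invalid index, so that an empty result corresponds to the CPU fallback described above.

```python
from typing import List


def visible_gpus(cuda_visible_devices: str, gpu_count: int) -> List[int]:
    """Physical GPU IDs exposed by a CUDA_VISIBLE_DEVICES value.

    Simplified model: integer indices only; parsing stops at the first
    invalid index, so an empty list means no GPU is visible.
    """
    visible: List[int] = []
    for token in cuda_visible_devices.split(','):
        try:
            index = int(token)
        except ValueError:
            break  # non-integer entry: stop here
        if index < 0 or index >= gpu_count:
            break  # out-of-range entry: stop here
        visible.append(index)
    return visible


single = visible_gpus('5', gpu_count=8)        # one GPU, seen by the process as device 0
group = visible_gpus('0,1,2,3', gpu_count=8)   # four GPUs for distributed training
gpu_num = len(group)                           # the value to pass as GPU_NUM
cpu_only = visible_gpus('-1', gpu_count=8)     # nothing visible
print(single, group, gpu_num, cpu_only)
```

The list position is the logical device index inside the process, which is why two jobs launched with `0,1,2,3` and `4,5,6,7` each see their own devices numbered from 0.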

diff --git a/docs/en/deploy/index.rst b/docs/en/deploy/index.rst

deleted file mode 100644

index 6fcfb241..00000000

--- a/docs/en/deploy/index.rst

+++ /dev/null

@@ -1,16 +0,0 @@

-Basic Deployment Guide

-************************

-

-.. toctree::

- :maxdepth: 1

-

- basic_deployment_guide.md

-

-

-Deployment tutorial

-************************

-

-.. toctree::

- :maxdepth: 1

-

- yolov5_deployment.md

diff --git a/docs/en/get_started.md b/docs/en/get_started.md

deleted file mode 100644

index 01c1a716..00000000

--- a/docs/en/get_started.md

+++ /dev/null

@@ -1,280 +0,0 @@

-# Get Started

-

-## Prerequisites

-

-Compatible MMEngine, MMCV and MMDetection versions are shown as below. Please install the correct version to avoid installation issues.

-

-| MMYOLO version | MMDetection version | MMEngine version | MMCV version |

-| :------------: | :----------------------: | :----------------------: | :---------------------: |

-| main | mmdet>=3.0.0rc5, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.3.0 | mmdet>=3.0.0rc5, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.2.0 | mmdet>=3.0.0rc3, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.3 | mmdet>=3.0.0rc3, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.2 | mmdet>=3.0.0rc2, \<3.1.0 | mmengine>=0.3.0, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.1 | mmdet==3.0.0rc1 | mmengine>=0.1.0, \<0.2.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.0 | mmdet==3.0.0rc0 | mmengine>=0.1.0, \<0.2.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-

-In this section, we demonstrate how to prepare an environment with PyTorch.

-

-MMDetection works on Linux, Windows, and macOS. It requires Python 3.7+, CUDA 9.2+, and PyTorch 1.7+.

-

-```{note}

-If you are experienced with PyTorch and have already installed it, just skip this part and jump to the [next section](#installation). Otherwise, you can follow these steps for the preparation.

-```

-

-**Step 0.** Download and install Miniconda from the [official website](https://docs.conda.io/en/latest/miniconda.html).

-

-**Step 1.** Create a conda environment and activate it.

-

-```shell

-conda create --name openmmlab python=3.8 -y

-conda activate openmmlab

-```

-

-**Step 2.** Install PyTorch following [official instructions](https://pytorch.org/get-started/locally/), e.g.

-

-On GPU platforms:

-

-```shell

-conda install pytorch torchvision -c pytorch

-```

-

-On CPU platforms:

-

-```shell

-conda install pytorch torchvision cpuonly -c pytorch

-```

-

-## Installation

-

-### Best Practices

-

-**Step 0.** Install [MMEngine](https://github.com/open-mmlab/mmengine) and [MMCV](https://github.com/open-mmlab/mmcv) using [MIM](https://github.com/open-mmlab/mim).

-

-```shell

-pip install -U openmim

-mim install "mmengine>=0.3.1"

-mim install "mmcv>=2.0.0rc1,<2.1.0"

-mim install "mmdet>=3.0.0rc5,<3.1.0"

-```

-

-**Note:**

-

-a. In MMCV-v2.x, `mmcv-full` is rename to `mmcv`, if you want to install `mmcv` without CUDA ops, you can use `mim install "mmcv-lite>=2.0.0rc1"` to install the lite version.

-

-b. If you would like to use albumentations, we suggest using pip install -r requirements/albu.txt or pip install -U albumentations --no-binary qudida,albumentations. If you simply use pip install albumentations==1.0.1, it will install opencv-python-headless simultaneously (even though you have already installed opencv-python). We recommended checking the environment after installing albumentation to ensure that opencv-python and opencv-python-headless are not installed at the same time, because it might cause unexpected issues if they both installed. Please refer to [official documentation](https://albumentations.ai/docs/getting_started/installation/#note-on-opencv-dependencies) for more details.

-

-**Step 1.** Install MMYOLO.

-

-Case a: If you develop and run mmdet directly, install it from source:

-

-```shell

-git clone https://github.com/open-mmlab/mmyolo.git

-cd mmyolo

-# Install albumentations

-pip install -r requirements/albu.txt

-# Install MMYOLO

-mim install -v -e .

-# "-v" means verbose, or more output

-# "-e" means installing a project in editable mode,

-# thus any local modifications made to the code will take effect without reinstallation.

-```

-

-Case b: If you use MMYOLO as a dependency or third-party package, install it with MIM:

-

-```shell

-mim install "mmyolo"

-```

-

-## Verify the installation

-

-To verify whether MMYOLO is installed correctly, we provide some sample codes to run an inference demo.

-

-**Step 1.** We need to download config and checkpoint files.

-

-```shell

-mim download mmyolo --config yolov5_s-v61_syncbn_fast_8xb16-300e_coco --dest .

-```

-

-The downloading will take several seconds or more, depending on your network environment. When it is done, you will find two files `yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py` and `yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth` in your current folder.

-

-**Step 2.** Verify the inference demo.

-

-Option (a). If you install MMYOLO from source, just run the following command.

-

-```shell

-python demo/image_demo.py demo/demo.jpg \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth

-

-# Optional parameters

-# --out-dir ./output *The detection results are output to the specified directory. When args have action --show, the script do not save results. Default: ./output

-# --device cuda:0 *The computing resources used, including cuda and cpu. Default: cuda:0

-# --show *Display the results on the screen. Default: False

-# --score-thr 0.3 *Confidence threshold. Default: 0.3

-```

-

-You will see a new image on your `output` folder, where bounding boxes are plotted.

-

-Supported input types:

-

-- Single image, include `jpg`, `jpeg`, `png`, `ppm`, `bmp`, `pgm`, `tif`, `tiff`, `webp`.

-- Folder, all image files in the folder will be traversed and the corresponding results will be output.

-- URL, will automatically download from the URL and the corresponding results will be output.

-

-Option (b). If you install MMYOLO with MIM, open your python interpreter and copy&paste the following codes.

-

-```python

-from mmdet.apis import init_detector, inference_detector

-from mmyolo.utils import register_all_modules

-

-register_all_modules()

-config_file = 'yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py'

-checkpoint_file = 'yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth'

-model = init_detector(config_file, checkpoint_file, device='cpu') # or device='cuda:0'

-inference_detector(model, 'demo/demo.jpg')

-```

-

-You will see a list of `DetDataSample`, and the predictions are in the `pred_instance`, indicating the detected bounding boxes, labels, and scores.

-

-### Customize Installation

-

-#### CUDA versions

-

-When installing PyTorch, you need to specify the version of CUDA. If you are not clear on which to choose, follow our recommendations:

-

-- For Ampere-based NVIDIA GPUs, such as GeForce 30 series and NVIDIA A100, CUDA 11 is a must.

-- For older NVIDIA GPUs, CUDA 11 is backward compatible, but CUDA 10.2 offers better compatibility and is more lightweight.

-

-Please make sure the GPU driver satisfies the minimum version requirements. See [this table](https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html#cuda-major-component-versions__table-cuda-toolkit-driver-versions) for more information.

-

-```{note}

-Installing CUDA runtime libraries is enough if you follow our best practices, because no CUDA code will be compiled locally. However, if you hope to compile MMCV from source or develop other CUDA operators, you need to install the complete CUDA toolkit from NVIDIA's [website](https://developer.nvidia.com/cuda-downloads), and its version should match the CUDA version of PyTorch. i.e., the specified version of cudatoolkit in `conda install` command.

-```

-

-#### Install MMEngine without MIM

-

-To install MMEngine with pip instead of MIM, please follow \[MMEngine installation guides\](https://mmengine.readthedocs.io/en/latest/get_started/installation.html).

-

-For example, you can install MMEngine by the following command.

-

-```shell

-pip install "mmengine>=0.3.1"

-```

-

-#### Install MMCV without MIM

-

-MMCV contains C++ and CUDA extensions, thus depending on PyTorch in a complex way. MIM solves such dependencies automatically and makes the installation easier. However, it is not a must.

-

-To install MMCV with pip instead of MIM, please follow [MMCV installation guides](https://mmcv.readthedocs.io/en/2.x/get_started/installation.html). This requires manually specifying a find-url based on the PyTorch version and its CUDA version.

-

-For example, the following command installs MMCV built for PyTorch 1.12.x and CUDA 11.6.

-

-```shell

-pip install "mmcv>=2.0.0rc1" -f https://download.openmmlab.com/mmcv/dist/cu116/torch1.12.0/index.html

-```

-

-#### Install on CPU-only platforms

-

-MMDetection can be built for the CPU-only environment. In CPU mode you can train (requires MMCV version >= `2.0.0rc1`), test, or infer a model.

-

-However, some functionalities are gone in this mode:

-

-- Deformable Convolution

-- Modulated Deformable Convolution

-- ROI pooling

-- Deformable ROI pooling

-- CARAFE

-- SyncBatchNorm

-- CrissCrossAttention

-- MaskedConv2d

-- Temporal Interlace Shift

-- nms_cuda

-- sigmoid_focal_loss_cuda

-- bbox_overlaps

-

-If you try to train/test/infer a model containing the above ops, an error will be raised.

-The following table lists affected algorithms.

-

-| Operator | Model |

-| :-----------------------------------------------------: | :--------------------------------------------------------------------------------------: |

-| Deformable Convolution/Modulated Deformable Convolution | DCN、Guided Anchoring、RepPoints、CentripetalNet、VFNet、CascadeRPN、NAS-FCOS、DetectoRS |

-| MaskedConv2d | Guided Anchoring |

-| CARAFE | CARAFE |

-| SyncBatchNorm | ResNeSt |

-

-#### Install on Google Colab

-

-[Google Colab](https://research.google.com/) usually has PyTorch installed,

-thus we only need to install MMEngine, MMCV, MMDetection, and MMYOLO with the following commands.

-

-**Step 1.** Install [MMEngine](https://github.com/open-mmlab/mmengine) and [MMCV](https://github.com/open-mmlab/mmcv) using [MIM](https://github.com/open-mmlab/mim).

-

-```shell

-!pip3 install openmim

-!mim install "mmengine>=0.3.1"

-!mim install "mmcv>=2.0.0rc1,<2.1.0"

-!mim install "mmdet>=3.0.0rc5,<3.1.0"

-```

-

-**Step 2.** Install MMYOLO from the source.

-

-```shell

-!git clone https://github.com/open-mmlab/mmyolo.git

-%cd mmyolo

-!pip install -e .

-```

-

-**Step 3.** Verification.

-

-```python

-import mmyolo

-print(mmyolo.__version__)

-# Example output: 0.1.0, or an another version.

-```

-

-```{note}

-Within Jupyter, the exclamation mark `!` is used to call external executables and `%cd` is a [magic command](https://ipython.readthedocs.io/en/stable/interactive/magics.html#magic-cd) to change the current working directory of Python.

-```

-

-#### Using MMYOLO with Docker

-

-We provide a [Dockerfile](https://github.com/open-mmlab/mmyolo/blob/main/docker/Dockerfile) to build an image. Ensure that your [docker version](https://docs.docker.com/engine/install/) >=19.03.

-

-Reminder: If you find out that your download speed is very slow, we suggest that you can canceling the comments in the last two lines of `Optional` in the [Dockerfile](https://github.com/open-mmlab/mmyolo/blob/main/docker/Dockerfile#L19-L20) to obtain a rocket like download speed:

-

-```dockerfile

-# (Optional)

-RUN sed -i 's/http:\/\/archive.ubuntu.com\/ubuntu\//http:\/\/mirrors.aliyun.com\/ubuntu\//g' /etc/apt/sources.list && \

- pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

-```

-

-Build Command:

-

-```shell

-# build an image with PyTorch 1.9, CUDA 11.1

-# If you prefer other versions, just modified the Dockerfile

-docker build -t mmyolo docker/

-```

-

-Run it with:

-

-```shell

-export DATA_DIR=/path/to/your/dataset

-docker run --gpus all --shm-size=8g -it -v ${DATA_DIR}:/mmyolo/data mmyolo

-```

-

-### Troubleshooting

-

-If you have some issues during the installation, please first view the [FAQ](notes/faq.md) page.

-You may [open an issue](https://github.com/open-mmlab/mmyolo/issues/new/choose) on GitHub if no solution is found.

-

-### Develop using multiple MMYOLO versions

-

-The training and testing scripts have been modified in `PYTHONPATH` to ensure that the scripts use MMYOLO in the current directory.

-

-To have the default MMYOLO installed in your environment instead of what is currently in use, you can remove the code that appears in the relevant script:

-

-```shell

-PYTHONPATH="$(dirname $0)/..":$PYTHONPATH

-```

diff --git a/docs/en/get_started/15_minutes_instance_segmentation.md b/docs/en/get_started/15_minutes_instance_segmentation.md

new file mode 100644

index 00000000..c66a2f28

--- /dev/null

+++ b/docs/en/get_started/15_minutes_instance_segmentation.md

@@ -0,0 +1,3 @@

+# 15 minutes to get started with MMYOLO instance segmentation

+

+TODO

diff --git a/docs/en/get_started/15_minutes_object_detection.md b/docs/en/get_started/15_minutes_object_detection.md

new file mode 100644

index 00000000..37409e5a

--- /dev/null

+++ b/docs/en/get_started/15_minutes_object_detection.md

@@ -0,0 +1,3 @@

+# 15 minutes to get started with MMYOLO object detection

+

+TODO

diff --git a/docs/en/get_started/15_minutes_rotated_object_detection.md b/docs/en/get_started/15_minutes_rotated_object_detection.md

new file mode 100644

index 00000000..6e04c8c0

--- /dev/null

+++ b/docs/en/get_started/15_minutes_rotated_object_detection.md

@@ -0,0 +1,3 @@

+# 15 minutes to get started with MMYOLO rotated object detection

+

+TODO

diff --git a/docs/en/get_started/article.md b/docs/en/get_started/article.md

new file mode 100644

index 00000000..ea28d491

--- /dev/null

+++ b/docs/en/get_started/article.md

@@ -0,0 +1 @@

+# Resources summary

diff --git a/docs/en/get_started/dependencies.md b/docs/en/get_started/dependencies.md

new file mode 100644

index 00000000..d75275f1

--- /dev/null

+++ b/docs/en/get_started/dependencies.md

@@ -0,0 +1,44 @@

+# Prerequisites

+

+Compatible MMEngine, MMCV, and MMDetection versions are shown below. Please install the correct versions to avoid installation issues.

+

+| MMYOLO version | MMDetection version | MMEngine version | MMCV version |

+| :------------: | :----------------------: | :----------------------: | :---------------------: |

+| main | mmdet>=3.0.0rc5, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+| 0.3.0 | mmdet>=3.0.0rc5, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+| 0.2.0 | mmdet>=3.0.0rc3, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+| 0.1.3 | mmdet>=3.0.0rc3, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+| 0.1.2 | mmdet>=3.0.0rc2, \<3.1.0 | mmengine>=0.3.0, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+| 0.1.1 | mmdet==3.0.0rc1 | mmengine>=0.1.0, \<0.2.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+| 0.1.0 | mmdet==3.0.0rc0 | mmengine>=0.1.0, \<0.2.0 | mmcv>=2.0.0rc0, \<2.1.0 |

+

+In this section, we demonstrate how to prepare an environment with PyTorch.

+

+MMDetection works on Linux, Windows, and macOS. It requires Python 3.7+, CUDA 9.2+, and PyTorch 1.7+.

+

+```{note}

+If you are experienced with PyTorch and have already installed it, skip this part and jump to [Installation](./installation.md). Otherwise, follow these steps to prepare the environment.

+```

+

+**Step 0.** Download and install Miniconda from the [official website](https://docs.conda.io/en/latest/miniconda.html).

+

+**Step 1.** Create a conda environment and activate it.

+

+```shell

+conda create --name openmmlab python=3.8 -y

+conda activate openmmlab

+```

+

+**Step 2.** Install PyTorch following [official instructions](https://pytorch.org/get-started/locally/), e.g.

+

+On GPU platforms:

+

+```shell

+conda install pytorch torchvision -c pytorch

+```

+

+On CPU platforms:

+

+```shell

+conda install pytorch torchvision cpuonly -c pytorch

+```
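Note on the compatibility table in `dependencies.md`: the version ranges can also be checked programmatically. The sketch below is a minimal illustration, not part of MMYOLO. The helper names `parse_version` and `is_compatible` are hypothetical, the ranges are copied from the `main` / `0.3.0` row of the table, and the parser only understands `X.Y.Z` and `X.Y.ZrcN` strings, so it is deliberately simpler than full PEP 440 handling.

```python
import re

# Ranges copied from the "main" / "0.3.0" row of the table: lower <= version < upper.
REQUIREMENTS = {
    "mmdet": ("3.0.0rc5", "3.1.0"),
    "mmengine": ("0.3.1", "1.0.0"),
    "mmcv": ("2.0.0rc0", "2.1.0"),
}


def parse_version(text):
    """Turn 'X.Y.Z' or 'X.Y.ZrcN' into a tuple that sorts in release order."""
    match = re.fullmatch(r"(\d+)\.(\d+)\.(\d+)(?:rc(\d+))?", text)
    if match is None:
        raise ValueError(f"unsupported version string: {text!r}")
    major, minor, patch, rc = match.groups()
    # (0, N) for release candidate N, (1, 0) for a final release,
    # so that 3.0.0rc5 sorts before 3.0.0.
    pre = (0, int(rc)) if rc is not None else (1, 0)
    return (int(major), int(minor), int(patch), pre)


def is_compatible(package, installed):
    """Return True if `installed` falls inside the range listed for `package`."""
    lower, upper = REQUIREMENTS[package]
    return parse_version(lower) <= parse_version(installed) < parse_version(upper)
```

In a real environment the installed version string could be obtained with `importlib.metadata.version("mmdet")` and passed to `is_compatible`. For anything beyond this simple pattern, a full version parser is the safer choice.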

diff --git a/docs/en/get_started/installation.md b/docs/en/get_started/installation.md

new file mode 100644

index 00000000..d73bede7

--- /dev/null

+++ b/docs/en/get_started/installation.md

@@ -0,0 +1,123 @@

+# Installation

+

+## Best Practices

+

+**Step 0.** Install [MMEngine](https://github.com/open-mmlab/mmengine) and [MMCV](https://github.com/open-mmlab/mmcv) using [MIM](https://github.com/open-mmlab/mim).

+

+```shell

+pip install -U openmim

+mim install "mmengine>=0.3.1"

+mim install "mmcv>=2.0.0rc1,<2.1.0"

+mim install "mmdet>=3.0.0rc5,<3.1.0"

+```

+

+**Note:**

+

+a. In MMCV-v2.x, `mmcv-full` is renamed to `mmcv`. If you want to install `mmcv` without CUDA ops, use `mim install "mmcv-lite>=2.0.0rc1"` to install the lite version.

+

+b. If you would like to use albumentations, we suggest using `pip install -r requirements/albu.txt` or `pip install -U albumentations --no-binary qudida,albumentations`. If you simply use `pip install albumentations==1.0.1`, it will also install `opencv-python-headless` (even if you have already installed `opencv-python`). We recommend checking the environment after installing albumentations to ensure that `opencv-python` and `opencv-python-headless` are not installed at the same time, because having both installed might cause unexpected issues. Please refer to the [official documentation](https://albumentations.ai/docs/getting_started/installation/#note-on-opencv-dependencies) for more details.

+

+**Step 1.** Install MMYOLO.

+

+Case a: If you develop and run MMYOLO directly, install it from source:

+

+```shell

+git clone https://github.com/open-mmlab/mmyolo.git

+cd mmyolo

+# Install albumentations

+pip install -r requirements/albu.txt

+# Install MMYOLO

+mim install -v -e .

+# "-v" means verbose, or more output

+# "-e" means installing a project in editable mode,

+# thus any local modifications made to the code will take effect without reinstallation.

+```

+

+Case b: If you use MMYOLO as a dependency or third-party package, install it with MIM:

+

+```shell

+mim install "mmyolo"

+```

+

+## Verify the installation

+

+To verify whether MMYOLO is installed correctly, we provide sample code to run an inference demo.

+

+**Step 1.** We need to download config and checkpoint files.

+

+```shell

+mim download mmyolo --config yolov5_s-v61_syncbn_fast_8xb16-300e_coco --dest .

+```

+

+The download will take several seconds or more, depending on your network environment. When it is done, you will find two files, `yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py` and `yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth`, in your current folder.

+

+**Step 2.** Verify the inference demo.

+

+Option (a). If you install MMYOLO from source, just run the following command.

+

+```shell

+python demo/image_demo.py demo/demo.jpg \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth

+

+# Optional parameters

+# --out-dir ./output *The detection results are output to the specified directory. When --show is set, the script does not save results. Default: ./output

+# --device cuda:0 *The computing resources used, including cuda and cpu. Default: cuda:0

+# --show *Display the results on the screen. Default: False

+# --score-thr 0.3 *Confidence threshold. Default: 0.3

+```

+

+You will see a new image in your `output` folder, where bounding boxes are plotted.

+

+Supported input types:

+

+- Single image, including `jpg`, `jpeg`, `png`, `ppm`, `bmp`, `pgm`, `tif`, `tiff`, `webp`.

+- Folder: all image files in the folder are traversed and the corresponding results are output.

+- URL: the image is downloaded automatically from the URL and the corresponding results are output.

+

+Option (b). If you install MMYOLO with MIM, open your Python interpreter and copy and paste the following code.

+

+```python

+from mmdet.apis import init_detector, inference_detector

+from mmyolo.utils import register_all_modules

+

+register_all_modules()

+config_file = 'yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py'

+checkpoint_file = 'yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth'

+model = init_detector(config_file, checkpoint_file, device='cpu') # or device='cuda:0'

+inference_detector(model, 'demo/demo.jpg')

+```

+

+You will see a `DetDataSample` whose predictions are stored in `pred_instances`, which holds the detected bounding boxes, labels, and scores.

+

+## Using MMYOLO with Docker

+

+We provide a [Dockerfile](https://github.com/open-mmlab/mmyolo/blob/main/docker/Dockerfile) to build an image. Ensure that your [docker version](https://docs.docker.com/engine/install/) is >=19.03.

+

+Reminder: If your download speed is very slow, we suggest uncommenting the last two lines under `Optional` in the [Dockerfile](https://github.com/open-mmlab/mmyolo/blob/main/docker/Dockerfile#L19-L20) to speed up the downloads:

+

+```dockerfile

+# (Optional)

+RUN sed -i 's/http:\/\/archive.ubuntu.com\/ubuntu\//http:\/\/mirrors.aliyun.com\/ubuntu\//g' /etc/apt/sources.list && \

+ pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

+```

+

+Build Command:

+

+```shell

+# Build an image with PyTorch 1.9, CUDA 11.1

+# If you prefer other versions, just modify the Dockerfile

+docker build -t mmyolo docker/

+```

+

+Run it with:

+

+```shell

+export DATA_DIR=/path/to/your/dataset

+docker run --gpus all --shm-size=8g -it -v ${DATA_DIR}:/mmyolo/data mmyolo

+```

+

+## Troubleshooting

+

+If you run into issues during installation, please check the [FAQ](../tutorials/faq.md) page first.

+You may [open an issue](https://github.com/open-mmlab/mmyolo/issues/new/choose) on GitHub if no solution is found.
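Note on the albumentations caveat in `installation.md`: the check for `opencv-python` and `opencv-python-headless` being installed side by side can be scripted. The sketch below is a minimal illustration using only the standard library (`importlib.metadata`, Python 3.8+). The function name `find_opencv_wheels` and its `installed` parameter are hypothetical and exist only to make the logic easy to try out.

```python
from importlib import metadata

# The two distributions that should not be installed at the same time.
OPENCV_WHEELS = ("opencv-python", "opencv-python-headless")


def find_opencv_wheels(installed=None):
    """Return the OpenCV wheels that are present, in a fixed order.

    `installed` is an optional set of distribution names (an assumption made
    for testing); when omitted, the current environment is queried.
    """
    if installed is None:
        installed = set()
        for name in OPENCV_WHEELS:
            try:
                metadata.version(name)
            except metadata.PackageNotFoundError:
                continue
            installed.add(name)
    return [name for name in OPENCV_WHEELS if name in installed]
```

If the returned list contains both names, uninstalling one of the two wheels is probably the first thing to try.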

diff --git a/docs/en/get_started/overview.md b/docs/en/get_started/overview.md

new file mode 100644

index 00000000..07dd0c5c

--- /dev/null

+++ b/docs/en/get_started/overview.md

@@ -0,0 +1 @@

+# Overview

diff --git a/docs/en/index.rst b/docs/en/index.rst

index 123680de..5516b619 100644

--- a/docs/en/index.rst

+++ b/docs/en/index.rst

@@ -1,36 +1,83 @@

Welcome to MMYOLO's documentation!

=======================================

+You can switch between Chinese and English documents in the top-right corner of the layout.

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Get Started

+

+ get_started/overview.md

+ get_started/dependencies.md

+ get_started/installation.md

+ get_started/15_minutes_object_detection.md

+ get_started/15_minutes_rotated_object_detection.md

+ get_started/15_minutes_instance_segmentation.md

+ get_started/article.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Recommended Topics

+

+ recommended_topics/contributing.md

+ recommended_topics/model_design.md

+ recommended_topics/algorithm_descriptions/index.rst

+ recommended_topics/replace_backbone.md

+ recommended_topics/labeling_to_deployment_tutorials.md

+ recommended_topics/visualization.md

+ recommended_topics/deploy/index.rst

+ recommended_topics/troubleshooting_steps.md

+ recommended_topics/industry_examples.md

+ recommended_topics/mm_basics.md

+ recommended_topics/dataset_preparation.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Common Usage

+

+ common_usage/resume_training.md

+ common_usage/syncbn.md

+ common_usage/amp_training.md

+ common_usage/plugins.md

+ common_usage/freeze_layers.md

+ common_usage/output_predictions.md

+ common_usage/set_random_seed.md

+ common_usage/module_combination.md

+ common_usage/mim_usage.md

+ common_usage/multi_necks.md

+ common_usage/specify_device.md

+

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Useful Tools

+

+ useful_tools/browse_coco_json.md

+ useful_tools/browse_dataset.md

+ useful_tools/print_config.md

+ useful_tools/dataset_analysis.md

+ useful_tools/optimize_anchors.md

+ useful_tools/extract_subcoco.md

+ useful_tools/vis_scheduler.md

+ useful_tools/dataset_converters.md

+ useful_tools/download_dataset.md

+ useful_tools/log_analysis.md

+ useful_tools/model_converters.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Basic Tutorials

+

+ tutorials/config.md

+ tutorials/data_flow.md

+ tutorials/custom_installation.md

+ tutorials/faq.md

+

.. toctree::

:maxdepth: 1

- :caption: Get Started

+ :caption: Advanced Tutorials

- overview.md

- get_started.md

-

-.. toctree::

- :maxdepth: 2

- :caption: User Guides

-

- user_guides/index.rst

-

-.. toctree::

- :maxdepth: 2

- :caption: Algorithm Descriptions

-

- algorithm_descriptions/index.rst

-

-.. toctree::

- :maxdepth: 2

- :caption: Advanced Guides

-

- advanced_guides/index.rst

-

-.. toctree::

- :maxdepth: 2

- :caption: Deployment Guides

-

- deploy/index.rst

+ advanced_guides/cross-library_application.md

.. toctree::

:maxdepth: 1

@@ -49,24 +96,17 @@ Welcome to MMYOLO's documentation!

:caption: Notes

notes/changelog.md

- notes/faq.md

notes/compatibility.md

notes/conventions.md

+ notes/code_style.md

+

.. toctree::

- :maxdepth: 2

- :caption: Community

-

- community/contributing.md

- community/code_style.md

-

-.. toctree::

- :caption: Switch Languag

+ :caption: Switch Language

switch_language.md

-

Indices and tables

==================

diff --git a/docs/en/community/code_style.md b/docs/en/notes/code_style.md

similarity index 89%

rename from docs/en/community/code_style.md

rename to docs/en/notes/code_style.md

index 08c534ea..3bc8291e 100644

--- a/docs/en/community/code_style.md

+++ b/docs/en/notes/code_style.md

@@ -1,3 +1,3 @@

-## Code Style

+# Code Style

Coming soon. Please refer to [chinese documentation](https://mmyolo.readthedocs.io/zh_CN/latest/community/code_style.html).

diff --git a/docs/en/overview.md b/docs/en/overview.md

deleted file mode 100644

index 339bcfa3..00000000

--- a/docs/en/overview.md

+++ /dev/null

@@ -1,56 +0,0 @@

-# Overview

-

-This chapter introduces you to the overall framework of MMYOLO and provides links to detailed tutorials.

-

-## What is MMYOLO

-

-

+

+### Compute the average training speed

+

+```shell

+mim run mmdet analyze_logs cal_train_time \

+ ${LOG} \ # path of train log in json format

+ [--include-outliers] # include the first value of every epoch when computing the average time

+```

+

+Examples:

+

+```shell

+mim run mmdet analyze_logs cal_train_time \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json

+```

+

+The output is expected to be like the following.

+

+```text

+-----Analyze train time of yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700.log.json-----

+slowest epoch 278, average time is 0.1705 s/iter

+fastest epoch 300, average time is 0.1510 s/iter

+time std over epochs is 0.0026

+average iter time: 0.1556 s/iter

+```

diff --git a/docs/en/common_usage/module_combination.md b/docs/en/common_usage/module_combination.md

new file mode 100644

index 00000000..3f9ffa4c

--- /dev/null

+++ b/docs/en/common_usage/module_combination.md

@@ -0,0 +1 @@

+# Module combination

diff --git a/docs/en/common_usage/multi_necks.md b/docs/en/common_usage/multi_necks.md

new file mode 100644

index 00000000..b6f2bc25

--- /dev/null

+++ b/docs/en/common_usage/multi_necks.md

@@ -0,0 +1,37 @@

+# Apply multiple Necks

+

+If you want to stack multiple Necks, you can directly set the Neck parameters in the config. MMYOLO supports concatenating multiple Necks in the form of `List`. You need to ensure that the output channel of the previous Neck matches the input channel of the next Neck. If you need to adjust the number of channels, you can insert the `mmdet.ChannelMapper` module to align the number of channels between multiple Necks. The specific configuration is as follows:

+

+```python

+_base_ = './yolov5_s-v61_syncbn_8xb16-300e_coco.py'

+

+deepen_factor = _base_.deepen_factor

+widen_factor = _base_.widen_factor

+model = dict(

+ type='YOLODetector',

+ neck=[

+ dict(

+ type='YOLOv5PAFPN',

+ deepen_factor=deepen_factor,

+ widen_factor=widen_factor,

+ in_channels=[256, 512, 1024],

+ out_channels=[256, 512, 1024], # out_channels is scaled by widen_factor, so the actual YOLOv5PAFPN output channels equal out_channels * widen_factor

+ num_csp_blocks=3,

+ norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),

+ act_cfg=dict(type='SiLU', inplace=True)),

+ dict(

+ type='mmdet.ChannelMapper',

+ in_channels=[128, 256, 512],

+ out_channels=128,

+ ),

+ dict(

+ type='mmdet.DyHead',

+ in_channels=128,

+ out_channels=256,

+ num_blocks=2,

+ # disable zero_init_offset to follow official implementation

+ zero_init_offset=False)

+ ],

+ bbox_head=dict(head_module=dict(in_channels=[512, 512, 512])) # in_channels is scaled by widen_factor, so in_channels * widen_factor of YOLOv5HeadModule must equal the last neck's out_channels

+)

+```
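Note on the channel numbers in the `multi_necks.md` config: they only line up once `widen_factor` is applied. The sketch below works through that arithmetic. It assumes `widen_factor = 0.5` (the value I would expect from the YOLOv5-s base config) and rounds up to a multiple of 8. The helper `scale_channels` is hypothetical and only mirrors that rounding, so it is an illustration rather than MMYOLO's own code.

```python
import math


def scale_channels(channels, widen_factor, divisor=8):
    """Scale each channel count by widen_factor, rounding up to a multiple of divisor."""
    return [math.ceil(c * widen_factor / divisor) * divisor for c in channels]


widen_factor = 0.5  # assumed value for the YOLOv5-s base config

# YOLOv5PAFPN declares out_channels=[256, 512, 1024]; after scaling this is
# what the following mmdet.ChannelMapper receives as in_channels.
pafpn_out = scale_channels([256, 512, 1024], widen_factor)

# The head declares in_channels=[512, 512, 512]; after scaling this should
# match the out_channels=256 of the last neck (mmdet.DyHead).
head_in = scale_channels([512, 512, 512], widen_factor)

print(pafpn_out, head_in)
```

With these assumptions the scaled PAFPN output is `[128, 256, 512]`, which matches the `in_channels` of `mmdet.ChannelMapper`, and the scaled head input is `256` per level, which matches the `out_channels` of `mmdet.DyHead`.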

diff --git a/docs/en/common_usage/output_predictions.md b/docs/en/common_usage/output_predictions.md

new file mode 100644

index 00000000..57192990

--- /dev/null

+++ b/docs/en/common_usage/output_predictions.md

@@ -0,0 +1,40 @@

+# Output prediction results

+

+If you want to save the prediction results as a specific file for offline evaluation, MMYOLO currently supports both json and pkl formats.

+

+```{note}

+The json file only saves `image_id`, `bbox`, `score`, and `category_id`. It can be read using the json library.

+The pkl file holds more content than the json file, including information such as the file name and size of the predicted image. It can be read using the pickle library.

+```

+

+## Output into json file

+

+If you want to output the prediction results as a json file, the command is as follows.

+

+```shell

+python tools/test.py {path_to_config} {path_to_checkpoint} --json-prefix {json_prefix}

+```

+

+The argument after `--json-prefix` should be a filename prefix (no need to enter the `.json` suffix) and can also contain a path. For a concrete example:

+

+```shell

+python tools/test.py configs/yolov5/yolov5_s-v61_syncbn_8xb16-300e_coco.py yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth --json-prefix work_dirs/demo/json_demo

+```

+

+Running the above command will output the `json_demo.bbox.json` file in the `work_dirs/demo` folder.

+

+## Output into pkl file

+

+If you want to output the prediction results as a pkl file, the command is as follows.

+

+```shell

+python tools/test.py {path_to_config} {path_to_checkpoint} --out {path_to_output_file}

+```

+

+The argument after `--out` should be a full filename (**must be** with a `.pkl` or `.pickle` suffix) and can also contain a path. For a concrete example:

+

+```shell

+python tools/test.py configs/yolov5/yolov5_s-v61_syncbn_8xb16-300e_coco.py yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth --out work_dirs/demo/pkl_demo.pkl

+```

+

+Running the above command will output the `pkl_demo.pkl` file in the `work_dirs/demo` folder.
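Note on reading the json output described in `output_predictions.md`: the sketch below shows one way to load and filter it offline. It assumes the file is a list of records carrying the four fields named in the note (`image_id`, `bbox`, `score`, `category_id`). The function names and the `score_thr` default are hypothetical choices for illustration.

```python
import json
from collections import defaultdict


def load_predictions(path):
    """Read a prediction file such as work_dirs/demo/json_demo.bbox.json."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def group_by_image(predictions, score_thr=0.3):
    """Keep records with score >= score_thr and group them by image_id."""
    grouped = defaultdict(list)
    for pred in predictions:
        if pred["score"] >= score_thr:
            grouped[pred["image_id"]].append(pred)
    return dict(grouped)
```

A typical use would be `group_by_image(load_predictions("work_dirs/demo/json_demo.bbox.json"))`, followed by whatever offline evaluation is needed per image.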

diff --git a/docs/en/advanced_guides/plugins.md b/docs/en/common_usage/plugins.md

similarity index 100%

rename from docs/en/advanced_guides/plugins.md

rename to docs/en/common_usage/plugins.md

diff --git a/docs/en/common_usage/resume_training.md b/docs/en/common_usage/resume_training.md

new file mode 100644

index 00000000..d33f1d28

--- /dev/null

+++ b/docs/en/common_usage/resume_training.md

@@ -0,0 +1 @@

+# Resume training

diff --git a/docs/en/common_usage/set_random_seed.md b/docs/en/common_usage/set_random_seed.md

new file mode 100644

index 00000000..c45c165f

--- /dev/null

+++ b/docs/en/common_usage/set_random_seed.md

@@ -0,0 +1,18 @@

+# Set the random seed

+

+If you want to set the random seed during training, you can use the following command.

+

+```shell

+python ./tools/train.py \

+ ${CONFIG} \ # path of the config file

+ --cfg-options randomness.seed=2023 \ # set seed to 2023

+ [randomness.diff_rank_seed=True] \ # set different seeds according to global rank

+ [randomness.deterministic=True] # set the deterministic option for CUDNN backend

+# [] stands for optional parameters, when actually entering the command line, you do not need to enter []

+```

+

+`randomness` has three parameters that can be set, with the following meanings.

+

+- `randomness.seed=2023`, set the random seed to 2023.

+- `randomness.diff_rank_seed=True`, set different seeds according to global rank. Defaults to False.

+- `randomness.deterministic=True`, set the deterministic option for cuDNN backend, i.e., set `torch.backends.cudnn.deterministic` to True and `torch.backends.cudnn.benchmark` to False. Defaults to False. See https://pytorch.org/docs/stable/notes/randomness.html for more details.
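Note on `set_random_seed.md`: the same three options can also live in the config file instead of being passed through `--cfg-options`. The fragment below is a minimal sketch, assuming the config's top-level `randomness` field is the one those command-line overrides modify. The values shown are examples only.

```python
# Config fragment: equivalent to passing randomness.* via --cfg-options.
randomness = dict(
    seed=2023,             # fixed random seed
    diff_rank_seed=False,  # use the same seed on every rank
    deterministic=True,    # request deterministic cuDNN behaviour
)
```

Keeping the seed in the config makes a run easier to reproduce later, while `--cfg-options` is handier for one-off experiments.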

diff --git a/docs/en/common_usage/set_syncbn.md b/docs/en/common_usage/set_syncbn.md

new file mode 100644

index 00000000..dba33be6

--- /dev/null

+++ b/docs/en/common_usage/set_syncbn.md

@@ -0,0 +1 @@

+# Enabling and disabling SyncBatchNorm

diff --git a/docs/en/common_usage/specify_device.md b/docs/en/common_usage/specify_device.md

new file mode 100644

index 00000000..72c8017e

--- /dev/null

+++ b/docs/en/common_usage/specify_device.md

@@ -0,0 +1,23 @@

+# Specify specific GPUs during training or inference

+

+If you have multiple GPUs, such as 8 GPUs, numbered `0, 1, 2, 3, 4, 5, 6, 7`, GPU 0 will be used by default for training or inference. If you want to specify other GPUs for training or inference, you can use the following commands:

+

+```shell

+CUDA_VISIBLE_DEVICES=5 python ./tools/train.py ${CONFIG} #train

+CUDA_VISIBLE_DEVICES=5 python ./tools/test.py ${CONFIG} ${CHECKPOINT_FILE} #test

+```

+

+If you set `CUDA_VISIBLE_DEVICES` to -1 or a number greater than the maximum GPU number, such as 8, the CPU will be used for training or inference.

+

+If you want to use several of these GPUs to train in parallel, you can use the following command:

+

+```shell

+CUDA_VISIBLE_DEVICES=0,1,2,3 ./tools/dist_train.sh ${CONFIG} ${GPU_NUM}

+```

+

+Here the `GPU_NUM` is 4. In addition, if multiple tasks are trained in parallel on one machine and each task requires multiple GPUs, the `PORT` of each task needs to be set to a different value to avoid communication conflicts, as in the following commands:

+

+```shell

+CUDA_VISIBLE_DEVICES=0,1,2,3 PORT=29500 ./tools/dist_train.sh ${CONFIG} 4

+CUDA_VISIBLE_DEVICES=4,5,6,7 PORT=29501 ./tools/dist_train.sh ${CONFIG} 4

+```

diff --git a/docs/en/deploy/index.rst b/docs/en/deploy/index.rst

deleted file mode 100644

index 6fcfb241..00000000

--- a/docs/en/deploy/index.rst

+++ /dev/null

@@ -1,16 +0,0 @@

-Basic Deployment Guide

-************************

-

-.. toctree::

- :maxdepth: 1

-

- basic_deployment_guide.md

-

-

-Deployment tutorial

-************************

-

-.. toctree::

- :maxdepth: 1

-

- yolov5_deployment.md

diff --git a/docs/en/get_started.md b/docs/en/get_started.md

deleted file mode 100644

index 01c1a716..00000000

--- a/docs/en/get_started.md

+++ /dev/null

@@ -1,280 +0,0 @@

-# Get Started

-

-## Prerequisites

-

-Compatible MMEngine, MMCV and MMDetection versions are shown as below. Please install the correct version to avoid installation issues.

-

-| MMYOLO version | MMDetection version | MMEngine version | MMCV version |

-| :------------: | :----------------------: | :----------------------: | :---------------------: |

-| main | mmdet>=3.0.0rc5, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.3.0 | mmdet>=3.0.0rc5, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.2.0 | mmdet>=3.0.0rc3, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.3 | mmdet>=3.0.0rc3, \<3.1.0 | mmengine>=0.3.1, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.2 | mmdet>=3.0.0rc2, \<3.1.0 | mmengine>=0.3.0, \<1.0.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.1 | mmdet==3.0.0rc1 | mmengine>=0.1.0, \<0.2.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-| 0.1.0 | mmdet==3.0.0rc0 | mmengine>=0.1.0, \<0.2.0 | mmcv>=2.0.0rc0, \<2.1.0 |

-

-In this section, we demonstrate how to prepare an environment with PyTorch.

-

-MMDetection works on Linux, Windows, and macOS. It requires Python 3.7+, CUDA 9.2+, and PyTorch 1.7+.

-

-```{note}

-If you are experienced with PyTorch and have already installed it, just skip this part and jump to the [next section](#installation). Otherwise, you can follow these steps for the preparation.

-```

-

-**Step 0.** Download and install Miniconda from the [official website](https://docs.conda.io/en/latest/miniconda.html).

-

-**Step 1.** Create a conda environment and activate it.

-

-```shell

-conda create --name openmmlab python=3.8 -y

-conda activate openmmlab

-```

-

-**Step 2.** Install PyTorch following [official instructions](https://pytorch.org/get-started/locally/), e.g.

-

-On GPU platforms:

-

-```shell

-conda install pytorch torchvision -c pytorch

-```

-

-On CPU platforms:

-

-```shell

-conda install pytorch torchvision cpuonly -c pytorch

-```

-

-## Installation

-

-### Best Practices

-

-**Step 0.** Install [MMEngine](https://github.com/open-mmlab/mmengine) and [MMCV](https://github.com/open-mmlab/mmcv) using [MIM](https://github.com/open-mmlab/mim).

-

-```shell

-pip install -U openmim

-mim install "mmengine>=0.3.1"

-mim install "mmcv>=2.0.0rc1,<2.1.0"

-mim install "mmdet>=3.0.0rc5,<3.1.0"

-```

-

-**Note:**

-

-a. In MMCV-v2.x, `mmcv-full` is rename to `mmcv`, if you want to install `mmcv` without CUDA ops, you can use `mim install "mmcv-lite>=2.0.0rc1"` to install the lite version.

-

-b. If you would like to use albumentations, we suggest using pip install -r requirements/albu.txt or pip install -U albumentations --no-binary qudida,albumentations. If you simply use pip install albumentations==1.0.1, it will install opencv-python-headless simultaneously (even though you have already installed opencv-python). We recommended checking the environment after installing albumentation to ensure that opencv-python and opencv-python-headless are not installed at the same time, because it might cause unexpected issues if they both installed. Please refer to [official documentation](https://albumentations.ai/docs/getting_started/installation/#note-on-opencv-dependencies) for more details.

-

-**Step 1.** Install MMYOLO.

-

-Case a: If you develop and run mmdet directly, install it from source:

-

-```shell

-git clone https://github.com/open-mmlab/mmyolo.git

-cd mmyolo

-# Install albumentations

-pip install -r requirements/albu.txt

-# Install MMYOLO

-mim install -v -e .

-# "-v" means verbose, or more output

-# "-e" means installing a project in editable mode,

-# thus any local modifications made to the code will take effect without reinstallation.

-```

-

-Case b: If you use MMYOLO as a dependency or third-party package, install it with MIM:

-

-```shell

-mim install "mmyolo"

-```

-

-## Verify the installation

-

-To verify whether MMYOLO is installed correctly, we provide some sample codes to run an inference demo.

-

-**Step 1.** We need to download config and checkpoint files.

-

-```shell

-mim download mmyolo --config yolov5_s-v61_syncbn_fast_8xb16-300e_coco --dest .

-```

-

-The downloading will take several seconds or more, depending on your network environment. When it is done, you will find two files `yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py` and `yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth` in your current folder.

-

-**Step 2.** Verify the inference demo.

-

-Option (a). If you install MMYOLO from source, just run the following command.

-

-```shell

-python demo/image_demo.py demo/demo.jpg \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

- yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth

-

-# Optional parameters

-# --out-dir ./output *The detection results are output to the specified directory. When args have action --show, the script do not save results. Default: ./output

-# --device cuda:0 *The computing resources used, including cuda and cpu. Default: cuda:0

-# --show *Display the results on the screen. Default: False

-# --score-thr 0.3 *Confidence threshold. Default: 0.3