diff --git a/.gitignore b/.gitignore

index 74f61b87..195f1940 100644

--- a/.gitignore

+++ b/.gitignore

@@ -115,6 +115,7 @@ data

*.log.json

docs/modelzoo_statistics.md

mmyolo/.mim

+output/

work_dirs

yolov5-6.1/

diff --git a/docs/en/user_guides/useful_tools.md b/docs/en/user_guides/useful_tools.md

index e14ad891..e38217ff 100644

--- a/docs/en/user_guides/useful_tools.md

+++ b/docs/en/user_guides/useful_tools.md

@@ -196,9 +196,54 @@ python tools/analysis_tools/dataset_analysis.py configs/yolov5/voc/yolov5_s-v61_

--out-dir work_dirs/dataset_analysis

```

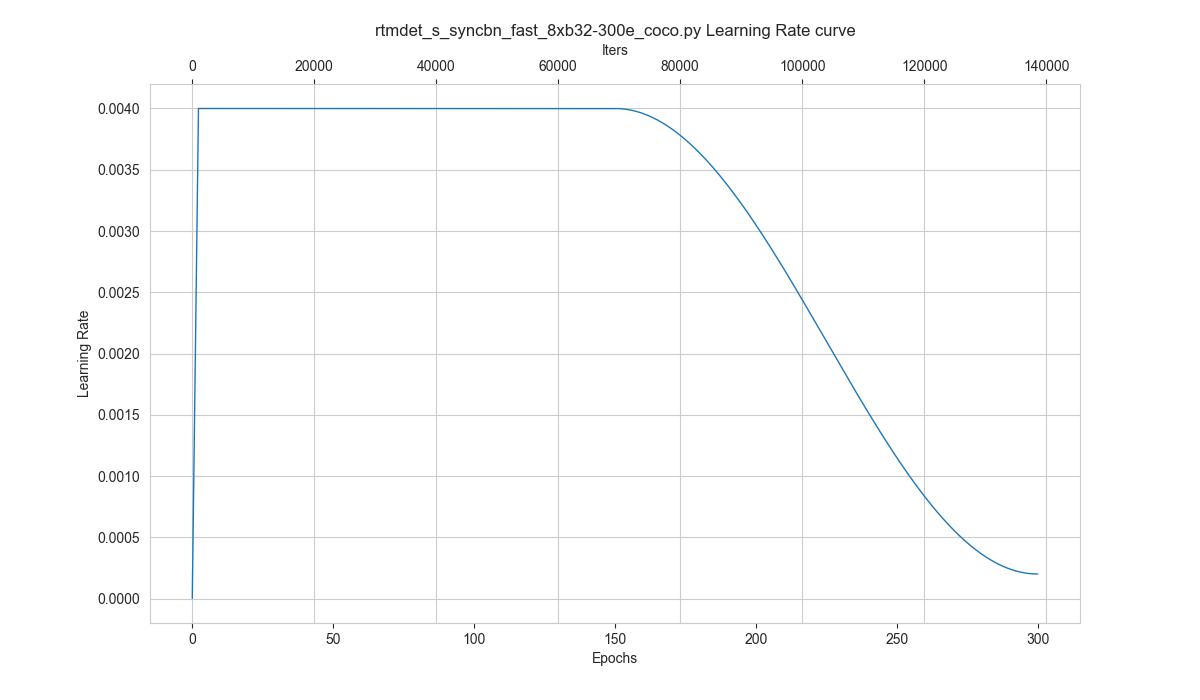

+### Hyper-parameter Scheduler Visualization

+

+`tools/analysis_tools/vis_scheduler` aims to help the user to check the hyper-parameter scheduler of the optimizer(without training), which support the "learning rate", "momentum", and "weight_decay".

+

+```bash

+python tools/analysis_tools/vis_scheduler.py \

+ ${CONFIG_FILE} \

+ [-p, --parameter ${PARAMETER_NAME}] \

+ [-d, --dataset-size ${DATASET_SIZE}] \

+ [-n, --ngpus ${NUM_GPUs}] \

+ [-o, --out-dir ${OUT_DIR}] \

+ [--title ${TITLE}] \

+ [--style ${STYLE}] \

+ [--window-size ${WINDOW_SIZE}] \

+ [--cfg-options]

+```

+

+**Description of all arguments**:

+

+- `config`: The path of a model config file.

+- **`-p, --parameter`**: The param to visualize its change curve, choose from "lr", "momentum" or "wd". Default to use "lr".

+- **`-d, --dataset-size`**: The size of the datasets. If set,`DATASETS.build` will be skipped and `${DATASET_SIZE}` will be used as the size. Default to use the function `DATASETS.build`.

+- **`-n, --ngpus`**: The number of GPUs used in training, default to be 1.

+- **`-o, --out-dir`**: The output path of the curve plot, default not to output.

+- `--title`: Title of figure. If not set, default to be config file name.

+- `--style`: Style of plt. If not set, default to be `whitegrid`.

+- `--window-size`: The shape of the display window. If not specified, it will be set to `12*7`. If used, it must be in the format `'W*H'`.

+- `--cfg-options`: Modifications to the configuration file, refer to [Learn about Configs](../user_guides/config.md).

+

+```{note}

+Loading annotations maybe consume much time, you can directly specify the size of the dataset with `-d, dataset-size` to save time.

+```

+

+You can use the following command to plot the step learning rate schedule used in the config `configs/rtmdet/rtmdet_s_syncbn_fast_8xb32-300e_coco.py`:

+

+```shell

+python tools/analysis_tools/vis_scheduler.py \

+ configs/rtmdet/rtmdet_s_syncbn_fast_8xb32-300e_coco.py \

+ --dataset-size 118287 \

+ --ngpus 8 \

+ --out-dir ./output

+```

+

+

+

## Dataset Conversion

-The folder `tools/data_converters` currently contains `ballon2coco.py` and `yolo2coco.py` two dataset conversion tools.

+The folder `tools/data_converters` currently contains `ballon2coco.py`, `yolo2coco.py`, and `labelme2coco.py` - three dataset conversion tools.

- `ballon2coco.py` converts the `balloon` dataset (this small dataset is for starters only) to COCO format.

diff --git a/docs/zh_cn/user_guides/useful_tools.md b/docs/zh_cn/user_guides/useful_tools.md

index 56243ed2..92b3517b 100644

--- a/docs/zh_cn/user_guides/useful_tools.md

+++ b/docs/zh_cn/user_guides/useful_tools.md

@@ -212,9 +212,54 @@ python tools/analysis_tools/dataset_analysis.py configs/yolov5/voc/yolov5_s-v61_

--out-dir work_dirs/dataset_analysis

```

+### 优化器参数策略可视化

+

+`tools/analysis_tools/vis_scheduler.py` 旨在帮助用户检查优化器的超参数调度器(无需训练),支持学习率(learning rate)、动量(momentum)和权值衰减(weight decay)。

+

+```shell

+python tools/analysis_tools/vis_scheduler.py \

+ ${CONFIG_FILE} \

+ [-p, --parameter ${PARAMETER_NAME}] \

+ [-d, --dataset-size ${DATASET_SIZE}] \

+ [-n, --ngpus ${NUM_GPUs}] \

+ [-o, --out-dir ${OUT_DIR}] \

+ [--title ${TITLE}] \

+ [--style ${STYLE}] \

+ [--window-size ${WINDOW_SIZE}] \

+ [--cfg-options]

+```

+

+**所有参数的说明**:

+

+- `config` : 模型配置文件的路径。

+- **`-p, parameter`**: 可视化参数名,只能为 `["lr", "momentum", "wd"]` 之一, 默认为 `"lr"`.

+- **`-d, --dataset-size`**: 数据集的大小。如果指定,`DATASETS.build` 将被跳过并使用这个数值作为数据集大小,默认使用 `DATASETS.build` 所得数据集的大小。

+- **`-n, --ngpus`**: 使用 GPU 的数量, 默认为1。

+- **`-o, --out-dir`**: 保存的可视化图片的文件夹路径,默认不保存。

+- `--title`: 可视化图片的标题,默认为配置文件名。

+- `--style`: 可视化图片的风格,默认为 `whitegrid`。

+- `--window-size`: 可视化窗口大小,如果没有指定,默认为 `12*7`。如果需要指定,按照格式 `'W*H'`。

+- `--cfg-options`: 对配置文件的修改,参考[学习配置文件](../user_guides/config.md)。

+

+```{note}

+部分数据集在解析标注阶段比较耗时,推荐直接将 `-d, dataset-size` 指定数据集的大小,以节约时间。

+```

+

+你可以使用如下命令来绘制配置文件 `configs/rtmdet/rtmdet_s_syncbn_fast_8xb32-300e_coco.py` 将会使用的学习率变化曲线:

+

+```shell

+python tools/analysis_tools/vis_scheduler.py \

+ configs/rtmdet/rtmdet_s_syncbn_fast_8xb32-300e_coco.py \

+ --dataset-size 118287 \

+ --ngpus 8 \

+ --out-dir ./output

+```

+

+

+

## 数据集转换

-文件夹 `tools/data_converters/` 目前包含 `ballon2coco.py` 和 `yolo2coco.py` 两个数据集转换工具。

+文件夹 `tools/data_converters/` 目前包含 `ballon2coco.py`、`yolo2coco.py` 和 `labelme2coco.py` 三个数据集转换工具。

- `ballon2coco.py` 将 `balloon` 数据集(该小型数据集仅作为入门使用)转换成 COCO 的格式。

diff --git a/tools/analysis_tools/vis_scheduler.py b/tools/analysis_tools/vis_scheduler.py

new file mode 100644

index 00000000..91b8f5fe

--- /dev/null

+++ b/tools/analysis_tools/vis_scheduler.py

@@ -0,0 +1,296 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+"""Hyper-parameter Scheduler Visualization.

+

+This tool aims to help the user to check

+the hyper-parameter scheduler of the optimizer(without training),

+which support the "learning rate", "momentum", and "weight_decay".

+

+Example:

+```shell

+python tools/analysis_tools/vis_scheduler.py \

+ configs/rtmdet/rtmdet_s_syncbn_fast_8xb32-300e_coco.py \

+ --dataset-size 118287 \

+ --ngpus 8 \

+ --out-dir ./output

+```

+Modified from: https://github.com/open-mmlab/mmclassification/blob/1.x/tools/visualizations/vis_scheduler.py # noqa

+"""

+import argparse

+import json

+import os.path as osp

+import re

+from pathlib import Path

+from unittest.mock import MagicMock

+

+import matplotlib.pyplot as plt

+import rich

+import torch.nn as nn

+from mmengine.config import Config, DictAction

+from mmengine.hooks import Hook

+from mmengine.model import BaseModel

+from mmengine.runner import Runner

+from mmengine.utils.path import mkdir_or_exist

+from mmengine.visualization import Visualizer

+from rich.progress import BarColumn, MofNCompleteColumn, Progress, TextColumn

+

+from mmyolo.utils import register_all_modules

+

+

+def parse_args():

+ parser = argparse.ArgumentParser(

+ description='Visualize a hyper-parameter scheduler')

+ parser.add_argument('config', help='config file path')

+ parser.add_argument(

+ '-p',

+ '--parameter',

+ type=str,

+ default='lr',

+ choices=['lr', 'momentum', 'wd'],

+ help='The parameter to visualize its change curve, choose from'

+ '"lr", "wd" and "momentum". Defaults to "lr".')

+ parser.add_argument(

+ '-d',

+ '--dataset-size',

+ type=int,

+ help='The size of the dataset. If specify, `DATASETS.build` will '

+ 'be skipped and use this size as the dataset size.')

+ parser.add_argument(

+ '-n',

+ '--ngpus',

+ type=int,

+ default=1,

+ help='The number of GPUs used in training.')

+ parser.add_argument(

+ '-o', '--out-dir', type=Path, help='Path to output file')

+ parser.add_argument(

+ '--log-level',

+ default='WARNING',

+ help='The log level of the handler and logger. Defaults to '

+ 'WARNING.')

+ parser.add_argument('--title', type=str, help='title of figure')

+ parser.add_argument(

+ '--style', type=str, default='whitegrid', help='style of plt')

+ parser.add_argument('--not-show', default=False, action='store_true')

+ parser.add_argument(

+ '--window-size',

+ default='12*7',

+ help='Size of the window to display images, in format of "$W*$H".')

+ parser.add_argument(

+ '--cfg-options',

+ nargs='+',

+ action=DictAction,

+ help='override some settings in the used config, the key-value pair '

+ 'in xxx=yyy format will be merged into config file. If the value to '

+ 'be overwritten is a list, it should be like key="[a,b]" or key=a,b '

+ 'It also allows nested list/tuple values, e.g. key="[(a,b),(c,d)]" '

+ 'Note that the quotation marks are necessary and that no white space '

+ 'is allowed.')

+ args = parser.parse_args()

+ if args.window_size != '':

+ assert re.match(r'\d+\*\d+', args.window_size), \

+ "'window-size' must be in format 'W*H'."

+

+ return args

+

+

+class SimpleModel(BaseModel):

+ """simple model that do nothing in train_step."""

+

+ def __init__(self):

+ super().__init__()

+ self.data_preprocessor = nn.Identity()

+ self.conv = nn.Conv2d(1, 1, 1)

+

+ def forward(self, inputs, data_samples, mode='tensor'):

+ pass

+

+ def train_step(self, data, optim_wrapper):

+ pass

+

+

+class ParamRecordHook(Hook):

+

+ def __init__(self, by_epoch):

+ super().__init__()

+ self.by_epoch = by_epoch

+ self.lr_list = []

+ self.momentum_list = []

+ self.wd_list = []

+ self.task_id = 0

+ self.progress = Progress(BarColumn(), MofNCompleteColumn(),

+ TextColumn('{task.description}'))

+

+ def before_train(self, runner):

+ if self.by_epoch:

+ total = runner.train_loop.max_epochs

+ self.task_id = self.progress.add_task(

+ 'epochs', start=True, total=total)

+ else:

+ total = runner.train_loop.max_iters

+ self.task_id = self.progress.add_task(

+ 'iters', start=True, total=total)

+ self.progress.start()

+

+ def after_train_epoch(self, runner):

+ if self.by_epoch:

+ self.progress.update(self.task_id, advance=1)

+

+ # TODO: Support multiple schedulers

+ def after_train_iter(self, runner, batch_idx, data_batch, outputs):

+ if not self.by_epoch:

+ self.progress.update(self.task_id, advance=1)

+ self.lr_list.append(runner.optim_wrapper.get_lr()['lr'][0])

+ self.momentum_list.append(

+ runner.optim_wrapper.get_momentum()['momentum'][0])

+ self.wd_list.append(

+ runner.optim_wrapper.param_groups[0]['weight_decay'])

+

+ def after_train(self, runner):

+ self.progress.stop()

+

+

+def plot_curve(lr_list, args, param_name, iters_per_epoch, by_epoch=True):

+ """Plot learning rate vs iter graph."""

+ try:

+ import seaborn as sns

+ sns.set_style(args.style)

+ except ImportError:

+ pass

+

+ wind_w, wind_h = args.window_size.split('*')

+ wind_w, wind_h = int(wind_w), int(wind_h)

+ plt.figure(figsize=(wind_w, wind_h))

+

+ ax: plt.Axes = plt.subplot()

+ ax.plot(lr_list, linewidth=1)

+

+ if by_epoch:

+ ax.xaxis.tick_top()

+ ax.set_xlabel('Iters')

+ ax.xaxis.set_label_position('top')

+ sec_ax = ax.secondary_xaxis(

+ 'bottom',

+ functions=(lambda x: x / iters_per_epoch,

+ lambda y: y * iters_per_epoch))

+ sec_ax.set_xlabel('Epochs')

+ else:

+ plt.xlabel('Iters')

+ plt.ylabel(param_name)

+

+ if args.title is None:

+ plt.title(f'{osp.basename(args.config)} {param_name} curve')

+ else:

+ plt.title(args.title)

+

+

+def simulate_train(data_loader, cfg, by_epoch):

+ model = SimpleModel()

+ param_record_hook = ParamRecordHook(by_epoch=by_epoch)

+ default_hooks = dict(

+ param_scheduler=cfg.default_hooks['param_scheduler'],

+ runtime_info=None,

+ timer=None,

+ logger=None,

+ checkpoint=None,

+ sampler_seed=None,

+ param_record=param_record_hook)

+

+ runner = Runner(

+ model=model,

+ work_dir=cfg.work_dir,

+ train_dataloader=data_loader,

+ train_cfg=cfg.train_cfg,

+ log_level=cfg.log_level,

+ optim_wrapper=cfg.optim_wrapper,

+ param_scheduler=cfg.param_scheduler,

+ default_scope=cfg.default_scope,

+ default_hooks=default_hooks,

+ visualizer=MagicMock(spec=Visualizer),

+ custom_hooks=cfg.get('custom_hooks', None))

+

+ runner.train()

+

+ param_dict = dict(

+ lr=param_record_hook.lr_list,

+ momentum=param_record_hook.momentum_list,

+ wd=param_record_hook.wd_list)

+

+ return param_dict

+

+

+def main():

+ args = parse_args()

+ cfg = Config.fromfile(args.config)

+ if args.cfg_options is not None:

+ cfg.merge_from_dict(args.cfg_options)

+ if cfg.get('work_dir', None) is None:

+ # use config filename as default work_dir if cfg.work_dir is None

+ cfg.work_dir = osp.join('./work_dirs',

+ osp.splitext(osp.basename(args.config))[0])

+

+ cfg.log_level = args.log_level

+ # register all modules in mmyolo into the registries

+ register_all_modules()

+

+ # init logger

+ print('Param_scheduler :')

+ rich.print_json(json.dumps(cfg.param_scheduler))

+

+ # prepare data loader

+ batch_size = cfg.train_dataloader.batch_size * args.ngpus

+

+ if 'by_epoch' in cfg.train_cfg:

+ by_epoch = cfg.train_cfg.get('by_epoch')

+ elif 'type' in cfg.train_cfg:

+ by_epoch = cfg.train_cfg.get('type') == 'EpochBasedTrainLoop'

+ else:

+ raise ValueError('please set `train_cfg`.')

+

+ if args.dataset_size is None and by_epoch:

+ from mmyolo.registry import DATASETS

+ dataset_size = len(DATASETS.build(cfg.train_dataloader.dataset))

+ else:

+ dataset_size = args.dataset_size or batch_size

+

+ class FakeDataloader(list):

+ dataset = MagicMock(metainfo=None)

+

+ data_loader = FakeDataloader(range(dataset_size // batch_size))

+ dataset_info = (

+ f'\nDataset infos:'

+ f'\n - Dataset size: {dataset_size}'

+ f'\n - Batch size per GPU: {cfg.train_dataloader.batch_size}'

+ f'\n - Number of GPUs: {args.ngpus}'

+ f'\n - Total batch size: {batch_size}')

+ if by_epoch:

+ dataset_info += f'\n - Iterations per epoch: {len(data_loader)}'

+ rich.print(dataset_info + '\n')

+

+ # simulation training process

+ param_dict = simulate_train(data_loader, cfg, by_epoch)

+ param_list = param_dict[args.parameter]

+

+ if args.parameter == 'lr':

+ param_name = 'Learning Rate'

+ elif args.parameter == 'momentum':

+ param_name = 'Momentum'

+ else:

+ param_name = 'Weight Decay'

+ plot_curve(param_list, args, param_name, len(data_loader), by_epoch)

+

+ if args.out_dir:

+ # make dir for output

+ mkdir_or_exist(args.out_dir)

+

+ # save the graph

+ out_file = osp.join(

+ args.out_dir, f'{osp.basename(args.config)}-{args.parameter}.jpg')

+ plt.savefig(out_file)

+ print(f'\nThe {param_name} graph is saved at {out_file}')

+

+ if not args.not_show:

+ plt.show()

+

+

+if __name__ == '__main__':

+ main()