Merge branch 'branch_6.0' of https://github.com/sunstricken/yolov5 into branch_6.0

commit 1be6f8f298

.github/workflows

classify

segment

@@ -39,13 +39,18 @@ jobs:
  --insecure \
  --accept 403,429,500,502,999 \
  --exclude-all-private \
- --exclude 'https?://(www\.)?(linkedin\.com|twitter\.com|instagram\.com|kaggle\.com|fonts\.gstatic\.com|url\.com)' \
+ --exclude 'https?://(www\.)?(linkedin\.com|twitter\.com|x\.com|instagram\.com|kaggle\.com|fonts\.gstatic\.com|url\.com)' \
  --exclude-path '**/ci.yaml' \
  --github-token ${{ secrets.GITHUB_TOKEN }} \
  --header "User-Agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.6478.183 Safari/537.36" \
  './**/*.md' \
  './**/*.html' | tee -a $GITHUB_STEP_SUMMARY
+
+ # Raise error if broken links found
+ if ! grep -q "0 Errors" $GITHUB_STEP_SUMMARY; then
+ exit 1
+ fi

  - name: Test Markdown, HTML, YAML, Python and Notebook links with retry
  if: github.event_name == 'workflow_dispatch'
  uses: ultralytics/actions/retry@main

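This hunk, and the matching one below, adds `x.com` to the `--exclude` pattern so that `x.com` links are skipped alongside `twitter.com`. The sketch below runs the old and new patterns against a few URLs to show the effect. It assumes the link checker applies `--exclude` as a regular expression searched anywhere in the URL, with Python's `re.search` standing in for its matcher. The `excluded` helper and the sample URLs are hypothetical.

```python
import re

# --exclude patterns copied from the workflow diff (before and after this commit).
OLD = r"https?://(www\.)?(linkedin\.com|twitter\.com|instagram\.com|kaggle\.com|fonts\.gstatic\.com|url\.com)"
NEW = r"https?://(www\.)?(linkedin\.com|twitter\.com|x\.com|instagram\.com|kaggle\.com|fonts\.gstatic\.com|url\.com)"


def excluded(pattern: str, url: str) -> bool:
    """Hypothetical helper: True if the pattern matches anywhere in the URL (re.search semantics assumed)."""
    return re.search(pattern, url) is not None


# Illustrative URLs only.
samples = [
    "https://x.com/ultralytics",
    "https://twitter.com/ultralytics",
    "https://www.kaggle.com/models/ultralytics/yolov5",
    "https://docs.ultralytics.com/",
]

for url in samples:
    # Bools are printed without a width spec so they render as True/False, not 1/0.
    print(f"{url:50} old={excluded(OLD, url)} new={excluded(NEW, url)}")
```

With these inputs, only the `x.com` URL changes outcome: the old pattern lets it through to be checked, and the new one excludes it. Because the alternation follows `https?://(www\.)?` directly, a host such as `box.com` is not caught by the `x\.com` branch.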
@@ -60,7 +65,7 @@ jobs:
  --insecure \
  --accept 429,999 \
  --exclude-all-private \
- --exclude 'https?://(www\.)?(linkedin\.com|twitter\.com|instagram\.com|kaggle\.com|fonts\.gstatic\.com|url\.com)' \
+ --exclude 'https?://(www\.)?(linkedin\.com|twitter\.com|x\.com|instagram\.com|kaggle\.com|fonts\.gstatic\.com|url\.com)' \
  --exclude-path '**/ci.yaml' \
  --github-token ${{ secrets.GITHUB_TOKEN }} \
  --header "User-Agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.6478.183 Safari/537.36" \

@@ -70,3 +75,8 @@ jobs:
  './**/*.yaml' \
  './**/*.py' \
  './**/*.ipynb' | tee -a $GITHUB_STEP_SUMMARY
+
+ # Raise error if broken links found
+ if ! grep -q "0 Errors" $GITHUB_STEP_SUMMARY; then
+ exit 1
+ fi

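The lines added in the first and third workflow hunks fail the job when the text that `tee -a` appended to `$GITHUB_STEP_SUMMARY` does not contain the literal string `0 Errors`. The sketch below mirrors that `grep -q` test in Python. The function names and summary strings are hypothetical, and the link checker's real report format may differ. The second function is not part of the workflow. It shows that a plain substring test also accepts a count such as `10 Errors`, which a word-boundary match rejects.

```python
import re


def summary_ok_substring(summary: str) -> bool:
    """Mirror of `grep -q "0 Errors"`: a plain substring search."""
    return "0 Errors" in summary


def summary_ok_strict(summary: str) -> bool:
    """Stricter variant (not in the workflow): '0' must not be preceded by another digit."""
    return re.search(r"\b0 Errors\b", summary) is not None


# Hypothetical summary lines; the real checker output may be formatted differently.
clean = "Summary: 120 Total, 120 OK, 0 Errors"
broken = "Summary: 120 Total, 110 OK, 10 Errors"

print(summary_ok_substring(clean), summary_ok_strict(clean))    # True True
print(summary_ok_substring(broken), summary_ok_strict(broken))  # True False
```

In the shell step, a `False` from the check corresponds to `grep -q` returning non-zero, which triggers `exit 1`.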
@@ -1,76 +1,87 @@
- ## Contributing to YOLOv5 🚀
+ <a href="https://www.ultralytics.com/"><img src="https://raw.githubusercontent.com/ultralytics/assets/main/logo/Ultralytics_Logotype_Original.svg" width="320" alt="Ultralytics logo"></a>

- We love your input! We want to make contributing to YOLOv5 as easy and transparent as possible, whether it's:
+ # Contributing to YOLO 🚀
+
+ We value your input and are committed to making contributing to YOLO as easy and transparent as possible. Whether you're:

  - Reporting a bug
- - Discussing the current state of the code
+ - Discussing the current state of the codebase
  - Submitting a fix
  - Proposing a new feature
- - Becoming a maintainer
+ - Interested in becoming a maintainer

- YOLOv5 works so well due to our combined community effort, and for every small improvement you contribute you will be helping push the frontiers of what's possible in AI 😃!
+ Ultralytics YOLO thrives thanks to the collective efforts of our community. Every improvement you contribute helps push the boundaries of what's possible in AI! 😃

- ## Submitting a Pull Request (PR) 🛠️
+ ## 🛠️ Submitting a Pull Request (PR)

- Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:
+ Submitting a PR is straightforward! Here’s an example showing how to update `requirements.txt` in four simple steps:

- ### 1. Select File to Update
+ ### 1. Select the File to Update

- Select `requirements.txt` to update by clicking on it in GitHub.
+ Click on `requirements.txt` in the GitHub repository.

  <p align="center"><img width="800" alt="PR_step1" src="https://user-images.githubusercontent.com/26833433/122260847-08be2600-ced4-11eb-828b-8287ace4136c.png"></p>

  ### 2. Click 'Edit this file'

- The button is in the top-right corner.
+ Find the 'Edit this file' button in the top-right corner.

  <p align="center"><img width="800" alt="PR_step2" src="https://user-images.githubusercontent.com/26833433/122260844-06f46280-ced4-11eb-9eec-b8a24be519ca.png"></p>

- ### 3. Make Changes
+ ### 3. Make Your Changes

- Change the `matplotlib` version from `3.2.2` to `3.3`.
+ For example, update the `matplotlib` version from `3.2.2` to `3.3`.

  <p align="center"><img width="800" alt="PR_step3" src="https://user-images.githubusercontent.com/26833433/122260853-0a87e980-ced4-11eb-9fd2-3650fb6e0842.png"></p>

- ### 4. Preview Changes and Submit PR
+ ### 4. Preview Changes and Submit Your PR

- Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch** for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose changes** button. All done, your PR is now submitted to YOLOv5 for review and approval 😃!
+ Click the **Preview changes** tab to review your updates. At the bottom, select 'Create a new branch for this commit', give your branch a descriptive name like `fix/matplotlib_version`, and click the green **Propose changes** button. Your PR is now submitted for review! 😃

  <p align="center"><img width="800" alt="PR_step4" src="https://user-images.githubusercontent.com/26833433/122260856-0b208000-ced4-11eb-8e8e-77b6151cbcc3.png"></p>

- ### PR recommendations
+ ### PR Best Practices

- To allow your work to be integrated as seamlessly as possible, we advise you to:
+ To ensure your work is integrated smoothly, please:

- - ✅ Verify your PR is **up-to-date** with `ultralytics/yolov5` `master` branch. If your PR is behind you can update your code by clicking the 'Update branch' button or by running `git pull` and `git merge master` locally.
+ - ✅ Make sure your PR is **up-to-date** with the `ultralytics/yolov5` `master` branch. If your branch is behind, update it using the 'Update branch' button or by running `git pull` and `git merge master` locally.

  <p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 15" src="https://user-images.githubusercontent.com/26833433/187295893-50ed9f44-b2c9-4138-a614-de69bd1753d7.png"></p>

- - ✅ Verify all YOLOv5 Continuous Integration (CI) **checks are passing**.
+ - ✅ Ensure all YOLO Continuous Integration (CI) **checks are passing**.

  <p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 03" src="https://user-images.githubusercontent.com/26833433/187296922-545c5498-f64a-4d8c-8300-5fa764360da6.png"></p>

- - ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee
+ - ✅ Limit your changes to the **minimum** required for your bug fix or feature.
+ _"It is not daily increase but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

- ## Submitting a Bug Report 🐛
+ ## 🐛 Submitting a Bug Report

- If you spot a problem with YOLOv5 please submit a Bug Report!
+ If you encounter an issue with YOLO, please submit a bug report!

- For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few short guidelines below to help users provide what we need to get started.
+ To help us investigate, we need to be able to reproduce the problem. Follow these guidelines to provide what we need to get started:

- When asking a question, people will be better able to provide help if you provide **code** that they can easily understand and use to **reproduce** the problem. This is referred to by community members as creating a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/). Your code that reproduces the problem should be:
+ When asking a question or reporting a bug, you'll get better help if you provide **code** that others can easily understand and use to **reproduce** the issue. This is known as a [minimum reproducible example](https://docs.ultralytics.com/help/minimum-reproducible-example/). Your code should be:

- - ✅ **Minimal** – Use as little code as possible that still produces the same problem
- - ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself
- - ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem
+ - ✅ **Minimal** – Use as little code as possible that still produces the issue
+ - ✅ **Complete** – Include all parts needed for someone else to reproduce the problem
+ - ✅ **Reproducible** – Test your code to ensure it actually reproduces the issue

- In addition to the above requirements, for [Ultralytics](https://www.ultralytics.com/) to provide assistance your code should be:
+ Additionally, for [Ultralytics](https://www.ultralytics.com/) to assist you, your code should be:

- - ✅ **Current** – Verify that your code is up-to-date with the current GitHub [master](https://github.com/ultralytics/yolov5/tree/master), and if necessary `git pull` or `git clone` a new copy to ensure your problem has not already been resolved by previous commits.
- - ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this repository. [Ultralytics](https://www.ultralytics.com/) does not provide support for custom code ⚠️.
+ - ✅ **Current** – Ensure your code is up-to-date with the latest [master branch](https://github.com/ultralytics/yolov5/tree/master). Use `git pull` or `git clone` to get the latest version and confirm your issue hasn't already been fixed.
+ - ✅ **Unmodified** – The problem must be reproducible without any custom modifications to the repository. [Ultralytics](https://www.ultralytics.com/) does not provide support for custom code ⚠️.

- If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛 **Bug Report** [template](https://github.com/ultralytics/yolov5/issues/new/choose) and provide a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/) to help us better understand and diagnose your problem.
+ If your issue meets these criteria, please close your current issue and open a new one using the 🐛 **Bug Report** [template](https://github.com/ultralytics/yolov5/issues/new/choose), including your [minimum reproducible example](https://docs.ultralytics.com/help/minimum-reproducible-example/) to help us diagnose your problem.

- ## License
+ ## 📄 License

- By contributing, you agree that your contributions will be licensed under the [AGPL-3.0 license](https://choosealicense.com/licenses/agpl-3.0/)
+ By contributing, you agree that your contributions will be licensed under the [AGPL-3.0 license](https://choosealicense.com/licenses/agpl-3.0/).
+
+ ---
+
+ For more details on contributing, check out the [Ultralytics open-source contributing guide](https://docs.ultralytics.com/help/contributing/), and explore our [Ultralytics blog](https://www.ultralytics.com/blog) for community highlights and best practices.
+
+ We welcome your contributions—thank you for helping make Ultralytics YOLO better! 🚀
+
+ [](https://github.com/ultralytics/ultralytics/graphs/contributors)

README.md

@@ -181,7 +181,7 @@ python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --
  - **[Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)**: Speed up training using multiple GPUs.
  - **[PyTorch Hub Integration](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/)** 🌟 **NEW**: Easily load models using PyTorch Hub.
  - **[Model Export (TFLite, ONNX, CoreML, TensorRT)](https://docs.ultralytics.com/yolov5/tutorials/model_export/)** 🚀: Convert your models to various deployment formats like [ONNX](https://onnx.ai/) or [TensorRT](https://developer.nvidia.com/tensorrt).
- - **[NVIDIA Jetson Deployment](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano/)** 🌟 **NEW**: Deploy YOLOv5 on [NVIDIA Jetson](https://developer.nvidia.com/embedded-computing) devices.
+ - **[NVIDIA Jetson Deployment](https://docs.ultralytics.com/guides/nvidia-jetson/)** 🌟 **NEW**: Deploy YOLOv5 on [NVIDIA Jetson](https://developer.nvidia.com/embedded-computing) devices.
  - **[Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)**: Enhance prediction accuracy with TTA.
  - **[Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)**: Combine multiple models for better performance.
  - **[Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)**: Optimize models for size and speed.

@@ -191,7 +191,7 @@ python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --
  - **[Ultralytics HUB Training](https://www.ultralytics.com/hub)** 🚀 **RECOMMENDED**: Train and deploy YOLO models using Ultralytics HUB.
  - **[ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)**: Integrate with [ClearML](https://clear.ml/) for experiment tracking.
  - **[Neural Magic DeepSparse Integration](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)**: Accelerate inference with DeepSparse.
- - **[Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **NEW**: Log experiments using [Comet ML](https://www.comet.com/).
+ - **[Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **NEW**: Log experiments using [Comet ML](https://www.comet.com/site/).

  </details>


@@ -219,9 +219,9 @@ Our key integrations with leading AI platforms extend the functionality of Ultra
  <img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" alt="Neural Magic logo"></a>
  </div>

- | Ultralytics HUB 🌟 | Weights & Biases | Comet | Neural Magic |
- | :----------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: |
- | Streamline YOLO workflows: Label, train, and deploy effortlessly with [Ultralytics HUB](https://hub.ultralytics.com). Try now! | Track experiments, hyperparameters, and results with [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases/). | Free forever, [Comet ML](https://docs.ultralytics.com/integrations/comet/) lets you save YOLO models, resume training, and interactively visualize predictions. | Run YOLO inference up to 6x faster with [Neural Magic DeepSparse](https://docs.ultralytics.com/integrations/neural-magic/). |
+ | Ultralytics HUB 🌟 | Weights & Biases | Comet | Neural Magic |
+ | :-----------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: |
+ | Streamline YOLO workflows: Label, train, and deploy effortlessly with [Ultralytics HUB](https://hub.ultralytics.com/). Try now! | Track experiments, hyperparameters, and results with [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases/). | Free forever, [Comet ML](https://docs.ultralytics.com/integrations/comet/) lets you save YOLO models, resume training, and interactively visualize predictions. | Run YOLO inference up to 6x faster with [Neural Magic DeepSparse](https://docs.ultralytics.com/integrations/neural-magic/). |

  ## ⭐ Ultralytics HUB


@@ -244,7 +244,7 @@ YOLOv5 is designed for simplicity and ease of use. We prioritize real-world perf
  <summary>Figure Notes</summary>

  - **COCO AP val** denotes the [mean Average Precision (mAP)](https://www.ultralytics.com/glossary/mean-average-precision-map) at [Intersection over Union (IoU)](https://www.ultralytics.com/glossary/intersection-over-union-iou) thresholds from 0.5 to 0.95, measured on the 5,000-image [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) across various inference sizes (256 to 1536 pixels).
- - **GPU Speed** measures the average inference time per image on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p3/) with a batch size of 32.
+ - **GPU Speed** measures the average inference time per image on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p4/) with a batch size of 32.
  - **EfficientDet** data is sourced from the [google/automl repository](https://github.com/google/automl) at batch size 8.
  - **Reproduce** these results using the command: `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`


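The **COCO AP val** note in the hunk above refers to mAP averaged over IoU thresholds from 0.5 to 0.95. As an illustration only, the sketch computes IoU for two axis-aligned boxes, assuming `(x1, y1, x2, y2)` pixel coordinates. It then checks which of the ten COCO-style thresholds (0.50 to 0.95 in steps of 0.05) a single prediction clears. It does not compute average precision itself, and the box coordinates are hypothetical.

```python
from typing import Sequence


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero when the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO-style IoU thresholds: 0.50, 0.55, ..., 0.95 (ten values).
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

pred, gt = (0, 0, 10, 10), (1, 1, 11, 11)  # hypothetical boxes, offset by one pixel
iou = box_iou(pred, gt)                    # 81 / 119
hits = [t for t in THRESHOLDS if iou >= t]
print(f"IoU={iou:.3f}; true positive at {len(hits)} of {len(THRESHOLDS)} thresholds")
# IoU=0.681; true positive at 4 of 10 thresholds
```

A prediction at IoU ≈ 0.68 counts as a match only at the four lowest thresholds. This is why mAP@0.5:0.95 rewards tighter localization than mAP@0.5 alone.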
@@ -273,7 +273,7 @@ This table shows the performance metrics for various YOLOv5 models trained on th

  - All checkpoints were trained for 300 epochs using default settings. Nano (n) and Small (s) models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyperparameters, while Medium (m), Large (l), and Extra-Large (x) models use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).
  - **mAP<sup>val</sup>** values represent single-model, single-scale performance on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/).<br>Reproduce using: `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`
- - **Speed** metrics are averaged over COCO val images using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p3/). Non-Maximum Suppression (NMS) time (~1 ms/image) is not included.<br>Reproduce using: `python val.py --data coco.yaml --img 640 --task speed --batch 1`
+ - **Speed** metrics are averaged over COCO val images using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p4/). Non-Maximum Suppression (NMS) time (~1 ms/image) is not included.<br>Reproduce using: `python val.py --data coco.yaml --img 640 --task speed --batch 1`
  - **TTA** ([Test Time Augmentation](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)) includes reflection and scale augmentations for improved accuracy.<br>Reproduce using: `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

  </details>

@@ -181,7 +181,7 @@ python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --
  - **[多 GPU 训练](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)**:使用多个 GPU 加速训练。
  - **[PyTorch Hub 集成](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/)** 🌟 **新增**:使用 PyTorch Hub 轻松加载模型。
  - **[模型导出 (TFLite, ONNX, CoreML, TensorRT)](https://docs.ultralytics.com/yolov5/tutorials/model_export/)** 🚀:将您的模型转换为各种部署格式,如 [ONNX](https://onnx.ai/) 或 [TensorRT](https://developer.nvidia.com/tensorrt)。
- - **[NVIDIA Jetson 部署](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano/)** 🌟 **新增**:在 [NVIDIA Jetson](https://developer.nvidia.com/embedded-computing) 设备上部署 YOLOv5。
+ - **[NVIDIA Jetson 部署](https://docs.ultralytics.com/guides/nvidia-jetson/)** 🌟 **新增**:在 [NVIDIA Jetson](https://developer.nvidia.com/embedded-computing) 设备上部署 YOLOv5。
  - **[测试时增强 (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)**:使用 TTA 提高预测准确性。
  - **[模型集成](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)**:组合多个模型以获得更好的性能。
  - **[模型剪枝/稀疏化](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)**:优化模型的大小和速度。

@@ -191,7 +191,7 @@ python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --
  - **[Ultralytics HUB 训练](https://www.ultralytics.com/hub)** 🚀 **推荐**:使用 Ultralytics HUB 训练和部署 YOLO 模型。
  - **[ClearML 日志记录](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)**:与 [ClearML](https://clear.ml/) 集成以进行实验跟踪。
  - **[Neural Magic DeepSparse 集成](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)**:使用 DeepSparse 加速推理。
- - **[Comet 日志记录](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **新增**:使用 [Comet ML](https://www.comet.com/) 记录实验。
+ - **[Comet 日志记录](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **新增**:使用 [Comet ML](https://www.comet.com/site/) 记录实验。

  </details>


@@ -219,9 +219,9 @@ python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --
  <img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" alt="Neural Magic logo"></a>
  </div>

- | Ultralytics HUB 🌟 | Weights & Biases | Comet | Neural Magic |
- | :------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------: |
- | 简化 YOLO 工作流程:使用 [Ultralytics HUB](https://hub.ultralytics.com) 轻松标注、训练和部署。立即试用! | 使用 [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases/) 跟踪实验、超参数和结果。 | 永久免费的 [Comet ML](https://docs.ultralytics.com/integrations/comet/) 让您保存 YOLO 模型、恢复训练并交互式地可视化预测。 | 使用 [Neural Magic DeepSparse](https://docs.ultralytics.com/integrations/neural-magic/) 将 YOLO 推理速度提高多达 6 倍。 |
+ | Ultralytics HUB 🌟 | Weights & Biases | Comet | Neural Magic |
+ | :-------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------: |
+ | 简化 YOLO 工作流程:使用 [Ultralytics HUB](https://hub.ultralytics.com/) 轻松标注、训练和部署。立即试用! | 使用 [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases/) 跟踪实验、超参数和结果。 | 永久免费的 [Comet ML](https://docs.ultralytics.com/integrations/comet/) 让您保存 YOLO 模型、恢复训练并交互式地可视化预测。 | 使用 [Neural Magic DeepSparse](https://docs.ultralytics.com/integrations/neural-magic/) 将 YOLO 推理速度提高多达 6 倍。 |

  ## ⭐ Ultralytics HUB


@@ -244,7 +244,7 @@ YOLOv5 的设计旨在简单易用。我们优先考虑实际性能和可访问
  <summary>图表说明</summary>

  - **COCO AP val** 表示在 [交并比 (IoU)](https://www.ultralytics.com/glossary/intersection-over-union-iou) 阈值从 0.5 到 0.95 范围内的[平均精度均值 (mAP)](https://www.ultralytics.com/glossary/mean-average-precision-map),在包含 5000 张图像的 [COCO val2017 数据集](https://docs.ultralytics.com/datasets/detect/coco/)上,使用各种推理尺寸(256 到 1536 像素)测量得出。
- - **GPU Speed** 使用批处理大小为 32 的 [AWS p3.2xlarge V100 实例](https://aws.amazon.com/ec2/instance-types/p3/),测量在 [COCO val2017 数据集](https://docs.ultralytics.com/datasets/detect/coco/)上每张图像的平均推理时间。
+ - **GPU Speed** 使用批处理大小为 32 的 [AWS p3.2xlarge V100 实例](https://aws.amazon.com/ec2/instance-types/p4/),测量在 [COCO val2017 数据集](https://docs.ultralytics.com/datasets/detect/coco/)上每张图像的平均推理时间。
  - **EfficientDet** 数据来源于 [google/automl 仓库](https://github.com/google/automl),批处理大小为 8。
  - **复现**这些结果请使用命令:`python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`


@@ -273,7 +273,7 @@ YOLOv5 的设计旨在简单易用。我们优先考虑实际性能和可访问

  - 所有预训练权重均使用默认设置训练了 300 个周期。Nano (n) 和 Small (s) 模型使用 [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) 超参数,而 Medium (m)、Large (l) 和 Extra-Large (x) 模型使用 [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml)。
  - **mAP<sup>val</sup>** 值表示在 [COCO val2017 数据集](https://docs.ultralytics.com/datasets/detect/coco/)上的单模型、单尺度性能。<br>复现请使用:`python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`
- - **速度**指标是在 [AWS p3.2xlarge V100 实例](https://aws.amazon.com/ec2/instance-types/p3/)上对 COCO val 图像进行平均测量的。不包括非极大值抑制 (NMS) 时间(约 1 毫秒/图像)。<br>复现请使用:`python val.py --data coco.yaml --img 640 --task speed --batch 1`
+ - **速度**指标是在 [AWS p3.2xlarge V100 实例](https://aws.amazon.com/ec2/instance-types/p4/)上对 COCO val 图像进行平均测量的。不包括非极大值抑制 (NMS) 时间(约 1 毫秒/图像)。<br>复现请使用:`python val.py --data coco.yaml --img 640 --task speed --batch 1`
  - **TTA** ([测试时增强](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)) 包括反射和尺度增强以提高准确性。<br>复现请使用:`python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

  </details>

@ -7,19 +7,41 @@

|

|||

},

|

||||

"source": [

|

||||

"<div align=\"center\">\n",

|

||||

" <a href=\"https://ultralytics.com/yolo\" target=\"_blank\">\n",

|

||||

" <img width=\"1024\" src=\"https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png\">\n",

|

||||

" </a>\n",

|

||||

"\n",

|

||||

" <a href=\"https://ultralytics.com/yolov5\" target=\"_blank\">\n",

|

||||

" <img width=\"1024\", src=\"https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png\"></a>\n",

|

||||

" [中文](https://docs.ultralytics.com/zh/) | [한국어](https://docs.ultralytics.com/ko/) | [日本語](https://docs.ultralytics.com/ja/) | [Русский](https://docs.ultralytics.com/ru/) | [Deutsch](https://docs.ultralytics.com/de/) | [Français](https://docs.ultralytics.com/fr/) | [Español](https://docs.ultralytics.com/es/) | [Português](https://docs.ultralytics.com/pt/) | [Türkçe](https://docs.ultralytics.com/tr/) | [Tiếng Việt](https://docs.ultralytics.com/vi/) | [العربية](https://docs.ultralytics.com/ar/)\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"<br>\n",

|

||||

" <a href=\"https://bit.ly/yolov5-paperspace-notebook\"><img src=\"https://assets.paperspace.io/img/gradient-badge.svg\" alt=\"Run on Gradient\"></a>\n",

|

||||

" <a href=\"https://github.com/ultralytics/ultralytics/actions/workflows/ci.yml\"><img src=\"https://github.com/ultralytics/ultralytics/actions/workflows/ci.yml/badge.svg\" alt=\"Ultralytics CI\"></a>\n",

|

||||

" <a href=\"https://console.paperspace.com/github/ultralytics/ultralytics\"><img src=\"https://assets.paperspace.io/img/gradient-badge.svg\" alt=\"Run on Gradient\"/></a>\n",

|

||||

" <a href=\"https://colab.research.google.com/github/ultralytics/yolov5/blob/master/classify/tutorial.ipynb\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"></a>\n",

|

||||

" <a href=\"https://www.kaggle.com/models/ultralytics/yolov5\"><img src=\"https://kaggle.com/static/images/open-in-kaggle.svg\" alt=\"Open In Kaggle\"></a>\n",

|

||||

" <a href=\"https://www.kaggle.com/models/ultralytics/yolo11\"><img src=\"https://kaggle.com/static/images/open-in-kaggle.svg\" alt=\"Open In Kaggle\"></a>\n",

|

||||

"\n",

|

||||

" <a href=\"https://ultralytics.com/discord\"><img alt=\"Discord\" src=\"https://img.shields.io/discord/1089800235347353640?logo=discord&logoColor=white&label=Discord&color=blue\"></a>\n",

|

||||

" <a href=\"https://community.ultralytics.com\"><img alt=\"Ultralytics Forums\" src=\"https://img.shields.io/discourse/users?server=https%3A%2F%2Fcommunity.ultralytics.com&logo=discourse&label=Forums&color=blue\"></a>\n",

|

||||

" <a href=\"https://reddit.com/r/ultralytics\"><img alt=\"Ultralytics Reddit\" src=\"https://img.shields.io/reddit/subreddit-subscribers/ultralytics?style=flat&logo=reddit&logoColor=white&label=Reddit&color=blue\"></a>\n",

|

||||

"</div>\n",

|

||||

"\n",

|

||||

"This **Ultralytics YOLOv5 Classification Colab Notebook** is the easiest way to get started with [YOLO models](https://www.ultralytics.com/yolo)—no installation needed. Built by [Ultralytics](https://www.ultralytics.com/), the creators of YOLO, this notebook walks you through running **state-of-the-art** models directly in your browser.\n",

|

||||

"\n",

|

||||

"Ultralytics models are constantly updated for performance and flexibility. They're **fast**, **accurate**, and **easy to use**, and they excel at [object detection](https://docs.ultralytics.com/tasks/detect/), [tracking](https://docs.ultralytics.com/modes/track/), [instance segmentation](https://docs.ultralytics.com/tasks/segment/), [image classification](https://docs.ultralytics.com/tasks/classify/), and [pose estimation](https://docs.ultralytics.com/tasks/pose/).\n",

|

||||

"\n",

|

||||

"Find detailed documentation in the [Ultralytics Docs](https://docs.ultralytics.com/). Get support via [GitHub Issues](https://github.com/ultralytics/ultralytics/issues/new/choose). Join discussions on [Discord](https://discord.com/invite/ultralytics), [Reddit](https://www.reddit.com/r/ultralytics/), and the [Ultralytics Community Forums](https://community.ultralytics.com/)!\n",

|

||||

"\n",

|

||||

"Request an Enterprise License for commercial use at [Ultralytics Licensing](https://www.ultralytics.com/license).\n",

|

||||

"\n",

|

||||

"<br>\n",

|

||||

"<div>\n",

|

||||

" <a href=\"https://www.youtube.com/watch?v=ZN3nRZT7b24\" target=\"_blank\">\n",

" <img src=\"https://img.youtube.com/vi/ZN3nRZT7b24/maxresdefault.jpg\" alt=\"Ultralytics Video\" width=\"640\" style=\"border-radius: 10px; box-shadow: 0 4px 8px rgba(0, 0, 0, 0.2);\">\n",

" </a>\n",

"\n",

"This <a href=\"https://github.com/ultralytics/yolov5\">YOLOv5</a> 🚀 notebook by <a href=\"https://ultralytics.com\">Ultralytics</a> presents simple train, validate and predict examples to help start your AI adventure.<br>See <a href=\"https://github.com/ultralytics/yolov5/issues/new/choose\">GitHub</a> for community support or <a href=\"https://ultralytics.com/contact\">contact us</a> for professional support.\n",

"\n",

" <p style=\"font-size: 16px; font-family: Arial, sans-serif; color: #555;\">\n",

" <strong>Watch: </strong> How to Train\n",

" <a href=\"https://github.com/ultralytics/ultralytics\">Ultralytics</a>\n",

" <a href=\"https://docs.ultralytics.com/models/yolo11/\">YOLO11</a> Model on Custom Dataset using Google Colab Notebook 🚀\n",

" </p>\n",

"</div>"

]

},

@@ -36,7 +58,6 @@

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

@@ -44,22 +65,6 @@

"id": "wbvMlHd_QwMG",

"outputId": "0806e375-610d-4ec0-c867-763dbb518279"

},

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"YOLOv5 🚀 v7.0-3-g61ebf5e Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"Setup complete ✅ (2 CPUs, 12.7 GB RAM, 22.6/78.2 GB disk)\n"

]

}

],

"source": [

"!git clone https://github.com/ultralytics/yolov5 # clone\n",

"%cd yolov5\n",

@@ -70,6 +75,23 @@

"import utils\n",

"\n",

"display = utils.notebook_init() # checks"

],

"execution_count": 1,

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"YOLOv5 🚀 v7.0-414-g78daef4b Python-3.12.6 torch-2.6.0 CPU\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"Setup complete ✅ (12 CPUs, 24.0 GB RAM, 139.0/460.4 GB disk)\n"

]

}

]

},

{

@@ -109,7 +131,7 @@

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[34m\u001b[1mclassify/predict: \u001b[0mweights=['yolov5s-cls.pt'], source=data/images, data=data/coco128.yaml, imgsz=[224, 224], device=, view_img=False, save_txt=False, nosave=False, augment=False, visualize=False, update=False, project=runs/predict-cls, name=exp, exist_ok=False, half=False, dnn=False, vid_stride=1\n",

"\u001B[34m\u001B[1mclassify/predict: \u001B[0mweights=['yolov5s-cls.pt'], source=data/images, data=data/coco128.yaml, imgsz=[224, 224], device=, view_img=False, save_txt=False, nosave=False, augment=False, visualize=False, update=False, project=runs/predict-cls, name=exp, exist_ok=False, half=False, dnn=False, vid_stride=1\n",

"YOLOv5 🚀 v7.0-3-g61ebf5e Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n",

"\n",

"Downloading https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-cls.pt to yolov5s-cls.pt...\n",

@@ -120,7 +142,7 @@

"image 1/2 /content/yolov5/data/images/bus.jpg: 224x224 minibus 0.39, police van 0.24, amphibious vehicle 0.05, recreational vehicle 0.04, trolleybus 0.03, 3.9ms\n",

"image 2/2 /content/yolov5/data/images/zidane.jpg: 224x224 suit 0.38, bow tie 0.19, bridegroom 0.18, rugby ball 0.04, stage 0.02, 4.6ms\n",

"Speed: 0.3ms pre-process, 4.3ms inference, 1.5ms NMS per image at shape (1, 3, 224, 224)\n",

"Results saved to \u001b[1mruns/predict-cls/exp\u001b[0m\n"

"Results saved to \u001B[1mruns/predict-cls/exp\u001B[0m\n"

]

}

],

@@ -198,7 +220,7 @@

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[34m\u001b[1mclassify/val: \u001b[0mdata=../datasets/imagenet, weights=['yolov5s-cls.pt'], batch_size=128, imgsz=224, device=, workers=8, verbose=True, project=runs/val-cls, name=exp, exist_ok=False, half=True, dnn=False\n",

"\u001B[34m\u001B[1mclassify/val: \u001B[0mdata=../datasets/imagenet, weights=['yolov5s-cls.pt'], batch_size=128, imgsz=224, device=, workers=8, verbose=True, project=runs/val-cls, name=exp, exist_ok=False, half=True, dnn=False\n",

"YOLOv5 🚀 v7.0-3-g61ebf5e Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n",

"\n",

"Fusing layers... \n",

@@ -1207,7 +1229,7 @@

" ear 50 0.48 0.94\n",

" toilet paper 50 0.36 0.68\n",

"Speed: 0.1ms pre-process, 0.3ms inference, 0.0ms post-process per image at shape (1, 3, 224, 224)\n",

"Results saved to \u001b[1mruns/val-cls/exp\u001b[0m\n"

"Results saved to \u001B[1mruns/val-cls/exp\u001B[0m\n"

]

}

],

@@ -1224,9 +1246,7 @@

"source": [

"# 3. Train\n",

"\n",

"<p align=\"\"><a href=\"https://roboflow.com/?ref=ultralytics\"><img width=\"1000\" src=\"https://github.com/ultralytics/assets/raw/main/im/integrations-loop.png\"/></a></p>\n",

"Close the active learning loop by sampling images from your inference conditions with the `roboflow` pip package\n",

"<br><br>\n",

"<p align=\"\"><a href=\"https://ultralytics.com/hub\"><img width=\"1000\" src=\"https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png\"/></a></p>\n",

"\n",

"Train a YOLOv5s Classification model on the [Imagenette](https://image-net.org/) dataset with `--data imagenet`, starting from pretrained `--pretrained yolov5s-cls.pt`.\n",

"\n",

@@ -1235,17 +1255,7 @@

"- **Training Results** are saved to `runs/train-cls/` with incrementing run directories, i.e. `runs/train-cls/exp2`, `runs/train-cls/exp3` etc.\n",

"<br><br>\n",

"\n",

"A **Mosaic Dataloader** is used for training which combines 4 images into 1 mosaic.\n",

"\n",

"## Train on Custom Data with Roboflow 🌟 NEW\n",

"\n",

"[Roboflow](https://roboflow.com/?ref=ultralytics) enables you to easily **organize, label, and prepare** a high quality dataset with your own custom data. Roboflow also makes it easy to establish an active learning pipeline, collaborate with your team on dataset improvement, and integrate directly into your model building workflow with the `roboflow` pip package.\n",

"\n",

"- Custom Training Example: [https://blog.roboflow.com/train-yolov5-classification-custom-data/](https://blog.roboflow.com/train-yolov5-classification-custom-data/?ref=ultralytics)\n",

"- Custom Training Notebook: [](https://colab.research.google.com/drive/1KZiKUAjtARHAfZCXbJRv14-pOnIsBLPV?usp=sharing)\n",

"<br>\n",

"\n",

"<p align=\"\"><a href=\"https://roboflow.com/?ref=ultralytics\"><img width=\"480\" src=\"https://user-images.githubusercontent.com/26833433/202802162-92e60571-ab58-4409-948d-b31fddcd3c6f.png\"/></a></p>Label images lightning fast (including with model-assisted labeling)"

"A **Mosaic Dataloader** is used for training which combines 4 images into 1 mosaic."

]

},

{

@@ -1289,24 +1299,24 @@

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[34m\u001b[1mclassify/train: \u001b[0mmodel=yolov5s-cls.pt, data=imagenette160, epochs=5, batch_size=64, imgsz=224, nosave=False, cache=ram, device=, workers=8, project=runs/train-cls, name=exp, exist_ok=False, pretrained=True, optimizer=Adam, lr0=0.001, decay=5e-05, label_smoothing=0.1, cutoff=None, dropout=None, verbose=False, seed=0, local_rank=-1\n",

"\u001b[34m\u001b[1mgithub: \u001b[0mup to date with https://github.com/ultralytics/yolov5 ✅\n",

"\u001B[34m\u001B[1mclassify/train: \u001B[0mmodel=yolov5s-cls.pt, data=imagenette160, epochs=5, batch_size=64, imgsz=224, nosave=False, cache=ram, device=, workers=8, project=runs/train-cls, name=exp, exist_ok=False, pretrained=True, optimizer=Adam, lr0=0.001, decay=5e-05, label_smoothing=0.1, cutoff=None, dropout=None, verbose=False, seed=0, local_rank=-1\n",

"\u001B[34m\u001B[1mgithub: \u001B[0mup to date with https://github.com/ultralytics/yolov5 ✅\n",

"YOLOv5 🚀 v7.0-3-g61ebf5e Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n",

"\n",

"\u001b[34m\u001b[1mTensorBoard: \u001b[0mStart with 'tensorboard --logdir runs/train-cls', view at http://localhost:6006/\n",

"\u001B[34m\u001B[1mTensorBoard: \u001B[0mStart with 'tensorboard --logdir runs/train-cls', view at http://localhost:6006/\n",

"\n",

"Dataset not found ⚠️, missing path /content/datasets/imagenette160, attempting download...\n",

"Downloading https://github.com/ultralytics/assets/releases/download/v0.0.0/imagenette160.zip to /content/datasets/imagenette160.zip...\n",

"100% 103M/103M [00:00<00:00, 347MB/s] \n",

"Unzipping /content/datasets/imagenette160.zip...\n",

"Dataset download success ✅ (3.3s), saved to \u001b[1m/content/datasets/imagenette160\u001b[0m\n",

"Dataset download success ✅ (3.3s), saved to \u001B[1m/content/datasets/imagenette160\u001B[0m\n",

"\n",

"\u001b[34m\u001b[1malbumentations: \u001b[0mRandomResizedCrop(p=1.0, height=224, width=224, scale=(0.08, 1.0), ratio=(0.75, 1.3333333333333333), interpolation=1), HorizontalFlip(p=0.5), ColorJitter(p=0.5, brightness=[0.6, 1.4], contrast=[0.6, 1.4], saturation=[0.6, 1.4], hue=[0, 0]), Normalize(p=1.0, mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225), max_pixel_value=255.0), ToTensorV2(always_apply=True, p=1.0, transpose_mask=False)\n",

"\u001B[34m\u001B[1malbumentations: \u001B[0mRandomResizedCrop(p=1.0, height=224, width=224, scale=(0.08, 1.0), ratio=(0.75, 1.3333333333333333), interpolation=1), HorizontalFlip(p=0.5), ColorJitter(p=0.5, brightness=[0.6, 1.4], contrast=[0.6, 1.4], saturation=[0.6, 1.4], hue=[0, 0]), Normalize(p=1.0, mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225), max_pixel_value=255.0), ToTensorV2(always_apply=True, p=1.0, transpose_mask=False)\n",

"Model summary: 149 layers, 4185290 parameters, 4185290 gradients, 10.5 GFLOPs\n",

"\u001b[34m\u001b[1moptimizer:\u001b[0m Adam(lr=0.001) with parameter groups 32 weight(decay=0.0), 33 weight(decay=5e-05), 33 bias\n",

"\u001B[34m\u001B[1moptimizer:\u001B[0m Adam(lr=0.001) with parameter groups 32 weight(decay=0.0), 33 weight(decay=5e-05), 33 bias\n",

"Image sizes 224 train, 224 test\n",

"Using 1 dataloader workers\n",

"Logging results to \u001b[1mruns/train-cls/exp\u001b[0m\n",

"Logging results to \u001B[1mruns/train-cls/exp\u001B[0m\n",

"Starting yolov5s-cls.pt training on imagenette160 dataset with 10 classes for 5 epochs...\n",

"\n",

" Epoch GPU_mem train_loss val_loss top1_acc top5_acc\n",

@@ -1317,7 +1327,7 @@

" 5/5 1.73G 0.724 0.634 0.959 0.997: 100% 148/148 [00:37<00:00, 3.94it/s]\n",

"\n",

"Training complete (0.052 hours)\n",

"Results saved to \u001b[1mruns/train-cls/exp\u001b[0m\n",

"Results saved to \u001B[1mruns/train-cls/exp\u001B[0m\n",

"Predict: python classify/predict.py --weights runs/train-cls/exp/weights/best.pt --source im.jpg\n",

"Validate: python classify/val.py --weights runs/train-cls/exp/weights/best.pt --data /content/datasets/imagenette160\n",

"Export: python export.py --weights runs/train-cls/exp/weights/best.pt --include onnx\n",

@@ -1067,7 +1067,7 @@ def add_tflite_metadata(file, metadata, num_outputs):

Note:

TFLite metadata can include information such as model name, version, author, and other relevant details.

For more details on the structure of the metadata, refer to TensorFlow Lite

[metadata guidelines](https://www.tensorflow.org/lite/models/convert/metadata).

[metadata guidelines](https://ai.google.dev/edge/litert/models/metadata).

"""

with contextlib.suppress(ImportError):

# check_requirements('tflite_support')

@@ -47,7 +47,7 @@ def _create(name, pretrained=True, channels=3, classes=80, autoshape=True, verbo

Notes:

For more information on model loading and customization, visit the

[YOLOv5 PyTorch Hub Documentation](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading).

[YOLOv5 PyTorch Hub Documentation](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/).

"""

from pathlib import Path

@@ -210,7 +210,7 @@ def yolov5s(pretrained=True, channels=3, classes=80, autoshape=True, _verbose=Tr

Notes:

For more details on model loading and customization, visit

the [YOLOv5 PyTorch Hub Documentation](https://pytorch.org/hub/ultralytics_yolov5).

the [YOLOv5 PyTorch Hub Documentation](https://pytorch.org/hub/ultralytics_yolov5/).

"""

return _create("yolov5s", pretrained, channels, classes, autoshape, _verbose, device)

@@ -438,7 +438,7 @@ def yolov5l6(pretrained=True, channels=3, classes=80, autoshape=True, _verbose=T

```

Note:

Refer to [PyTorch Hub Documentation](https://pytorch.org/hub/ultralytics_yolov5) for additional usage instructions.

Refer to [PyTorch Hub Documentation](https://pytorch.org/hub/ultralytics_yolov5/) for additional usage instructions.

"""

return _create("yolov5l6", pretrained, channels, classes, autoshape, _verbose, device)

@@ -7,19 +7,41 @@

},

"source": [

"<div align=\"center\">\n",

" <a href=\"https://ultralytics.com/yolo\" target=\"_blank\">\n",

" <img width=\"1024\" src=\"https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png\">\n",

" </a>\n",

"\n",

" <a href=\"https://ultralytics.com/yolov5\" target=\"_blank\">\n",

" <img width=\"1024\", src=\"https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png\"></a>\n",

" [中文](https://docs.ultralytics.com/zh/) | [한국어](https://docs.ultralytics.com/ko/) | [日本語](https://docs.ultralytics.com/ja/) | [Русский](https://docs.ultralytics.com/ru/) | [Deutsch](https://docs.ultralytics.com/de/) | [Français](https://docs.ultralytics.com/fr/) | [Español](https://docs.ultralytics.com/es/) | [Português](https://docs.ultralytics.com/pt/) | [Türkçe](https://docs.ultralytics.com/tr/) | [Tiếng Việt](https://docs.ultralytics.com/vi/) | [العربية](https://docs.ultralytics.com/ar/)\n",

"\n",

"\n",

"<br>\n",

" <a href=\"https://bit.ly/yolov5-paperspace-notebook\"><img src=\"https://assets.paperspace.io/img/gradient-badge.svg\" alt=\"Run on Gradient\"></a>\n",

" <a href=\"https://github.com/ultralytics/ultralytics/actions/workflows/ci.yml\"><img src=\"https://github.com/ultralytics/ultralytics/actions/workflows/ci.yml/badge.svg\" alt=\"Ultralytics CI\"></a>\n",

" <a href=\"https://console.paperspace.com/github/ultralytics/ultralytics\"><img src=\"https://assets.paperspace.io/img/gradient-badge.svg\" alt=\"Run on Gradient\"/></a>\n",

" <a href=\"https://colab.research.google.com/github/ultralytics/yolov5/blob/master/segment/tutorial.ipynb\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"></a>\n",

" <a href=\"https://www.kaggle.com/models/ultralytics/yolov5\"><img src=\"https://kaggle.com/static/images/open-in-kaggle.svg\" alt=\"Open In Kaggle\"></a>\n",

" <a href=\"https://www.kaggle.com/models/ultralytics/yolo11\"><img src=\"https://kaggle.com/static/images/open-in-kaggle.svg\" alt=\"Open In Kaggle\"></a>\n",

"\n",

" <a href=\"https://ultralytics.com/discord\"><img alt=\"Discord\" src=\"https://img.shields.io/discord/1089800235347353640?logo=discord&logoColor=white&label=Discord&color=blue\"></a>\n",

" <a href=\"https://community.ultralytics.com\"><img alt=\"Ultralytics Forums\" src=\"https://img.shields.io/discourse/users?server=https%3A%2F%2Fcommunity.ultralytics.com&logo=discourse&label=Forums&color=blue\"></a>\n",

" <a href=\"https://reddit.com/r/ultralytics\"><img alt=\"Ultralytics Reddit\" src=\"https://img.shields.io/reddit/subreddit-subscribers/ultralytics?style=flat&logo=reddit&logoColor=white&label=Reddit&color=blue\"></a>\n",

"</div>\n",

"\n",

"This **Ultralytics YOLOv5 Segmentation Colab Notebook** is the easiest way to get started with [YOLO models](https://www.ultralytics.com/yolo)—no installation needed. Built by [Ultralytics](https://www.ultralytics.com/), the creators of YOLO, this notebook walks you through running **state-of-the-art** models directly in your browser.\n",

"\n",

"Ultralytics models are constantly updated for performance and flexibility. They're **fast**, **accurate**, and **easy to use**, and they excel at [object detection](https://docs.ultralytics.com/tasks/detect/), [tracking](https://docs.ultralytics.com/modes/track/), [instance segmentation](https://docs.ultralytics.com/tasks/segment/), [image classification](https://docs.ultralytics.com/tasks/classify/), and [pose estimation](https://docs.ultralytics.com/tasks/pose/).\n",

"\n",

"Find detailed documentation in the [Ultralytics Docs](https://docs.ultralytics.com/). Get support via [GitHub Issues](https://github.com/ultralytics/ultralytics/issues/new/choose). Join discussions on [Discord](https://discord.com/invite/ultralytics), [Reddit](https://www.reddit.com/r/ultralytics/), and the [Ultralytics Community Forums](https://community.ultralytics.com/)!\n",

"\n",

"Request an Enterprise License for commercial use at [Ultralytics Licensing](https://www.ultralytics.com/license).\n",

"\n",

"<br>\n",

"<div>\n",

" <a href=\"https://www.youtube.com/watch?v=ZN3nRZT7b24\" target=\"_blank\">\n",

" <img src=\"https://img.youtube.com/vi/ZN3nRZT7b24/maxresdefault.jpg\" alt=\"Ultralytics Video\" width=\"640\" style=\"border-radius: 10px; box-shadow: 0 4px 8px rgba(0, 0, 0, 0.2);\">\n",

" </a>\n",

"\n",

"This <a href=\"https://github.com/ultralytics/yolov5\">YOLOv5</a> 🚀 notebook by <a href=\"https://ultralytics.com\">Ultralytics</a> presents simple train, validate and predict examples to help start your AI adventure.<br>See <a href=\"https://github.com/ultralytics/yolov5/issues/new/choose\">GitHub</a> for community support or <a href=\"https://ultralytics.com/contact\">contact us</a> for professional support.\n",

"\n",

" <p style=\"font-size: 16px; font-family: Arial, sans-serif; color: #555;\">\n",

" <strong>Watch: </strong> How to Train\n",

" <a href=\"https://github.com/ultralytics/ultralytics\">Ultralytics</a>\n",

" <a href=\"https://docs.ultralytics.com/models/yolo11/\">YOLO11</a> Model on Custom Dataset using Google Colab Notebook 🚀\n",

" </p>\n",

"</div>"

]

},

@@ -109,7 +131,7 @@

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[34m\u001b[1msegment/predict: \u001b[0mweights=['yolov5s-seg.pt'], source=data/images, data=data/coco128.yaml, imgsz=[640, 640], conf_thres=0.25, iou_thres=0.45, max_det=1000, device=, view_img=False, save_txt=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, visualize=False, update=False, project=runs/predict-seg, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False, dnn=False, vid_stride=1, retina_masks=False\n",

"\u001B[34m\u001B[1msegment/predict: \u001B[0mweights=['yolov5s-seg.pt'], source=data/images, data=data/coco128.yaml, imgsz=[640, 640], conf_thres=0.25, iou_thres=0.45, max_det=1000, device=, view_img=False, save_txt=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, visualize=False, update=False, project=runs/predict-seg, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False, dnn=False, vid_stride=1, retina_masks=False\n",

"YOLOv5 🚀 v7.0-2-gc9d47ae Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n",

"\n",

"Downloading https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-seg.pt to yolov5s-seg.pt...\n",

@@ -120,7 +142,7 @@

"image 1/2 /content/yolov5/data/images/bus.jpg: 640x480 4 persons, 1 bus, 18.2ms\n",

"image 2/2 /content/yolov5/data/images/zidane.jpg: 384x640 2 persons, 1 tie, 13.4ms\n",

"Speed: 0.5ms pre-process, 15.8ms inference, 18.5ms NMS per image at shape (1, 3, 640, 640)\n",

"Results saved to \u001b[1mruns/predict-seg/exp\u001b[0m\n"

"Results saved to \u001B[1mruns/predict-seg/exp\u001B[0m\n"

]

}

],

@@ -191,17 +213,17 @@

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[34m\u001b[1msegment/val: \u001b[0mdata=/content/yolov5/data/coco.yaml, weights=['yolov5s-seg.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.6, max_det=300, task=val, device=, workers=8, single_cls=False, augment=False, verbose=False, save_txt=False, save_hybrid=False, save_conf=False, save_json=False, project=runs/val-seg, name=exp, exist_ok=False, half=True, dnn=False\n",

"\u001B[34m\u001B[1msegment/val: \u001B[0mdata=/content/yolov5/data/coco.yaml, weights=['yolov5s-seg.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.6, max_det=300, task=val, device=, workers=8, single_cls=False, augment=False, verbose=False, save_txt=False, save_hybrid=False, save_conf=False, save_json=False, project=runs/val-seg, name=exp, exist_ok=False, half=True, dnn=False\n",

"YOLOv5 🚀 v7.0-2-gc9d47ae Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n",

"\n",

"Fusing layers... \n",

"YOLOv5s-seg summary: 224 layers, 7611485 parameters, 0 gradients, 26.4 GFLOPs\n",

"\u001b[34m\u001b[1mval: \u001b[0mScanning /content/datasets/coco/val2017... 4952 images, 48 backgrounds, 0 corrupt: 100% 5000/5000 [00:03<00:00, 1361.31it/s]\n",

"\u001b[34m\u001b[1mval: \u001b[0mNew cache created: /content/datasets/coco/val2017.cache\n",

"\u001B[34m\u001B[1mval: \u001B[0mScanning /content/datasets/coco/val2017... 4952 images, 48 backgrounds, 0 corrupt: 100% 5000/5000 [00:03<00:00, 1361.31it/s]\n",

"\u001B[34m\u001B[1mval: \u001B[0mNew cache created: /content/datasets/coco/val2017.cache\n",

" Class Images Instances Box(P R mAP50 mAP50-95) Mask(P R mAP50 mAP50-95): 100% 157/157 [01:54<00:00, 1.37it/s]\n",

" all 5000 36335 0.673 0.517 0.566 0.373 0.672 0.49 0.532 0.319\n",

"Speed: 0.6ms pre-process, 4.4ms inference, 2.9ms NMS per image at shape (32, 3, 640, 640)\n",

"Results saved to \u001b[1mruns/val-seg/exp\u001b[0m\n"

"Results saved to \u001B[1mruns/val-seg/exp\u001B[0m\n"

]

}

],

@@ -218,9 +240,7 @@

"source": [

"# 3. Train\n",

"\n",

"<p align=\"\"><a href=\"https://roboflow.com/?ref=ultralytics\"><img width=\"1000\" src=\"https://github.com/ultralytics/assets/raw/main/im/integrations-loop.png\"/></a></p>\n",

"Close the active learning loop by sampling images from your inference conditions with the `roboflow` pip package\n",

"<br><br>\n",

"<p align=\"\"><a href=\"https://ultralytics.com/hub\"><img width=\"1000\" src=\"https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png\"/></a></p>\n",

"\n",

"Train a YOLOv5s-seg model on the [COCO128](https://www.kaggle.com/datasets/ultralytics/coco128) dataset with `--data coco128-seg.yaml`, starting from pretrained `--weights yolov5s-seg.pt`, or from randomly initialized `--weights '' --cfg yolov5s-seg.yaml`.\n",

"\n",

@@ -230,17 +250,7 @@

"- **Training Results** are saved to `runs/train-seg/` with incrementing run directories, i.e. `runs/train-seg/exp2`, `runs/train-seg/exp3` etc.\n",

"<br><br>\n",

"\n",

"A **Mosaic Dataloader** is used for training which combines 4 images into 1 mosaic.\n",

"\n",

"## Train on Custom Data with Roboflow 🌟 NEW\n",

"\n",

"[Roboflow](https://roboflow.com/?ref=ultralytics) enables you to easily **organize, label, and prepare** a high quality dataset with your own custom data. Roboflow also makes it easy to establish an active learning pipeline, collaborate with your team on dataset improvement, and integrate directly into your model building workflow with the `roboflow` pip package.\n",

"\n",

"- Custom Training Example: [https://blog.roboflow.com/train-yolov5-instance-segmentation-custom-dataset/](https://blog.roboflow.com/train-yolov5-instance-segmentation-custom-dataset/?ref=ultralytics)\n",

"- Custom Training Notebook: [](https://colab.research.google.com/drive/1JTz7kpmHsg-5qwVz2d2IH3AaenI1tv0N?usp=sharing)\n",

"<br>\n",

"\n",

"<p align=\"\"><a href=\"https://roboflow.com/?ref=ultralytics\"><img width=\"480\" src=\"https://robflow-public-assets.s3.amazonaws.com/how-to-train-yolov5-segmentation-annotation.gif\"/></a></p>Label images lightning fast (including with model-assisted labeling)"

"A **Mosaic Dataloader** is used for training which combines 4 images into 1 mosaic."

]

},

{

@@ -284,17 +294,17 @@

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[34m\u001b[1msegment/train: \u001b[0mweights=yolov5s-seg.pt, cfg=, data=coco128-seg.yaml, hyp=data/hyps/hyp.scratch-low.yaml, epochs=3, batch_size=16, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=ram, image_weights=False, device=, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs/train-seg, name=exp, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, mask_ratio=4, no_overlap=False\n",

"\u001b[34m\u001b[1mgithub: \u001b[0mup to date with https://github.com/ultralytics/yolov5 ✅\n",

"\u001B[34m\u001B[1msegment/train: \u001B[0mweights=yolov5s-seg.pt, cfg=, data=coco128-seg.yaml, hyp=data/hyps/hyp.scratch-low.yaml, epochs=3, batch_size=16, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=ram, image_weights=False, device=, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs/train-seg, name=exp, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, mask_ratio=4, no_overlap=False\n",

"\u001B[34m\u001B[1mgithub: \u001B[0mup to date with https://github.com/ultralytics/yolov5 ✅\n",

"YOLOv5 🚀 v7.0-2-gc9d47ae Python-3.7.15 torch-1.12.1+cu113 CUDA:0 (Tesla T4, 15110MiB)\n",

"\n",

"\u001b[34m\u001b[1mhyperparameters: \u001b[0mlr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0\n",

"\u001b[34m\u001b[1mTensorBoard: \u001b[0mStart with 'tensorboard --logdir runs/train-seg', view at http://localhost:6006/\n",

"\u001B[34m\u001B[1mhyperparameters: \u001B[0mlr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0\n",

"\u001B[34m\u001B[1mTensorBoard: \u001B[0mStart with 'tensorboard --logdir runs/train-seg', view at http://localhost:6006/\n",

"\n",

"Dataset not found ⚠️, missing paths ['/content/datasets/coco128-seg/images/train2017']\n",

"Downloading https://github.com/ultralytics/assets/releases/download/v0.0.0/coco128-seg.zip to coco128-seg.zip...\n",

"100% 6.79M/6.79M [00:01<00:00, 6.73MB/s]\n",

"Dataset download success ✅ (1.9s), saved to \u001b[1m/content/datasets\u001b[0m\n",

"Dataset download success ✅ (1.9s), saved to \u001B[1m/content/datasets\u001B[0m\n",

"\n",

" from n params module arguments \n",

" 0 -1 1 3520 models.common.Conv [3, 32, 6, 2, 2] \n",

@@ -325,20 +335,20 @@

"Model summary: 225 layers, 7621277 parameters, 7621277 gradients, 26.6 GFLOPs\n",

"\n",

"Transferred 367/367 items from yolov5s-seg.pt\n",

"\u001b[34m\u001b[1mAMP: \u001b[0mchecks passed ✅\n",

"\u001b[34m\u001b[1moptimizer:\u001b[0m SGD(lr=0.01) with parameter groups 60 weight(decay=0.0), 63 weight(decay=0.0005), 63 bias\n",

"\u001b[34m\u001b[1malbumentations: \u001b[0mBlur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))\n",

"\u001b[34m\u001b[1mtrain: \u001b[0mScanning /content/datasets/coco128-seg/labels/train2017... 126 images, 2 backgrounds, 0 corrupt: 100% 128/128 [00:00<00:00, 1389.59it/s]\n",

"\u001b[34m\u001b[1mtrain: \u001b[0mNew cache created: /content/datasets/coco128-seg/labels/train2017.cache\n",

"\u001b[34m\u001b[1mtrain: \u001b[0mCaching images (0.1GB ram): 100% 128/128 [00:00<00:00, 238.86it/s]\n",

"\u001b[34m\u001b[1mval: \u001b[0mScanning /content/datasets/coco128-seg/labels/train2017.cache... 126 images, 2 backgrounds, 0 corrupt: 100% 128/128 [00:00<?, ?it/s]\n",

"\u001b[34m\u001b[1mval: \u001b[0mCaching images (0.1GB ram): 100% 128/128 [00:01<00:00, 98.90it/s]\n",

"\u001B[34m\u001B[1mAMP: \u001B[0mchecks passed ✅\n",

"\u001B[34m\u001B[1moptimizer:\u001B[0m SGD(lr=0.01) with parameter groups 60 weight(decay=0.0), 63 weight(decay=0.0005), 63 bias\n",

"\u001B[34m\u001B[1malbumentations: \u001B[0mBlur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))\n",

"\u001B[34m\u001B[1mtrain: \u001B[0mScanning /content/datasets/coco128-seg/labels/train2017... 126 images, 2 backgrounds, 0 corrupt: 100% 128/128 [00:00<00:00, 1389.59it/s]\n",

"\u001B[34m\u001B[1mtrain: \u001B[0mNew cache created: /content/datasets/coco128-seg/labels/train2017.cache\n",

"\u001B[34m\u001B[1mtrain: \u001B[0mCaching images (0.1GB ram): 100% 128/128 [00:00<00:00, 238.86it/s]\n",

"\u001B[34m\u001B[1mval: \u001B[0mScanning /content/datasets/coco128-seg/labels/train2017.cache... 126 images, 2 backgrounds, 0 corrupt: 100% 128/128 [00:00<?, ?it/s]\n",

"\u001B[34m\u001B[1mval: \u001B[0mCaching images (0.1GB ram): 100% 128/128 [00:01<00:00, 98.90it/s]\n",

"\n",

"\u001b[34m\u001b[1mAutoAnchor: \u001b[0m4.27 anchors/target, 0.994 Best Possible Recall (BPR). Current anchors are a good fit to dataset ✅\n",

"\u001B[34m\u001B[1mAutoAnchor: \u001B[0m4.27 anchors/target, 0.994 Best Possible Recall (BPR). Current anchors are a good fit to dataset ✅\n",

"Plotting labels to runs/train-seg/exp/labels.jpg... \n",

"Image sizes 640 train, 640 val\n",

"Using 2 dataloader workers\n",

"Logging results to \u001b[1mruns/train-seg/exp\u001b[0m\n",

"Logging results to \u001B[1mruns/train-seg/exp\u001B[0m\n",

"Starting training for 3 epochs...\n",

"\n",

" Epoch GPU_mem box_loss seg_loss obj_loss cls_loss Instances Size\n",

@@ -436,7 +446,7 @@

" scissors 128 1 1 0 0.0166 0.00166 1 0 0 0\n",

" teddy bear 128 21 0.813 0.829 0.841 0.457 0.826 0.678 0.786 0.422\n",

" toothbrush 128 5 0.806 1 0.995 0.733 0.991 1 0.995 0.628\n",

"Results saved to \u001b[1mruns/train-seg/exp\u001b[0m\n"

"Results saved to \u001B[1mruns/train-seg/exp\u001B[0m\n"

]

}

],
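
The notebook output lines in this hunk appear in pairs that differ only in the letter case of the ANSI escape (`\u001b` versus `\u001B`). In JSON, which is how `.ipynb` files are stored, the four hex digits after `\u` are case-insensitive, so both spellings decode to the same ESC character (U+001B). The sketch below checks this with Python's standard-library `json` module. It uses one of the strings from the hunk above; the variable names are illustrative only.

```python
import json

# Two JSON string literals copied from the notebook output above; they
# differ only in the case of the hex digits in the \u escape.
old = '"\\u001b[1m/content/datasets\\u001b[0m"'
new = '"\\u001B[1m/content/datasets\\u001B[0m"'

decoded_old = json.loads(old)
decoded_new = json.loads(new)

# Both decode to ESC (U+001B) followed by the same ANSI SGR codes and text.
print(decoded_old == decoded_new)  # True
print(repr(decoded_old))
```

Under this reading, the rendered cell output is unchanged. Only the serialized form of the notebook differs.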

File diff suppressed because it is too large

@@ -1,96 +1,100 @@

<a href="https://www.ultralytics.com/"><img src="https://raw.githubusercontent.com/ultralytics/assets/main/logo/Ultralytics_Logotype_Original.svg" width="320" alt="Ultralytics logo"></a>

# ClearML Integration with Ultralytics YOLOv5

# ClearML Integration with Ultralytics YOLO

<img align="center" src="https://github.com/thepycoder/clearml_screenshots/raw/main/logos_dark.png#gh-light-mode-only" alt="Clear|ML"><img align="center" src="https://github.com/thepycoder/clearml_screenshots/raw/main/logos_light.png#gh-dark-mode-only" alt="Clear|ML">

<img align="center" src="https://github.com/thepycoder/clearml_screenshots/raw/main/logos_dark.png#gh-light-mode-only" alt="ClearML"><img align="center" src="https://github.com/thepycoder/clearml_screenshots/raw/main/logos_light.png#gh-dark-mode-only" alt="ClearML">

## ℹ️ About ClearML

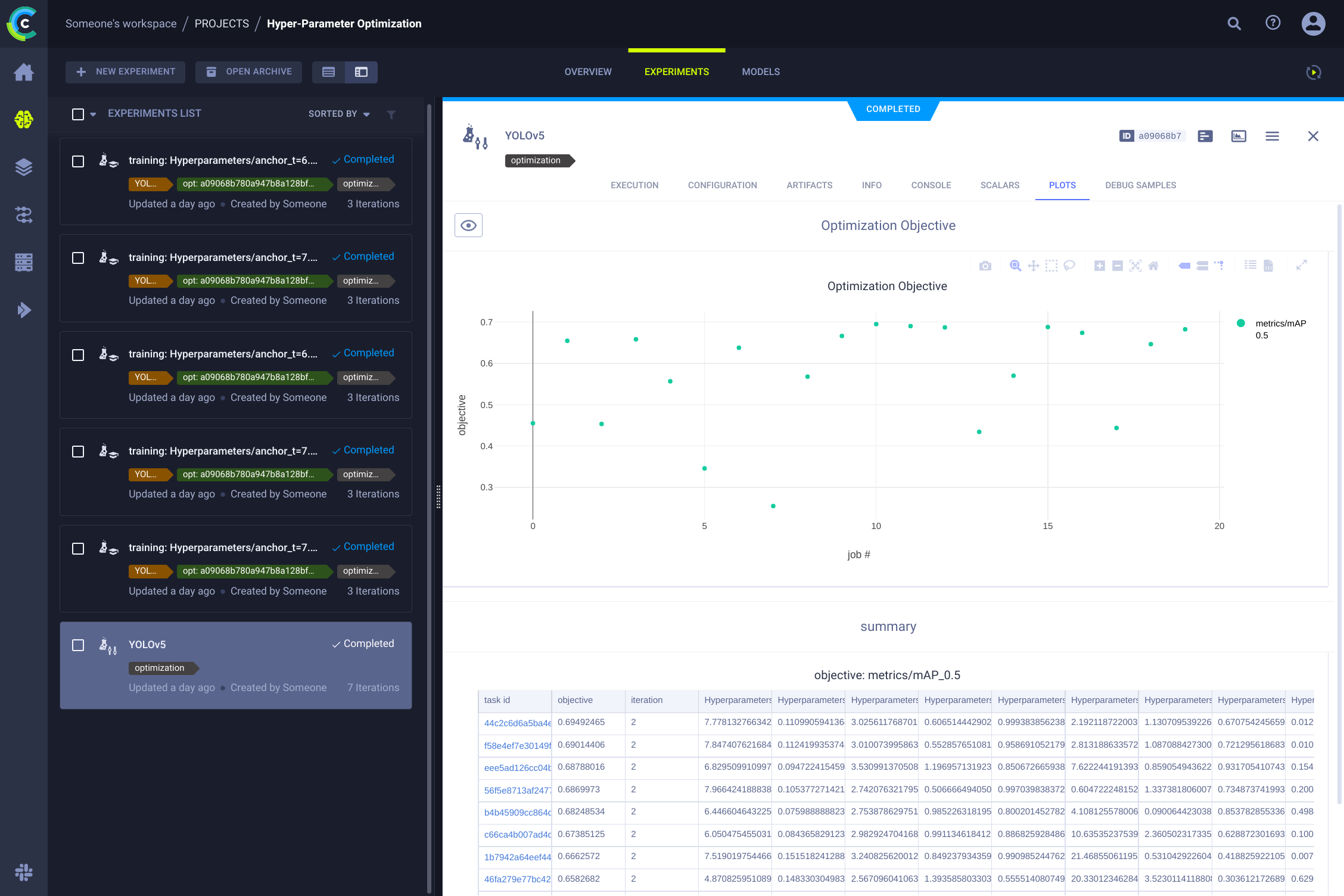

[ClearML](https://clear.ml/) is an [open-source](https://github.com/clearml/clearml) MLOps platform designed to streamline your machine learning workflow and save valuable time ⏱️. Integrating ClearML with [Ultralytics YOLOv5](https://docs.ultralytics.com/models/yolov5/) allows you to leverage a powerful suite of tools:

[ClearML](https://clear.ml/) is an [open-source MLOps platform](https://github.com/clearml/clearml) designed to streamline your machine learning workflow and maximize productivity. Integrating ClearML with [Ultralytics YOLO](https://docs.ultralytics.com/models/yolov5/) unlocks a robust suite of tools for experiment tracking, data management, and scalable deployment:

- **Experiment Management:** 🔨 Track every YOLOv5 [training run](https://docs.ultralytics.com/modes/train/), including parameters, metrics, and outputs. See the [Ultralytics ClearML integration guide](https://docs.ultralytics.com/integrations/clearml/) for more details.
