diff --git a/.github/CODE_OF_CONDUCT.md b/.github/CODE_OF_CONDUCT.md

index ef10b05fc..27e59e9aa 100644

--- a/.github/CODE_OF_CONDUCT.md

+++ b/.github/CODE_OF_CONDUCT.md

@@ -17,23 +17,23 @@ diverse, inclusive, and healthy community.

Examples of behavior that contributes to a positive environment for our

community include:

-* Demonstrating empathy and kindness toward other people

-* Being respectful of differing opinions, viewpoints, and experiences

-* Giving and gracefully accepting constructive feedback

-* Accepting responsibility and apologizing to those affected by our mistakes,

+- Demonstrating empathy and kindness toward other people

+- Being respectful of differing opinions, viewpoints, and experiences

+- Giving and gracefully accepting constructive feedback

+- Accepting responsibility and apologizing to those affected by our mistakes,

and learning from the experience

-* Focusing on what is best not just for us as individuals, but for the

+- Focusing on what is best not just for us as individuals, but for the

overall community

Examples of unacceptable behavior include:

-* The use of sexualized language or imagery, and sexual attention or

+- The use of sexualized language or imagery, and sexual attention or

advances of any kind

-* Trolling, insulting or derogatory comments, and personal or political attacks

-* Public or private harassment

-* Publishing others' private information, such as a physical or email

+- Trolling, insulting or derogatory comments, and personal or political attacks

+- Public or private harassment

+- Publishing others' private information, such as a physical or email

address, without their explicit permission

-* Other conduct which could reasonably be considered inappropriate in a

+- Other conduct which could reasonably be considered inappropriate in a

professional setting

## Enforcement Responsibilities

@@ -121,8 +121,8 @@ https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

Community Impact Guidelines were inspired by [Mozilla's code of conduct

enforcement ladder](https://github.com/mozilla/diversity).

-[homepage]: https://www.contributor-covenant.org

-

For answers to common questions about this code of conduct, see the FAQ at

https://www.contributor-covenant.org/faq. Translations are available at

https://www.contributor-covenant.org/translations.

+

+[homepage]: https://www.contributor-covenant.org

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index bff7f8a40..924c940f2 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -13,7 +13,7 @@ ci:

repos:

- repo: https://github.com/pre-commit/pre-commit-hooks

- rev: v4.1.0

+ rev: v4.2.0

hooks:

- id: end-of-file-fixer

- id: trailing-whitespace

@@ -24,7 +24,7 @@ repos:

- id: check-docstring-first

- repo: https://github.com/asottile/pyupgrade

- rev: v2.31.1

+ rev: v2.32.0

hooks:

- id: pyupgrade

args: [--py36-plus]

@@ -42,15 +42,17 @@ repos:

- id: yapf

name: YAPF formatting

- # TODO

- #- repo: https://github.com/executablebooks/mdformat

- # rev: 0.7.7

- # hooks:

- # - id: mdformat

- # additional_dependencies:

- # - mdformat-gfm

- # - mdformat-black

- # - mdformat_frontmatter

+ - repo: https://github.com/executablebooks/mdformat

+ rev: 0.7.14

+ hooks:

+ - id: mdformat

+ additional_dependencies:

+ - mdformat-gfm

+ - mdformat-black

+ exclude: |

+ (?x)^(

+ README.md

+ )$

- repo: https://github.com/asottile/yesqa

rev: v1.3.0

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index ebde03a56..13b9b73b5 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -18,16 +18,19 @@ Submitting a PR is easy! This example shows how to submit a PR for updating `req

### 1. Select File to Update

Select `requirements.txt` to update by clicking on it in GitHub.

+

<p align="center"><img width="800" alt="PR_step1" src="https://user-images.githubusercontent.com/26833433/122260847-08be2600-ced4-11eb-828b-8287ace4136c.png"></p>

### 2. Click 'Edit this file'

Button is in top-right corner.

+

<p align="center"><img width="800" alt="PR_step2" src="https://user-images.githubusercontent.com/26833433/122260844-06f46280-ced4-11eb-9eec-b8a24be519ca.png"></p>

### 3. Make Changes

Change `matplotlib` version from `3.2.2` to `3.3`.

+

<p align="center"><img width="800" alt="PR_step3" src="https://user-images.githubusercontent.com/26833433/122260853-0a87e980-ced4-11eb-9fd2-3650fb6e0842.png"></p>

### 4. Preview Changes and Submit PR

@@ -35,6 +38,7 @@ Change `matplotlib` version from `3.2.2` to `3.3`.

Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**

for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose

changes** button. All done, your PR is now submitted to YOLOv5 for review and approval 😃!

+

<p align="center"><img width="800" alt="PR_step4" src="https://user-images.githubusercontent.com/26833433/122260856-0b208000-ced4-11eb-8e8e-77b6151cbcc3.png"></p>

### PR recommendations

@@ -70,21 +74,21 @@ understand and use to **reproduce** the problem. This is referred to by communit

a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example). Your code that reproduces

the problem should be:

-* ✅ **Minimal** – Use as little code as possible that still produces the same problem

-* ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

-* ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

+- ✅ **Minimal** – Use as little code as possible that still produces the same problem

+- ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

+- ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code

should be:

-* ✅ **Current** – Verify that your code is up-to-date with current

+- ✅ **Current** – Verify that your code is up-to-date with current

GitHub [master](https://github.com/ultralytics/yolov5/tree/master), and if necessary `git pull` or `git clone` a new

copy to ensure your problem has not already been resolved by previous commits.

-* ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this

+- ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this

repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

-If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛 **

-Bug Report** [template](https://github.com/ultralytics/yolov5/issues/new/choose) and providing

+If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛

+**Bug Report** [template](https://github.com/ultralytics/yolov5/issues/new/choose) and providing

a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us better

understand and diagnose your problem.

diff --git a/README.md b/README.md

index 54c5cbd83..f1dd65b0a 100644

--- a/README.md

+++ b/README.md

@@ -103,8 +103,6 @@ results.print() # or .show(), .save(), .crop(), .pandas(), etc.

</details>

-

-

<details>

<summary>Inference with detect.py</summary>

@@ -149,20 +147,20 @@ python train.py --data coco.yaml --cfg yolov5n.yaml --weights '' --batch-size 12

<details open>

<summary>Tutorials</summary>

-* [Train Custom Data](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) 🚀 RECOMMENDED

-* [Tips for Best Training Results](https://github.com/ultralytics/yolov5/wiki/Tips-for-Best-Training-Results) ☘️

+- [Train Custom Data](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) 🚀 RECOMMENDED

+- [Tips for Best Training Results](https://github.com/ultralytics/yolov5/wiki/Tips-for-Best-Training-Results) ☘️

RECOMMENDED

-* [Weights & Biases Logging](https://github.com/ultralytics/yolov5/issues/1289) 🌟 NEW

-* [Roboflow for Datasets, Labeling, and Active Learning](https://github.com/ultralytics/yolov5/issues/4975) 🌟 NEW

-* [Multi-GPU Training](https://github.com/ultralytics/yolov5/issues/475)

-* [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) ⭐ NEW

-* [TFLite, ONNX, CoreML, TensorRT Export](https://github.com/ultralytics/yolov5/issues/251) 🚀

-* [Test-Time Augmentation (TTA)](https://github.com/ultralytics/yolov5/issues/303)

-* [Model Ensembling](https://github.com/ultralytics/yolov5/issues/318)

-* [Model Pruning/Sparsity](https://github.com/ultralytics/yolov5/issues/304)

-* [Hyperparameter Evolution](https://github.com/ultralytics/yolov5/issues/607)

-* [Transfer Learning with Frozen Layers](https://github.com/ultralytics/yolov5/issues/1314) ⭐ NEW

-* [Architecture Summary](https://github.com/ultralytics/yolov5/issues/6998) ⭐ NEW

+- [Weights & Biases Logging](https://github.com/ultralytics/yolov5/issues/1289) 🌟 NEW

+- [Roboflow for Datasets, Labeling, and Active Learning](https://github.com/ultralytics/yolov5/issues/4975) 🌟 NEW

+- [Multi-GPU Training](https://github.com/ultralytics/yolov5/issues/475)

+- [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) ⭐ NEW

+- [TFLite, ONNX, CoreML, TensorRT Export](https://github.com/ultralytics/yolov5/issues/251) 🚀

+- [Test-Time Augmentation (TTA)](https://github.com/ultralytics/yolov5/issues/303)

+- [Model Ensembling](https://github.com/ultralytics/yolov5/issues/318)

+- [Model Pruning/Sparsity](https://github.com/ultralytics/yolov5/issues/304)

+- [Hyperparameter Evolution](https://github.com/ultralytics/yolov5/issues/607)

+- [Transfer Learning with Frozen Layers](https://github.com/ultralytics/yolov5/issues/1314) ⭐ NEW

+- [Architecture Summary](https://github.com/ultralytics/yolov5/issues/6998) ⭐ NEW

</details>

@@ -203,7 +201,6 @@ Get started in seconds with our verified environments. Click each icon below for

|:-:|:-:|

|Automatically track and visualize all your YOLOv5 training runs in the cloud with [Weights & Biases](https://wandb.ai/site?utm_campaign=repo_yolo_readme)|Label and export your custom datasets directly to YOLOv5 for training with [Roboflow](https://roboflow.com/?ref=ultralytics) |

-

<!-- ## <div align="center">Compete and Win</div>

We are super excited about our first-ever Ultralytics YOLOv5 🚀 EXPORT Competition with **$10,000** in cash prizes!

@@ -224,18 +221,15 @@ We are super excited about our first-ever Ultralytics YOLOv5 🚀 EXPORT Competi

<details>

<summary>Figure Notes (click to expand)</summary>

-* **COCO AP val** denotes mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset over various inference sizes from 256 to 1536.

-* **GPU Speed** measures average inference time per image on [COCO val2017](http://cocodataset.org) dataset using a [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100 instance at batch-size 32.

-* **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.

-* **Reproduce** by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

+- **COCO AP val** denotes mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset over various inference sizes from 256 to 1536.

+- **GPU Speed** measures average inference time per image on [COCO val2017](http://cocodataset.org) dataset using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100 instance at batch-size 32.

+- **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.

+- **Reproduce** by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

+

</details>

### Pretrained Checkpoints

-[assets]: https://github.com/ultralytics/yolov5/releases

-

-[TTA]: https://github.com/ultralytics/yolov5/issues/303

-

|Model |size<br><sup>(pixels) |mAP<sup>val<br>0.5:0.95 |mAP<sup>val<br>0.5 |Speed<br><sup>CPU b1<br>(ms) |Speed<br><sup>V100 b1<br>(ms) |Speed<br><sup>V100 b32<br>(ms) |params<br><sup>(M) |FLOPs<br><sup>@640 (B)

|--- |--- |--- |--- |--- |--- |--- |--- |---

|[YOLOv5n][assets] |640 |28.0 |45.7 |**45** |**6.3**|**0.6**|**1.9**|**4.5**

@@ -253,10 +247,10 @@ We are super excited about our first-ever Ultralytics YOLOv5 🚀 EXPORT Competi

<details>

<summary>Table Notes (click to expand)</summary>

-* All checkpoints are trained to 300 epochs with default settings. Nano and Small models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyps, all others use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).

-* **mAP<sup>val</sup>** values are for single-model single-scale on [COCO val2017](http://cocodataset.org) dataset.<br>Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

-* **Speed** averaged over COCO val images using a [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) instance. NMS times (~1 ms/img) not included.<br>Reproduce by `python val.py --data coco.yaml --img 640 --task speed --batch 1`

-* **TTA** [Test Time Augmentation](https://github.com/ultralytics/yolov5/issues/303) includes reflection and scale augmentations.<br>Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

+- All checkpoints are trained to 300 epochs with default settings. Nano and Small models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyps, all others use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).

+- **mAP<sup>val</sup>** values are for single-model single-scale on [COCO val2017](http://cocodataset.org) dataset.<br>Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

+- **Speed** averaged over COCO val images using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) instance. NMS times (~1 ms/img) not included.<br>Reproduce by `python val.py --data coco.yaml --img 640 --task speed --batch 1`

+- **TTA** [Test Time Augmentation](https://github.com/ultralytics/yolov5/issues/303) includes reflection and scale augmentations.<br>Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

</details>

@@ -302,3 +296,6 @@ professional support requests please visit [https://ultralytics.com/contact](htt

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-social-instagram.png" width="3%"/>

</a>

</div>

+

+[assets]: https://github.com/ultralytics/yolov5/releases

+[tta]: https://github.com/ultralytics/yolov5/issues/303

diff --git a/utils/loggers/wandb/README.md b/utils/loggers/wandb/README.md

index 3e9c9fd38..d78324b4c 100644

--- a/utils/loggers/wandb/README.md

+++ b/utils/loggers/wandb/README.md

@@ -1,66 +1,72 @@

📚 This guide explains how to use **Weights & Biases** (W&B) with YOLOv5 🚀. UPDATED 29 September 2021.

-* [About Weights & Biases](#about-weights-&-biases)

-* [First-Time Setup](#first-time-setup)

-* [Viewing runs](#viewing-runs)

-* [Disabling wandb](#disabling-wandb)

-* [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

-* [Reports: Share your work with the world!](#reports)

+

+- [About Weights & Biases](#about-weights--biases)

+- [First-Time Setup](#first-time-setup)

+- [Viewing runs](#viewing-runs)

+- [Disabling wandb](#disabling-wandb)

+- [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

+- [Reports: Share your work with the world!](#reports)

## About Weights & Biases

+

Think of [W&B](https://wandb.ai/site?utm_campaign=repo_yolo_wandbtutorial) like GitHub for machine learning models. With a few lines of code, save everything you need to debug, compare and reproduce your models — architecture, hyperparameters, git commits, model weights, GPU usage, and even datasets and predictions.

Used by top researchers including teams at OpenAI, Lyft, Github, and MILA, W&B is part of the new standard of best practices for machine learning. How W&B can help you optimize your machine learning workflows:

- * [Debug](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Free-2) model performance in real time

- * [GPU usage](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#System-4) visualized automatically

- * [Custom charts](https://wandb.ai/wandb/customizable-charts/reports/Powerful-Custom-Charts-To-Debug-Model-Peformance--VmlldzoyNzY4ODI) for powerful, extensible visualization

- * [Share insights](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Share-8) interactively with collaborators

- * [Optimize hyperparameters](https://docs.wandb.com/sweeps) efficiently

- * [Track](https://docs.wandb.com/artifacts) datasets, pipelines, and production models

+- [Debug](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Free-2) model performance in real time

+- [GPU usage](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#System-4) visualized automatically

+- [Custom charts](https://wandb.ai/wandb/customizable-charts/reports/Powerful-Custom-Charts-To-Debug-Model-Peformance--VmlldzoyNzY4ODI) for powerful, extensible visualization

+- [Share insights](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Share-8) interactively with collaborators

+- [Optimize hyperparameters](https://docs.wandb.com/sweeps) efficiently

+- [Track](https://docs.wandb.com/artifacts) datasets, pipelines, and production models

## First-Time Setup

+

<details open>

<summary> Toggle Details </summary>

When you first train, W&B will prompt you to create a new account and will generate an **API key** for you. If you are an existing user you can retrieve your key from https://wandb.ai/authorize. This key is used to tell W&B where to log your data. You only need to supply your key once, and then it is remembered on the same device.

W&B will create a cloud **project** (default is 'YOLOv5') for your training runs, and each new training run will be provided a unique run **name** within that project as project/name. You can also manually set your project and run name as:

- ```shell

- $ python train.py --project ... --name ...

- ```

+```shell

+$ python train.py --project ... --name ...

+```

YOLOv5 notebook example: <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

<img width="960" alt="Screen Shot 2021-09-29 at 10 23 13 PM" src="https://user-images.githubusercontent.com/26833433/135392431-1ab7920a-c49d-450a-b0b0-0c86ec86100e.png">

-

- </details>

+</details>

## Viewing Runs

+

<details open>

<summary> Toggle Details </summary>

Run information streams from your environment to the W&B cloud console as you train. This allows you to monitor and even cancel runs in <b>realtime</b> . All important information is logged:

- * Training & Validation losses

- * Metrics: Precision, Recall, mAP@0.5, mAP@0.5:0.95

- * Learning Rate over time

- * A bounding box debugging panel, showing the training progress over time

- * GPU: Type, **GPU Utilization**, power, temperature, **CUDA memory usage**

- * System: Disk I/0, CPU utilization, RAM memory usage

- * Your trained model as W&B Artifact

- * Environment: OS and Python types, Git repository and state, **training command**

+- Training & Validation losses

+- Metrics: Precision, Recall, mAP@0.5, mAP@0.5:0.95

+- Learning Rate over time

+- A bounding box debugging panel, showing the training progress over time

+- GPU: Type, **GPU Utilization**, power, temperature, **CUDA memory usage**

+- System: Disk I/0, CPU utilization, RAM memory usage

+- Your trained model as W&B Artifact

+- Environment: OS and Python types, Git repository and state, **training command**

<p align="center"><img width="900" alt="Weights & Biases dashboard" src="https://user-images.githubusercontent.com/26833433/135390767-c28b050f-8455-4004-adb0-3b730386e2b2.png"></p>

</details>

- ## Disabling wandb

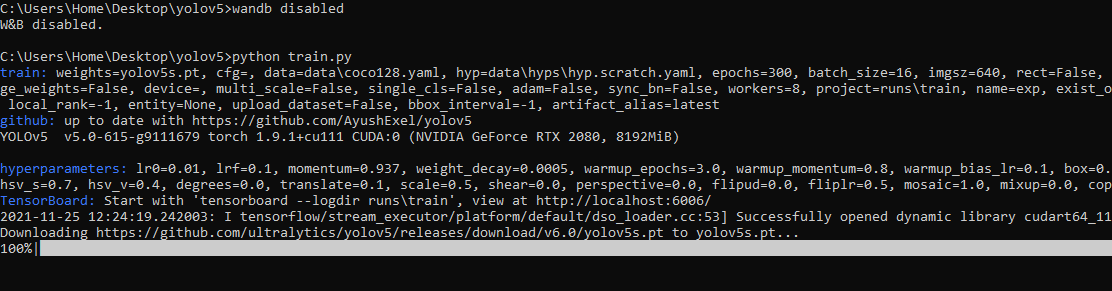

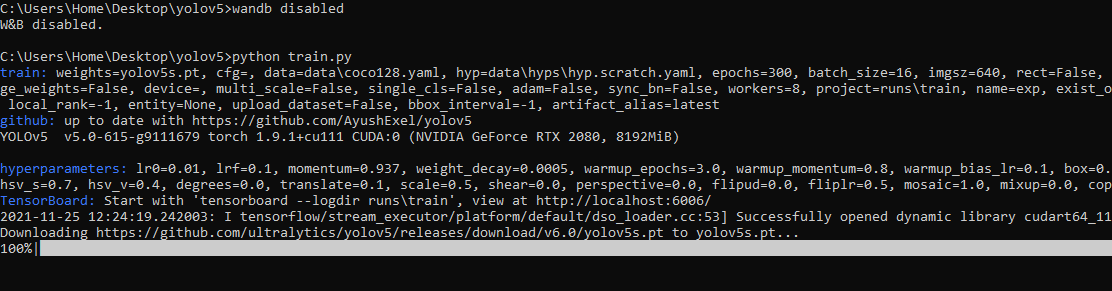

-* training after running `wandb disabled` inside that directory creates no wandb run

-

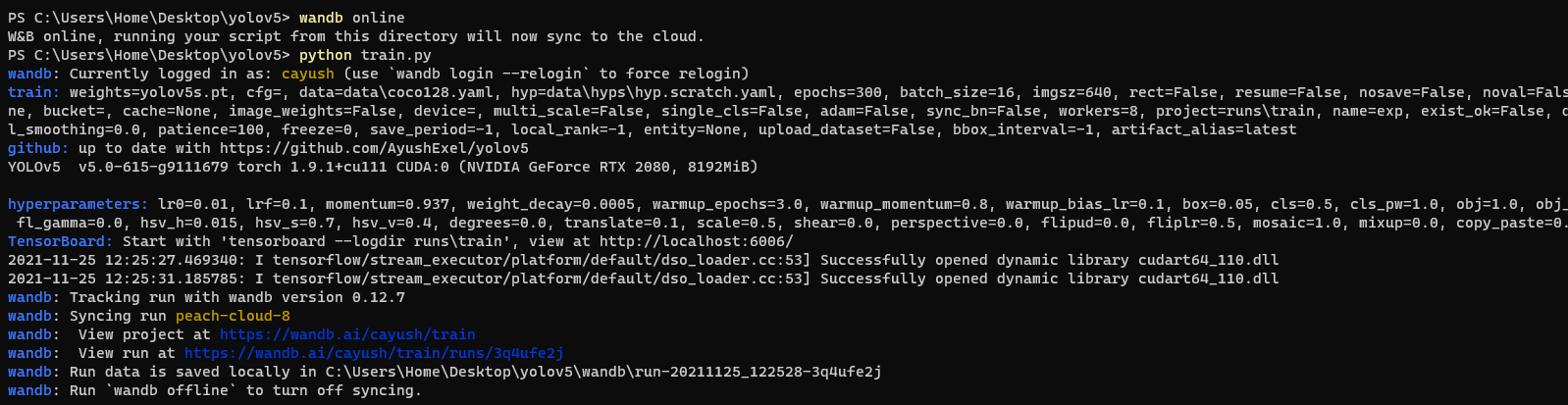

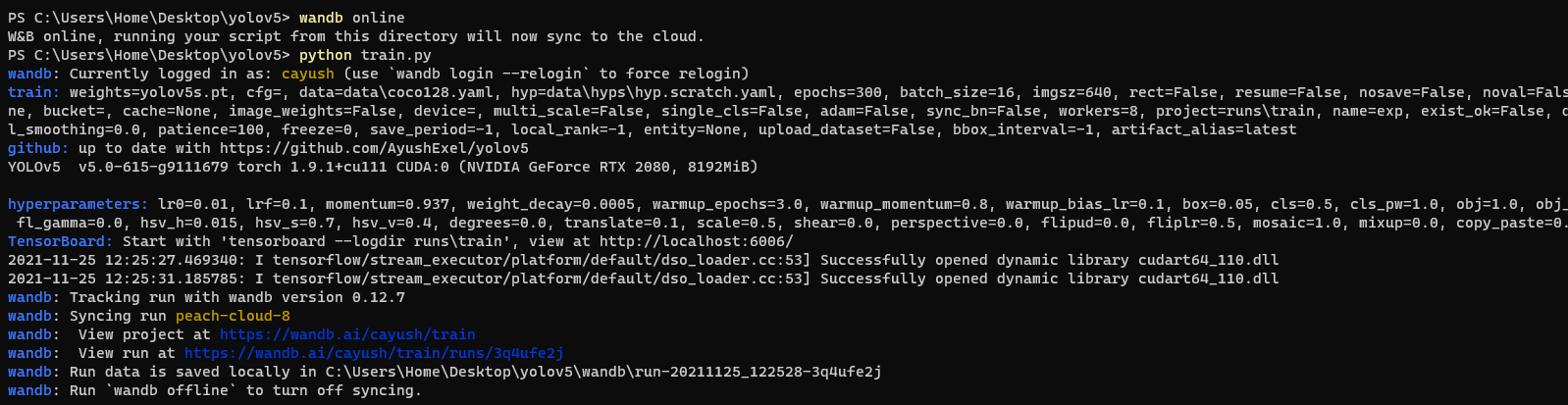

+## Disabling wandb

-* To enable wandb again, run `wandb online`

-

+- Training after running `wandb disabled` inside that directory creates no wandb run

+

+

+- To enable wandb again, run `wandb online`

+

## Advanced Usage

+

You can leverage W&B artifacts and Tables integration to easily visualize and manage your datasets, models and training evaluations. Here are some quick examples to get you started.

+

<details open>

<h3> 1: Train and Log Evaluation simultaneousy </h3>

This is an extension of the previous section, but it'll also training after uploading the dataset. <b> This also evaluation Table</b>

@@ -71,18 +77,20 @@ You can leverage W&B artifacts and Tables integration to easily visualize and ma

<b>Code</b> <code> $ python train.py --upload_data val</code>

- </details>

- <h3>2. Visualize and Version Datasets</h3>

+</details>

+

+<h3> 2: Visualize and Version Datasets </h3>

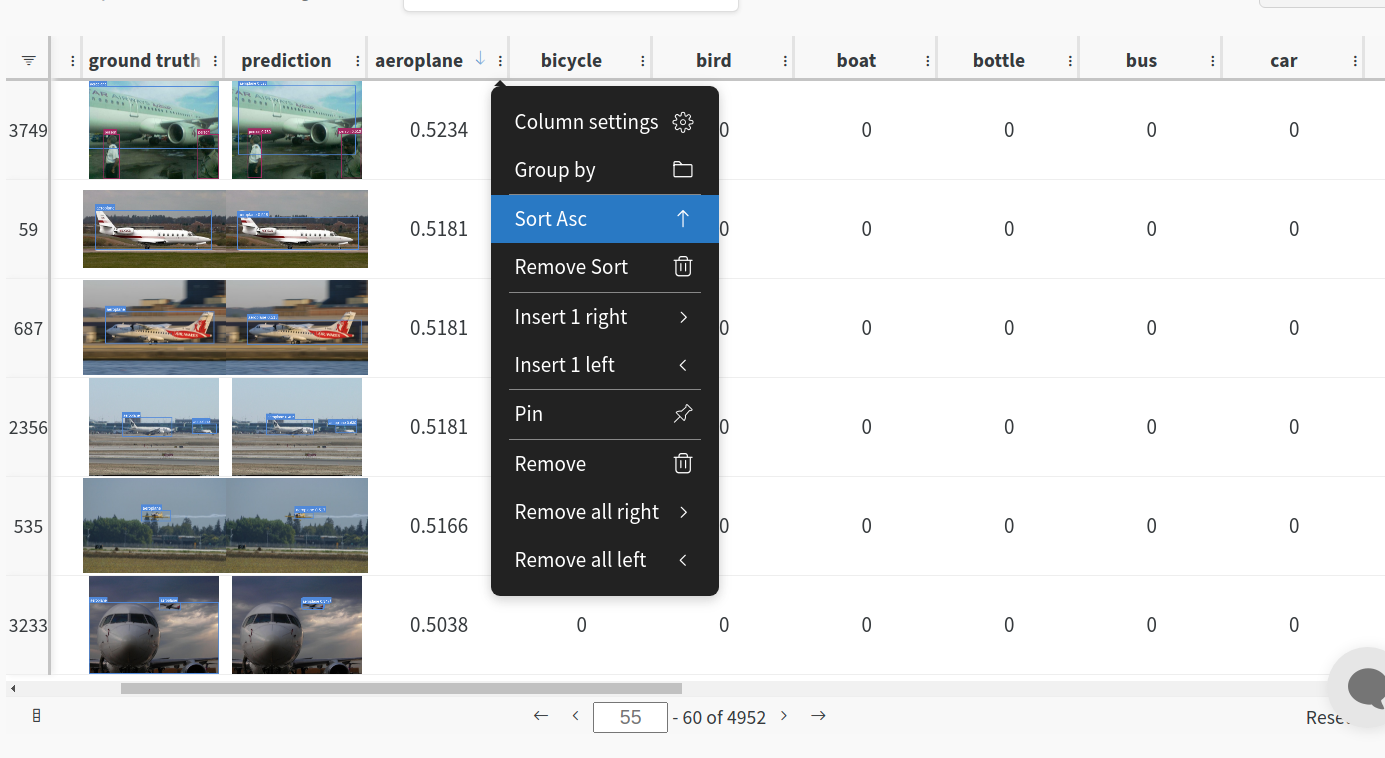

Log, visualize, dynamically query, and understand your data with <a href='https://docs.wandb.ai/guides/data-vis/tables'>W&B Tables</a>. You can use the following command to log your dataset as a W&B Table. This will generate a <code>{dataset}_wandb.yaml</code> file which can be used to train from dataset artifact.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python utils/logger/wandb/log_dataset.py --project ... --name ... --data .. </code>

-

- </details>

+

- <h3> 3: Train using dataset artifact </h3>

+</details>

+

+<h3> 3: Train using dataset artifact </h3>

When you upload a dataset as described in the first section, you get a new config file with an added `_wandb` to its name. This file contains the information that

can be used to train a model directly from the dataset artifact. <b> This also logs evaluation </b>

<details>

@@ -90,51 +98,54 @@ You can leverage W&B artifacts and Tables integration to easily visualize and ma

<b>Code</b> <code> $ python train.py --data {data}_wandb.yaml </code>

- </details>

- <h3> 4: Save model checkpoints as artifacts </h3>

+</details>

+

+<h3> 4: Save model checkpoints as artifacts </h3>

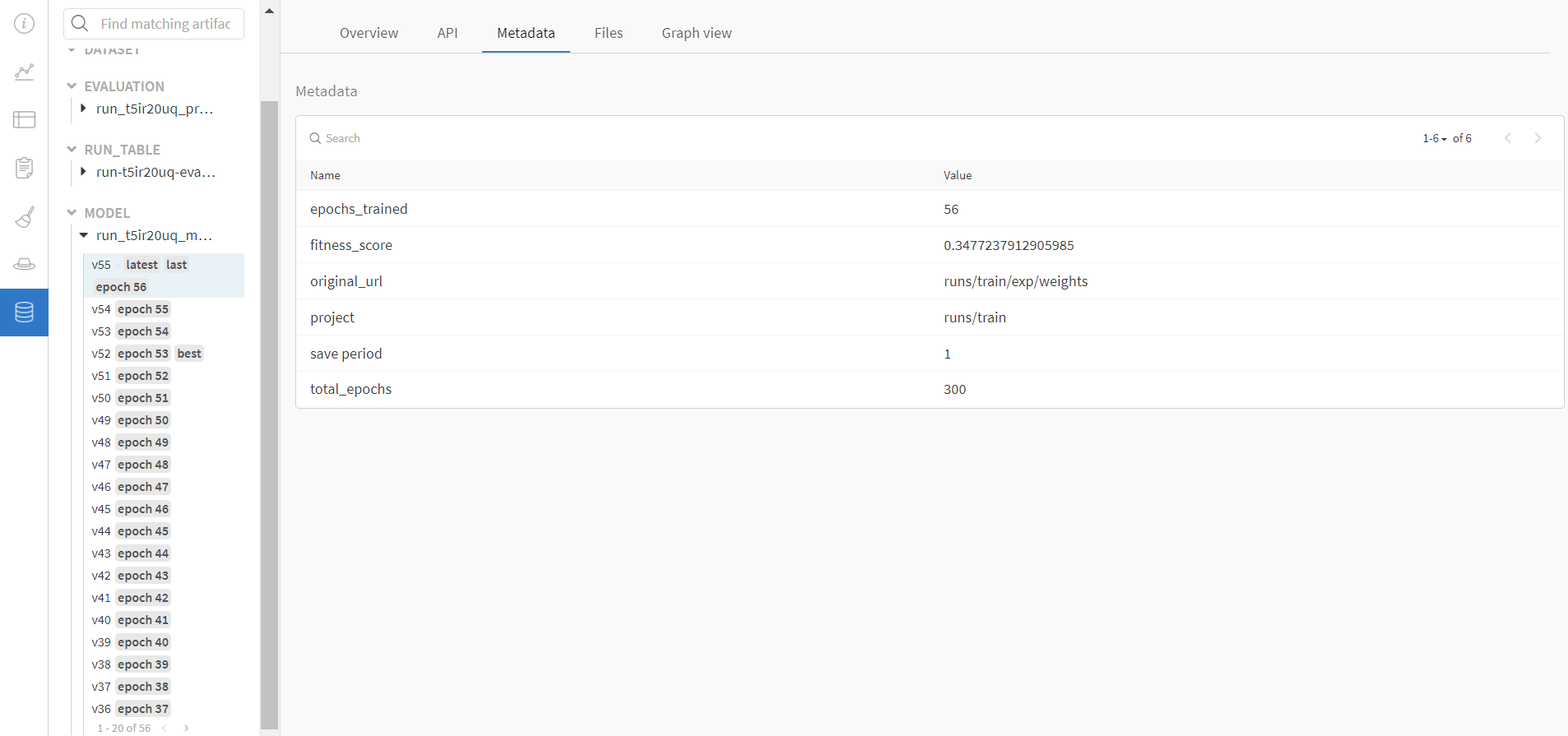

To enable saving and versioning checkpoints of your experiment, pass `--save_period n` with the base cammand, where `n` represents checkpoint interval.

You can also log both the dataset and model checkpoints simultaneously. If not passed, only the final model will be logged

- <details>

+<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --save_period 1 </code>

- </details>

</details>

- <h3> 5: Resume runs from checkpoint artifacts. </h3>

+</details>

+

+<h3> 5: Resume runs from checkpoint artifacts. </h3>

Any run can be resumed using artifacts if the <code>--resume</code> argument starts with <code>wandb-artifact://</code> prefix followed by the run path, i.e, <code>wandb-artifact://username/project/runid </code>. This doesn't require the model checkpoint to be present on the local system.

- <details>

+<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --resume wandb-artifact://{run_path} </code>

- </details>

- <h3> 6: Resume runs from dataset artifact & checkpoint artifacts. </h3>

+</details>

+

+<h3> 6: Resume runs from dataset artifact & checkpoint artifacts. </h3>

<b> Local dataset or model checkpoints are not required. This can be used to resume runs directly on a different device </b>

The syntax is same as the previous section, but you'll need to lof both the dataset and model checkpoints as artifacts, i.e, set bot <code>--upload_dataset</code> or

train from <code>_wandb.yaml</code> file and set <code>--save_period</code>

- <details>

+<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --resume wandb-artifact://{run_path} </code>

- </details>

</details>

- <h3> Reports </h3>

+</details>

+

+<h3> Reports </h3>

W&B Reports can be created from your saved runs for sharing online. Once a report is created you will receive a link you can use to publically share your results. Here is an example report created from the COCO128 tutorial trainings of all four YOLOv5 models ([link](https://wandb.ai/glenn-jocher/yolov5_tutorial/reports/YOLOv5-COCO128-Tutorial-Results--VmlldzozMDI5OTY)).

<img width="900" alt="Weights & Biases Reports" src="https://user-images.githubusercontent.com/26833433/135394029-a17eaf86-c6c1-4b1d-bb80-b90e83aaffa7.png">

-

## Environments

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including [CUDA](https://developer.nvidia.com/cuda)/[CUDNN](https://developer.nvidia.com/cudnn), [Python](https://www.python.org/) and [PyTorch](https://pytorch.org/) preinstalled):

@@ -144,7 +155,6 @@ YOLOv5 may be run in any of the following up-to-date verified environments (with

- **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/AWS-Quickstart)

- **Docker Image**. See [Docker Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/Docker-Quickstart) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

-

## Status