-YOLOv5 🚀 is the world's most loved vision AI, representing Ultralytics open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.
+Ultralytics YOLOv5 🚀 is a cutting-edge, state-of-the-art (SOTA) computer vision model developed by [Ultralytics](https://www.ultralytics.com/). Based on the PyTorch framework, YOLOv5 is renowned for its ease of use, speed, and accuracy. It incorporates insights and best practices from extensive research and development, making it a popular choice for a wide range of vision AI tasks, including [object detection](https://docs.ultralytics.com/tasks/detect/), [image segmentation](https://docs.ultralytics.com/tasks/segment/), and [image classification](https://docs.ultralytics.com/tasks/classify/).

-We hope that the resources here will help you get the most out of YOLOv5. Please browse the YOLOv5 Docs for details, raise an issue on GitHub for support, and join our Discord community for questions and discussions!
+We hope the resources here help you get the most out of YOLOv5. Please browse the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5/) for detailed information, raise an issue on [GitHub](https://github.com/ultralytics/yolov5/issues/new/choose) for support, and join our [Discord community](https://discord.com/invite/ultralytics) for questions and discussions!

-To request an Enterprise License please complete the form at [Ultralytics Licensing](https://www.ultralytics.com/license).
+To request an Enterprise License, please complete the form at [Ultralytics Licensing](https://www.ultralytics.com/license).

@@ -43,59 +45,67 @@ To request an Enterprise License please complete the form at [Ultralytics Licens

-## YOLO11 🚀 NEW

+## 🚀 YOLO11: The Next Evolution

-We are excited to unveil the launch of Ultralytics YOLO11 🚀, the latest advancement in our state-of-the-art (SOTA) vision models! Available now at **[GitHub](https://github.com/ultralytics/ultralytics)**, YOLO11 builds on our legacy of speed, precision, and ease of use. Whether you're tackling object detection, image segmentation, or image classification, YOLO11 delivers the performance and versatility needed to excel in diverse applications.

+We are excited to announce the launch of **Ultralytics YOLO11** 🚀, the latest advancement in our state-of-the-art (SOTA) vision models! Available now at the [Ultralytics YOLO GitHub repository](https://github.com/ultralytics/ultralytics), YOLO11 builds on our legacy of speed, precision, and ease of use. Whether you're tackling [object detection](https://docs.ultralytics.com/tasks/detect/), [instance segmentation](https://docs.ultralytics.com/tasks/segment/), [pose estimation](https://docs.ultralytics.com/tasks/pose/), [image classification](https://docs.ultralytics.com/tasks/classify/), or [oriented object detection (OBB)](https://docs.ultralytics.com/tasks/obb/), YOLO11 delivers the performance and versatility needed to excel in diverse applications.

Get started today and unlock the full potential of YOLO11! Visit the [Ultralytics Docs](https://docs.ultralytics.com/) for comprehensive guides and resources:

[PyPI version](https://badge.fury.io/py/ultralytics) [Downloads](https://www.pepy.tech/projects/ultralytics)

```bash

+# Install the ultralytics package

pip install ultralytics

```

-## Documentation

+## 📚 Documentation

-See the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5/) for full documentation on training, testing and deployment. See below for quickstart examples.

+See the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5/) for full documentation on training, testing, and deployment. See below for quickstart examples.

Install

-Clone repo and install [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a [**Python>=3.8.0**](https://www.python.org/) environment, including [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/).
+Clone the repository and install dependencies from [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a [**Python>=3.8.0**](https://www.python.org/) environment. Ensure you have [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/) installed.

```bash
-git clone https://github.com/ultralytics/yolov5 # clone
+# Clone the YOLOv5 repository
+git clone https://github.com/ultralytics/yolov5
+
+# Navigate to the cloned directory
cd yolov5
-pip install -r requirements.txt # install
+
+# Install required packages
+pip install -r requirements.txt
```
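Before cloning, it can help to confirm the Python>=3.8.0 and PyTorch>=1.8 requirements. The sketch below uses hypothetical helper names and is not part of YOLOv5. It compares numeric version components rather than raw strings, because a plain string comparison would wrongly rank `"1.13"` below `"1.8"`; local build suffixes such as `+cu118` are ignored, which is an assumption that suits simple minimum-version checks.

```python
import re
import sys


def parse_version(version: str) -> tuple:
    """Extract the leading numeric components, e.g. '2.1.0+cu118' -> (2, 1, 0)."""
    match = re.match(r"(\d+(?:\.\d+)*)", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(installed: str, required: str) -> bool:
    """Return True if installed >= required, padding the shorter tuple with zeros."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    python_ok = sys.version_info >= (3, 8, 0)
    print(f"Python {sys.version.split()[0]}: {'OK' if python_ok else 'needs >=3.8.0'}")
    try:
        import torch  # only importable once PyTorch is installed

        torch_ok = meets_minimum(torch.__version__, "1.8")
        print(f"PyTorch {torch.__version__}: {'OK' if torch_ok else 'needs >=1.8'}")
    except ImportError:
        print("PyTorch not found; see https://pytorch.org/get-started/locally/")
```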

-

@@ -103,19 +113,38 @@ results.print()

Inference

+Inference with PyTorch Hub

-YOLOv5 [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).
+Use YOLOv5 via [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) for inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) are automatically downloaded from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

```python
import torch

-# Load YOLOv5 model (options: yolov5n, yolov5s, yolov5m, yolov5l, yolov5x)
-model = torch.hub.load("ultralytics/yolov5", "yolov5s")
+# Load a YOLOv5 model (options: yolov5n, yolov5s, yolov5m, yolov5l, yolov5x)
+model = torch.hub.load("ultralytics/yolov5", "yolov5s")  # Small variant; swap in any option above

-# Input source (URL, file, PIL, OpenCV, numpy array, or list)
-img = "https://ultralytics.com/images/zidane.jpg"
+# Define the input image source (URL, local file, PIL image, OpenCV frame, numpy array, or list)
+img = "https://ultralytics.com/images/zidane.jpg"  # Example image

-# Perform inference (handles batching, resizing, normalization)
+# Perform inference (handles batching, resizing, normalization automatically)
results = model(img)

-# Process results (options: .print(), .show(), .save(), .crop(), .pandas())
-results.print()
+# Process the results (options: .print(), .show(), .save(), .crop(), .pandas())
+results.print()  # Print results to console
+results.show()  # Display results in a window
+results.save()  # Save results to runs/detect/exp
```

Inference with detect.py

-`detect.py` runs inference on a variety of sources, downloading [models](https://github.com/ultralytics/yolov5/tree/master/models) automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.
+The `detect.py` script runs inference on various sources. It automatically downloads [models](https://github.com/ultralytics/yolov5/tree/master/models) from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saves the results to the `runs/detect` directory.

```bash
-python detect.py --weights yolov5s.pt --source 0 # webcam
-python detect.py --weights yolov5s.pt --source img.jpg # image
-python detect.py --weights yolov5s.pt --source vid.mp4 # video
-python detect.py --weights yolov5s.pt --source screen # screenshot
-python detect.py --weights yolov5s.pt --source path/ # directory
-python detect.py --weights yolov5s.pt --source list.txt # list of images
-python detect.py --weights yolov5s.pt --source list.streams # list of streams
-python detect.py --weights yolov5s.pt --source 'path/*.jpg' # glob
-python detect.py --weights yolov5s.pt --source 'https://youtu.be/LNwODJXcvt4' # YouTube
-python detect.py --weights yolov5s.pt --source 'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream
+# Run inference using a webcam
+python detect.py --weights yolov5s.pt --source 0
+
+# Run inference on a local image file
+python detect.py --weights yolov5s.pt --source img.jpg
+
+# Run inference on a local video file
+python detect.py --weights yolov5s.pt --source vid.mp4
+
+# Run inference on a screen capture
+python detect.py --weights yolov5s.pt --source screen
+
+# Run inference on a directory of images
+python detect.py --weights yolov5s.pt --source path/to/images/
+
+# Run inference on a text file listing image paths
+python detect.py --weights yolov5s.pt --source list.txt
+
+# Run inference on a text file listing stream URLs
+python detect.py --weights yolov5s.pt --source list.streams
+
+# Run inference using a glob pattern for images
+python detect.py --weights yolov5s.pt --source 'path/to/*.jpg'
+
+# Run inference on a YouTube video URL
+python detect.py --weights yolov5s.pt --source 'https://youtu.be/LNwODJXcvt4'
+
+# Run inference on an RTSP, RTMP, or HTTP stream
+python detect.py --weights yolov5s.pt --source 'rtsp://example.com/media.mp4'
```

Training

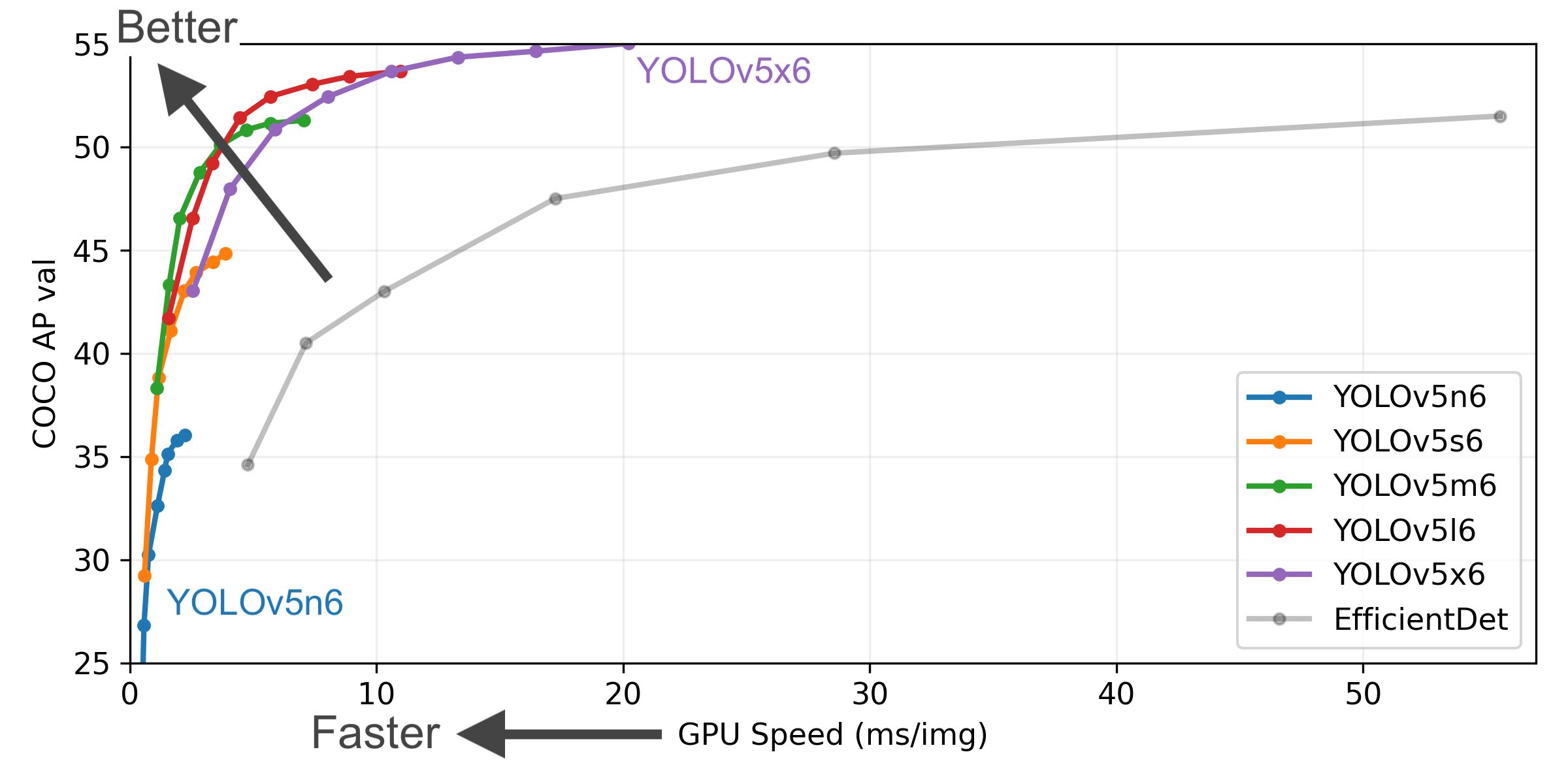

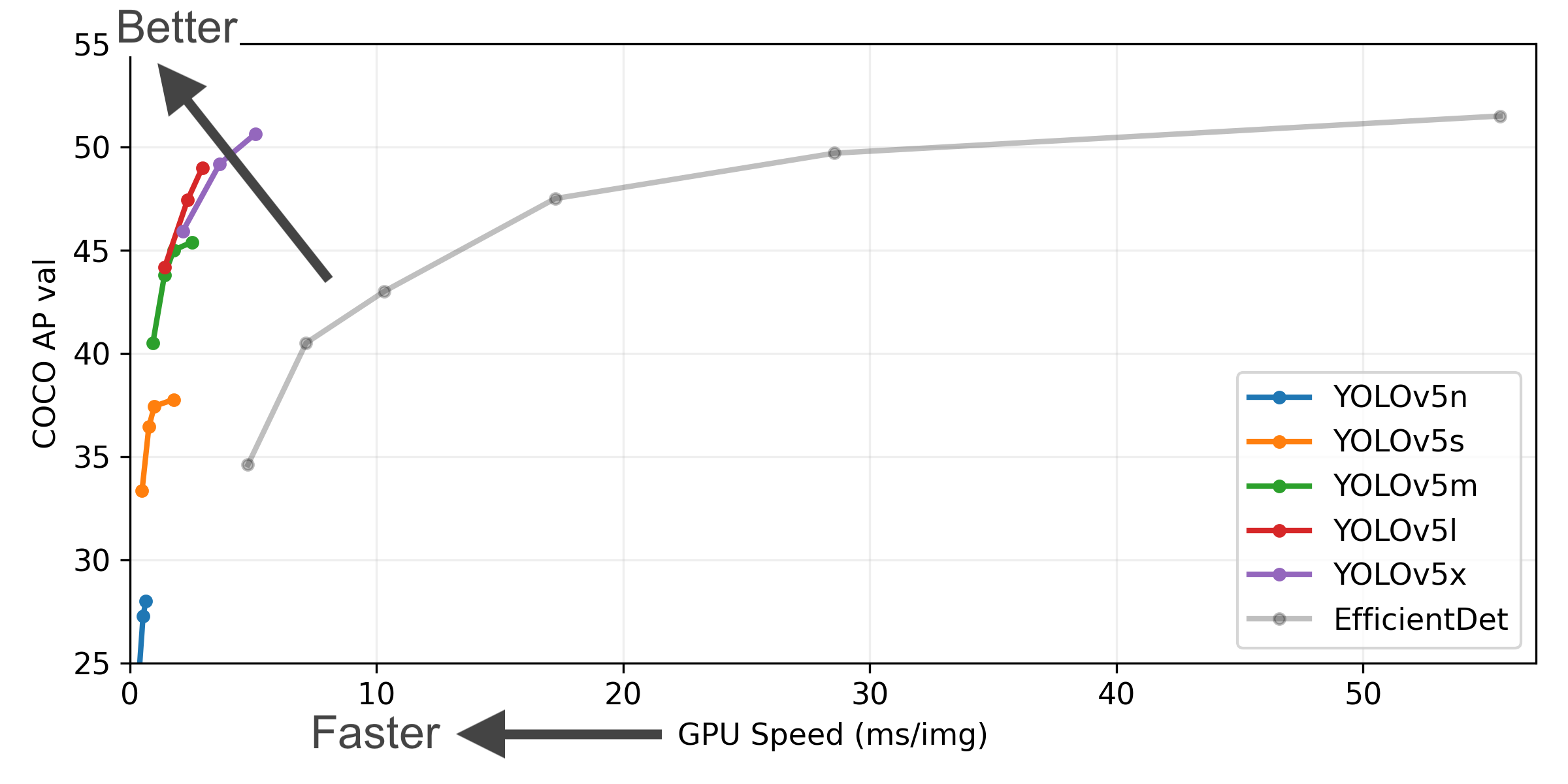

-The commands below reproduce YOLOv5 [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh) results. [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are 1/2/4/6/8 days on a V100 GPU ([Multi-GPU](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/) times faster). Use the largest `--batch-size` possible, or pass `--batch-size -1` for YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). Batch sizes shown for V100-16GB.
+The commands below demonstrate how to reproduce YOLOv5 [COCO dataset](https://docs.ultralytics.com/datasets/detect/coco/) results. Both [models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) are downloaded automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are approximately 1/2/4/6/8 days on a single V100 GPU. Using [Multi-GPU training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/) can significantly reduce training time. Use the largest `--batch-size` your hardware allows, or use `--batch-size -1` for YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). The batch sizes shown below are for V100-16GB GPUs.
```bash
+# Train YOLOv5n on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128
+
+# Train YOLOv5s on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5s.yaml --batch-size 64
+
+# Train YOLOv5m on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5m.yaml --batch-size 40
+
+# Train YOLOv5l on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5l.yaml --batch-size 24
+
+# Train YOLOv5x on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --batch-size 16
```
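AutoBatch (`--batch-size -1`) picks a batch size from measured GPU memory. As we understand YOLOv5's implementation, it profiles memory use at a few small batch sizes, fits a straight line, and solves for the batch size that would occupy a target fraction of free memory. The stdlib-only sketch below illustrates that idea and is not YOLOv5's code; the `fraction=0.8` default and the memory profile in the example are assumptions, not measurements.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate_batch_size(batch_sizes, memory_gb, free_gb, fraction=0.8):
    """Solve slope * b + intercept = fraction * free_gb for b, rounding down."""
    slope, intercept = fit_line(batch_sizes, memory_gb)
    return int((fraction * free_gb - intercept) / slope)


if __name__ == "__main__":
    # Hypothetical profile: 0.5 GB fixed overhead plus 0.2 GB per image
    sizes = [1, 2, 4, 8, 16]
    memory = [0.5 + 0.2 * b for b in sizes]
    print(estimate_batch_size(sizes, memory, free_gb=15.0))  # prints 57
```

With 15 GB free and a 0.8 target, the fitted line gives (12.0 - 0.5) / 0.2 = 57.5 images, rounded down to 57. A linear fit is a reasonable approximation because activation memory grows roughly in proportion to batch size.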

+

Tutorials

-- [Train Custom Data](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data/) 🚀 RECOMMENDED
-- [Tips for Best Training Results](https://docs.ultralytics.com/guides/model-training-tips/) ☘️
-- [Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)
-- [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) 🌟 NEW
-- [TFLite, ONNX, CoreML, TensorRT Export](https://docs.ultralytics.com/yolov5/tutorials/model_export/) 🚀
-- [NVIDIA Jetson platform Deployment](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano/) 🌟 NEW
-- [Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)
-- [Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)
-- [Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)
-- [Hyperparameter Evolution](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution/)
-- [Transfer Learning with Frozen Layers](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers/)
-- [Architecture Summary](https://docs.ultralytics.com/yolov5/tutorials/architecture_description/) 🌟 NEW
-- [Ultralytics HUB to train and deploy YOLO](https://www.ultralytics.com/hub) 🚀 RECOMMENDED
-- [ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)
-- [YOLOv5 with Neural Magic's Deepsparse](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)
-- [Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/) 🌟 NEW
+- **[Train Custom Data](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data/)** 🚀 **RECOMMENDED**: Learn how to train YOLOv5 on your own datasets.
+- **[Tips for Best Training Results](https://docs.ultralytics.com/guides/model-training-tips/)** ☘️: Improve your model's performance with expert tips.
+- **[Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)**: Speed up training using multiple GPUs.
+- **[PyTorch Hub Integration](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/)** 🌟 **NEW**: Easily load models using PyTorch Hub.
+- **[Model Export (TFLite, ONNX, CoreML, TensorRT)](https://docs.ultralytics.com/yolov5/tutorials/model_export/)** 🚀: Convert your models to various deployment formats.
+- **[NVIDIA Jetson Deployment](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano/)** 🌟 **NEW**: Deploy YOLOv5 on NVIDIA Jetson devices.
+- **[Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)**: Enhance prediction accuracy with TTA.
+- **[Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)**: Combine multiple models for better performance.
+- **[Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)**: Optimize models for size and speed.
+- **[Hyperparameter Evolution](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution/)**: Automatically find the best training hyperparameters.
+- **[Transfer Learning with Frozen Layers](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers/)**: Adapt pretrained models to new tasks efficiently.
+- **[Architecture Summary](https://docs.ultralytics.com/yolov5/tutorials/architecture_description/)** 🌟 **NEW**: Understand the YOLOv5 model architecture.
+- **[Ultralytics HUB Training](https://www.ultralytics.com/hub)** 🚀 **RECOMMENDED**: Train and deploy YOLO models using Ultralytics HUB.
+- **[ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)**: Integrate with ClearML for experiment tracking.
+- **[Neural Magic DeepSparse Integration](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)**: Accelerate inference with DeepSparse.
+- **[Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **NEW**: Log experiments using Comet ML.

Integrations

+## 🛠️ Integrations

-Our key integrations with leading AI platforms extend the functionality of Ultralytics' offerings, enhancing tasks like dataset labeling, training, visualization, and model management. Discover how Ultralytics, in collaboration with [W&B](https://docs.wandb.ai/guides/integrations/ultralytics/), [Comet](https://bit.ly/yolov8-readme-comet), [Roboflow](https://roboflow.com/?ref=ultralytics) and [OpenVINO](https://docs.ultralytics.com/integrations/openvino/), can optimize your AI workflow.

+Explore Ultralytics' key integrations with leading AI platforms. These collaborations enhance capabilities for dataset labeling, training, visualization, and model management. Discover how Ultralytics works with [Weights & Biases (W&B)](https://docs.wandb.ai/guides/integrations/ultralytics/), [Comet ML](https://bit.ly/yolov5-readme-comet), [Roboflow](https://roboflow.com/?ref=ultralytics), and [Intel OpenVINO](https://docs.ultralytics.com/integrations/openvino/) to optimize your AI workflows.

-

+

+

@@ -174,49 +212,51 @@ Our key integrations with leading AI platforms extend the functionality of Ultra

+

+

+

+

+

+

-

-

+## 📜 License

-##

+## 📜 License

-##

-## About ClearML

+## ℹ️ About ClearML

-[ClearML](https://clear.ml/) is an [open-source](https://github.com/clearml/clearml) toolbox designed to save you time ⏱️.

+[ClearML](https://clear.ml/) is an [open-source](https://github.com/clearml/clearml) MLOps platform designed to streamline your machine learning workflow and save you valuable time ⏱️. Integrating ClearML with Ultralytics YOLOv5 allows you to leverage a powerful suite of tools:

-🔨 Track every YOLOv5 training run in the experiment manager

+- **Experiment Management:** 🔨 Track every [YOLOv5](https://docs.ultralytics.com/models/yolov5/) training run, including parameters, metrics, and outputs. See the [Ultralytics ClearML integration guide](https://docs.ultralytics.com/integrations/clearml/) for more details.

+- **Data Versioning:** 🔧 Version and easily access your custom training data using the integrated ClearML Data Versioning Tool, similar to concepts in [DVC integration](https://docs.ultralytics.com/integrations/dvc/).

+- **Remote Execution:** 🔦 [Remotely train and monitor](https://docs.ultralytics.com/hub/cloud-training/) your YOLOv5 models using ClearML Agent.

+- **Hyperparameter Optimization:** 🔬 Achieve optimal [Mean Average Precision (mAP)](https://docs.ultralytics.com/guides/yolo-performance-metrics/) using ClearML's [Hyperparameter Optimization](https://docs.ultralytics.com/guides/hyperparameter-tuning/) capabilities.

+- **Model Deployment:** 🔭 Turn your trained YOLOv5 model into an API with just a few commands using ClearML Serving, complementing [Ultralytics deployment options](https://docs.ultralytics.com/guides/model-deployment-options/).

-🔧 Version and easily access your custom training data with the integrated ClearML Data Versioning Tool

-

-🔦 Remotely train and monitor your YOLOv5 training runs using ClearML Agent

-

-🔬 Get the very best mAP using ClearML Hyperparameter Optimization

-

-🔭 Turn your newly trained YOLOv5 model into an API with just a few commands using ClearML Serving

-

-And so much more. It's up to you how many of these tools you want to use, you can stick to the experiment manager, or chain them all together into an impressive pipeline!

+You can choose to use only the experiment manager or combine multiple tools into a comprehensive MLOps pipeline.

## 🦾 Setting Things Up

-To keep track of your experiments and/or data, ClearML needs to communicate to a server. You have 2 options to get one:

+ClearML requires communication with a server to track experiments and data. You have two main options:

-Either sign up for free to the [ClearML Hosted Service](https://clear.ml/) or you can set up your own server, see [here](https://clear.ml/docs/latest/docs/deploying_clearml/clearml_server). Even the server is open-source, so even if you're dealing with sensitive data, you should be good to go!

+1. **ClearML Hosted Service:** Sign up for a free account at [app.clear.ml](https://app.clear.ml/).

+2. **Self-Hosted Server:** Set up your own ClearML server. Find instructions [here](https://clear.ml/docs/latest/docs/deploying_clearml/clearml_server). The server is also open-source, so you can keep sensitive data on your own infrastructure.

-1. Install the `clearml` python package:

+Follow these steps to get started:

- ```bash

- pip install clearml

- ```

+1. Install the `clearml` Python package:

+ ```bash

+ pip install clearml

+ ```

+ *Note: `clearml` is an optional dependency of YOLOv5, so install it explicitly even after installing `requirements.txt`.*

-2. Connect the ClearML SDK to the server by [creating credentials](https://app.clear.ml/settings/workspace-configuration) (go right top to Settings -> Workspace -> Create new credentials), then execute the command below and follow the instructions:

+2. Connect the ClearML SDK to your server. [Create credentials](https://app.clear.ml/settings/workspace-configuration) (Settings -> Workspace -> Create new credentials), then run the following command and follow the prompts:

+ ```bash

+ clearml-init

+ ```

- ```bash

- clearml-init

- ```

-

-That's it! You're done 😎

+That's it! You're ready to integrate ClearML with your YOLOv5 projects 😎. For a general Ultralytics setup, see the [Quickstart Guide](https://docs.ultralytics.com/quickstart/).
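If you script your setup, a quick heuristic can tell whether `clearml-init` has already been run. The sketch below is hypothetical and rests on two assumptions: that `clearml-init` writes its configuration to `~/clearml.conf` by default, and that credentials may alternatively be supplied through the `CLEARML_API_ACCESS_KEY` and `CLEARML_API_SECRET_KEY` environment variables. It does not validate the keys against the server.

```python
import os
from pathlib import Path


def clearml_credentials_found(home=None, env=None):
    """Heuristic: True if clearml.conf exists in `home` or both API key env vars are set."""
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    if (home / "clearml.conf").is_file():  # assumed default location written by clearml-init
        return True
    # Assumed alternative: credentials supplied through environment variables
    return bool(env.get("CLEARML_API_ACCESS_KEY")) and bool(env.get("CLEARML_API_SECRET_KEY"))


if __name__ == "__main__":
    if clearml_credentials_found():
        print("ClearML credentials found")
    else:
        print("No ClearML credentials found; run `clearml-init` first")
```

The `home` and `env` parameters exist only so the check can be exercised against a temporary directory instead of your real home folder.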

## 🚀 Training YOLOv5 With ClearML

-To enable ClearML experiment tracking, simply install the ClearML pip package.

-

-```bash

-pip install clearml

-```

-

-This will enable integration with the YOLOv5 training script. Every training run from now on, will be captured and stored by the ClearML experiment manager.

-

-If you want to change the `project_name` or `task_name`, use the `--project` and `--name` arguments of the `train.py` script, by default the project will be called `YOLOv5` and the task `Training`. PLEASE NOTE: ClearML uses `/` as a delimiter for subprojects, so be careful when using `/` in your project name!

+ClearML experiment tracking is automatically enabled when the `clearml` package is installed. Every YOLOv5 [training run](https://docs.ultralytics.com/modes/train/) will be captured and stored in the ClearML experiment manager.

+

+To customize the project or task name in ClearML, use the `--project` and `--name` arguments when running `train.py`. By default, the project is `YOLOv5` and the task is `Training`. Note that ClearML uses `/` as a subproject delimiter, so a `/` in `--project` creates nested subprojects.

+

+**Example Training Command:**

```bash

+# Train YOLOv5s on COCO128 dataset for 3 epochs

python train.py --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --cache

```

-or with custom project and task name:

+**Example with Custom Project and Task Names:**

```bash

-python train.py --project my_project --name my_training --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --cache

+# Train with custom names

+python train.py --project my_yolo_project --name experiment_001 --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --cache

```
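The default names come from how YOLOv5's ClearML logger translates `train.py` arguments. The sketch below approximates that mapping under an assumption not verified for every release: a `--project` under the default local `runs/` directory becomes `YOLOv5`, and the default `--name exp` becomes `Training`. It also shows how a `/` in a project name would split into nested subprojects. Both helper names are hypothetical; check `utils/loggers/clearml/clearml_utils.py` in your checkout for the exact logic.

```python
def clearml_names(project="runs/train", name="exp"):
    """Approximate mapping from train.py --project/--name to ClearML project/task names."""
    project_name = "YOLOv5" if str(project).startswith("runs/") else str(project)
    task_name = "Training" if name == "exp" else name
    return project_name, task_name


def project_levels(project_name):
    """Split a ClearML project name on '/', the subproject delimiter."""
    return [level for level in project_name.split("/") if level]


if __name__ == "__main__":
    print(clearml_names())  # ('YOLOv5', 'Training')
    print(clearml_names("my_yolo_project", "experiment_001"))  # ('my_yolo_project', 'experiment_001')
    # A '/' nests projects: 'team/yolo' is subproject 'yolo' under 'team'
    print(project_levels("team/yolo"))  # ['team', 'yolo']
```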

-This will capture:

+ClearML automatically captures comprehensive information about your training run:

-- Source code + uncommitted changes

-- Installed packages

-- (Hyper)parameters

-- Model files (use `--save-period n` to save a checkpoint every n epochs)

-- Console output

-- Scalars (mAP_0.5, mAP_0.5:0.95, precision, recall, losses, learning rates, ...)

-- General info such as machine details, runtime, creation date etc.

-- All produced plots such as label correlogram and confusion matrix

-- Images with bounding boxes per epoch

-- Mosaic per epoch

-- Validation images per epoch

-- ...

+- Source code and uncommitted changes

+- Installed Python packages

+- Hyperparameters and configuration settings

+- Model checkpoints (use `--save-period n` to save every `n` epochs)

+- Console output logs

+- Performance metrics ([mAP_0.5](https://docs.ultralytics.com/guides/yolo-performance-metrics/), mAP_0.5:0.95, [precision, recall](https://docs.ultralytics.com/guides/yolo-performance-metrics/), [losses](https://docs.ultralytics.com/reference/utils/loss/), [learning rates](https://www.ultralytics.com/glossary/learning-rate), etc.)

+- System details (machine specs, runtime, creation date)

+- Generated plots (e.g., label correlogram, [confusion matrix](https://www.ultralytics.com/glossary/confusion-matrix))

+- Images with bounding boxes per epoch

+- Mosaic augmentation previews per epoch

+- Validation images per epoch
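The `--save-period n` option can be made concrete with a little arithmetic. The sketch below assumes the rule used by YOLOv5's `train.py` at the time of writing: an extra `epoch{N}.pt` checkpoint is written whenever the zero-based epoch index is divisible by `n`, and the default value of `-1` disables periodic saving. The helper name is hypothetical; verify the rule against your YOLOv5 version.

```python
def periodic_checkpoint_epochs(total_epochs, save_period, start_epoch=0):
    """Zero-based epochs at which an extra 'epoch{N}.pt' checkpoint would be saved."""
    if save_period < 1:  # assumed: values below 1 (default -1) disable periodic saving
        return []
    return [epoch for epoch in range(start_epoch, total_epochs) if epoch % save_period == 0]


if __name__ == "__main__":
    # 300 epochs with --save-period 50 -> checkpoints at epochs 0, 50, ..., 250
    print(periodic_checkpoint_epochs(300, 50))
```

Under this assumption the final epoch (299 here) is not saved as `epoch299.pt`; the end-of-training weights are covered by YOLOv5's separate `last.pt` and `best.pt` files instead.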

-That's a lot right? 🤯 Now, we can visualize all of this information in the ClearML UI to get an overview of our training progress. Add custom columns to the table view (such as e.g. mAP_0.5) so you can easily sort on the best performing model. Or select multiple experiments and directly compare them!

-

-There even more we can do with all of this information, like hyperparameter optimization and remote execution, so keep reading if you want to see how that works!

+This wealth of information 🤯 can be visualized in the ClearML UI. You can customize table views, sort experiments by metrics like mAP, and directly compare multiple runs. This detailed tracking enables advanced features like hyperparameter optimization and remote execution.

## 🔗 Dataset Version Management

-Versioning your data separately from your code is generally a good idea and makes it easy to acquire the latest version too. This repository supports supplying a dataset version ID, and it will make sure to get the data if it's not there yet. Next to that, this workflow also saves the used dataset ID as part of the task parameters, so you will always know for sure which data was used in which experiment!

+Versioning your [datasets](https://docs.ultralytics.com/datasets/) separately from code is crucial for reproducibility and collaboration. ClearML's Data Versioning Tool helps manage this process. YOLOv5 supports using ClearML dataset version IDs, automatically downloading the data if needed. The dataset ID used is saved as a task parameter, ensuring you always know which data version was used for each experiment.

### Prepare Your Dataset

-The YOLOv5 repository supports a number of different datasets by using yaml files containing their information. By default datasets are downloaded to the `../datasets` folder in relation to the repository root folder. So if you downloaded the `coco128` dataset using the link in the yaml or with the scripts provided by yolov5, you get this folder structure:

+YOLOv5 uses [YAML](https://www.ultralytics.com/glossary/yaml) files to define dataset configurations. By default, datasets are expected in the `../datasets` directory relative to the repository root. For example, the [COCO128 dataset](https://docs.ultralytics.com/datasets/detect/coco128/) structure looks like this:

```

-..

-|_ yolov5

-|_ datasets

- |_ coco128

- |_ images

- |_ labels

- |_ LICENSE

- |_ README.txt

+../

+├── yolov5/ # Your YOLOv5 repository clone

+└── datasets/

+ └── coco128/

+ ├── images/

+ ├── labels/

+ ├── LICENSE

+ └── README.txt

```

-But this can be any dataset you wish. Feel free to use your own, as long as you keep to this folder structure.

+Ensure your custom dataset follows a similar structure.
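A custom dataset's layout can be checked before it is versioned. The sketch below is a hypothetical helper: it confirms that `images/` and `labels/` exist and lists images with no matching label file, assuming YOLOv5's convention that `images/<sub>/name.jpg` pairs with `labels/<sub>/name.txt`. Unlabeled images are reported rather than rejected, because YOLOv5 treats them as background images. The suffix list is abbreviated and is itself an assumption.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # abbreviated list (assumption)


def check_dataset_layout(root):
    """Return (problems, unlabeled_images) for a YOLOv5-style dataset folder."""
    root = Path(root)
    problems = [f"missing directory: {d}/" for d in ("images", "labels") if not (root / d).is_dir()]
    unlabeled = []
    if not problems:
        for image in sorted((root / "images").rglob("*")):
            if image.suffix.lower() in IMAGE_SUFFIXES:
                # images/<sub>/name.jpg is expected to pair with labels/<sub>/name.txt
                label = root / "labels" / image.relative_to(root / "images").with_suffix(".txt")
                if not label.is_file():
                    unlabeled.append(image.relative_to(root).as_posix())
    return problems, unlabeled


if __name__ == "__main__":
    problems, unlabeled = check_dataset_layout("../datasets/coco128")
    print(problems or "layout OK", f"{len(unlabeled)} image(s) without labels")
```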

-Next, ⚠️**copy the corresponding yaml file to the root of the dataset folder**⚠️. This yaml files contains the information ClearML will need to properly use the dataset. You can make this yourself too, of course, just follow the structure of the example yamls.

-

-Basically we need the following keys: `path`, `train`, `test`, `val`, `nc`, `names`.

+Next, ⚠️**copy the corresponding dataset `.yaml` file into the root of your dataset folder**⚠️. This file contains essential information (`path`, `train`, `test`, `val`, `nc`, `names`) that ClearML needs.

```

-..

-|_ yolov5

-|_ datasets

- |_ coco128

- |_ images

- |_ labels

- |_ coco128.yaml # <---- HERE!

- |_ LICENSE

- |_ README.txt

+../

+└── datasets/

+ └── coco128/

+ ├── images/

+ ├── labels/

+ ├── coco128.yaml # <---- Place the YAML file here!

+ ├── LICENSE

+ └── README.txt

```
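The keys listed above can be checked before uploading. The sketch below is hypothetical and operates on a plain dict, assuming the YAML file has already been parsed (for example with PyYAML's `yaml.safe_load`). It checks only that each key is present, so an empty `test:` entry still counts, and that `nc` matches the number of `names`, which may be a list or an index-to-name dict.

```python
REQUIRED_KEYS = ("path", "train", "test", "val", "nc", "names")


def validate_dataset_config(cfg):
    """Return a list of problems with a parsed dataset config (empty list = looks OK)."""
    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if key not in cfg]
    names = cfg.get("names")
    if "nc" in cfg and names is not None:
        # 'names' may be a list ['person', ...] or a dict {0: 'person', ...}
        if len(names) != cfg["nc"]:
            problems.append(f"nc={cfg['nc']} but {len(names)} names given")
    return problems


if __name__ == "__main__":
    example = {
        "path": "../datasets/coco128",
        "train": "images/train2017",
        "val": "images/train2017",
        "test": None,  # an empty 'test:' entry parses to None
        "nc": 2,
        "names": {0: "person", 1: "bicycle"},  # truncated class list for illustration
    }
    print(validate_dataset_config(example) or "config OK")
```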

### Upload Your Dataset

-To get this dataset into ClearML as a versioned dataset, go to the dataset root folder and run the following command:

+Navigate to your dataset's root directory in the terminal and use the `clearml-data` CLI tool to upload it:

```bash

-cd coco128

-clearml-data sync --project YOLOv5 --name coco128 --folder .

+cd ../datasets/coco128

+clearml-data sync --project YOLOv5_Datasets --name coco128 --folder .

```

-The command `clearml-data sync` is actually a shorthand command. You could also run these commands one after the other:

+`clearml-data sync` is a shorthand; alternatively, you can run the equivalent commands one at a time:

```bash

-# Optionally add --parent

-## About ClearML

+## ℹ️ About ClearML

-[ClearML](https://clear.ml/) is an [open-source](https://github.com/clearml/clearml) toolbox designed to save you time ⏱️.

+[ClearML](https://clear.ml/) is an [open-source](https://github.com/clearml/clearml) MLOps platform designed to streamline your machine learning workflow and save you valuable time ⏱️. Integrating ClearML with Ultralytics YOLOv5 allows you to leverage a powerful suite of tools:

-🔨 Track every YOLOv5 training run in the experiment manager

+- **Experiment Management:** 🔨 Track every [YOLOv5](https://docs.ultralytics.com/models/yolov5/) training run, including parameters, metrics, and outputs. See the [Ultralytics ClearML integration guide](https://docs.ultralytics.com/integrations/clearml/) for more details.

+- **Data Versioning:** 🔧 Version and easily access your custom training data using the integrated ClearML Data Versioning Tool, similar to concepts in [DVC integration](https://docs.ultralytics.com/integrations/dvc/).

+- **Remote Execution:** 🔦 [Remotely train and monitor](https://docs.ultralytics.com/hub/cloud-training/) your YOLOv5 models using ClearML Agent.

+- **Hyperparameter Optimization:** 🔬 Achieve optimal [Mean Average Precision (mAP)](https://docs.ultralytics.com/guides/yolo-performance-metrics/) using ClearML's [Hyperparameter Optimization](https://docs.ultralytics.com/guides/hyperparameter-tuning/) capabilities.

+- **Model Deployment:** 🔭 Turn your trained YOLOv5 model into an API with just a few commands using ClearML Serving, complementing [Ultralytics deployment options](https://docs.ultralytics.com/guides/model-deployment-options/).

-🔧 Version and easily access your custom training data with the integrated ClearML Data Versioning Tool

-

-🔦 Remotely train and monitor your YOLOv5 training runs using ClearML Agent

-

-🔬 Get the very best mAP using ClearML Hyperparameter Optimization

-

-🔭 Turn your newly trained YOLOv5 model into an API with just a few commands using ClearML Serving

-

-And so much more. It's up to you how many of these tools you want to use, you can stick to the experiment manager, or chain them all together into an impressive pipeline!

+You can choose to use only the experiment manager or combine multiple tools into a comprehensive MLOps pipeline.

## 🦾 Setting Things Up

-To keep track of your experiments and/or data, ClearML needs to communicate to a server. You have 2 options to get one:

+ClearML requires communication with a server to track experiments and data. You have two main options:

-Either sign up for free to the [ClearML Hosted Service](https://clear.ml/) or you can set up your own server, see [here](https://clear.ml/docs/latest/docs/deploying_clearml/clearml_server). Even the server is open-source, so even if you're dealing with sensitive data, you should be good to go!

+1. **ClearML Hosted Service:** Sign up for a free account at [app.clear.ml](https://app.clear.ml/).

+2. **Self-Hosted Server:** Set up your own ClearML server. Find instructions [here](https://clear.ml/docs/latest/docs/deploying_clearml/clearml_server). The server is also open-source, ensuring data privacy.

-1. Install the `clearml` python package:

+Follow these steps to get started:

- ```bash

- pip install clearml

- ```

+1. Install the `clearml` Python package:

+ ```bash

+ pip install clearml

+ ```

+ *Note: This package is included in the `requirements.txt` of YOLOv5.*

-2. Connect the ClearML SDK to the server by [creating credentials](https://app.clear.ml/settings/workspace-configuration) (go right top to Settings -> Workspace -> Create new credentials), then execute the command below and follow the instructions:

+2. Connect the ClearML SDK to your server. [Create credentials](https://app.clear.ml/settings/workspace-configuration) (Settings -> Workspace -> Create new credentials), then run the following command and follow the prompts:

+ ```bash

+ clearml-init

+ ```

- ```bash

- clearml-init

- ```

-

-That's it! You're done 😎

+That's it! You're ready to integrate ClearML with your YOLOv5 projects 😎. For a general Ultralytics setup, see the [Quickstart Guide](https://docs.ultralytics.com/quickstart/).

## 🚀 Training YOLOv5 With ClearML

-To enable ClearML experiment tracking, simply install the ClearML pip package.

-

-```bash

-pip install clearml

-```

-

-This will enable integration with the YOLOv5 training script. Every training run from now on, will be captured and stored by the ClearML experiment manager.

-

-If you want to change the `project_name` or `task_name`, use the `--project` and `--name` arguments of the `train.py` script, by default the project will be called `YOLOv5` and the task `Training`. PLEASE NOTE: ClearML uses `/` as a delimiter for subprojects, so be careful when using `/` in your project name!

+ClearML experiment tracking is automatically enabled when the `clearml` package is installed. Every YOLOv5 [training run](https://docs.ultralytics.com/modes/train/) will be captured and stored in the ClearML experiment manager.

+

+To customize the project or task name in ClearML, use the `--project` and `--name` arguments when running `train.py`. By default, the project is `YOLOv5` and the task is `Training`. Note that ClearML uses `/` as a delimiter for subprojects, so a `/` in your project name creates a nested subproject.

+

+**Example Training Command:**

```bash

+# Train YOLOv5s on COCO128 dataset for 3 epochs

python train.py --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --cache

```

-or with custom project and task name:

+**Example with Custom Project and Task Names:**

```bash

-python train.py --project my_project --name my_training --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --cache

+# Train with custom names

+python train.py --project my_yolo_project --name experiment_001 --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --cache

```
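If you launch several runs from a script, the `train.py` flags shown in the commands above can be assembled programmatically. The sketch below is a hypothetical helper (`build_train_command` is not part of YOLOv5): it only reuses the documented arguments, leaves out `--project`/`--name` unless you pass them so the defaults still apply, and warns when the project name contains `/`, since ClearML would treat that as a subproject delimiter. It prints the commands rather than running them.

```python
from __future__ import annotations

import shlex
import warnings


def build_train_command(
    data: str = "coco128.yaml",
    weights: str = "yolov5s.pt",
    img: int = 640,
    batch: int = 16,
    epochs: int = 3,
    project: str | None = None,
    name: str | None = None,
    cache: bool = True,
) -> list[str]:
    """Assemble a YOLOv5 train.py command line (hypothetical helper, not a YOLOv5 API)."""
    cmd = ["python", "train.py"]
    # Omitting --project/--name keeps the defaults, which ClearML shows as YOLOv5 / Training.
    if project is not None:
        if "/" in project:
            warnings.warn(f"ClearML treats '/' in {project!r} as a subproject delimiter")
        cmd += ["--project", project]
    if name is not None:
        cmd += ["--name", name]
    cmd += ["--img", str(img), "--batch", str(batch), "--epochs", str(epochs)]
    cmd += ["--data", data, "--weights", weights]
    if cache:
        cmd.append("--cache")
    return cmd


if __name__ == "__main__":
    # One named run per hypothetical experiment ID; pass the list to subprocess.run(cmd) to execute.
    for i in range(1, 3):
        cmd = build_train_command(project="my_yolo_project", name=f"experiment_{i:03d}")
        print(shlex.join(cmd))
```

Keeping the command as a list (rather than one joined string) lets `subprocess.run` execute it without shell quoting concerns; `shlex.join` is used only for display.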

-This will capture:

+ClearML automatically captures comprehensive information about your training run:

-- Source code + uncommitted changes

-- Installed packages

-- (Hyper)parameters

-- Model files (use `--save-period n` to save a checkpoint every n epochs)

-- Console output

-- Scalars (mAP_0.5, mAP_0.5:0.95, precision, recall, losses, learning rates, ...)

-- General info such as machine details, runtime, creation date etc.

-- All produced plots such as label correlogram and confusion matrix

-- Images with bounding boxes per epoch

-- Mosaic per epoch

-- Validation images per epoch

-- ...

+- Source code and uncommitted changes

+- Installed Python packages

+- Hyperparameters and configuration settings

+- Model checkpoints (use `--save-period n` to save every `n` epochs)

+- Console output logs

+- Performance metrics ([mAP_0.5](https://docs.ultralytics.com/guides/yolo-performance-metrics/), mAP_0.5:0.95, [precision, recall](https://docs.ultralytics.com/guides/yolo-performance-metrics/), [losses](https://docs.ultralytics.com/reference/utils/loss/), [learning rates](https://www.ultralytics.com/glossary/learning-rate), etc.)

+- System details (machine specs, runtime, creation date)

+- Generated plots (e.g., label correlogram, [confusion matrix](https://www.ultralytics.com/glossary/confusion-matrix))

+- Images with bounding boxes per epoch

+- Mosaic augmentation previews per epoch

+- Validation images per epoch

-That's a lot right? 🤯 Now, we can visualize all of this information in the ClearML UI to get an overview of our training progress. Add custom columns to the table view (such as e.g. mAP_0.5) so you can easily sort on the best performing model. Or select multiple experiments and directly compare them!

-

-There even more we can do with all of this information, like hyperparameter optimization and remote execution, so keep reading if you want to see how that works!

+This wealth of information 🤯 can be visualized in the ClearML UI. You can customize table views, sort experiments by metrics like mAP, and directly compare multiple runs. This detailed tracking enables advanced features like hyperparameter optimization and remote execution.

## 🔗 Dataset Version Management

-Versioning your data separately from your code is generally a good idea and makes it easy to acquire the latest version too. This repository supports supplying a dataset version ID, and it will make sure to get the data if it's not there yet. Next to that, this workflow also saves the used dataset ID as part of the task parameters, so you will always know for sure which data was used in which experiment!

+Versioning your [datasets](https://docs.ultralytics.com/datasets/) separately from code is crucial for reproducibility and collaboration. ClearML's Data Versioning Tool helps manage this process. YOLOv5 supports using ClearML dataset version IDs, automatically downloading the data if needed. The dataset ID used is saved as a task parameter, ensuring you always know which data version was used for each experiment.

### Prepare Your Dataset

-The YOLOv5 repository supports a number of different datasets by using yaml files containing their information. By default datasets are downloaded to the `../datasets` folder in relation to the repository root folder. So if you downloaded the `coco128` dataset using the link in the yaml or with the scripts provided by yolov5, you get this folder structure:

+YOLOv5 uses [YAML](https://www.ultralytics.com/glossary/yaml) files to define dataset configurations. By default, datasets are expected in the `../datasets` directory relative to the repository root. For example, the [COCO128 dataset](https://docs.ultralytics.com/datasets/detect/coco128/) structure looks like this:

```

-..

-|_ yolov5

-|_ datasets

- |_ coco128

- |_ images

- |_ labels

- |_ LICENSE

- |_ README.txt

+../

+├── yolov5/ # Your YOLOv5 repository clone

+└── datasets/

+ └── coco128/

+ ├── images/

+ ├── labels/

+ ├── LICENSE

+ └── README.txt

```

-But this can be any dataset you wish. Feel free to use your own, as long as you keep to this folder structure.

+Ensure your custom dataset follows a similar structure.

-Next, ⚠️**copy the corresponding yaml file to the root of the dataset folder**⚠️. This yaml files contains the information ClearML will need to properly use the dataset. You can make this yourself too, of course, just follow the structure of the example yamls.

-

-Basically we need the following keys: `path`, `train`, `test`, `val`, `nc`, `names`.

+Next, ⚠️**copy the corresponding dataset `.yaml` file into the root of your dataset folder**⚠️. This file contains essential information (`path`, `train`, `test`, `val`, `nc`, `names`) that ClearML needs.

```

-..

-|_ yolov5

-|_ datasets

- |_ coco128

- |_ images

- |_ labels

- |_ coco128.yaml # <---- HERE!

- |_ LICENSE

- |_ README.txt

+../

+└── datasets/

+ └── coco128/

+ ├── images/

+ ├── labels/

+ ├── coco128.yaml # <---- Place the YAML file here!

+ ├── LICENSE

+ └── README.txt

```
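Before uploading, it can help to confirm that the dataset root matches the layout above. The following is a hypothetical pre-upload check, not part of YOLOv5 or ClearML: it looks for `images/`, `labels/`, and a `.yaml` file in the dataset root, then scans that file for the top-level keys this guide lists (`path`, `train`, `test`, `val`, `nc`, `names`). To avoid a PyYAML dependency it uses a simple line scan that assumes top-level keys start at column 0; adjust `REQUIRED_KEYS` if your dataset YAML differs.

```python
from __future__ import annotations

import re
import sys
from pathlib import Path

# Top-level keys this guide lists as needed by ClearML; `test` may be left empty.
REQUIRED_KEYS = {"path", "train", "test", "val", "nc", "names"}
# Matches an unindented YAML key such as "train:"; indented entries under `names` are ignored.
TOP_LEVEL_KEY = re.compile(r"^([A-Za-z_][A-Za-z0-9_]*)\s*:")


def top_level_keys(yaml_text: str) -> set[str]:
    """Collect unindented keys with a line scan (a rough stand-in for a real YAML parser)."""
    keys = set()
    for line in yaml_text.splitlines():
        match = TOP_LEVEL_KEY.match(line)
        if match:
            keys.add(match.group(1))
    return keys


def check_dataset_root(root: Path) -> list[str]:
    """Return problems found in a dataset root; an empty list means the layout looks OK."""
    problems = []
    for sub in ("images", "labels"):
        if not (root / sub).is_dir():
            problems.append(f"missing directory: {sub}/")
    yaml_files = sorted(root.glob("*.yaml"))
    if not yaml_files:
        problems.append("no .yaml file in dataset root")
    for yaml_file in yaml_files:
        missing = sorted(REQUIRED_KEYS - top_level_keys(yaml_file.read_text()))
        if missing:
            problems.append(f"{yaml_file.name}: missing keys {', '.join(missing)}")
    return problems


if __name__ == "__main__":
    # Hypothetical usage: python check_dataset.py ../datasets/coco128
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    issues = check_dataset_root(root)
    print("\n".join(issues) if issues else f"{root} looks ready for clearml-data sync")
```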

### Upload Your Dataset

-To get this dataset into ClearML as a versioned dataset, go to the dataset root folder and run the following command:

+Navigate to your dataset's root directory in the terminal and use the `clearml-data` CLI tool to upload it:

```bash

-cd coco128

-clearml-data sync --project YOLOv5 --name coco128 --folder .

+cd ../datasets/coco128

+clearml-data sync --project YOLOv5_Datasets --name coco128 --folder .

```

-The command `clearml-data sync` is actually a shorthand command. You could also run these commands one after the other:

+`clearml-data sync` is a shorthand. Alternatively, you can run the equivalent commands one after another:

```bash

-# Optionally add --parent

```

-# YOLOv5 with Comet

+# Using YOLOv5 with Comet

-This guide will cover how to use YOLOv5 with [Comet](https://bit.ly/yolov5-readme-comet2)

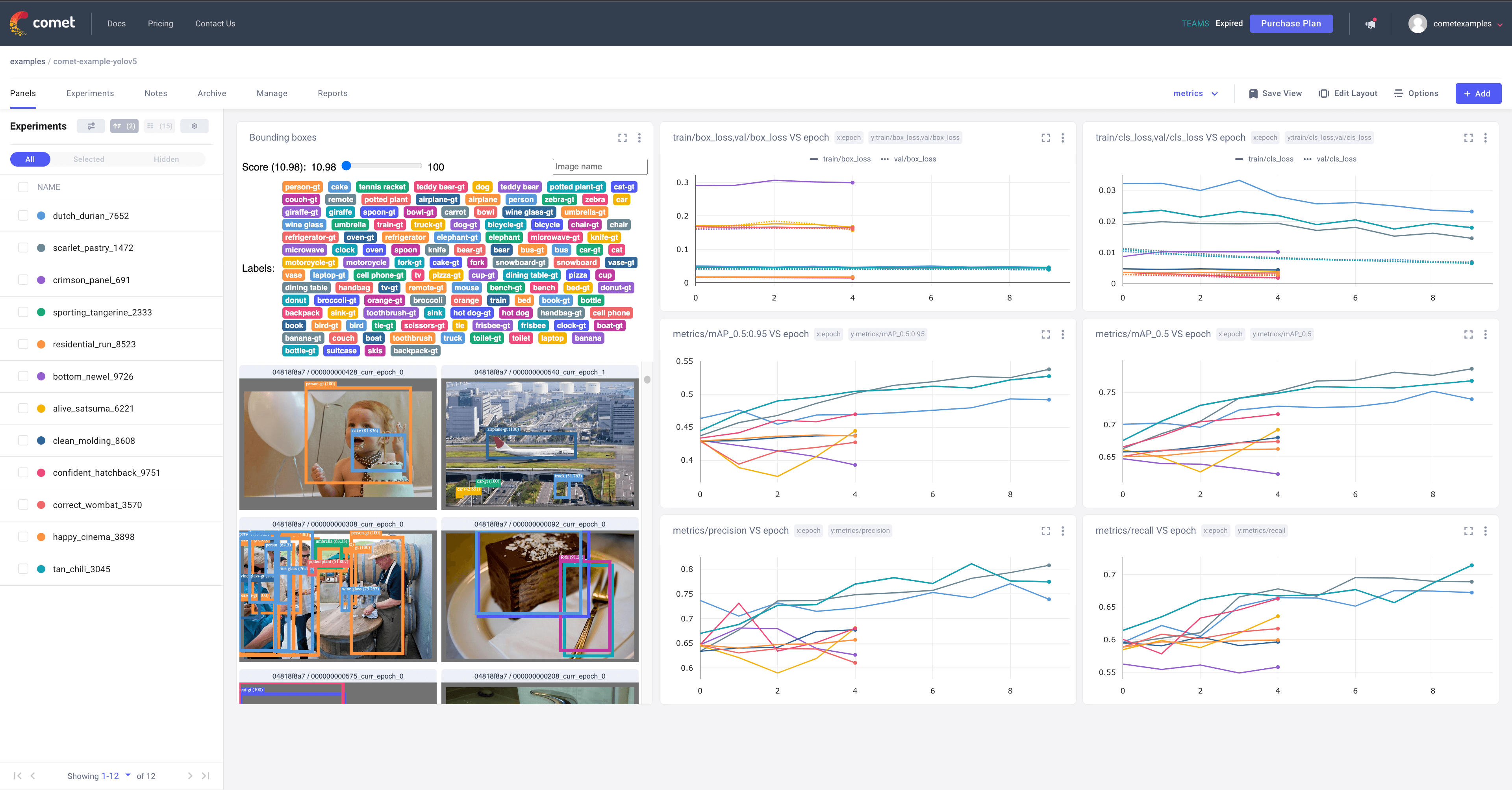

+Welcome to the guide on integrating [Ultralytics YOLOv5](https://github.com/ultralytics/yolov5) with [Comet](https://www.comet.com/site/?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github_readme)! Comet provides powerful tools for experiment tracking, model management, and visualization, enhancing your machine learning workflow. This document details how to leverage Comet to monitor training, log results, manage datasets, and optimize hyperparameters for your YOLOv5 models.

-# About Comet

+## 🧪 About Comet

-Comet builds tools that help data scientists, engineers, and team leaders accelerate and optimize machine learning and deep learning models.

+[Comet](https://www.comet.com/site/?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github_readme) builds tools that help data scientists, engineers, and team leaders accelerate and optimize machine learning and deep learning models.

-Track and visualize model metrics in real time, save your hyperparameters, datasets, and model checkpoints, and visualize your model predictions with [Comet Custom Panels](https://www.comet.com/docs/v2/guides/comet-dashboard/code-panels/about-panels/?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github)! Comet makes sure you never lose track of your work and makes it easy to share results and collaborate across teams of all sizes!

+Track and visualize model metrics in real-time, save your hyperparameters, datasets, and model checkpoints, and visualize your model predictions with [Comet Custom Panels](https://www.comet.com/docs/v2/guides/comet-dashboard/code-panels/about-panels/?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github_readme)! Comet ensures you never lose track of your work and makes it easy to share results and collaborate across teams of all sizes.

-# Getting Started

+## 🚀 Getting Started

-## Install Comet

+Follow these steps to set up Comet for your YOLOv5 projects.

+

+### Install Comet

+

+Install the necessary [Python package](https://pypi.org/project/comet-ml/) using pip:

```shell

pip install comet_ml

```
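Both `clearml` and `comet_ml` are ordinary Python packages, so you can confirm which of them are importable in the active environment with the standard library's `importlib.util.find_spec`. The sketch below is a hypothetical helper; it only checks that the packages are installed, not that credentials are configured.

```python
import importlib.util

# Import names of the logging packages covered in this guide (installed via pip as clearml and comet_ml).
INTEGRATIONS = ("clearml", "comet_ml")


def installed_integrations(names=INTEGRATIONS) -> dict:
    """Map each top-level package name to True if it can be imported here."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in installed_integrations().items():
        print(f"{name}: {'installed' if ok else 'not installed'}")
```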

-## Configure Comet Credentials

+### Configure Comet Credentials

-There are two ways to configure Comet with YOLOv5.

+You can configure Comet in two ways:

-You can either set your credentials through environment variables

+1. **Environment Variables:** Set your credentials directly in your environment.

+ ```shell

+ export COMET_API_KEY=


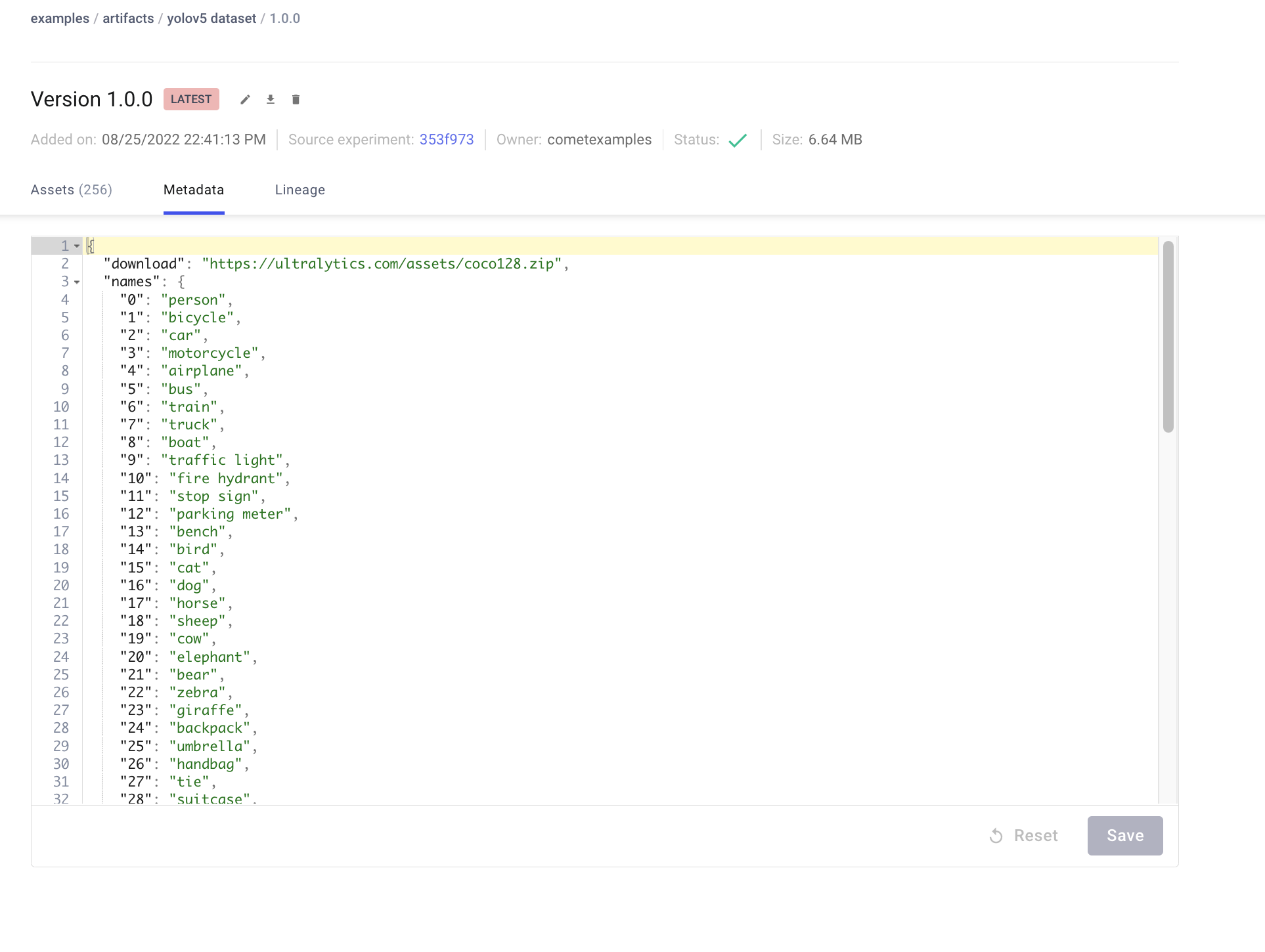

+View the uploaded dataset in the Artifacts tab of your Comet Workspace.

+

+Preview data directly in the Comet UI.

+

+Artifacts are versioned and support metadata. Comet automatically logs metadata from your dataset `yaml` file.

+

+Artifacts track data lineage, showing which experiments used specific dataset versions.

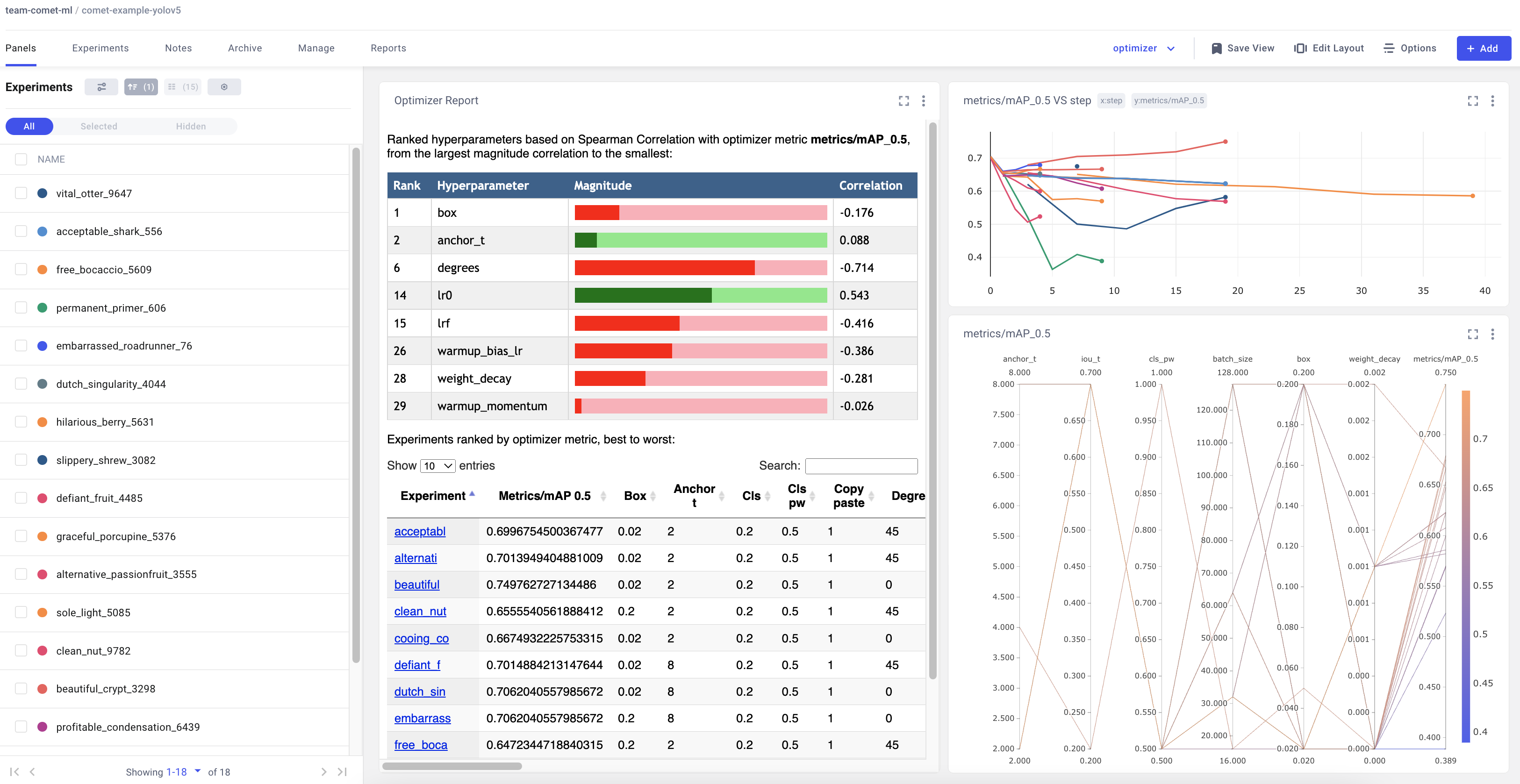

+Comet offers various visualizations for analyzing sweep results, such as parallel coordinate plots and parameter importance plots. Explore a [project with a completed sweep here](https://www.comet.com/examples/comet-example-yolov5/view/PrlArHGuuhDTKC1UuBmTtOSXD/panels?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github_readme).

+