Merge branch 'ultralytics:master' into master

commit

cddd180cc1

.github/workflows

models

utils

flask_rest_api

|

|

@@ -142,7 +142,7 @@ jobs:

|

|||

if: always()

|

||||

steps:

|

||||

- name: Check for failure and notify

|

||||

if: (needs.Benchmarks.result == 'failure' || needs.Tests.result == 'failure' || needs.Benchmarks.result == 'cancelled' || needs.Tests.result == 'cancelled') && github.repository == 'ultralytics/yolov5' && (github.event_name == 'schedule' || github.event_name == 'push')

|

||||

if: (needs.Benchmarks.result == 'failure' || needs.Tests.result == 'failure' || needs.Benchmarks.result == 'cancelled' || needs.Tests.result == 'cancelled') && github.repository == 'ultralytics/yolov5' && (github.event_name == 'schedule' || github.event_name == 'push') && github.run_attempt == '1'

|

||||

uses: slackapi/slack-github-action@v2.0.0

|

||||

with:

|

||||

webhook-type: incoming-webhook
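The only change to the notification step is the extra `github.run_attempt == '1'` clause, which restricts the Slack message to the first attempt of a run so that manual re-runs do not notify again. The boolean structure can be sketched in Python as follows; the function name `should_notify` and its parameters are hypothetical stand-ins for the workflow contexts, and this is an illustration of the logic rather than how GitHub Actions evaluates expressions internally.

```python
def should_notify(benchmarks_result, tests_result, repository, event_name, run_attempt):
    """Mirror the boolean structure of the updated `if:` expression (illustrative only)."""
    bad_results = {"failure", "cancelled"}
    # Either upstream job failed or was cancelled
    job_failed = benchmarks_result in bad_results or tests_result in bad_results
    # Only notify from the main repository, not from forks
    is_main_repo = repository == "ultralytics/yolov5"
    # Only notify for scheduled runs and pushes
    is_tracked_event = event_name in {"schedule", "push"}
    # New clause: skip notifications for re-runs of the same workflow run
    is_first_attempt = str(run_attempt) == "1"
    return job_failed and is_main_repo and is_tracked_event and is_first_attempt


print(should_notify("failure", "success", "ultralytics/yolov5", "push", 1))
print(should_notify("failure", "success", "ultralytics/yolov5", "push", 2))
```

With the old condition, both calls above would have resulted in a notification; with the new clause, only the first attempt does.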

|

||||

|

|

|

|||

371

README.md

|

|

@@ -7,7 +7,7 @@

|

|||

[中文](https://docs.ultralytics.com/zh) | [한국어](https://docs.ultralytics.com/ko) | [日本語](https://docs.ultralytics.com/ja) | [Русский](https://docs.ultralytics.com/ru) | [Deutsch](https://docs.ultralytics.com/de) | [Français](https://docs.ultralytics.com/fr) | [Español](https://docs.ultralytics.com/es) | [Português](https://docs.ultralytics.com/pt) | [Türkçe](https://docs.ultralytics.com/tr) | [Tiếng Việt](https://docs.ultralytics.com/vi) | [العربية](https://docs.ultralytics.com/ar)

|

||||

|

||||

<div>

|

||||

<a href="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv5 CI"></a>

|

||||

<a href="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv5 CI Testing"></a>

|

||||

<a href="https://zenodo.org/badge/latestdoi/264818686"><img src="https://zenodo.org/badge/264818686.svg" alt="YOLOv5 Citation"></a>

|

||||

<a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

|

||||

<a href="https://discord.com/invite/ultralytics"><img alt="Discord" src="https://img.shields.io/discord/1089800235347353640?logo=discord&logoColor=white&label=Discord&color=blue"></a> <a href="https://community.ultralytics.com/"><img alt="Ultralytics Forums" src="https://img.shields.io/discourse/users?server=https%3A%2F%2Fcommunity.ultralytics.com&logo=discourse&label=Forums&color=blue"></a> <a href="https://reddit.com/r/ultralytics"><img alt="Ultralytics Reddit" src="https://img.shields.io/reddit/subreddit-subscribers/ultralytics?style=flat&logo=reddit&logoColor=white&label=Reddit&color=blue"></a>

|

||||

|

|

@@ -18,84 +18,92 @@

|

|||

</div>

|

||||

<br>

|

||||

|

||||

YOLOv5 🚀 is the world's most loved vision AI, representing <a href="https://www.ultralytics.com/">Ultralytics</a> open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.

|

||||

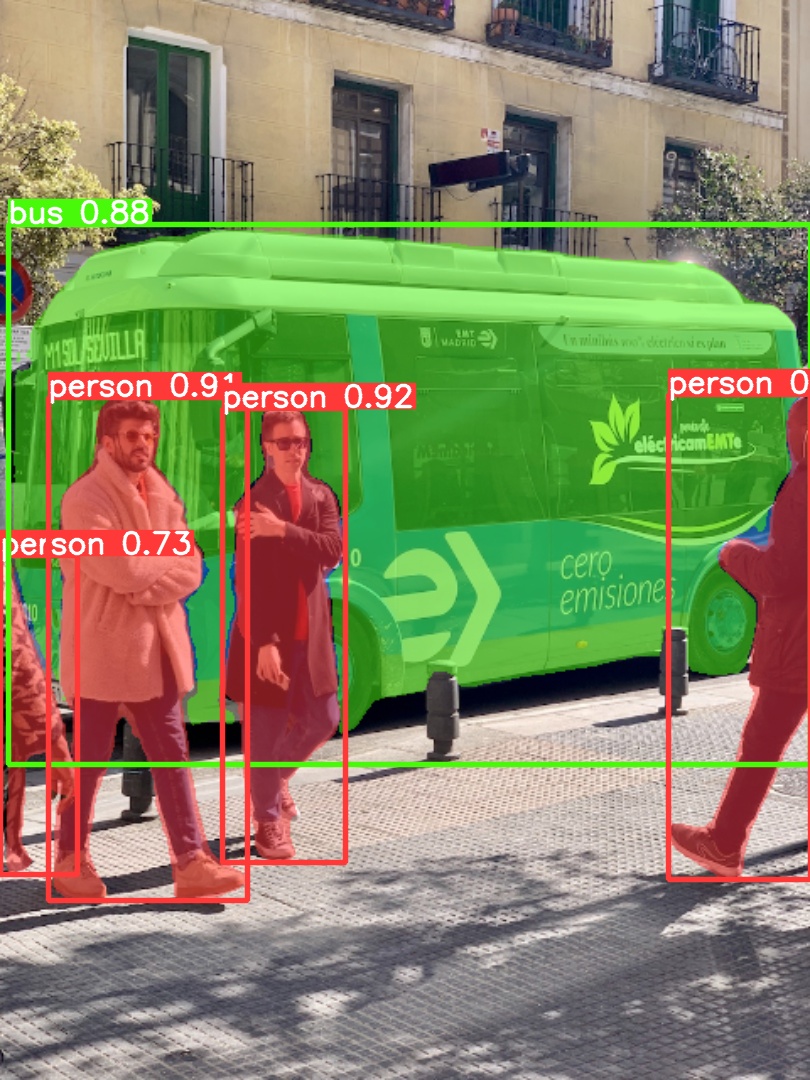

Ultralytics YOLOv5 🚀 is a cutting-edge, state-of-the-art (SOTA) computer vision model developed by [Ultralytics](https://www.ultralytics.com/). Based on the [PyTorch](https://pytorch.org/) framework, YOLOv5 is renowned for its ease of use, speed, and accuracy. It incorporates insights and best practices from extensive research and development, making it a popular choice for a wide range of vision AI tasks, including [object detection](https://docs.ultralytics.com/tasks/detect/), [image segmentation](https://docs.ultralytics.com/tasks/segment/), and [image classification](https://docs.ultralytics.com/tasks/classify/).

|

||||

|

||||

We hope that the resources here will help you get the most out of YOLOv5. Please browse the YOLOv5 <a href="https://docs.ultralytics.com/yolov5/">Docs</a> for details, raise an issue on <a href="https://github.com/ultralytics/yolov5/issues/new/choose">GitHub</a> for support, and join our <a href="https://discord.com/invite/ultralytics">Discord</a> community for questions and discussions!

|

||||

We hope the resources here help you get the most out of YOLOv5. Please browse the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5/) for detailed information, raise an issue on [GitHub](https://github.com/ultralytics/yolov5/issues/new/choose) for support, and join our [Discord community](https://discord.com/invite/ultralytics) for questions and discussions!

|

||||

|

||||

To request an Enterprise License please complete the form at [Ultralytics Licensing](https://www.ultralytics.com/license).

|

||||

To request an Enterprise License, please complete the form at [Ultralytics Licensing](https://www.ultralytics.com/license).

|

||||

|

||||

<div align="center">

|

||||

<a href="https://github.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="2%" alt="Ultralytics GitHub"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="space">

|

||||

<a href="https://www.linkedin.com/company/ultralytics/"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="2%" alt="Ultralytics LinkedIn"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="space">

|

||||

<a href="https://twitter.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="2%" alt="Ultralytics Twitter"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="space">

|

||||

<a href="https://youtube.com/ultralytics?sub_confirmation=1"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="2%" alt="Ultralytics YouTube"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="space">

|

||||

<a href="https://www.tiktok.com/@ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="2%" alt="Ultralytics TikTok"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="space">

|

||||

<a href="https://ultralytics.com/bilibili"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-bilibili.png" width="2%" alt="Ultralytics BiliBili"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="space">

|

||||

<a href="https://discord.com/invite/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-discord.png" width="2%" alt="Ultralytics Discord"></a>

|

||||

</div>

|

||||

|

||||

</div>

|

||||

<br>

|

||||

|

||||

## <div align="center">YOLO11 🚀 NEW</div>

|

||||

## 🚀 YOLO11: The Next Evolution

|

||||

|

||||

We are excited to unveil the launch of Ultralytics YOLO11 🚀, the latest advancement in our state-of-the-art (SOTA) vision models! Available now at **[GitHub](https://github.com/ultralytics/ultralytics)**, YOLO11 builds on our legacy of speed, precision, and ease of use. Whether you're tackling object detection, image segmentation, or image classification, YOLO11 delivers the performance and versatility needed to excel in diverse applications.

|

||||

We are excited to announce the launch of **Ultralytics YOLO11** 🚀, the latest advancement in our state-of-the-art (SOTA) vision models! Available now at the [Ultralytics YOLO GitHub repository](https://github.com/ultralytics/ultralytics), YOLO11 builds on our legacy of speed, precision, and ease of use. Whether you're tackling [object detection](https://docs.ultralytics.com/tasks/detect/), [instance segmentation](https://docs.ultralytics.com/tasks/segment/), [pose estimation](https://docs.ultralytics.com/tasks/pose/), [image classification](https://docs.ultralytics.com/tasks/classify/), or [oriented object detection (OBB)](https://docs.ultralytics.com/tasks/obb/), YOLO11 delivers the performance and versatility needed to excel in diverse applications.

|

||||

|

||||

Get started today and unlock the full potential of YOLO11! Visit the [Ultralytics Docs](https://docs.ultralytics.com/) for comprehensive guides and resources:

|

||||

|

||||

[PyPI version](https://badge.fury.io/py/ultralytics) [Downloads](https://www.pepy.tech/projects/ultralytics)

|

||||

|

||||

```bash
# Install the ultralytics package
pip install ultralytics
```

|

||||

|

||||

<div align="center">

|

||||

<a href="https://www.ultralytics.com/yolo" target="_blank">

|

||||

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/refs/heads/main/yolo/performance-comparison.png"></a>

|

||||

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/refs/heads/main/yolo/performance-comparison.png" alt="Ultralytics YOLO Performance Comparison"></a>

|

||||

</div>

|

||||

|

||||

## <div align="center">Documentation</div>

|

||||

## 📚 Documentation

|

||||

|

||||

See the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5/) for full documentation on training, testing and deployment. See below for quickstart examples.

|

||||

See the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5/) for full documentation on training, testing, and deployment. See below for quickstart examples.

|

||||

|

||||

<details open>

|

||||

<summary>Install</summary>

|

||||

|

||||

Clone repo and install [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a [**Python>=3.8.0**](https://www.python.org/) environment, including [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/).

|

||||

Clone the repository and install dependencies in a [**Python>=3.8.0**](https://www.python.org/) environment. Ensure you have [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/) installed.

|

||||

|

||||

```bash

|

||||

git clone https://github.com/ultralytics/yolov5 # clone

|

||||

# Clone the YOLOv5 repository

|

||||

git clone https://github.com/ultralytics/yolov5

|

||||

|

||||

# Navigate to the cloned directory

|

||||

cd yolov5

|

||||

pip install -r requirements.txt # install

|

||||

|

||||

# Install required packages

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

<details open>

|

||||

<summary>Inference</summary>

|

||||

<summary>Inference with PyTorch Hub</summary>

|

||||

|

||||

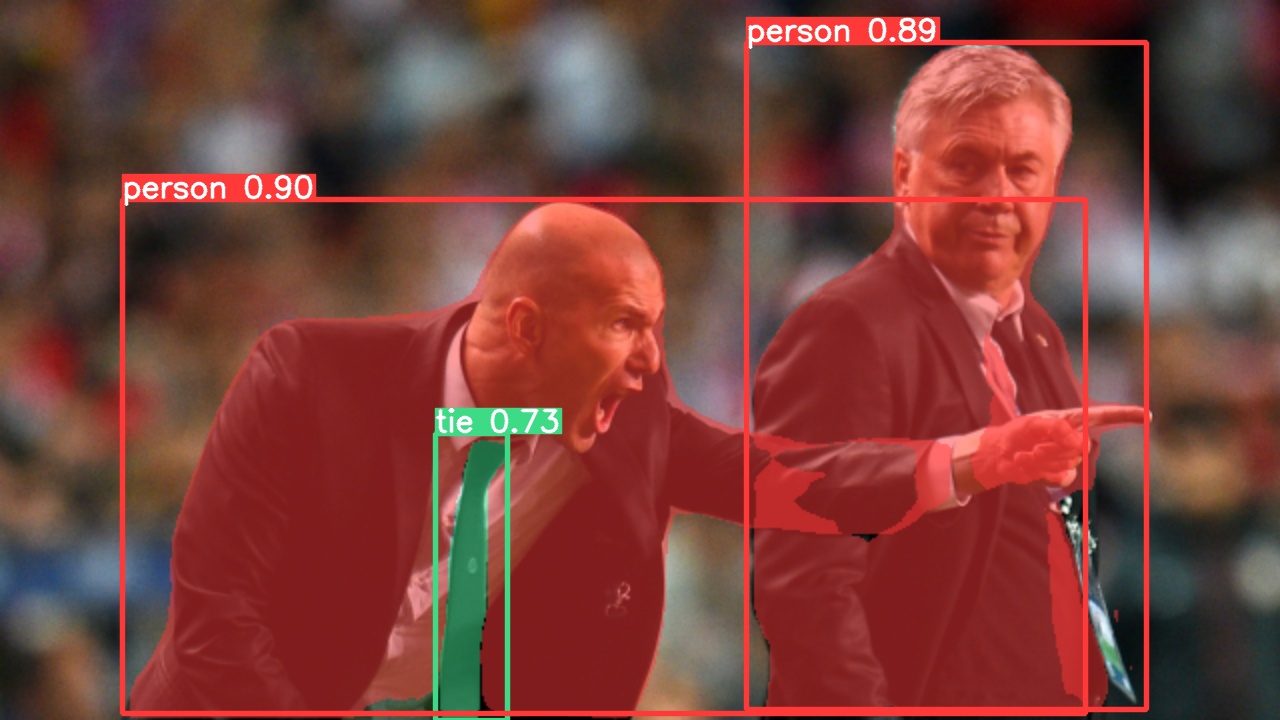

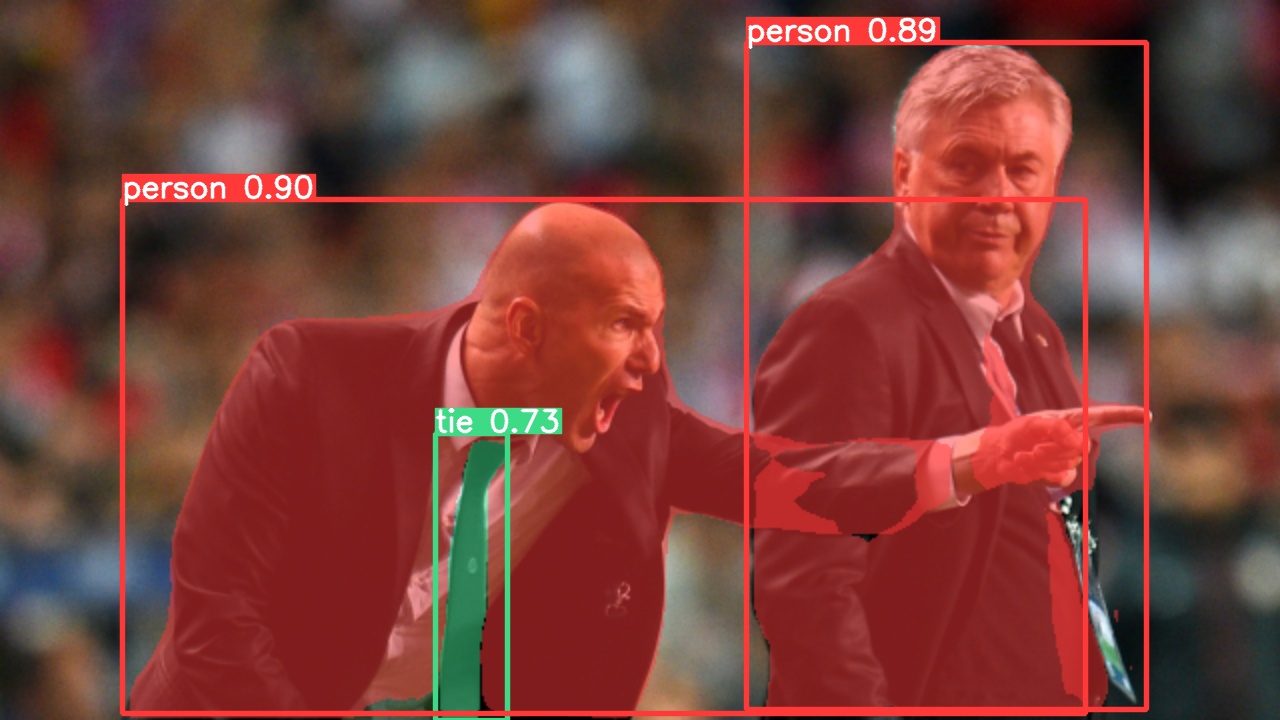

YOLOv5 [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

|

||||

Use YOLOv5 via [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) for inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) are automatically downloaded from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

|

||||

|

||||

```python

|

||||

import torch

|

||||

|

||||

# Load YOLOv5 model (options: yolov5n, yolov5s, yolov5m, yolov5l, yolov5x)

|

||||

model = torch.hub.load("ultralytics/yolov5", "yolov5s")

|

||||

# Load a YOLOv5 model (options: yolov5n, yolov5s, yolov5m, yolov5l, yolov5x)

|

||||

model = torch.hub.load("ultralytics/yolov5", "yolov5s") # Default: yolov5s

|

||||

|

||||

# Input source (URL, file, PIL, OpenCV, numpy array, or list)

|

||||

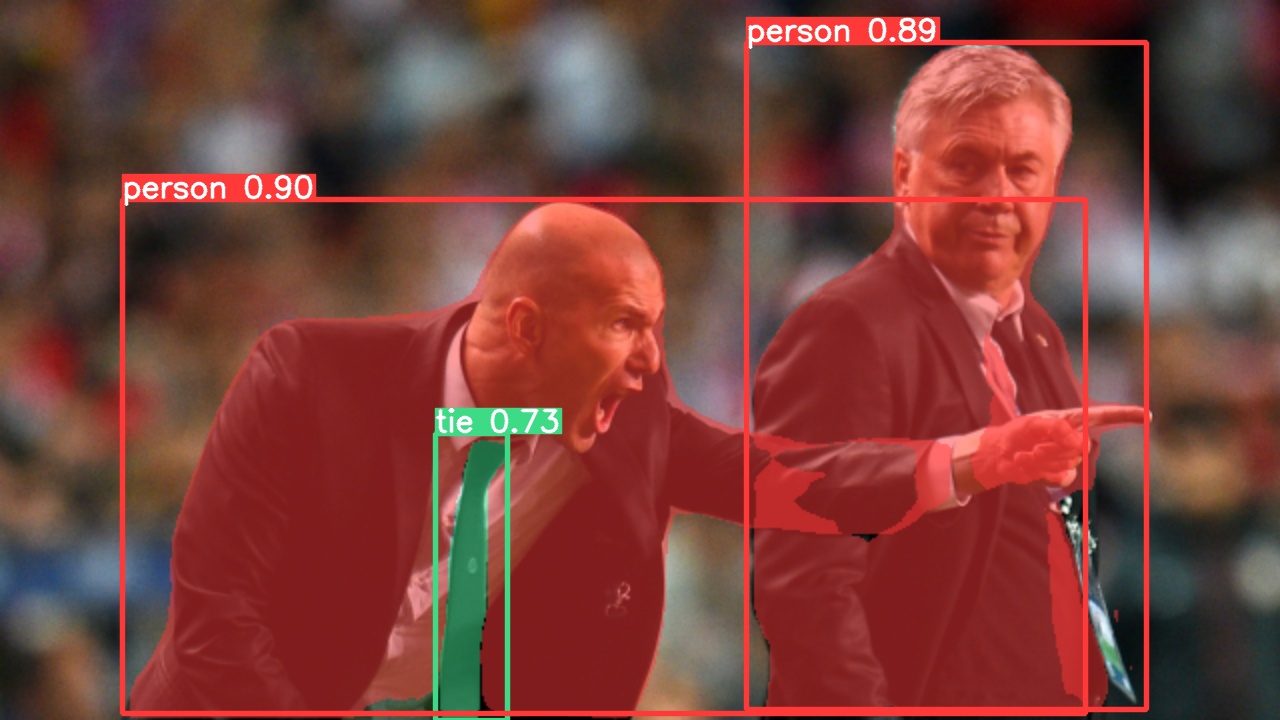

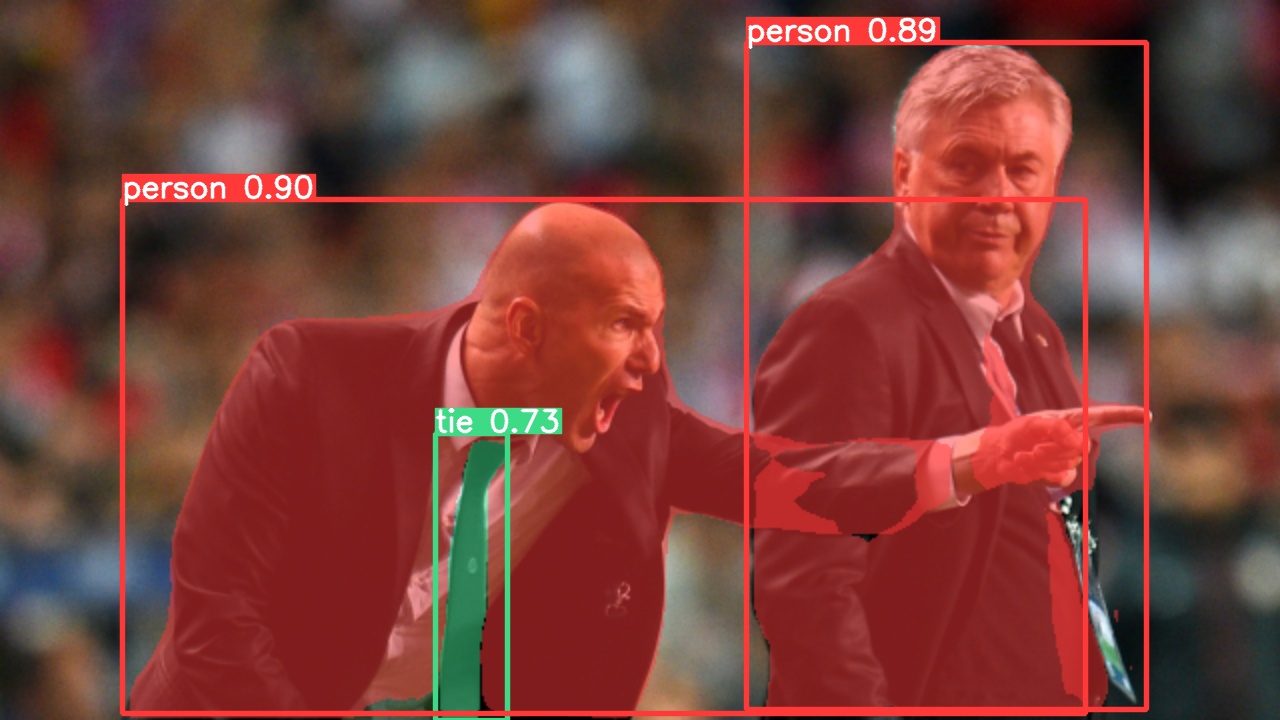

img = "https://ultralytics.com/images/zidane.jpg"

|

||||

# Define the input image source (URL, local file, PIL image, OpenCV frame, numpy array, or list)

|

||||

img = "https://ultralytics.com/images/zidane.jpg" # Example image

|

||||

|

||||

# Perform inference (handles batching, resizing, normalization)

|

||||

# Perform inference (handles batching, resizing, normalization automatically)

|

||||

results = model(img)

|

||||

|

||||

# Process results (options: .print(), .show(), .save(), .crop(), .pandas())

|

||||

results.print()

|

||||

# Process the results (options: .print(), .show(), .save(), .crop(), .pandas())

|

||||

results.print() # Print results to console

|

||||

results.show() # Display results in a window

|

||||

results.save() # Save results to runs/detect/exp

|

||||

```

|

||||

|

||||

</details>
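The result-processing options listed above include `.pandas()`, which exposes detections as a table. A minimal sketch of post-filtering such a table is shown below. It assumes the detections have been converted to a list of dictionaries (for example via `results.pandas().xyxy[0].to_dict("records")`) with `confidence` and `name` keys; the helper `filter_detections` and the sample rows are hypothetical and are not real model output.

```python
def filter_detections(rows, min_confidence=0.5, names=None):
    """Keep detections above a confidence threshold, optionally limited to given class names."""
    kept = []
    for row in rows:
        if row["confidence"] < min_confidence:
            continue  # drop low-confidence detections
        if names is not None and row["name"] not in names:
            continue  # drop classes the caller is not interested in
        kept.append(row)
    return kept


# Hypothetical rows for illustration only (not actual YOLOv5 output).
# With a loaded model: rows = results.pandas().xyxy[0].to_dict("records")
sample_rows = [
    {"xmin": 100.0, "ymin": 50.0, "xmax": 400.0, "ymax": 700.0, "confidence": 0.90, "class": 0, "name": "person"},
    {"xmin": 420.0, "ymin": 430.0, "xmax": 500.0, "ymax": 710.0, "confidence": 0.70, "class": 27, "name": "tie"},
    {"xmin": 600.0, "ymin": 60.0, "xmax": 900.0, "ymax": 710.0, "confidence": 0.60, "class": 0, "name": "person"},
    {"xmin": 950.0, "ymin": 300.0, "xmax": 1000.0, "ymax": 400.0, "confidence": 0.20, "class": 27, "name": "tie"},
]

confident = filter_detections(sample_rows, min_confidence=0.5)
people = filter_detections(sample_rows, min_confidence=0.5, names={"person"})
print(len(confident), len(people))
```

Keeping the filter independent of `torch` makes it easy to unit-test separately from model loading.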

|

||||

|

|

@@ -103,19 +111,38 @@ results.print()

|

|||

<details>

|

||||

<summary>Inference with detect.py</summary>

|

||||

|

||||

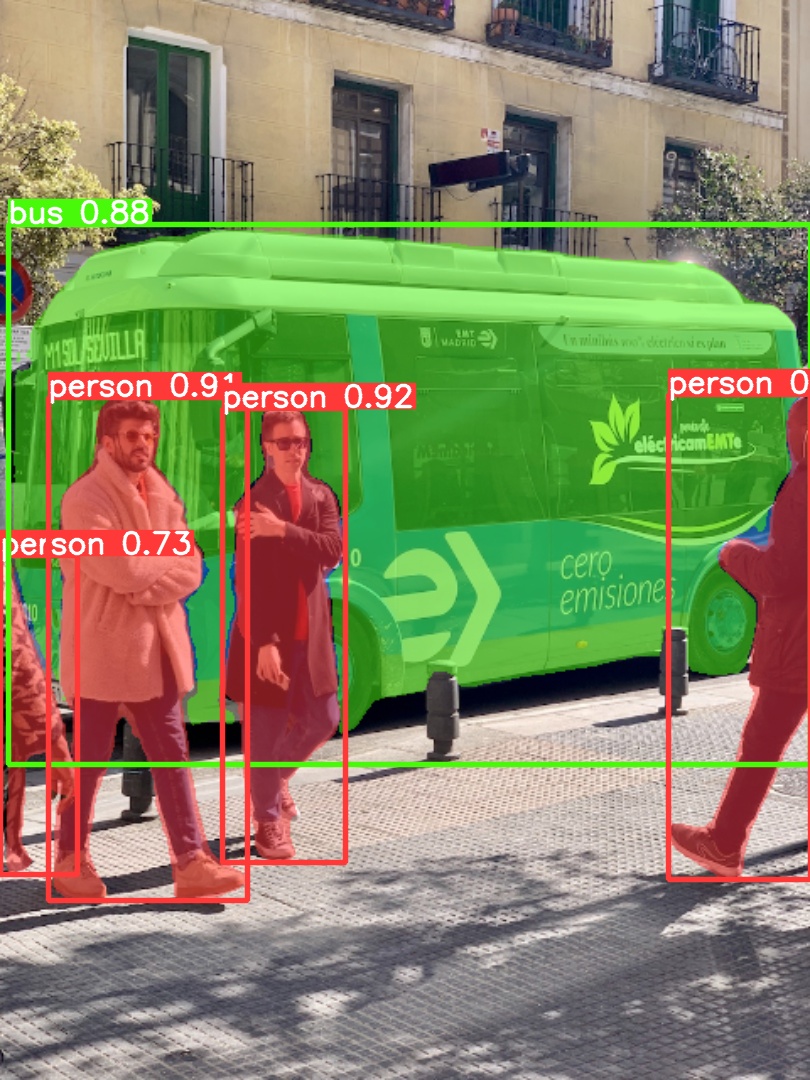

`detect.py` runs inference on a variety of sources, downloading [models](https://github.com/ultralytics/yolov5/tree/master/models) automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

|

||||

The `detect.py` script runs inference on various sources. It automatically downloads [models](https://github.com/ultralytics/yolov5/tree/master/models) from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saves the results to the `runs/detect` directory.

|

||||

|

||||

```bash

|

||||

python detect.py --weights yolov5s.pt --source 0 # webcam

|

||||

python detect.py --weights yolov5s.pt --source img.jpg # image

|

||||

python detect.py --weights yolov5s.pt --source vid.mp4 # video

|

||||

python detect.py --weights yolov5s.pt --source screen # screenshot

|

||||

python detect.py --weights yolov5s.pt --source path/ # directory

|

||||

python detect.py --weights yolov5s.pt --source list.txt # list of images

|

||||

python detect.py --weights yolov5s.pt --source list.streams # list of streams

|

||||

python detect.py --weights yolov5s.pt --source 'path/*.jpg' # glob

|

||||

python detect.py --weights yolov5s.pt --source 'https://youtu.be/LNwODJXcvt4' # YouTube

|

||||

python detect.py --weights yolov5s.pt --source 'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream

|

||||

# Run inference using a webcam

|

||||

python detect.py --weights yolov5s.pt --source 0

|

||||

|

||||

# Run inference on a local image file

|

||||

python detect.py --weights yolov5s.pt --source img.jpg

|

||||

|

||||

# Run inference on a local video file

|

||||

python detect.py --weights yolov5s.pt --source vid.mp4

|

||||

|

||||

# Run inference on a screen capture

|

||||

python detect.py --weights yolov5s.pt --source screen

|

||||

|

||||

# Run inference on a directory of images

|

||||

python detect.py --weights yolov5s.pt --source path/to/images/

|

||||

|

||||

# Run inference on a text file listing image paths

|

||||

python detect.py --weights yolov5s.pt --source list.txt

|

||||

|

||||

# Run inference on a text file listing stream URLs

|

||||

python detect.py --weights yolov5s.pt --source list.streams

|

||||

|

||||

# Run inference using a glob pattern for images

|

||||

python detect.py --weights yolov5s.pt --source 'path/to/*.jpg'

|

||||

|

||||

# Run inference on a YouTube video URL

|

||||

python detect.py --weights yolov5s.pt --source 'https://youtu.be/LNwODJXcvt4'

|

||||

|

||||

# Run inference on an RTSP, RTMP, or HTTP stream

|

||||

python detect.py --weights yolov5s.pt --source 'rtsp://example.com/media.mp4'

|

||||

```

|

||||

|

||||

</details>
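When several sources need to be processed in sequence, the `detect.py` commands above can be generated programmatically. The sketch below only assembles argument lists from the `--weights` and `--source` flags shown in the README; the helper name `build_detect_command` is hypothetical, and actually running the commands (for example with `subprocess.run`) assumes the current directory is the cloned `yolov5` repository.

```python
import shlex


def build_detect_command(source, weights="yolov5s.pt"):
    """Build the argument list for one detect.py run (hypothetical helper)."""
    return ["python", "detect.py", "--weights", weights, "--source", str(source)]


# Sources taken from the README examples: webcam index, image file, glob pattern
sources = [0, "img.jpg", "path/to/*.jpg"]

for source in sources:
    command = build_detect_command(source)
    # shlex.join produces a shell-safe string, quoting the glob pattern as in the README
    print(shlex.join(command))
    # To execute from inside the yolov5 directory (not run here):
    # subprocess.run(command, check=True)
```

Passing the command as a list avoids shell expansion, so a glob pattern reaches `detect.py` unexpanded, matching the quoted form used in the README.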

|

||||

|

|

@@ -123,49 +150,58 @@ python detect.py --weights yolov5s.pt --source 'rtsp://example.com/media.mp4' #

|

|||

<details>

|

||||

<summary>Training</summary>

|

||||

|

||||

The commands below reproduce YOLOv5 [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh) results. [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are 1/2/4/6/8 days on a V100 GPU ([Multi-GPU](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/) times faster). Use the largest `--batch-size` possible, or pass `--batch-size -1` for YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). Batch sizes shown for V100-16GB.

|

||||

The commands below demonstrate how to reproduce YOLOv5 [COCO dataset](https://docs.ultralytics.com/datasets/detect/coco/) results. Both [models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) are downloaded automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are approximately 1/2/4/6/8 days on a single [NVIDIA V100 GPU](https://www.nvidia.com/en-us/data-center/v100/). Using [Multi-GPU training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/) can significantly reduce training time. Use the largest `--batch-size` your hardware allows, or use `--batch-size -1` for YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). The batch sizes shown below are for V100-16GB GPUs.

|

||||

|

||||

```bash
# Train YOLOv5n on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128

# Train YOLOv5s on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5s.yaml --batch-size 64

# Train YOLOv5m on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5m.yaml --batch-size 40

# Train YOLOv5l on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5l.yaml --batch-size 24

# Train YOLOv5x on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --batch-size 16
```

|

||||

|

||||

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png">

|

||||

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png" alt="YOLOv5 Training Results">

|

||||

|

||||

</details>
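The five training commands differ only in the model configuration and the batch size. A small sketch that captures this mapping is given below; the batch sizes are the V100-16GB values from the commands above, while the names `BATCH_SIZES` and `build_train_command` are hypothetical. Passing `batch_size=-1` corresponds to the AutoBatch option mentioned in the text.

```python
# Batch sizes quoted in the README for a V100-16GB GPU
BATCH_SIZES = {"yolov5n": 128, "yolov5s": 64, "yolov5m": 40, "yolov5l": 24, "yolov5x": 16}


def build_train_command(model, epochs=300, data="coco.yaml", batch_size=None):
    """Build the train.py argument list for a from-scratch COCO run (hypothetical helper)."""
    if batch_size is None:
        batch_size = BATCH_SIZES[model]  # fall back to the README value for this model
    return [
        "python", "train.py",
        "--data", data,
        "--epochs", str(epochs),
        "--weights", "",  # empty weights string, as in the README commands
        "--cfg", f"{model}.yaml",
        "--batch-size", str(batch_size),
    ]


for model in BATCH_SIZES:
    print(build_train_command(model))

# AutoBatch variant: let YOLOv5 choose the batch size
print(build_train_command("yolov5s", batch_size=-1))
```

On hardware with less memory than a V100-16GB, the tabulated batch sizes are likely too large, so the AutoBatch variant or a smaller explicit value is the safer choice.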

|

||||

|

||||

<details open>

|

||||

<summary>Tutorials</summary>

|

||||

|

||||

- [Train Custom Data](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data/) 🚀 RECOMMENDED

|

||||

- [Tips for Best Training Results](https://docs.ultralytics.com/guides/model-training-tips/) ☘️

|

||||

- [Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)

|

||||

- [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) 🌟 NEW

|

||||

- [TFLite, ONNX, CoreML, TensorRT Export](https://docs.ultralytics.com/yolov5/tutorials/model_export/) 🚀

|

||||

- [NVIDIA Jetson platform Deployment](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano/) 🌟 NEW

|

||||

- [Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)

|

||||

- [Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)

|

||||

- [Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)

|

||||

- [Hyperparameter Evolution](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution/)

|

||||

- [Transfer Learning with Frozen Layers](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers/)

|

||||

- [Architecture Summary](https://docs.ultralytics.com/yolov5/tutorials/architecture_description/) 🌟 NEW

|

||||

- [Ultralytics HUB to train and deploy YOLO](https://www.ultralytics.com/hub) 🚀 RECOMMENDED

|

||||

- [ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)

|

||||

- [YOLOv5 with Neural Magic's Deepsparse](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)

|

||||

- [Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/) 🌟 NEW

|

||||

- **[Train Custom Data](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data/)** 🚀 **RECOMMENDED**: Learn how to train YOLOv5 on your own datasets.

|

||||

- **[Tips for Best Training Results](https://docs.ultralytics.com/guides/model-training-tips/)** ☘️: Improve your model's performance with expert tips.

|

||||

- **[Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)**: Speed up training using multiple GPUs.

|

||||

- **[PyTorch Hub Integration](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/)** 🌟 **NEW**: Easily load models using PyTorch Hub.

|

||||

- **[Model Export (TFLite, ONNX, CoreML, TensorRT)](https://docs.ultralytics.com/yolov5/tutorials/model_export/)** 🚀: Convert your models to various deployment formats like [ONNX](https://onnx.ai/) or [TensorRT](https://developer.nvidia.com/tensorrt).

|

||||

- **[NVIDIA Jetson Deployment](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano/)** 🌟 **NEW**: Deploy YOLOv5 on [NVIDIA Jetson](https://developer.nvidia.com/embedded-computing) devices.

|

||||

- **[Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)**: Enhance prediction accuracy with TTA.

|

||||

- **[Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)**: Combine multiple models for better performance.

|

||||

- **[Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)**: Optimize models for size and speed.

|

||||

- **[Hyperparameter Evolution](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution/)**: Automatically find the best training hyperparameters.

|

||||

- **[Transfer Learning with Frozen Layers](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers/)**: Adapt pretrained models to new tasks efficiently using [transfer learning](https://www.ultralytics.com/glossary/transfer-learning).

|

||||

- **[Architecture Summary](https://docs.ultralytics.com/yolov5/tutorials/architecture_description/)** 🌟 **NEW**: Understand the YOLOv5 model architecture.

|

||||

- **[Ultralytics HUB Training](https://www.ultralytics.com/hub)** 🚀 **RECOMMENDED**: Train and deploy YOLO models using Ultralytics HUB.

|

||||

- **[ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)**: Integrate with [ClearML](https://clear.ml/) for experiment tracking.

|

||||

- **[Neural Magic DeepSparse Integration](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)**: Accelerate inference with DeepSparse.

|

||||

- **[Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **NEW**: Log experiments using [Comet ML](https://www.comet.com/).

|

||||

|

||||

</details>

|

||||

|

||||

## <div align="center">Integrations</div>

|

||||

## 🧩 Integrations

|

||||

|

||||

Our key integrations with leading AI platforms extend the functionality of Ultralytics' offerings, enhancing tasks like dataset labeling, training, visualization, and model management. Discover how Ultralytics, in collaboration with [W&B](https://docs.wandb.ai/guides/integrations/ultralytics/), [Comet](https://bit.ly/yolov8-readme-comet), [Roboflow](https://roboflow.com/?ref=ultralytics) and [OpenVINO](https://docs.ultralytics.com/integrations/openvino/), can optimize your AI workflow.

|

||||

Our key integrations with leading AI platforms extend the functionality of Ultralytics' offerings, enhancing tasks like dataset labeling, training, visualization, and model management. Discover how Ultralytics, in collaboration with partners like [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases/), [Comet ML](https://docs.ultralytics.com/integrations/comet/), [Roboflow](https://docs.ultralytics.com/integrations/roboflow/), and [Intel OpenVINO](https://docs.ultralytics.com/integrations/openvino/), can optimize your AI workflow. Explore more at [Ultralytics Integrations](https://docs.ultralytics.com/integrations/).

|

||||

|

||||

<br>

|

||||

<a href="https://www.ultralytics.com/hub" target="_blank">

|

||||

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png" alt="Ultralytics active learning integrations"></a>

|

||||

<a href="https://docs.ultralytics.com/integrations/" target="_blank">

|

||||

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png" alt="Ultralytics active learning integrations">

|

||||

</a>

|

||||

<br>

|

||||

<br>

|

||||

|

||||

|

|

@@ -173,88 +209,90 @@ Our key integrations with leading AI platforms extend the functionality of Ultra

|

|||

<a href="https://www.ultralytics.com/hub">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-ultralytics-hub.png" width="10%" alt="Ultralytics HUB logo"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="space">

|

||||

<a href="https://docs.wandb.ai/guides/integrations/ultralytics/">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-wb.png" width="10%" alt="ClearML logo"></a>

|

||||

<a href="https://docs.ultralytics.com/integrations/weights-biases/">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-wb.png" width="10%" alt="Weights & Biases logo"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="space">

|

||||

<a href="https://bit.ly/yolov8-readme-comet">

|

||||

<a href="https://docs.ultralytics.com/integrations/comet/">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-comet.png" width="10%" alt="Comet ML logo"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="space">

|

||||

<a href="https://bit.ly/yolov5-neuralmagic">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" alt="NeuralMagic logo"></a>

|

||||

<a href="https://docs.ultralytics.com/integrations/neural-magic/">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" alt="Neural Magic logo"></a>

|

||||

</div>

|

||||

|

||||

| Ultralytics HUB 🚀 | W&B | Comet ⭐ NEW | Neural Magic |

|

||||

| :--------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------: |

|

||||

| Streamline YOLO workflows: Label, train, and deploy effortlessly with [Ultralytics HUB](https://www.ultralytics.com/hub). Try now! | Track experiments, hyperparameters, and results with [Weights & Biases](https://docs.wandb.ai/guides/integrations/ultralytics/) | Free forever, [Comet](https://bit.ly/yolov5-readme-comet) lets you save YOLOv5 models, resume training, and interactively visualize and debug predictions | Run YOLO11 inference up to 6x faster with [Neural Magic DeepSparse](https://bit.ly/yolov5-neuralmagic) |

|

||||

| Ultralytics HUB 🌟 | Weights & Biases | Comet | Neural Magic |

|

||||

| :----------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: |

|

||||

| Streamline YOLO workflows: Label, train, and deploy effortlessly with [Ultralytics HUB](https://hub.ultralytics.com). Try now! | Track experiments, hyperparameters, and results with [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases/). | Free forever, [Comet ML](https://docs.ultralytics.com/integrations/comet/) lets you save YOLO models, resume training, and interactively visualize predictions. | Run YOLO inference up to 6x faster with [Neural Magic DeepSparse](https://docs.ultralytics.com/integrations/neural-magic/). |

|

||||

|

||||

## <div align="center">Ultralytics HUB</div>

|

||||

## ⭐ Ultralytics HUB

|

||||

|

||||

Experience seamless AI with [Ultralytics HUB](https://www.ultralytics.com/hub) ⭐, the all-in-one solution for data visualization, YOLOv5 and YOLOv8 🚀 model training and deployment, without any coding. Transform images into actionable insights and bring your AI visions to life with ease using our cutting-edge platform and user-friendly [Ultralytics App](https://www.ultralytics.com/app-install). Start your journey for **Free** now!

|

||||

Experience seamless AI development with [Ultralytics HUB](https://www.ultralytics.com/hub) ⭐, the ultimate platform for building, training, and deploying [computer vision](https://www.ultralytics.com/glossary/computer-vision-cv) models. Visualize datasets, train [YOLOv5](https://docs.ultralytics.com/models/yolov5/) and [YOLOv8](https://docs.ultralytics.com/models/yolov8/) 🚀 models, and deploy them to real-world applications without writing any code. Transform images into actionable insights using our cutting-edge tools and user-friendly [Ultralytics App](https://www.ultralytics.com/app-install). Start your journey for **Free** today!

|

||||

|

||||

<a align="center" href="https://www.ultralytics.com/hub" target="_blank">

|

||||

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png"></a>

|

||||

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png" alt="Ultralytics HUB Platform Screenshot"></a>

|

||||

|

||||

## <div align="center">Why YOLOv5</div>

|

||||

## 🤔 Why YOLOv5?

|

||||

|

||||

YOLOv5 has been designed to be super easy to get started and simple to learn. We prioritize real-world results.

|

||||

YOLOv5 is designed for simplicity and ease of use. We prioritize real-world performance and accessibility.

|

||||

|

||||

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040763-93c22a27-347c-4e3c-847a-8094621d3f4e.png"></p>

|

||||

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040763-93c22a27-347c-4e3c-847a-8094621d3f4e.png" alt="YOLOv5 Performance Chart"></p>

|

||||

<details>

|

||||

<summary>YOLOv5-P5 640 Figure</summary>

|

||||

|

||||

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040757-ce0934a3-06a6-43dc-a979-2edbbd69ea0e.png"></p>

|

||||

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040757-ce0934a3-06a6-43dc-a979-2edbbd69ea0e.png" alt="YOLOv5 P5 640 Performance Chart"></p>

|

||||

</details>

|

||||

<details>

|

||||

<summary>Figure Notes</summary>

|

||||

|

||||

- **COCO AP val** denotes mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset over various inference sizes from 256 to 1536.

|

||||

- **GPU Speed** measures average inference time per image on [COCO val2017](http://cocodataset.org) dataset using a [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p4/) V100 instance at batch-size 32.

|

||||

- **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.

|

||||

- **Reproduce** by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

|

||||

- **COCO AP val** denotes the [mean Average Precision (mAP)](https://www.ultralytics.com/glossary/mean-average-precision-map) at [Intersection over Union (IoU)](https://www.ultralytics.com/glossary/intersection-over-union-iou) thresholds from 0.5 to 0.95, measured on the 5,000-image [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) across various inference sizes (256 to 1536 pixels).

|

||||

- **GPU Speed** measures the average inference time per image on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p3/) with a batch size of 32.

|

||||

- **EfficientDet** data is sourced from the [google/automl repository](https://github.com/google/automl) at batch size 8.

|

||||

- **Reproduce** these results using the command: `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`
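
To make the **COCO AP val** note more concrete, here is a minimal sketch of the box IoU calculation and the ten IoU thresholds (0.50 to 0.95 in steps of 0.05) that mAP@0.5:0.95 averages over. The function names `box_iou` and `thresholds_passed` are hypothetical helpers written for this illustration and are not part of `val.py`; boxes are assumed to be in `(x1, y1, x2, y2)` pixel format.

```python
def box_iou(a, b):
    """Return the IoU of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp to zero when the boxes do not overlap
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds averaged by mAP@0.5:0.95: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, truth):
    """List the IoU thresholds at which `pred` counts as a match for `truth`."""
    iou = box_iou(pred, truth)
    return [t for t in IOU_THRESHOLDS if iou >= t]


if __name__ == "__main__":
    # A prediction that covers the top 80% of the ground-truth box
    print(thresholds_passed((0, 0, 10, 8), (0, 0, 10, 10)))
```

A prediction counts as a true positive at a given threshold only if its IoU with a ground-truth box reaches that threshold, so a loosely fitting box contributes to AP at 0.5 but not at 0.95.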

|

||||

|

||||

</details>

|

||||

|

||||

### Pretrained Checkpoints

|

||||

|

||||

| Model | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | mAP<sup>val<br>50 | Speed<br><sup>CPU b1<br>(ms) | Speed<br><sup>V100 b1<br>(ms) | Speed<br><sup>V100 b32<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@640 (B) |

|

||||

| ----------------------------------------------------------------------------------------------- | --------------------- | -------------------- | ----------------- | ---------------------------- | ----------------------------- | ------------------------------ | ------------------ | ---------------------- |

|

||||

| [YOLOv5n](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n.pt) | 640 | 28.0 | 45.7 | **45** | **6.3** | **0.6** | **1.9** | **4.5** |

|

||||

| [YOLOv5s](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.pt) | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |

|

||||

| [YOLOv5m](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m.pt) | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |

|

||||

| [YOLOv5l](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l.pt) | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |

|

||||

| [YOLOv5x](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x.pt) | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |

|

||||

| | | | | | | | | |

|

||||

| [YOLOv5n6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n6.pt) | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |

|

||||

| [YOLOv5s6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s6.pt) | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |

|

||||

| [YOLOv5m6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m6.pt) | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |

|

||||

| [YOLOv5l6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l6.pt) | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |

|

||||

| [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x6.pt)<br>+ [TTA] | 1280<br>1536 | 55.0<br>**55.8** | 72.7<br>**72.7** | 3136<br>- | 26.2<br>- | 19.4<br>- | 140.7<br>- | 209.8<br>- |

|

||||

This table shows the performance metrics for various YOLOv5 models trained on the COCO dataset.

|

||||

|

||||

| Model | Size<br><sup>(pixels) | mAP<sup>val<br>50-95 | mAP<sup>val<br>50 | Speed<br><sup>CPU b1<br>(ms) | Speed<br><sup>V100 b1<br>(ms) | Speed<br><sup>V100 b32<br>(ms) | Params<br><sup>(M) | FLOPs<br><sup>@640 (B) |

|

||||

| ------------------------------------------------------------------------------------------------------------------------------------------------------------------------ | --------------------- | -------------------- | ----------------- | ---------------------------- | ----------------------------- | ------------------------------ | ------------------ | ---------------------- |

|

||||

| [YOLOv5n](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n.pt) | 640 | 28.0 | 45.7 | **45** | **6.3** | **0.6** | **1.9** | **4.5** |

|

||||

| [YOLOv5s](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.pt) | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |

|

||||

| [YOLOv5m](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m.pt) | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |

|

||||

| [YOLOv5l](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l.pt) | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |

|

||||

| [YOLOv5x](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x.pt) | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |

|

||||

| | | | | | | | | |

|

||||

| [YOLOv5n6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n6.pt) | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |

|

||||

| [YOLOv5s6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s6.pt) | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |

|

||||

| [YOLOv5m6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m6.pt) | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |

|

||||

| [YOLOv5l6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l6.pt) | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |

|

||||

| [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x6.pt)<br>+ [[TTA]](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/) | 1280<br>1536 | 55.0<br>**55.8** | 72.7<br>**72.7** | 3136<br>- | 26.2<br>- | 19.4<br>- | 140.7<br>- | 209.8<br>- |

|

||||

|

||||

<details>

|

||||

<summary>Table Notes</summary>

|

||||

|

||||

- All checkpoints are trained to 300 epochs with default settings. Nano and Small models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyps, all others use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).

|

||||

- **mAP<sup>val</sup>** values are for single-model single-scale on [COCO val2017](http://cocodataset.org) dataset.<br>Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

|

||||

- **Speed** averaged over COCO val images using a [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p4/) instance. NMS times (~1 ms/img) not included.<br>Reproduce by `python val.py --data coco.yaml --img 640 --task speed --batch 1`

|

||||

- **TTA** [Test Time Augmentation](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/) includes reflection and scale augmentations.<br>Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

|

||||

- All checkpoints were trained for 300 epochs using default settings. Nano (n) and Small (s) models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyperparameters, while Medium (m), Large (l), and Extra-Large (x) models use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).

|

||||

- **mAP<sup>val</sup>** values represent single-model, single-scale performance on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/).<br>Reproduce using: `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

|

||||

- **Speed** metrics are averaged over COCO val images using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p3/). Non-Maximum Suppression (NMS) time (~1 ms/image) is not included.<br>Reproduce using: `python val.py --data coco.yaml --img 640 --task speed --batch 1`

|

||||

- **TTA** ([Test Time Augmentation](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)) includes reflection and scale augmentations for improved accuracy.<br>Reproduce using: `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`
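
As a rough illustration of the reflection and scale augmentations mentioned in the **TTA** note, the sketch below shows only the coordinate bookkeeping: predictions made on a mirrored or resized copy of an image have to be mapped back to the original frame before they can be merged. The helper names (`hflip_image`, `unflip_box`, `rescale_box`) are hypothetical and this is not the code behind `--augment`; images are assumed to be plain lists of pixel rows and boxes `(x1, y1, x2, y2)` tuples.

```python
def hflip_image(image):
    """Mirror an image stored as a list of rows (each row a list of pixels)."""
    return [row[::-1] for row in image]


def unflip_box(box, width):
    """Map a box predicted on the mirrored image back to original coordinates."""
    x1, y1, x2, y2 = box
    # Under a left-right flip, x maps to width - x, which swaps the two x edges
    return (width - x2, y1, width - x1, y2)


def rescale_box(box, scale):
    """Undo a scale augmentation: `box` was predicted on an image resized by `scale`."""
    return tuple(v / scale for v in box)


if __name__ == "__main__":
    print(hflip_image([[1, 2, 3], [4, 5, 6]]))
    print(unflip_box((10, 5, 30, 25), width=100))
    print(rescale_box((50, 50, 100, 100), scale=0.5))
```

After mapping back, the predictions from all augmented views are typically pooled and filtered together, which is where the accuracy gain at 1536 pixels in the table would come from.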

|

||||

|

||||

</details>

|

||||

|

||||

## <div align="center">Segmentation</div>

|

||||

## 🖼️ Segmentation

|

||||

|

||||

Our new YOLOv5 [release v7.0](https://github.com/ultralytics/yolov5/releases/v7.0) instance segmentation models are the fastest and most accurate in the world, beating all current [SOTA benchmarks](https://paperswithcode.com/sota/real-time-instance-segmentation-on-mscoco). We've made them super simple to train, validate and deploy. See full details in our [Release Notes](https://github.com/ultralytics/yolov5/releases/v7.0) and visit our [YOLOv5 Segmentation Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/segment/tutorial.ipynb) for quickstart tutorials.

|

||||

The YOLOv5 [release v7.0](https://github.com/ultralytics/yolov5/releases/v7.0) introduced [instance segmentation](https://docs.ultralytics.com/tasks/segment/) models that achieve state-of-the-art performance. These models are designed for easy training, validation, and deployment. For full details, see the [Release Notes](https://github.com/ultralytics/yolov5/releases/v7.0) and explore the [YOLOv5 Segmentation Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/segment/tutorial.ipynb) for quickstart examples.

|

||||

|

||||

<details>

|

||||

<summary>Segmentation Checkpoints</summary>

|

||||

|

||||

<div align="center">

|

||||

<a align="center" href="https://www.ultralytics.com/yolo" target="_blank">

|

||||

<img width="800" src="https://user-images.githubusercontent.com/61612323/204180385-84f3aca9-a5e9-43d8-a617-dda7ca12e54a.png"></a>

|

||||

<img width="800" src="https://user-images.githubusercontent.com/61612323/204180385-84f3aca9-a5e9-43d8-a617-dda7ca12e54a.png" alt="YOLOv5 Segmentation Performance Chart"></a>

|

||||

</div>

|

||||

|

||||

We trained YOLOv5 segmentations models on COCO for 300 epochs at image size 640 using A100 GPUs. We exported all models to ONNX FP32 for CPU speed tests and to TensorRT FP16 for GPU speed tests. We ran all speed tests on Google [Colab Pro](https://colab.research.google.com/signup) notebooks for easy reproducibility.

|

||||

YOLOv5 segmentation models were trained on the [COCO dataset](https://docs.ultralytics.com/datasets/segment/coco/) for 300 epochs at an image size of 640 pixels using A100 GPUs. Models were exported to [ONNX](https://onnx.ai/) FP32 for CPU speed tests and [TensorRT](https://developer.nvidia.com/tensorrt) FP16 for GPU speed tests. All speed tests were conducted on Google [Colab Pro](https://colab.research.google.com/signup) notebooks for reproducibility.

|

||||

|

||||

| Model | size<br><sup>(pixels) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | Train time<br><sup>300 epochs<br>A100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TRT A100<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@640 (B) |

|

||||

| Model | Size<br><sup>(pixels) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | Train Time<br><sup>300 epochs<br>A100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TRT A100<br>(ms) | Params<br><sup>(M) | FLOPs<br><sup>@640 (B) |

|

||||

| ------------------------------------------------------------------------------------------ | --------------------- | -------------------- | --------------------- | --------------------------------------------- | ------------------------------ | ------------------------------ | ------------------ | ---------------------- |

|

||||

| [YOLOv5n-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-seg.pt) | 640 | 27.6 | 23.4 | 80:17 | **62.7** | **1.2** | **2.0** | **7.1** |

|

||||

| [YOLOv5s-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-seg.pt) | 640 | 37.6 | 31.7 | 88:16 | 173.3 | 1.4 | 7.6 | 26.4 |

|

||||

|

|

@ -262,10 +300,10 @@ We trained YOLOv5 segmentations models on COCO for 300 epochs at image size 640

|

|||

| [YOLOv5l-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-seg.pt) | 640 | 49.0 | 39.9 | 66:43 (2x) | 857.4 | 2.9 | 47.9 | 147.7 |

|

||||

| [YOLOv5x-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-seg.pt) | 640 | **50.7** | **41.4** | 62:56 (3x) | 1579.2 | 4.5 | 88.8 | 265.7 |

|

||||

|

||||

- All checkpoints are trained to 300 epochs with SGD optimizer with `lr0=0.01` and `weight_decay=5e-5` at image size 640 and all default settings.<br>Runs logged to https://wandb.ai/glenn-jocher/YOLOv5_v70_official

|

||||

- **Accuracy** values are for single-model single-scale on COCO dataset.<br>Reproduce by `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt`

|

||||

- **Speed** averaged over 100 inference images using a [Colab Pro](https://colab.research.google.com/signup) A100 High-RAM instance. Values indicate inference speed only (NMS adds about 1ms per image). <br>Reproduce by `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt --batch 1`

|

||||

- **Export** to ONNX at FP32 and TensorRT at FP16 done with `export.py`. <br>Reproduce by `python export.py --weights yolov5s-seg.pt --include engine --device 0 --half`

|

||||

- All checkpoints were trained for 300 epochs using the SGD optimizer with `lr0=0.01` and `weight_decay=5e-5` at an image size of 640 pixels, using default settings.<br>Training runs are logged at [https://wandb.ai/glenn-jocher/YOLOv5_v70_official](https://wandb.ai/glenn-jocher/YOLOv5_v70_official).

|

||||

- **Accuracy** values represent single-model, single-scale performance on the COCO dataset.<br>Reproduce using: `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt`

|

||||

- **Speed** metrics are averaged over 100 inference images using a [Colab Pro A100 High-RAM instance](https://colab.research.google.com/signup). Values indicate inference speed only (NMS adds approximately 1ms per image).<br>Reproduce using: `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt --batch 1`

|

||||

- **Export** to ONNX (FP32) and TensorRT (FP16) was performed using `export.py`.<br>Reproduce using: `python export.py --weights yolov5s-seg.pt --include engine --device 0 --half`
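
The **Speed** note excludes Non-Maximum Suppression (NMS) from the reported timings. For readers unfamiliar with that step, the following is a minimal pure-Python sketch of greedy NMS. It is an illustration only, not the repository's implementation; the function names and the `iou_thres=0.45` default are assumptions made for this example.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thres=0.45):
    """Greedy NMS: return indices of the boxes to keep, highest score first."""
    # Visit candidates from most to least confident
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))
```

Because this step runs after the network forward pass, its cost depends on the number of candidate boxes rather than on the model, which is one reason to report it separately from inference time.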

|

||||

|

||||

</details>

|

||||

|

||||

|

|

@ -274,64 +312,68 @@ We trained YOLOv5 segmentations models on COCO for 300 epochs at image size 640

|

|||

|

||||

### Train

|

||||

|

||||

YOLOv5 segmentation training supports auto-download COCO128-seg segmentation dataset with `--data coco128-seg.yaml` argument and manual download of COCO-segments dataset with `bash data/scripts/get_coco.sh --train --val --segments` and then `python train.py --data coco.yaml`.

|

||||

YOLOv5 segmentation training supports automatic download of the [COCO128-seg dataset](https://docs.ultralytics.com/datasets/segment/coco8-seg/) via the `--data coco128-seg.yaml` argument. For the full [COCO-segments dataset](https://docs.ultralytics.com/datasets/segment/coco/), download it manually using `bash data/scripts/get_coco.sh --train --val --segments` and then train with `python train.py --data coco.yaml`.

|

||||

|

||||

```bash

|

||||

# Single-GPU

|

||||

# Train on a single GPU

|

||||

python segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640

|

||||

|

||||

# Multi-GPU DDP

|

||||

# Train using Multi-GPU Distributed Data Parallel (DDP)

|

||||

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640 --device 0,1,2,3

|

||||

```

|

||||

|

||||

### Val

|

||||

|

||||

Validate YOLOv5s-seg mask mAP on COCO dataset:

|

||||

Validate the mask [mean Average Precision (mAP)](https://www.ultralytics.com/glossary/mean-average-precision-map) of YOLOv5s-seg on the COCO dataset:

|

||||

|

||||

```bash

|

||||

bash data/scripts/get_coco.sh --val --segments # download COCO val segments split (780MB, 5000 images)

|

||||

python segment/val.py --weights yolov5s-seg.pt --data coco.yaml --img 640 # validate

|

||||

# Download COCO validation segments split (780MB, 5000 images)

|

||||

bash data/scripts/get_coco.sh --val --segments

|

||||

|

||||

# Validate the model

|

||||

python segment/val.py --weights yolov5s-seg.pt --data coco.yaml --img 640

|

||||

```
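
Mask mAP is scored like box mAP, except that the overlap is measured between pixel masks rather than rectangles. A minimal sketch of mask IoU follows, assuming binary masks stored as equally sized nested lists of 0/1 values; `mask_iou` is a hypothetical helper for illustration and is not taken from `segment/val.py`.

```python
def mask_iou(mask_a, mask_b):
    """IoU of two binary masks of identical shape (nested lists of 0/1)."""
    inter = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for pa, pb in zip(row_a, row_b):
            inter += 1 if (pa and pb) else 0  # pixel set in both masks
            union += 1 if (pa or pb) else 0   # pixel set in at least one mask
    return inter / union if union else 0.0


if __name__ == "__main__":
    predicted = [[1, 1, 0],
                 [0, 0, 0]]
    truth = [[0, 1, 1],
             [0, 0, 0]]
    print(mask_iou(predicted, truth))
```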

|

||||

|

||||

### Predict

|

||||

|

||||

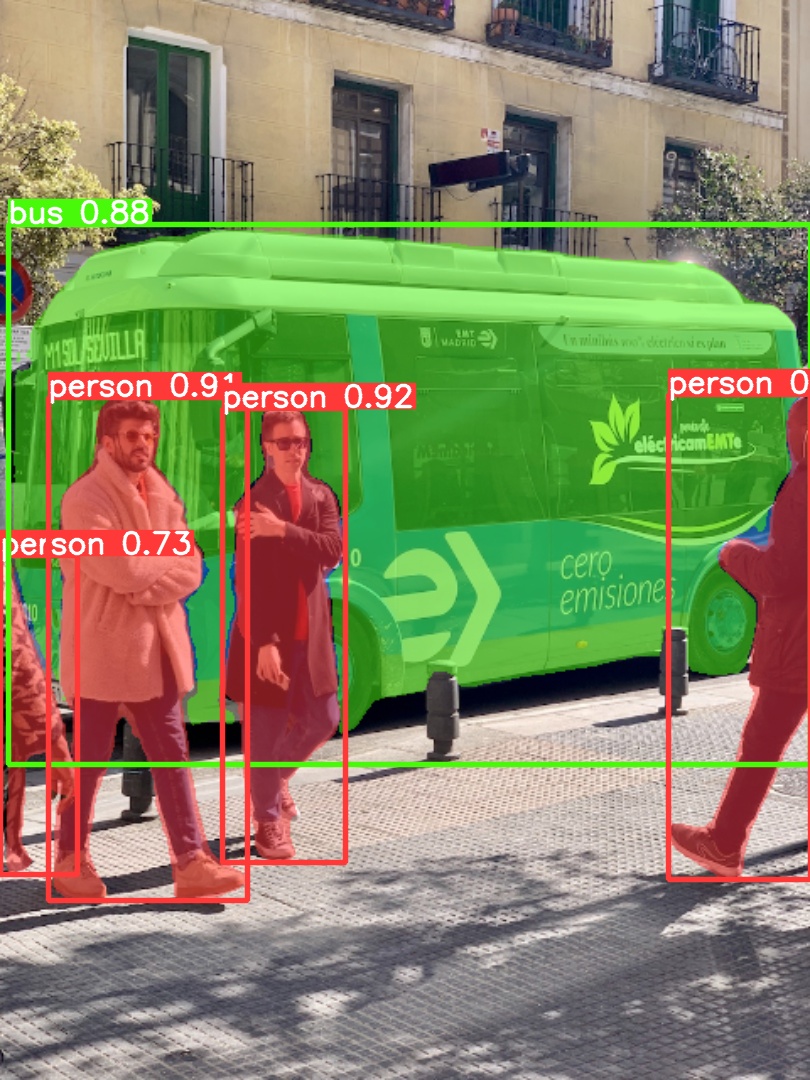

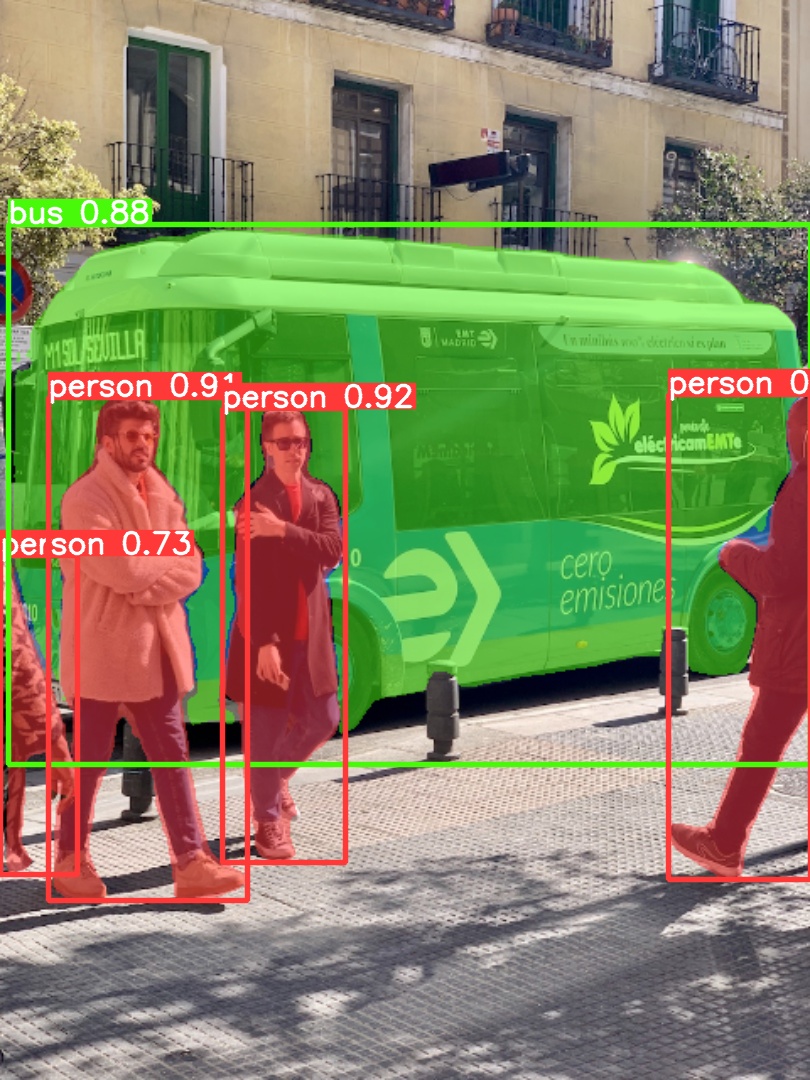

Use pretrained YOLOv5m-seg.pt to predict bus.jpg:

|

||||

Use the pretrained YOLOv5m-seg.pt model to perform segmentation on `bus.jpg`:

|

||||

|

||||

```bash

|

||||

# Run prediction

|

||||

python segment/predict.py --weights yolov5m-seg.pt --source data/images/bus.jpg

|

||||

```

|

||||

|

||||

```python

|

||||

model = torch.hub.load(

|

||||

"ultralytics/yolov5", "custom", "yolov5m-seg.pt"

|

||||

) # load from PyTorch Hub (WARNING: inference not yet supported)

|

||||

# Load model from PyTorch Hub (Note: Inference support might vary)

|

||||

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5m-seg.pt")

|

||||

```

|

||||

|

||||

|  |  |

|

||||

| ---------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |

|

||||

| :-----------------------------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------------: |

|

||||

|

||||

### Export

|

||||

|

||||

Export YOLOv5s-seg model to ONNX and TensorRT:

|

||||

Export the YOLOv5s-seg model to ONNX and TensorRT formats:

|

||||

|

||||

```bash

|

||||

# Export model

|

||||

python export.py --weights yolov5s-seg.pt --include onnx engine --img 640 --device 0

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

## <div align="center">Classification</div>

|

||||

## 🏷️ Classification

|

||||

|

||||

YOLOv5 [release v6.2](https://github.com/ultralytics/yolov5/releases) brings support for classification model training, validation and deployment! See full details in our [Release Notes](https://github.com/ultralytics/yolov5/releases/v6.2) and visit our [YOLOv5 Classification Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/classify/tutorial.ipynb) for quickstart tutorials.

|

||||

YOLOv5 [release v6.2](https://github.com/ultralytics/yolov5/releases/v6.2) introduced support for [image classification](https://docs.ultralytics.com/tasks/classify/) model training, validation, and deployment. Check the [Release Notes](https://github.com/ultralytics/yolov5/releases/v6.2) for details and the [YOLOv5 Classification Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/classify/tutorial.ipynb) for quickstart guides.

|

||||

|

||||

<details>

|

||||

<summary>Classification Checkpoints</summary>

|

||||

|

||||

<br>

|

||||

|

||||

We trained YOLOv5-cls classification models on ImageNet for 90 epochs using a 4xA100 instance, and we trained ResNet and EfficientNet models alongside with the same default training settings to compare. We exported all models to ONNX FP32 for CPU speed tests and to TensorRT FP16 for GPU speed tests. We ran all speed tests on Google [Colab Pro](https://colab.research.google.com/signup) for easy reproducibility.

|

||||

YOLOv5-cls classification models were trained on [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/) for 90 epochs using a 4xA100 instance. [ResNet](https://arxiv.org/abs/1512.03385) and [EfficientNet](https://arxiv.org/abs/1905.11946) models were trained alongside under identical settings for comparison. Models were exported to [ONNX](https://onnx.ai/) FP32 (CPU speed tests) and [TensorRT](https://developer.nvidia.com/tensorrt) FP16 (GPU speed tests). All speed tests were run on Google [Colab Pro](https://colab.research.google.com/signup) for reproducibility.

|

||||

|

||||

| Model | size<br><sup>(pixels) | acc<br><sup>top1 | acc<br><sup>top5 | Training<br><sup>90 epochs<br>4xA100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TensorRT V100<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@224 (B) |

|

||||

| Model | Size<br><sup>(pixels) | Acc<br><sup>top1 | Acc<br><sup>top5 | Training<br><sup>90 epochs<br>4xA100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TensorRT V100<br>(ms) | Params<br><sup>(M) | FLOPs<br><sup>@224 (B) |

|

||||

| -------------------------------------------------------------------------------------------------- | --------------------- | ---------------- | ---------------- | -------------------------------------------- | ------------------------------ | ----------------------------------- | ------------------ | ---------------------- |

|

||||

| [YOLOv5n-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-cls.pt) | 224 | 64.6 | 85.4 | 7:59 | **3.3** | **0.5** | **2.5** | **0.5** |

|

||||

| [YOLOv5s-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-cls.pt) | 224 | 71.5 | 90.2 | 8:09 | 6.6 | 0.6 | 5.4 | 1.4 |

|

||||

|

|

@ -352,10 +394,10 @@ We trained YOLOv5-cls classification models on ImageNet for 90 epochs using a 4x

|

|||

<details>

|

||||

<summary>Table Notes (click to expand)</summary>

|

||||

|

||||

- All checkpoints are trained to 90 epochs with SGD optimizer with `lr0=0.001` and `weight_decay=5e-5` at image size 224 and all default settings.<br>Runs logged to https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2

|

||||

- **Accuracy** values are for single-model single-scale on [ImageNet-1k](https://www.image-net.org/index.php) dataset.<br>Reproduce by `python classify/val.py --data ../datasets/imagenet --img 224`

|

||||

- **Speed** averaged over 100 inference images using a Google [Colab Pro](https://colab.research.google.com/signup) V100 High-RAM instance.<br>Reproduce by `python classify/val.py --data ../datasets/imagenet --img 224 --batch 1`

|

||||

- **Export** to ONNX at FP32 and TensorRT at FP16 done with `export.py`. <br>Reproduce by `python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224`

|

||||

- All checkpoints were trained for 90 epochs using the SGD optimizer with `lr0=0.001` and `weight_decay=5e-5` at an image size of 224 pixels, using default settings.<br>Training runs are logged at [https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2](https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2).

|

||||

- **Accuracy** values (top-1 and top-5) represent single-model, single-scale performance on the [ImageNet-1k dataset](https://docs.ultralytics.com/datasets/classify/imagenet/).<br>Reproduce using: `python classify/val.py --data ../datasets/imagenet --img 224`

|

||||

- **Speed** metrics are averaged over 100 inference images using a Google [Colab Pro V100 High-RAM instance](https://colab.research.google.com/signup).<br>Reproduce using: `python classify/val.py --data ../datasets/imagenet --img 224 --batch 1`

|

||||

- **Export** to ONNX (FP32) and TensorRT (FP16) was performed using `export.py`.<br>Reproduce using: `python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224`
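
The **Accuracy** note refers to top-1 and top-5 accuracy. As a small illustration of how these metrics are defined, the sketch below computes top-k accuracy from per-class scores. `topk_accuracy` is a hypothetical helper, not a function from `classify/val.py`, and the scores and labels are made-up toy values.

```python
def topk_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    correct = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the top k
        topk = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        correct += label in topk
    return correct / len(labels)


if __name__ == "__main__":
    # Three samples, three classes (toy values)
    scores = [[0.1, 0.7, 0.2],
              [0.5, 0.3, 0.2],
              [0.2, 0.3, 0.5]]
    labels = [1, 1, 0]
    print(topk_accuracy(scores, labels, k=1))
    print(topk_accuracy(scores, labels, k=2))
```

Top-5 accuracy is always at least as high as top-1, since a prediction that is correct at k=1 is also counted at k=5.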

|

||||

|

||||

</details>

|

||||

</details>

|

||||

|

|

@ -365,106 +407,107 @@ We trained YOLOv5-cls classification models on ImageNet for 90 epochs using a 4x

|

|||

|

||||

### Train

|

||||

|

||||

YOLOv5 classification training supports auto-download of MNIST, Fashion-MNIST, CIFAR10, CIFAR100, Imagenette, Imagewoof, and ImageNet datasets with the `--data` argument. To start training on MNIST for example use `--data mnist`.

|

||||

YOLOv5 classification training supports automatic download for datasets like [MNIST](https://docs.ultralytics.com/datasets/classify/mnist/), [Fashion-MNIST](https://docs.ultralytics.com/datasets/classify/fashion-mnist/), [CIFAR10](https://docs.ultralytics.com/datasets/classify/cifar10/), [CIFAR100](https://docs.ultralytics.com/datasets/classify/cifar100/), [Imagenette](https://docs.ultralytics.com/datasets/classify/imagenette/), [Imagewoof](https://docs.ultralytics.com/datasets/classify/imagewoof/), and [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/) using the `--data` argument. For example, start training on MNIST with `--data mnist`.

|

||||

|

||||

```bash

|

||||

# Single-GPU

|

||||

# Train on a single GPU using CIFAR-100 dataset

|

||||

python classify/train.py --model yolov5s-cls.pt --data cifar100 --epochs 5 --img 224 --batch 128

|

||||

|

||||

# Multi-GPU DDP

|

||||

# Train using Multi-GPU DDP on ImageNet dataset

|

||||

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3

|

||||

```

|

||||

|

||||

### Val

|

||||

|

||||

Validate YOLOv5m-cls accuracy on ImageNet-1k dataset:

|

||||

Validate the accuracy of the YOLOv5m-cls model on the ImageNet-1k validation dataset:

|

||||

|

||||

```bash

|

||||

bash data/scripts/get_imagenet.sh --val # download ImageNet val split (6.3G, 50000 images)

|

||||

python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224 # validate

|

||||

# Download ImageNet validation split (6.3GB, 50,000 images)

|

||||

bash data/scripts/get_imagenet.sh --val

|

||||

|

||||

# Validate the model

|

||||

python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224

|

||||

```

|

||||

|

||||

### Predict

|

||||

|

||||

Use pretrained YOLOv5s-cls.pt to predict bus.jpg:

|

||||

Use the pretrained YOLOv5s-cls.pt model to classify the image `bus.jpg`:

|

||||

|

||||

```bash

|

||||

# Run prediction

|

||||

python classify/predict.py --weights yolov5s-cls.pt --source data/images/bus.jpg

|

||||

```

|

||||

|

||||

```python

|

||||

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s-cls.pt") # load from PyTorch Hub

|

||||

# Load model from PyTorch Hub

|

||||

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s-cls.pt")

|

||||

```

|

||||

|

||||

### Export

|

||||

|

||||

Export a group of trained YOLOv5s-cls, ResNet and EfficientNet models to ONNX and TensorRT:

|

||||

Export trained YOLOv5s-cls, ResNet50, and EfficientNet_b0 models to ONNX and TensorRT formats:

|

||||

|

||||

```bash

|

||||

# Export models

|

||||

python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --include onnx engine --img 224

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

## <div align="center">Environments</div>

|

||||

## ☁️ Environments

|

||||

|

||||

Get started in seconds with our verified environments. Click each icon below for details.

|

||||

Get started quickly with our pre-configured environments. Click the icons below for setup details.

|

||||

|

||||

<div align="center">

|

||||

<a href="https://bit.ly/yolov5-paperspace-notebook">

|

||||

<a href="https://bit.ly/yolov5-paperspace-notebook" title="Run on Paperspace Gradient">

|

||||

<img src="https://github.com/ultralytics/assets/releases/download/v0.0.0/logo-gradient.png" width="10%" /></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

||||

<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb">

|

||||

<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb" title="Open in Google Colab">

|

||||

<img src="https://github.com/ultralytics/assets/releases/download/v0.0.0/logo-colab-small.png" width="10%" /></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

||||

<a href="https://www.kaggle.com/models/ultralytics/yolov5">

|

||||

<a href="https://www.kaggle.com/models/ultralytics/yolov5" title="Open in Kaggle">

|

||||

<img src="https://github.com/ultralytics/assets/releases/download/v0.0.0/logo-kaggle-small.png" width="10%" /></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

||||

<a href="https://hub.docker.com/r/ultralytics/yolov5">

|

||||

<a href="https://hub.docker.com/r/ultralytics/yolov5" title="Pull Docker Image">

|

||||

<img src="https://github.com/ultralytics/assets/releases/download/v0.0.0/logo-docker-small.png" width="10%" /></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

||||

<a href="https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial/">

|

||||

<a href="https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial/" title="AWS Quickstart Guide">

|

||||

<img src="https://github.com/ultralytics/assets/releases/download/v0.0.0/logo-aws-small.png" width="10%" /></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

||||

<a href="https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial/">

|

||||

<a href="https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial/" title="GCP Quickstart Guide">

|

||||

<img src="https://github.com/ultralytics/assets/releases/download/v0.0.0/logo-gcp-small.png" width="10%" /></a>

|

||||

</div>

|

||||

|

||||

## <div align="center">Contribute</div>

|

||||

## 🤝 Contribute

|

||||

|

||||

We love your input! We want to make contributing to YOLOv5 as easy and transparent as possible. Please see our [Contributing Guide](https://docs.ultralytics.com/help/contributing/) to get started, and fill out the [YOLOv5 Survey](https://www.ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey) to send us feedback on your experiences. Thank you to all our contributors!

|

||||

We welcome your contributions! Making YOLOv5 accessible and effective is a community effort. Please see our [Contributing Guide](https://docs.ultralytics.com/help/contributing/) to get started. Share your feedback through the [YOLOv5 Survey](https://www.ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey). Thank you to all our contributors for making YOLOv5 better!

|

||||

|

||||

<!-- SVG image from https://opencollective.com/ultralytics/contributors.svg?width=990 -->

|

||||

[](https://github.com/ultralytics/yolov5/graphs/contributors)

|

||||

|

||||

<a href="https://github.com/ultralytics/yolov5/graphs/contributors">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/im/image-contributors.png" /></a>

|

||||

## 📜 License

|

||||

|

||||

## <div align="center">License</div>

|

||||

Ultralytics provides two licensing options to meet different needs:

|

||||

|

||||

Ultralytics offers two licensing options to accommodate diverse use cases:

|

||||

- **AGPL-3.0 License**: An [OSI-approved](https://opensource.org/license/agpl-v3) open-source license ideal for academic research, personal projects, and testing. It promotes open collaboration and knowledge sharing. See the [LICENSE](https://github.com/ultralytics/yolov5/blob/master/LICENSE) file for details.

|

||||

- **Enterprise License**: Tailored for commercial applications, this license allows seamless integration of Ultralytics software and AI models into commercial products and services, bypassing the open-source requirements of AGPL-3.0. For commercial use cases, please contact us via [Ultralytics Licensing](https://www.ultralytics.com/license).

|

||||

|

||||

- **AGPL-3.0 License**: This [OSI-approved](https://opensource.org/license) open-source license is ideal for students and enthusiasts, promoting open collaboration and knowledge sharing. See the [LICENSE](https://github.com/ultralytics/yolov5/blob/master/LICENSE) file for more details.

|

||||

- **Enterprise License**: Designed for commercial use, this license permits seamless integration of Ultralytics software and AI models into commercial goods and services, bypassing the open-source requirements of AGPL-3.0. If your scenario involves embedding our solutions into a commercial offering, reach out through [Ultralytics Licensing](https://www.ultralytics.com/license).

|

||||

## 📧 Contact

|

||||

|

||||

## <div align="center">Contact</div>

|

||||

|

||||

For YOLOv5 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/yolov5/issues), and join our [Discord](https://discord.com/invite/ultralytics) community for questions and discussions!

|

||||

For bug reports and feature requests related to YOLOv5, please visit [GitHub Issues](https://github.com/ultralytics/yolov5/issues). For general questions, discussions, and community support, join our [Discord server](https://discord.com/invite/ultralytics)!

|

||||

|

||||

<br>

|

||||

<div align="center">

|

||||

<a href="https://github.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="3%" alt="Ultralytics GitHub"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="space">

|

||||

<a href="https://www.linkedin.com/company/ultralytics/"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="3%" alt="Ultralytics LinkedIn"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="space">

|

||||

<a href="https://twitter.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="3%" alt="Ultralytics Twitter"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="space">

|

||||

<a href="https://youtube.com/ultralytics?sub_confirmation=1"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="3%" alt="Ultralytics YouTube"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="space">

|

||||

<a href="https://www.tiktok.com/@ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="3%" alt="Ultralytics TikTok"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="space">

|

||||

<a href="https://ultralytics.com/bilibili"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-bilibili.png" width="3%" alt="Ultralytics BiliBili"></a>

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

|

||||

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="space">

|

||||

<a href="https://discord.com/invite/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-discord.png" width="3%" alt="Ultralytics Discord"></a>

|

||||

</div>

|

||||

|

||||

[tta]: https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation

|

||||

|

|

|

|||

443

README.zh-CN.md

|

|

@ -7,95 +7,103 @@

|

|||