
Using Ultralytics YOLO With Comet

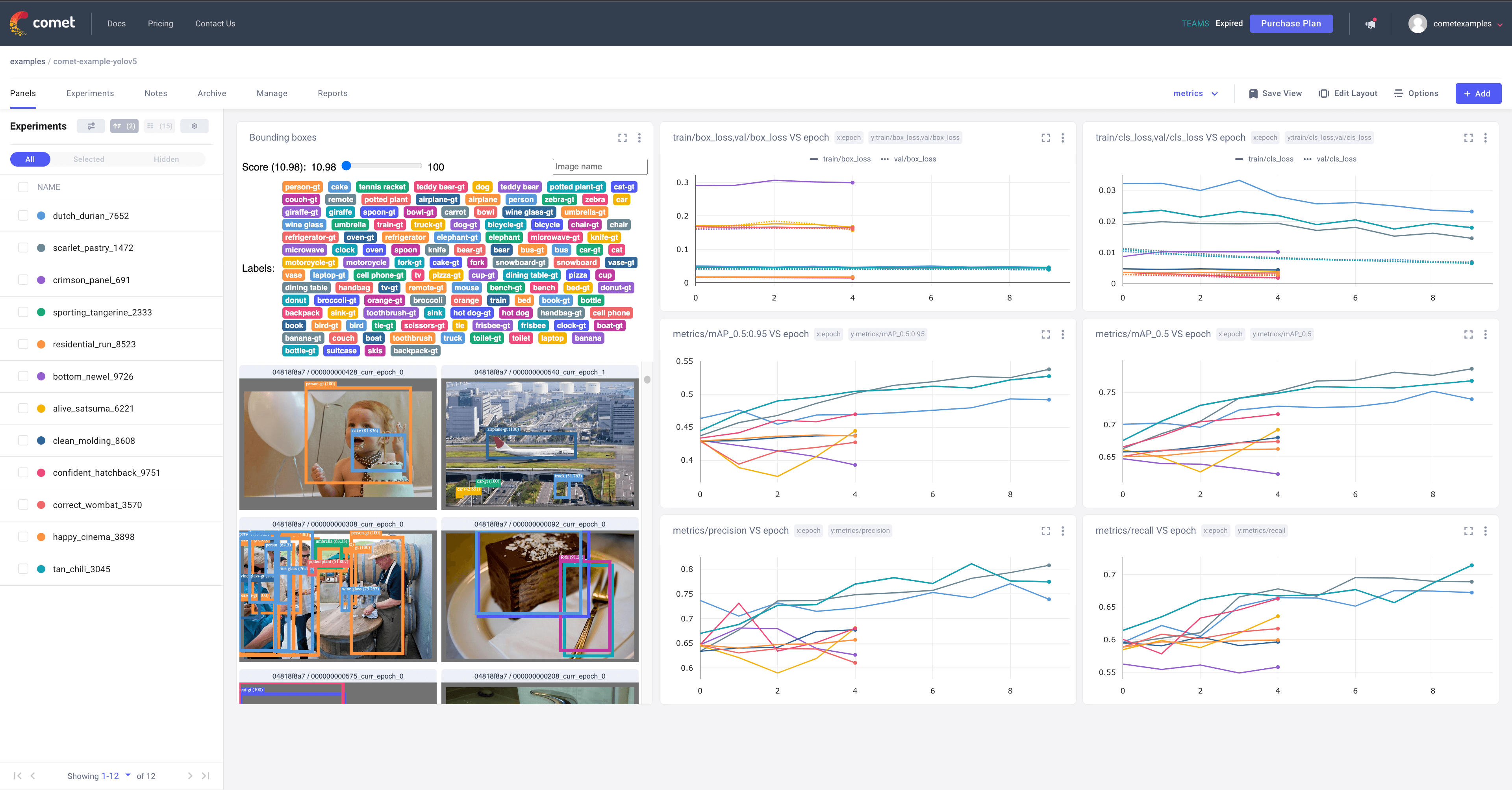

Welcome to the guide for integrating Ultralytics YOLO with Comet! Comet offers robust experiment tracking, model management, and visualization tools to enhance your machine learning workflow. This guide explains how to leverage Comet for monitoring training, logging results, managing datasets, and optimizing hyperparameters for your YOLO models.

🧪 About Comet

Comet provides tools for data scientists, engineers, and teams to accelerate and optimize deep learning and machine learning models.

With Comet, you can track and visualize model metrics in real time, save hyperparameters, datasets, and model checkpoints, and visualize predictions using Custom Panels. Comet ensures you never lose track of your work and makes sharing results and collaborating across teams seamless. For more details, see the Comet Documentation.

🚀 Getting Started

Follow these steps to set up Comet for your YOLO projects.

Install Comet

Install the comet_ml Python package using pip:

pip install comet_ml
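To confirm that the package can be imported from the Python environment you will train in, you can run a quick check with the standard library. This is a minimal sketch, and `is_installed` is a hypothetical helper name.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if the top-level `package` can be imported in this environment."""
    # find_spec returns None when no module with that name can be located
    return importlib.util.find_spec(package) is not None


print("comet_ml installed:", is_installed("comet_ml"))
```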

Configure Comet Credentials

You can configure Comet in two ways:

- Environment Variables:

  Set your credentials directly in your environment:

  export COMET_API_KEY=YOUR_COMET_API_KEY
  export COMET_PROJECT_NAME=YOUR_COMET_PROJECT_NAME # Defaults to 'yolov5' if not set

  Find your API key in your Comet Account Settings.

- Configuration File:

  Create a .comet.config file in your working directory:

  [comet]
  api_key=YOUR_COMET_API_KEY
  project_name=YOUR_COMET_PROJECT_NAME # Defaults to 'yolov5' if not set
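You may prefer to generate the configuration file from Python, for example in a notebook. The standard-library `configparser` module can write the same `[comet]` section. This is a sketch: `render_comet_config` and `write_comet_config` are hypothetical helper names, and the `'yolov5'` fallback simply mirrors the default mentioned above.

```python
import configparser
import io
from pathlib import Path


def render_comet_config(api_key: str, project_name: str = "yolov5") -> str:
    """Return the text of a minimal .comet.config containing a [comet] section."""
    config = configparser.ConfigParser()
    config["comet"] = {"api_key": api_key, "project_name": project_name}
    buffer = io.StringIO()
    config.write(buffer)  # writes "key = value" lines under the section header
    return buffer.getvalue()


def write_comet_config(directory: str, api_key: str, project_name: str = "yolov5") -> Path:
    """Write .comet.config into `directory` (e.g. your working directory) and return its path."""
    path = Path(directory) / ".comet.config"
    path.write_text(render_comet_config(api_key, project_name))
    return path
```

For example, `write_comet_config(".", "YOUR_COMET_API_KEY", "YOUR_COMET_PROJECT_NAME")` would create the file in the current directory.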

Run the Training Script

Run the YOLO training script. Comet will automatically log your run.

# Train YOLO on COCO128 for 5 epochs

python train.py --img 640 --batch 16 --epochs 5 --data coco128.yaml --weights yolov5s.pt

Comet automatically logs hyperparameters, command-line arguments, and training/validation metrics. Visualize and analyze your runs in the Comet UI. For more details, see the Ultralytics training documentation.
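When you launch runs from Python, for example from a scheduling script, you can assemble the same command as an argument list. This is a minimal sketch that assumes it runs from the directory containing train.py. `build_train_command` is a hypothetical helper and is not part of the repository.

```python
import sys
from typing import List, Sequence


def build_train_command(
    img: int = 640,
    batch: int = 16,
    epochs: int = 5,
    data: str = "coco128.yaml",
    weights: str = "yolov5s.pt",
    extra: Sequence[str] = (),
) -> List[str]:
    """Assemble the train.py argument list used throughout this guide."""
    cmd = [
        sys.executable, "train.py",  # current interpreter, so the environment with comet_ml is reused
        "--img", str(img),
        "--batch", str(batch),
        "--epochs", str(epochs),
        "--data", data,
        "--weights", weights,
    ]
    # Optional extra flags, e.g. ["--save-period", "1"] or ["--bbox_interval", "2"]
    return cmd + list(extra)
```

For example, running `subprocess.run(build_train_command(), check=True)` from the directory containing train.py would launch the same 5-epoch run as the command above.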

✨ Try an Example!

Explore a completed YOLO training run tracked with Comet, or run the example yourself using the accompanying Google Colab notebook.

📊 Automatic Logging

Comet automatically logs the following information by default:

Metrics

- Losses: Box Loss, Object Loss, Classification Loss (Training and Validation)

- Performance: mAP@0.5, mAP@0.5:0.95 (Validation). Learn more in the YOLO Performance Metrics guide.

- Precision and Recall: Validation data metrics
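mAP@0.5 counts a prediction as correct when its Intersection over Union (IoU) with a ground-truth box is at least 0.5. mAP@0.5:0.95 averages mAP over the ten IoU thresholds 0.50, 0.55, …, 0.95, following the COCO convention. The sketch below shows those two pieces. It is illustrative only and is not the YOLO validation code, which also handles confidence ranking and per-class precision-recall curves.

```python
from typing import Dict, Sequence


def iou(box_a: Sequence[float], box_b: Sequence[float]) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO-style IoU thresholds: 0.50, 0.55, ..., 0.95 (10 values)
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(map_per_threshold: Dict[float, float]) -> float:
    """Average the mAP values measured at each threshold in IOU_THRESHOLDS."""
    return sum(map_per_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```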

Parameters

- Model Hyperparameters: Configuration used for the model

- Command Line Arguments: All arguments passed via the CLI

Visualizations

- Confusion Matrix: Predicted versus true classes on validation data

- Curves: PR and F1 curves across all classes

- Label Correlogram: Correlation visualization of class labels

⚙️ Advanced Configuration

Customize Comet's logging behavior using command-line flags or environment variables.

# Environment Variables for Comet Configuration
export COMET_MODE=online # 'online' or 'offline'. Default: online
export COMET_MODEL_NAME=YOUR_MODEL_NAME # Name for the saved model. Default: yolov5
export COMET_LOG_CONFUSION_MATRIX=false # Set to false to disable confusion matrix logging. Default: true
export COMET_MAX_IMAGE_UPLOADS=NUMBER # Max prediction images to log. Default: 100
export COMET_LOG_PER_CLASS_METRICS=true # Log metrics per class. Default: false
export COMET_DEFAULT_CHECKPOINT_FILENAME=checkpoint_file.pt # Checkpoint file to use when resuming. Default: 'last.pt'
export COMET_LOG_BATCH_LEVEL_METRICS=true # Log training metrics per batch. Default: false
export COMET_LOG_PREDICTIONS=true # Set to false to disable prediction logging. Default: true

For more configuration options, see the Comet documentation.
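To check which values a run will pick up before you launch it, a small helper can read these variables and fall back to the defaults listed above. This sketch is for inspection only. `comet_settings` is a hypothetical name, and the truthy-string parsing is an assumption rather than the integration's own parsing logic.

```python
import os
from typing import Any, Dict, Mapping


def _as_bool(value: str) -> bool:
    """Treat "true", "1" and "yes" (case-insensitive) as True; anything else as False."""
    return value.strip().lower() in {"true", "1", "yes"}


def comet_settings(env: Mapping[str, str] = os.environ) -> Dict[str, Any]:
    """Collect the Comet variables listed above, applying the documented defaults."""
    return {
        "mode": env.get("COMET_MODE", "online"),
        "model_name": env.get("COMET_MODEL_NAME", "yolov5"),
        "log_confusion_matrix": _as_bool(env.get("COMET_LOG_CONFUSION_MATRIX", "true")),
        "max_image_uploads": int(env.get("COMET_MAX_IMAGE_UPLOADS", "100")),
        "log_per_class_metrics": _as_bool(env.get("COMET_LOG_PER_CLASS_METRICS", "false")),
        "checkpoint_filename": env.get("COMET_DEFAULT_CHECKPOINT_FILENAME", "last.pt"),
        "log_batch_level_metrics": _as_bool(env.get("COMET_LOG_BATCH_LEVEL_METRICS", "false")),
        "log_predictions": _as_bool(env.get("COMET_LOG_PREDICTIONS", "true")),
    }
```

Calling `comet_settings()` with no arguments reads the current process environment. Passing a plain dict makes it easy to preview a configuration without exporting anything.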

Logging Checkpoints With Comet

Model checkpoint logging to Comet is disabled by default. Enable it using the --save-period argument during training to save checkpoints at the specified epoch interval.

python train.py \
  --img 640 \
  --batch 16 \
  --epochs 5 \
  --data coco128.yaml \
  --weights yolov5s.pt \
  --save-period 1 # Save checkpoint every epoch

Checkpoints will appear in the "Assets & Artifacts" tab of your Comet experiment. Learn more about model management in the Comet Model Registry documentation.
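To estimate how many checkpoints a run will upload, you can enumerate the epochs that hit the interval. This sketch assumes a checkpoint is saved whenever the 1-based epoch number is a multiple of the --save-period value, and that a non-positive value disables saving. The exact boundary handling in the training script may differ, and `checkpoint_epochs` is a hypothetical helper.

```python
from typing import List


def checkpoint_epochs(total_epochs: int, save_period: int) -> List[int]:
    """1-based epochs at which a checkpoint would be saved (assumed schedule)."""
    if save_period <= 0:
        return []  # assumption: non-positive period means checkpoint logging is off
    return [e for e in range(1, total_epochs + 1) if e % save_period == 0]
```

Under this assumption, `--epochs 5 --save-period 1` yields five checkpoints, while `--save-period 2` yields checkpoints after epochs 2 and 4.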

Logging Model Predictions

By default, model predictions (images, ground truth labels, bounding boxes) for the validation set are logged. Control the logging frequency using the --bbox_interval argument, which specifies logging every Nth batch per epoch.

Note: The YOLO validation dataloader defaults to a batch size of 32. Adjust --bbox_interval as needed.

Visualize predictions using Comet's Object Detection Custom Panel. See an example project using the Panel.

python train.py \
  --img 640 \
  --batch 16 \
  --epochs 5 \
  --data coco128.yaml \
  --weights yolov5s.pt \
  --bbox_interval 2 # Log predictions every 2nd validation batch per epoch
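Because --bbox_interval counts validation batches, the number of logged batches depends on the validation set size and the batch size of 32 noted above. This sketch assumes a batch is logged when its 1-based index is a multiple of the interval. `logged_batches` is a hypothetical helper, not part of the integration.

```python
import math
from typing import List


def logged_batches(num_val_images: int, bbox_interval: int, val_batch_size: int = 32) -> List[int]:
    """1-based indices of validation batches whose predictions are logged in one epoch.

    Assumption: a batch is logged when its 1-based index is a multiple of bbox_interval.
    """
    if bbox_interval <= 0 or val_batch_size <= 0:
        raise ValueError("bbox_interval and val_batch_size must be positive")
    num_batches = math.ceil(num_val_images / val_batch_size)  # last batch may be partial
    return [b for b in range(1, num_batches + 1) if b % bbox_interval == 0]
```

With 128 validation images there are four batches of 32. Under this assumption, `--bbox_interval 2` would log batches 2 and 4. An interval larger than the number of batches would log nothing.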

Controlling the Number of Prediction Images

Adjust the maximum number of validation images logged using the COMET_MAX_IMAGE_UPLOADS environment variable.

env COMET_MAX_IMAGE_UPLOADS=200 python train.py \
  --img 640 \
  --batch 16 \
  --epochs 5 \
  --data coco128.yaml \
  --weights yolov5s.pt \
  --bbox_interval 1 # Log every batch
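To judge whether COMET_MAX_IMAGE_UPLOADS will be the limiting factor, you can compute a rough upper bound. This sketch makes two assumptions: every image in a logged batch is uploaded, and the cap applies to the whole run rather than per epoch. `estimated_image_uploads` is a hypothetical helper.

```python
def estimated_image_uploads(
    epochs: int,
    logged_batches_per_epoch: int,
    val_batch_size: int = 32,
    max_uploads: int = 100,
) -> int:
    """Upper-bound estimate of prediction images uploaded during a run.

    Assumptions: all images in a logged batch are uploaded, and max_uploads
    (COMET_MAX_IMAGE_UPLOADS) caps the total for the whole run.
    """
    requested = epochs * logged_batches_per_epoch * val_batch_size
    return min(requested, max_uploads)
```

Take 5 epochs with all four batches of a 128-image validation set logged (`--bbox_interval 1`). The run would request up to 640 images, so under these assumptions the limit of 200 set above would be reached.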

Logging Class Level Metrics

Enable logging of mAP, precision, recall, and F1-score for each class using the COMET_LOG_PER_CLASS_METRICS environment variable.

env COMET_LOG_PER_CLASS_METRICS=true python train.py \
  --img 640 \
  --batch 16 \
  --epochs 5 \
  --data coco128.yaml \
  --weights yolov5s.pt
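Of the per-class metrics, F1 is derived from the other two: it is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below computes it per class from hypothetical precision/recall pairs to show how the values relate. It does not read anything from Comet.

```python
from typing import Dict, Tuple


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def per_class_f1(metrics: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Map each class name to its F1 score, given {"class": (precision, recall)}."""
    return {name: f1_score(p, r) for name, (p, r) in metrics.items()}
```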

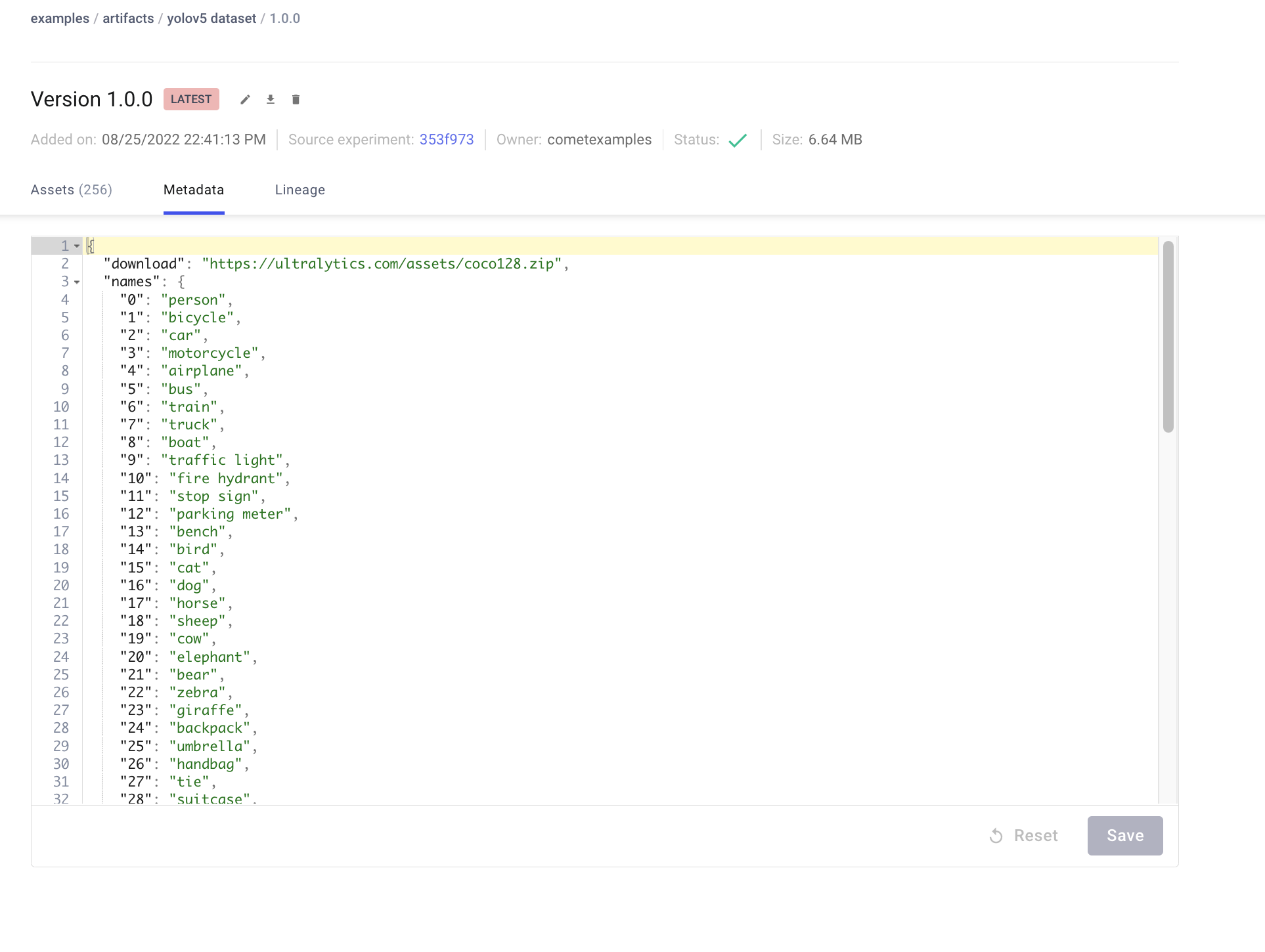

💾 Dataset Management With Comet Artifacts

Use Comet Artifacts to version, store, and manage your datasets.

Uploading a Dataset

Upload your dataset using the --upload_dataset flag. Ensure your dataset follows the structure described in the Ultralytics Datasets documentation and that your dataset config YAML file matches the format of coco128.yaml (see the COCO128 dataset docs).
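Before running with --upload_dataset, a quick pre-flight check can flag a dataset config that is missing top-level fields. This sketch assumes the required keys are path, train, val, and names, following the coco128.yaml layout; the uploader's exact requirements may differ. `missing_dataset_keys` is a hypothetical helper. In practice the dict would come from loading your YAML file, for example with PyYAML's `yaml.safe_load`.

```python
from typing import Any, Dict, List

# Assumed from the coco128.yaml layout; adjust if your setup requires other fields
REQUIRED_KEYS = ("path", "train", "val", "names")


def missing_dataset_keys(config: Dict[str, Any]) -> List[str]:
    """Return the required top-level keys that are absent from a dataset config dict."""
    return [key for key in REQUIRED_KEYS if key not in config]
```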

python train.py \
  --img 640 \
  --batch 16 \
  --epochs 5 \
  --data coco128.yaml \
  --weights yolov5s.pt \
  --upload_dataset # Upload the dataset specified in coco128.yaml

View the uploaded dataset in the Artifacts tab of your Comet Workspace, where you can also preview the data directly in the Comet UI.

Artifacts are versioned and support metadata. Comet automatically logs metadata from your dataset YAML file.

Using a Saved Artifact

To use a dataset stored in Comet Artifacts, update the path in your dataset YAML file to the Artifact resource URL:

# contents of artifact.yaml
path: "comet://WORKSPACE_NAME/ARTIFACT_NAME:ARTIFACT_VERSION_OR_ALIAS"
train: images/train # Adjust subdirectory if needed
val: images/val # Adjust subdirectory if needed
# Other dataset configurations...

Then, pass this configuration file to your training script:

python train.py \
  --img 640 \
  --batch 16 \
  --epochs 5 \
  --data artifact.yaml \
  --weights yolov5s.pt

Artifacts track data lineage, showing which experiments used specific dataset versions.
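A malformed artifact path in artifact.yaml only surfaces when training starts, so it can help to build or validate the path programmatically. This sketch follows the `comet://WORKSPACE_NAME/ARTIFACT_NAME:ARTIFACT_VERSION_OR_ALIAS` form shown above. It assumes workspace and artifact names contain no "/" or ":", and the helper names are hypothetical.

```python
import re
from typing import NamedTuple

_ARTIFACT_URL = re.compile(
    r"^comet://(?P<workspace>[^/]+)/(?P<name>[^/:]+):(?P<version>[^/:]+)$"
)


class ArtifactRef(NamedTuple):
    workspace: str
    name: str
    version_or_alias: str


def artifact_url(workspace: str, name: str, version_or_alias: str) -> str:
    """Build the value for the `path:` field of artifact.yaml."""
    return f"comet://{workspace}/{name}:{version_or_alias}"


def parse_artifact_url(url: str) -> ArtifactRef:
    """Split a comet:// artifact path into workspace, artifact name, and version/alias."""
    match = _ARTIFACT_URL.match(url)
    if match is None:
        raise ValueError(f"not a Comet artifact path: {url!r}")
    return ArtifactRef(match["workspace"], match["name"], match["version"])
```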

🔄 Resuming Training Runs

If a training run is interrupted (for example, due to connection issues), you can resume it using the --resume flag with the Comet Run Path (comet://YOUR_WORKSPACE/YOUR_PROJECT/EXPERIMENT_ID).

This restores the model state, hyperparameters, and arguments, downloads any necessary Artifacts, and continues logging to the existing Comet Experiment. Learn more about resuming runs in the Comet documentation.

python train.py \
  --resume "comet://YOUR_WORKSPACE/YOUR_PROJECT/EXPERIMENT_ID"
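If you resume runs from a script, you can assemble the Comet Run Path from its three components and check each one. This is a minimal sketch; `resume_command` is a hypothetical helper, and the experiment ID is the one shown for the interrupted run in your Comet project.

```python
from typing import List


def resume_command(workspace: str, project: str, experiment_id: str) -> List[str]:
    """Build the arguments that resume an interrupted run from its Comet Run Path."""
    for part in (workspace, project, experiment_id):
        # Each component becomes one path segment, so it must be non-empty and contain no "/"
        if not part or "/" in part:
            raise ValueError(f"invalid run path component: {part!r}")
    run_path = f"comet://{workspace}/{project}/{experiment_id}"
    return ["python", "train.py", "--resume", run_path]
```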

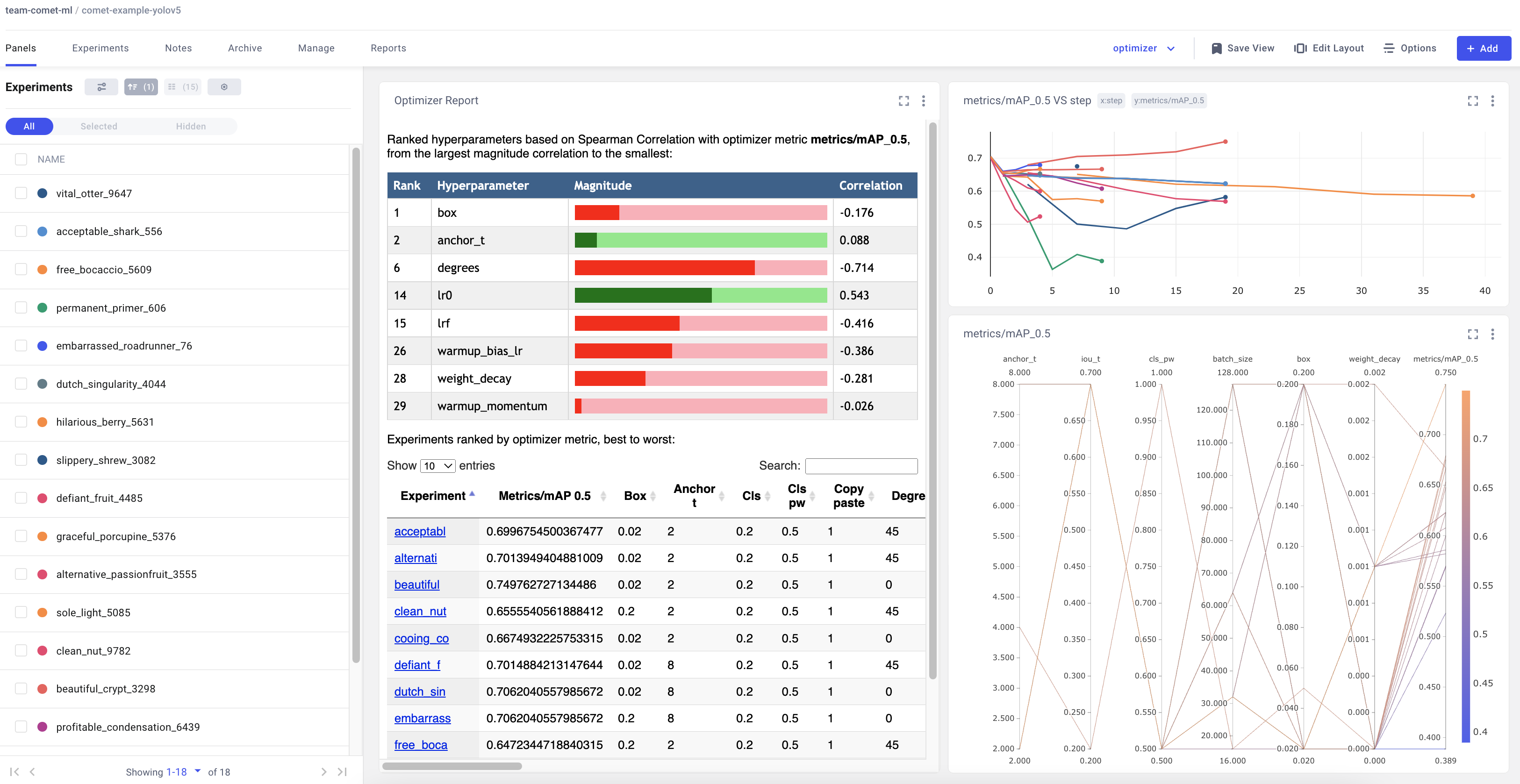

🔍 Hyperparameter Optimization (HPO)

YOLO integrates with the Comet Optimizer for easy hyperparameter sweeps and visualization. This helps you find the best set of parameters for your model, a process often referred to as Hyperparameter Tuning.

Configuring an Optimizer Sweep

Create a JSON configuration file defining the sweep parameters, search strategy, and objective metric. An example is provided at utils/loggers/comet/optimizer_config.json.
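The three parts named above map onto the `algorithm` (search strategy), `parameters` (search space), and `spec` (objective metric) fields of a Comet Optimizer config. The sketch below writes such a file from Python. The search space over `lr0` and `momentum`, the metric name `metrics/mAP_0.5`, and the `maxCombo` value are assumptions for illustration. Compare them against the provided utils/loggers/comet/optimizer_config.json, which reflects what hpo.py actually expects.

```python
import json
from typing import Any, Dict


def make_optimizer_config(metric: str = "metrics/mAP_0.5", max_combo: int = 10) -> Dict[str, Any]:
    """Build a small Comet Optimizer config: search strategy, parameter space, and objective."""
    return {
        "algorithm": "random",  # search strategy; "grid" and "bayes" are the other options
        "parameters": {
            # Hypothetical search space over two training hyperparameters
            "lr0": {"type": "float", "min": 1e-5, "max": 1e-1, "scalingType": "loguniform"},
            "momentum": {"type": "float", "min": 0.6, "max": 0.98, "scalingType": "uniform"},
        },
        "spec": {"metric": metric, "objective": "maximize", "maxCombo": max_combo},
        "trials": 1,
    }


def write_optimizer_config(path: str, config: Dict[str, Any]) -> None:
    """Serialize the config as indented JSON for use with --comet_optimizer_config."""
    with open(path, "w") as f:
        json.dump(config, f, indent=2)
```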

Run the sweep using the hpo.py script:

python utils/loggers/comet/hpo.py \
  --comet_optimizer_config "utils/loggers/comet/optimizer_config.json"

The hpo.py script accepts the same arguments as train.py. Pass additional fixed arguments for the sweep:

python utils/loggers/comet/hpo.py \
  --comet_optimizer_config "utils/loggers/comet/optimizer_config.json" \
  --save-period 1 \
  --bbox_interval 1

Running a Sweep in Parallel

Execute multiple sweep trials concurrently using the comet optimizer command:

comet optimizer -j NUM_WORKERS \
  utils/loggers/comet/hpo.py utils/loggers/comet/optimizer_config.json

Replace NUM_WORKERS with the desired number of parallel processes.

Visualizing HPO Results

Comet offers various visualizations for analyzing sweep results, such as parallel coordinate plots and parameter importance plots. Explore a project with a completed sweep.

🤝 Contributing

Contributions to enhance the YOLO-Comet integration are welcome! Please see the Ultralytics Contributing Guide for more information on how to get involved. Thank you for helping improve this integration!